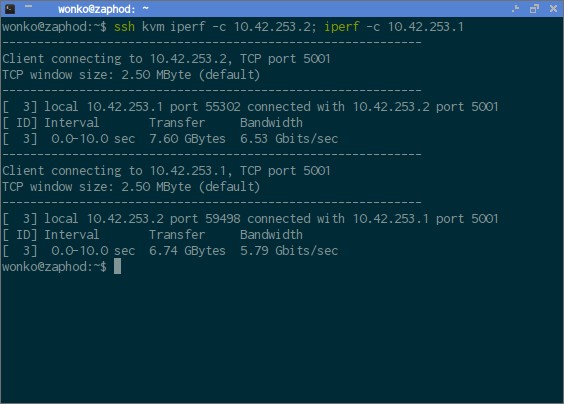

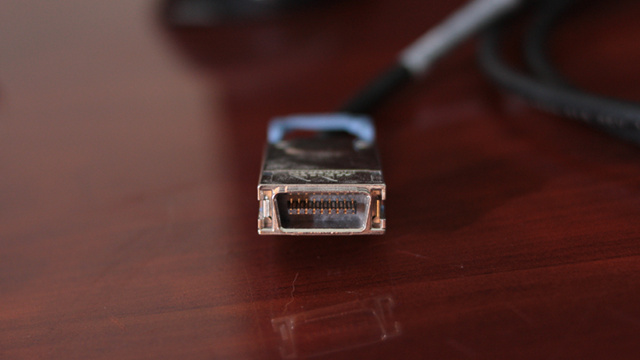

Two years ago, I added InfiniBand cards to two machines on my home network, and it was a very inexpensive upgrade. My raw network throughput between these two machines has increased from 1 gigabit per second to around 6.5 gigabits per second, and the two cards and one cable cost less than $60.

I’m not seeing the full 20-gigabit speeds these cards are rated for, because the only free slots in these two computers are 4x PCIe, and these older, inexpensive cards run at PCIe 1.0 speeds. If I had 8x PCIe slots, the throughput of these cards should roughly double, and more expensive InfiniBand cards support PCIe 2.0.
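The numbers work out if you assume these are 4x DDR cards (four lanes at 5 gigabits each, which is where the 20-gigabit rating comes from) and remember that both PCIe 1.0 and this generation of InfiniBand use 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. A quick sketch of that arithmetic:

```shell
#!/bin/sh
# Usable bandwidth of a link with 8b/10b encoding:
# lanes * per-lane signaling rate (Mbit/s) * 8 / 10.
usable_mbps() {
  echo $(( $1 * $2 * 8 / 10 ))
}

# PCIe 1.0 signals at 2500 Mbit/s (2.5 GT/s) per lane.
echo "PCIe 1.0 4x slot:  $(usable_mbps 4 2500) Mbit/s"
echo "PCIe 1.0 8x slot:  $(usable_mbps 8 2500) Mbit/s"
# Assumed 4x DDR InfiniBand: 5000 Mbit/s per lane, 20 Gbit/s signaling in total.
echo "InfiniBand 4x DDR: $(usable_mbps 4 5000) Mbit/s"
```

A 4x PCIe 1.0 slot tops out around 8 gigabits per second before PCIe packet overhead, which fits the roughly 6.5 gigabits measured here, and an 8x slot would lift that ceiling to match the InfiniBand link itself.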

I’m quite pleased with my results, though. My Samba sequential throughput tops out at around 320 megabytes per second. That ceiling comes from my low-power server and Samba’s single thread per client, so faster InfiniBand cards wouldn’t change it. I can usually double that throughput when running three or more file transfers at the same time.
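For scale, converting that Samba figure to bits (decimal megabytes, 8 bits per byte) shows how much of the link a single transfer actually uses:

```shell
#!/bin/sh
# Convert decimal megabytes per second to megabits per second.
mbytes_to_mbits() {
  echo $(( $1 * 8 ))
}

echo "single Samba stream: $(mbytes_to_mbits 320) Mbit/s"
```

That’s about 2.6 gigabits per second, well under the 6.5 the link itself manages.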

- Low-Power Ryzen Virtual Machine Host at patshead.com

- Low-Power AMD 5350 Virtual Machine Host at patshead.com

- Infiniband: An Inexpensive Performance Boost For Your Home Network at patshead.com

- Building a Cost-Conscious, Faster-Than-Gigabit Network at Brian’s Blog

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

Why am I writing this two years later?

I’m not. Most of this blog post has been sitting in a draft for two years. I’m just fact-checking and adding a few closing paragraphs today. I’ve done a bad job here.

I don’t just write these blogs for you. They’re also documentation for my future self. If my KVM machine with InfiniBand burns down in a fire, I know I could get a new machine up and running. I ran into minor issues when I got this all set up the first time, and if I don’t document this, I’ll most likely run into the same problems again!

Limitations of IP over InfiniBand

You can run IP over InfiniBand, but that isn’t what InfiniBand was designed for. It works great, but it operates at layer 3, with no Ethernet frames underneath, so you can’t bridge the InfiniBand interfaces. This isn’t a huge problem, but it does require more effort than something like 10 Gigabit Ethernet.

I ended up creating two new private subnets for my InfiniBand network—one subnet for the physical hosts to use, and another subnet on a virtual bridge for the KVM virtual machines. The routing between those subnets should have been easy, but I had trouble getting good speeds.

To get great speeds out of IP over InfiniBand, you need to set the MTU of each interface to 65520, and you can’t miss any interfaces. I had trouble setting the MTU of the bridge device. You can’t set the bridge’s MTU when there are no interfaces connected to it, and I couldn’t set the MTU of the bridge to 65520 until I connected a virtual machine and set the virtual machine’s MTU to 65520. This is fun, right?
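Since a single interface left at a smaller MTU is enough to ruin throughput, it helps to check every hop at once. This is a minimal sketch that reads each interface’s MTU from sysfs; the interface names you pass in are assumptions about your setup, and the `SYSFS_ROOT` variable is a hypothetical override that only exists so the function can be pointed at a test directory.

```shell
#!/bin/sh
# Report every listed interface whose MTU is not the IPoIB connected-mode
# maximum. SYSFS_ROOT is a testing override; it defaults to /sys.
WANT_MTU=65520

check_mtus() {
  local root="${SYSFS_ROOT:-/sys}" iface mtu bad=0
  for iface in "$@"; do
    if [ ! -r "$root/class/net/$iface/mtu" ]; then
      echo "$iface: not found"
      bad=1
      continue
    fi
    mtu=$(cat "$root/class/net/$iface/mtu")
    if [ "$mtu" -ne "$WANT_MTU" ]; then
      echo "$iface: MTU $mtu (want $WANT_MTU)"
      bad=1
    fi
  done
  return "$bad"
}
```

On the KVM host, something like `check_mtus ib0 ibbr0 vnet0` prints nothing and returns success only when every listed interface is at 65520.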

What about Ethernet over InfiniBand (EoIB)?

Ethernet over InfiniBand would solve all my problems, but it would create new difficulties.

When I set this up two years ago, every EoIB implementation was maintained outside the mainline Linux kernel. That wouldn’t have been convenient for my setup. There are only two InfiniBand devices on my network, and one of those is my desktop computer. I often run bleeding-edge kernels with scheduler patches on my desktop. Would they be compatible with the current versions of the EoIB modules? Probably not.

Some quick Google searches lead me to believe that this may no longer be the case, at least if you’re running RHEL or CentOS.

Why not skip this nonsense and use 10GbE?

I convinced my friend Brian to go the 10-Gigabit Ethernet route. By his pricing, two cards and a cable cost less than my InfiniBand setup, and he’s getting a solid 9.8 gigabits per second and Samba speeds just like mine. So if you’re only connecting two machines, 10GbE is probably the wiser choice.

InfiniBand starts to become a much better value as you connect more machines. There are 8-port InfiniBand switches on eBay for as little as $50, while 10GbE switches start at more than ten times that price!

Brian is doing well with three machines, but adding a fourth 10GbE device will be problematic for him. I’m still in search of a good excuse to add a third InfiniBand device to my network!

IPoIB between physical hosts

IP over InfiniBand was extremely easy to set up. You just have to make sure your InfiniBand network adapters are in “connected” mode, because they default to the much slower “datagram” mode.
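Connected mode can be switched on at runtime through sysfs. Here’s a minimal sketch, assuming the interface is named ib0; `SYSFS_ROOT` is a hypothetical override for testing and defaults to the real `/sys`.

```shell
#!/bin/sh
# Switch an IPoIB interface to connected mode, then raise its MTU, via sysfs.
# SYSFS_ROOT is a hypothetical testing override; it defaults to /sys.
ipoib_connected() {
  local dir="${SYSFS_ROOT:-/sys}/class/net/$1"
  # Mode first: in datagram mode the kernel rejects an MTU this large.
  echo connected > "$dir/mode" || return 1
  echo 65520 > "$dir/mtu" || return 1
  echo "$1: mode=$(cat "$dir/mode") mtu=$(cat "$dir/mtu")"
}
```

Run it as root, e.g. `ipoib_connected ib0`. The change doesn’t survive a reboot, so it also needs to live in your interface configuration.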


Once I switched to connected mode, my iperf test numbers went up to over 6 gigabits per second. That’s well short of the link’s 16-gigabit-per-second data rate (InfiniBand encodes each byte as 10 bits on the wire, so the 20-gigabit signaling rate carries 16 gigabits of data). It is just about as fast as a PCIe 1.0 InfiniBand card can go in my available 4x PCIe slot.

I guess I’m going to have to route!

It should be easy to set up a handful of IP subnets and route from my desktop to the KVM server, then from the KVM server to a virtual machine, right? It turned out to be much more difficult than I had anticipated. It took me an embarrassingly long time to pinpoint my problem, too. You’ll get to benefit from all the time I spent investigating this conundrum.

My Ethernet subnet is 10.42.254.0/24. My two InfiniBand ports are on the 10.42.253.0/24 subnet—10.42.253.1 is the KVM server and 10.42.253.2 is my desktop computer. I created a lonely bridge device on the KVM server named ibbr0—InfiniBand Bridge Zero. This bridge isn’t connected to any physical Ethernet devices, and its subnet is 10.42.252.0/24.


I added a second virtio network interface to my NAS virtual machine and connected it to ibbr0. At first, this seemed to work quite well, since I was able to ssh from my desktop to the NAS virtual machine via this route.

As soon as I ran iperf, though, I knew something was wrong. To say the speeds were slow would be an understatement. I was seeing speeds less than one megabit per second!

Don’t forget to enable packet forwarding!

I didn’t forget to enable packet forwarding on my KVM host, but I did forget to write about it here! If you don’t have ip_forward enabled, your host won’t bother to route packets for you.

To manually enable forwarding, you can run this command:

sysctl -w net.ipv4.ip_forward=1

To permanently enable IP packet forwarding, you can add this line to your /etc/sysctl.conf file:

net.ipv4.ip_forward=1

There are similar sysctl options to enable forwarding of IPv6 packets, such as net.ipv6.conf.all.forwarding.
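On distributions that read `/etc/sysctl.d`, a drop-in file is a tidy alternative to editing `/etc/sysctl.conf`, and it can cover IPv4 and IPv6 together. A sketch, where the file name `90-ipoib-forwarding.conf` is an arbitrary choice and `SYSCTL_DIR` is a hypothetical override for testing:

```shell
#!/bin/sh
# Write a sysctl drop-in enabling IPv4 and IPv6 forwarding and print its path.
# SYSCTL_DIR is a hypothetical testing override; it defaults to /etc/sysctl.d.
write_forwarding_conf() {
  local file="${SYSCTL_DIR:-/etc/sysctl.d}/90-ipoib-forwarding.conf"
  cat > "$file" <<'EOF' || return 1
net.ipv4.ip_forward = 1
net.ipv6.conf.all.forwarding = 1
EOF
  echo "$file"
}
```

After writing the file as root, `sysctl --system` reloads every sysctl configuration file without a reboot. Only keep the IPv6 line if you actually route IPv6 between these subnets.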

Don’t miss any MTU settings

I set the MTU of my new network device on the NAS virtual machine to 65520. This partially solved my problem. I was seeing 5 or 6 gigabits per second from the virtual machine to my desktop, but I still couldn’t even reach T1 speeds in the opposite direction.

To be honest, I was starting to pull my hair out at this point. Why was it slow in one direction? Every network interface I could think of had its MTU set to 65520. I even tried running socat on the KVM server to bypass the additional route, and that got me up to 1.5 gigabits per second. Why couldn’t I hit that when routing?

As it happens, I missed setting the MTU on an important interface. No matter how hard I tried, I just couldn’t set the MTU on ibbr0. You do need to set the MTU on the bridge device, but you can’t set the MTU on the empty bridge.

When my NAS virtual machine starts up, it creates a virtual Ethernet device named vnet0 and attaches it to ibbr0. Once I set the MTU on vnet0, the kernel lets me set the MTU on ibbr0. From that point on, I can get InfiniBand-speed traffic through to my NAS virtual machine!
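The order of operations is the whole trick: raise the MTU on every port attached to the bridge, then on the bridge itself. A minimal sketch using sysfs, where the bridge’s ports are listed under `/sys/class/net/<bridge>/brif/`; `SYSFS_ROOT` is a hypothetical testing override.

```shell
#!/bin/sh
# Raise a bridge's MTU in the order the kernel accepted in this setup: every
# attached port first, then the bridge. Ports appear under
# /sys/class/net/<bridge>/brif/. SYSFS_ROOT is a hypothetical testing override.
bridge_set_mtu() {
  local bridge="$1" mtu="$2" root="${SYSFS_ROOT:-/sys}" port found=0
  for port in "$root/class/net/$bridge/brif/"*; do
    [ -e "$port" ] || continue    # unmatched glob: the bridge has no ports
    found=1
    echo "$mtu" > "$root/class/net/$(basename "$port")/mtu" || return 1
  done
  if [ "$found" -eq 0 ]; then
    echo "$bridge has no ports yet; start the guest first" >&2
    return 1
  fi
  echo "$mtu" > "$root/class/net/$bridge/mtu"
}
```

With the NAS running, `bridge_set_mtu ibbr0 65520` raises vnet0 and then ibbr0; with no guest attached it refuses instead of failing silently.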

I do lose some speed when talking to the virtual server—roughly one gigabit in each direction. This is over five times faster than my Gigabit Ethernet connection, so I’m still quite pleased with the results!

Configuring the network interfaces

Setting up the IP-Over-InfiniBand configuration is easy enough.
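If you use Debian-style ifupdown, a persistent version of the KVM host’s side can look something like the stanzas this function prints. It’s a sketch, assuming bridge-utils is installed (for `bridge_ports`), the interface names ib0 and ibbr0, and 10.42.252.1 as the bridge’s address; review the output before appending it to `/etc/network/interfaces`.

```shell
#!/bin/sh
# Print ifupdown stanzas for the KVM host's IPoIB port and its empty bridge.
# Assumptions: Debian-style /etc/network/interfaces, bridge-utils installed,
# names ib0/ibbr0, and 10.42.252.1 as the bridge address.
kvm_host_stanzas() {
  cat <<'EOF'
auto ib0
iface ib0 inet static
    address 10.42.253.1
    netmask 255.255.255.0
    mtu 65520
    pre-up echo connected > /sys/class/net/$IFACE/mode

auto ibbr0
iface ibbr0 inet static
    address 10.42.252.1
    netmask 255.255.255.0
    bridge_ports none
EOF
}
```

There’s deliberately no `mtu` line on ibbr0, because the empty bridge won’t accept one until a guest is attached.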


Setting the MTU on the bridge device took a little more effort. I only have one virtual machine that benefits from InfiniBand speeds: my Network Attached Storage server. I need to set the MTU after the NAS virtual machine has started. Thank goodness libvirt offers QEMU hooks for this sort of thing!
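libvirt runs an executable at `/etc/libvirt/hooks/qemu`, if one exists, passing the guest name and an operation such as `started`. This is a sketch of such a hook rather than a verbatim copy of any real setup; the guest name `nas` and tap device `vnet0` are assumptions, and `IP` can be set to `echo` to preview the commands instead of running them.

```shell
#!/bin/sh
# Sketch of /etc/libvirt/hooks/qemu. libvirt calls it with the guest name as
# $1 and the operation (prepare, start, started, stopped, release, ...) as $2.
# Assumptions: the guest is named "nas" and its tap device is vnet0.
# Set IP=echo to print the commands instead of running them.
IP="${IP:-ip}"

qemu_hook() {
  # Only act once the "nas" guest is up and its tap is attached to ibbr0.
  [ "${1-}" = nas ] && [ "${2-}" = started ] || return 0
  # The port has to accept the large MTU before the bridge will.
  $IP link set dev vnet0 mtu 65520 || return 1
  $IP link set dev ibbr0 mtu 65520
}

qemu_hook "$@"
```

Make the script executable, and restart libvirtd if the hook file is new, since libvirt only discovers hook scripts when the daemon starts.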


The configuration inside the NAS virtual machine is much more straightforward. Just bring up the interface and add the route to the InfiniBand subnet.
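Inside the guest, that boils down to a few iproute2 commands. A minimal sketch, assuming the second virtio NIC shows up as eth1, the guest uses 10.42.252.2, and the host’s ibbr0 is 10.42.252.1; set `IP=echo` to print the commands instead of running them.

```shell
#!/bin/sh
# NAS guest: bring up the second virtio NIC at the IPoIB MTU and route the
# physical InfiniBand subnet through the KVM host's bridge address.
# Assumptions: NIC eth1, guest 10.42.252.2, host ibbr0 at 10.42.252.1.
# Set IP=echo to print the commands instead of running them.
IP="${IP:-ip}"

nas_ib_up() {
  local dev="${1:-eth1}"
  $IP link set dev "$dev" mtu 65520 up || return 1
  $IP addr add 10.42.252.2/24 dev "$dev" || return 1
  $IP route add 10.42.253.0/24 via 10.42.252.1 dev "$dev"
}
```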


And finally, here’s the InfiniBand configuration on my desktop.
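The desktop needs its own address on the InfiniBand subnet and a route to the virtual machines’ subnet through the KVM server. A sketch using the addresses from this post, with connected mode and the 65520 MTU on ib0 assumed to be set already; `IP=echo` previews the commands.

```shell
#!/bin/sh
# Desktop: join the physical IPoIB subnet and reach the VM subnet via the
# KVM server. Assumes ib0 is already in connected mode with MTU 65520.
# Set IP=echo to print the commands instead of running them.
IP="${IP:-ip}"

desktop_ib_routes() {
  $IP addr add 10.42.253.2/24 dev ib0 || return 1
  $IP route add 10.42.252.0/24 via 10.42.253.1 dev ib0
}
```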


Conclusion

Setting this up wasn’t difficult. The MTU issue wasn’t easy to spot. Now that I’m aware of it, though, it is simple enough to circumvent.

My InfiniBand setup has been running for more than two years now without any issues. At first, it was running on my power-sipping AMD 5350 KVM host. I’ve since transplanted the hardware to my new Ryzen 1600 KVM box. I didn’t have to reconfigure anything. I just moved the hard drives and InfiniBand card to the new machine, booted it up, and everything just worked.

I store the raw files from my Canon 6D and the quadcopter videos from my GoPro Session on my NAS virtual machine, and I interact with all those files over InfiniBand. For the most part, it is comparable to working with the files on the local machine. If I had to start from scratch, I would still choose InfiniBand.

Are you using InfiniBand or 10-Gigabit Ethernet at home? I’d enjoy hearing about your experiences! Feel free to leave a comment or join us on our Discord server!
