So, you’ve decided that you want to build your own NAS. I bet you’re investigating hard drive prices and your options for RAID levels. At least, I hope you are!

Should you buy the biggest disks on the market? Do you have to buy all your disks up front? Should you use ZFS or a more traditional RAID setup? How much redundancy do you need?

There are so many questions, and there is no single answer that fits everyone’s situation. I’m going to attempt to answer some of these questions from my current point of view. My hope is that you and I are in similar situations!

My home NAS stores my important, personal data. I created all of this important data myself, and I’m spending money out of my own pocket on this hardware. I paid for the cameras that take the photos and record the video. I paid for the drones that capture the video that accounts for the bulk of my storage these days. I paid for the server and all the hard drives.

I prefer to keep as much money in my wallet as possible. In the past, it has been my job to spend other people’s money on things like this. They paid me to choose the hardware. They paid me to configure the hardware. They paid me to maintain and support the hardware. Well, not just me. In most cases, I was part of a team of people.

When spending other people’s money, I’m happy to pay for extra stuff. If there’s a 0.1% chance that using something like ECC RAM could keep me from getting out of bed at 3:00 AM, that is worth quite a few hundreds or thousands of extra dollars; after all, it isn’t my money!

If my home NAS fails at 3:00 AM, I won’t notice until the afternoon of the next day. I have backups. I know my data will be easily available on another machine in the house. If the house burns down, all my data is sitting on a different continent. I’ll be able to get it back.

- What Should I Do If I Can’t Fill My New NAS Server Full Of Disks?

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines at patshead.com

- DIY NAS: 2019 Edition at Brian’s Blog

- Adding Another Disk to the RAID 10 on My KVM Server

- Brian’s Top Three DIY NAS Cases as of 2019 at Butter, What?!

Do I need to buy all my drives up front?

It depends.

When my friend Brian built his first DIY NAS back in 2012, I told him that he needed to give ZFS a try. ZFS has been around a long time, but it was only a part of FreeNAS or FreeBSD for a couple years at that point. ZFS has a lot of useful, advanced features: block-level checksums, extremely flexible snapshot and volume management, and RAID rebuilds don’t touch unused parts of the file system.

Even today, though, ZFS has one major disadvantage over something like Linux’s software RAID system. ZFS doesn’t allow you to add a drive to an existing RAID-Z vdev; you can only grow a zpool by adding another whole vdev. If you want to be efficient with ZFS, you need to build your array with as many disks as you can right away.

Let’s say your little home server has room for eight drives. For the sake of simple math, let’s say you decided to use 1 TB drives that cost $100 each, and you want to have one disk worth of redundancy in your array.

If you buy eight disks and set them up as a ZFS RAID-Z array or a traditional RAID 5 array, it will cost $800 for 7 TB of usable space.

You don’t need 7 TB today. You only need 3 TB, and you know these 1 TB drives will be half the price in a couple years. So you buy four disks. Now your smaller RAID-Z has 3 TB of usable space, but it only cost you $400.

What do you do in two or three years when you run out of space? If you’re running ZFS, you can’t just plunk in another drive.

You can replace your 1 TB drives one at a time with 2 TB drives. When you replace the final drive, you will have doubled your space. This can be an expensive operation, and you may be stuck with four old, unused drives.

You can add four more drives. You’ll have to create a second RAID-Z array, either as another vdev in the same zpool or as a separate zpool, which means you will lose one more disk’s worth of storage to your RAID-Z redundancy. Maybe you’ve lucked out, though, and 2 TB drives now cost $100. You’ll have two redundant disks and a total of 9 TB of usable space. That’s nearly 30% more storage for the same price, and you’ve managed to gain a little more redundancy.

Will disks be twice the size for the same cost when you decide to upgrade? I can’t tell you that. So far, we’re just making up prices to help form a picture in your mind.
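
Here is a minimal sketch of the arithmetic behind those two options, in Python. It uses the same made-up prices as the example, and the function name is only for illustration.

```python
# Made-up numbers from the example: prices in dollars, sizes in TB.

def single_parity_usable_tb(disk_count, disk_tb):
    """Usable space of a RAID-Z / RAID 5 style array: one disk's worth is parity."""
    return (disk_count - 1) * disk_tb

# Option A: fill all eight bays with 1 TB disks on day one.
upfront_cost = 8 * 100
upfront_tb = single_parity_usable_tb(8, 1)

# Option B: four 1 TB disks now, then four 2 TB disks later at $100 each.
staged_cost = 4 * 100 + 4 * 100
staged_tb = single_parity_usable_tb(4, 1) + single_parity_usable_tb(4, 2)

extra_percent = (staged_tb - upfront_tb) / upfront_tb * 100

print(f"Up front: ${upfront_cost} for {upfront_tb} TB")
print(f"Staged:   ${staged_cost} for {staged_tb} TB")
print(f"Staged buying gives {extra_percent:.1f}% more usable space")
```

The staged purchase only comes out ahead if the price per terabyte really does fall by the time you expand.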

You can add disks to Linux’s software RAID arrays after they’re built

Let’s go back to the point where you decided to start with four drives, but instead of running ZFS, let’s use Linux’s MD layer to build a RAID 5 array.

What happens in two years when you run out of space? You don’t have to spend $400 on another set of disks. You can buy just one more 1 TB disk, or you can future-proof yourself a bit and add a 2 TB disk instead. Either way, you can pop that disk in, run a few simple commands, and in a few hours you’ll have an extra 1 TB of space available on your RAID 5 array.

Each time you begin to run low on space, you can simply buy another disk—at least until you fill up your case!

Don’t use RAID 5 or RAID-Z

RAID levels that use parity for redundancy, like RAID 5 or RAID-Z, are great when you’re on a budget and write speeds aren’t important for your workload. Any reasonable RAID 5 array will have no trouble saturating that Gigabit Ethernet network you have at home in either direction, and let’s face it, if write performance were truly important, you’d be using solid-state disks!

At some point, though, a single disk’s worth of redundancy started to get worrisome. You have an increased likelihood of a disk failure during a RAID rebuild, because you’re probably going to be touching every sector on every disk. On top of that, the odds of hitting an unrecoverable read error during a rebuild climb as drives get bigger, and for large arrays those odds were already getting uncomfortably high when disks were just 300 or 400 GB.

Disks are ten times larger now, so you really want to have two disks’ worth of redundancy in each array. This really goofs up the math on my RAID 5 examples above, doesn’t it? This means you should be using at least RAID 6, RAID-Z2, or RAID 10.

My previous example isn’t so bad with Linux’s software RAID 6!

Let’s go back. You bought four hard drives, but you’re going to use RAID 6 or RAID-Z2. This means you’re giving up two drives’ worth of storage to redundancy in each array.

In the case of both RAID 6 and RAID-Z2, you’ll spend $400 on hard drives, and you’ll have 2 TB of usable storage capacity. In both cases, half of your storage is allocated as redundancy.

In two years, you decide to add another four drives, but this time they’re 2 TB at $100 each. If you’re running RAID-Z2, you can create a second RAID-Z2 pool. You will have 6 TB of usable storage, and half of your storage space will be allocated as redundancy.

If you’re running a Linux MD RAID 6 array, you can restripe across these additional disks, and you don’t have to buy them all at once. Just to keep it fair, though, let’s say you did buy those same four additional 2 TB drives at $100 each.

Unless you rely on some fancy partitioning footwork, you’ll end up in roughly the same position. Linux’s RAID 6 implementation will ignore the second half of those 2 TB disks. You’ll have 6 TB of usable storage on your RAID 6 array, but you’ll have 4 TB sitting around. When you eventually replace the original four drives with larger ones, that space will become available.
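
A rough Python sketch of that comparison follows. It assumes MD sizes every member of the array to the smallest disk, which is what the paragraph above describes, and that each RAID-Z2 group stands on its own. The function names are hypothetical.

```python
def md_raid6_usable_tb(disk_sizes_tb):
    """One MD RAID 6 array: members are sized to the smallest disk, two are parity."""
    if len(disk_sizes_tb) < 4:
        raise ValueError("RAID 6 needs at least four disks")
    return (len(disk_sizes_tb) - 2) * min(disk_sizes_tb)

def md_raid6_unused_tb(disk_sizes_tb):
    """Space on the larger disks that the array cannot use yet."""
    smallest = min(disk_sizes_tb)
    return sum(size - smallest for size in disk_sizes_tb)

def raidz2_groups_usable_tb(groups):
    """Several independent RAID-Z2 groups: each one gives up two disks to parity."""
    return sum((len(group) - 2) * min(group) for group in groups)

old_disks = [1, 1, 1, 1]   # the original four 1 TB drives
new_disks = [2, 2, 2, 2]   # four 2 TB drives added two years later

print("Day one, either setup:", md_raid6_usable_tb(old_disks), "TB")
print("RAID-Z2, two groups:  ", raidz2_groups_usable_tb([old_disks, new_disks]), "TB")
print("MD RAID 6, one array: ", md_raid6_usable_tb(old_disks + new_disks), "TB")
print("MD space left idle:   ", md_raid6_unused_tb(old_disks + new_disks), "TB")
```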

What do you think? Should I write a blog post about that supposedly fancy partitioning footwork?

There are too many made-up prices

I’ve been adding drives to my arrays at home for decades, but before I had a blog, I had absolutely no record keeping. In the real world, things tend to be messier than this. When you start with four drives, at least one of them will probably fail before you need to expand your array.

What do you do when a drive fails? I usually order a fresh drive from Amazon, then I wait for the manufacturer to process my RMA. That way, I don’t have to worry for too long about a degraded array. That also means I’ll have a spare disk in a few weeks, so I get to expand my array early!

That’s great, Pat, but what do these dollar figures look like in real life?

I am no longer the kind of guy that builds great, big NAS machines. There was a time when I stored a lot of data at home. My old desktop at home in 2001 had an 8-port 3Ware IDE RAID card and a stack of 30 GB IDE drives! Even after that, it was common for me to have a machine with at least six large SATA disks at home—my arcade cabinet has room for nine hard drives!

If you’re looking for a larger build, you need to talk to my friend Brian Moses. These days, my storage needs are rather small. When I built my Linux KVM virtual machine server for my office, I used a pair of 4 TB hard drives in a RAID 10 array for the bulk of the storage. You did read that correctly. It is a RAID 10 array with only two disks.

Flying FPV freestyle quads has resulted in a sharp increase in my data hoarding rate. If I had just left well enough alone, it would have taken at least six years for my DSLR photos to fill the storage on my NAS VM. I’m now accruing more FPV video every single month than I accrued in six years of shooting RAW photos with my DSLR!

This forced me into an early upgrade last year. This means I do have some real-world data!

In 2015, I bought a pair of 4 TB 7200 RPM drives for $140 each. That gave me 4 TB of usable storage for $280.

In 2018, I bought a third 4 TB 7200 RPM disk for $110. That brought my usable storage up to 6 TB for a total cost of $390. I know. That’s only a $30 savings! I wasn’t expecting to expand so soon, though.

Today, almost exactly one year later, I can buy an HGST 4 TB 7200 RPM disk for $72 with free one-day shipping. If I could have held out a little longer, I would have saved $70!
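
The running totals are easy to check with a few lines of Python. This is only a sketch of the arithmetic: the purchase list comes from the paragraphs above, and the helper names are made up for illustration. The last line assumes the $70 figure is measured against the 2015 price.

```python
DISK_TB = 4

# (year, disks bought, price per disk in dollars), taken from the text above.
purchases = [(2015, 2, 140), (2018, 1, 110)]

def raid10_usable_tb(disk_count, disk_tb=DISK_TB):
    """Linux RAID 10 with two copies of every block: half the raw space is usable."""
    return disk_count * disk_tb / 2

def tally(purchases):
    """Return (year, total disks, total spent, usable TB) after each purchase."""
    rows = []
    disks = 0
    spent = 0
    for year, count, price in purchases:
        disks += count
        spent += count * price
        rows.append((year, disks, spent, raid10_usable_tb(disks)))
    return rows

for year, disks, spent, usable in tally(purchases):
    print(f"{year}: {disks} disks, ${spent} spent, {usable:g} TB usable")

first_price = purchases[0][2]
print(f"Saved by buying the third disk in 2018: ${first_price - 110}")
print(f"Saved if it had been bought at $72:     ${first_price - 72}")
```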

Who cares about $70? This is too much work for such a small savings!

I haven’t just saved money. If I had bought three disks in 2015, all my drives would be five years old today. That’s not the case, though. Two of my drives are five years old, and one is only two years old.

I’m on track to add a fourth disk in about 12 months. Assuming I don’t have a drive failure before then, I’ll have two six-year-old drives, one three-year-old drive, and a brand new disk. I start to get nervous when all the drives in my array are four or five years old!

Next year, the average age of the disks in my array will be 3.75 years, and by that time I will have saved at least $140.
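
The average-age figure is simple to reproduce. Here is a small Python sketch using the drive ages from the paragraphs above; the variable names are just for illustration.

```python
def average_age(ages_in_years):
    """Mean age of the disks in an array."""
    return sum(ages_in_years) / len(ages_in_years)

# Today: two drives from the first purchase and one newer drive.
today = [5, 5, 2]

# In about 12 months: everything is a year older, plus one brand new disk.
next_year = [age + 1 for age in today] + [0]

# For comparison: three disks all bought at the start.
all_up_front = [5, 5, 5]

print("Average age today:       ", average_age(today))
print("Average age next year:   ", average_age(next_year))
print("If bought all up front:  ", average_age(all_up_front))
```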

What made you decide to use RAID 10? How can you have three disks in a RAID 10?

I didn’t need much storage, and I prefer to have two disks’ worth of redundancy. You can’t have fewer than four drives in a RAID 6 array. You can build a RAID 6 with a prefailed drive, and it will act like a RAID 5, but you still need three drives. At the time, I only needed about 1 TB of storage for my NAS and some extra scratch space for my other virtual machines.

I only needed two drives to build a RAID 10, and my virtual machines could benefit from the faster write speeds compared to RAID 5 or RAID 6.

I like to say that Linux’s RAID 10 implementation follows the spirit of the law rather than the letter. Linux’s RAID 10 implementation makes sure that each block exists on two disks—more if you want to be paranoid.

If you have two disks, Linux’s RAID 10 operates just like a RAID 1 mirror. You can lose either disk, and you won’t lose any data.

If you have an odd number of disks, like the three disks in my current array, Linux’s RAID 10 implementation will stagger your data. The first block will be on disks one and two, the second block will be stored on disks three and one, and the third block will be stored on disks two and three. With three disks, I can lose any single disk without losing my array.
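
The staggering is easier to see in code. This Python sketch is a simplified model of that placement, not the kernel’s actual implementation: it hands out copies to the disks in round-robin order, two per block. The function name is hypothetical.

```python
def near_layout(disk_count, block_count, copies=2):
    """Return, for each block, the tuple of disk indexes that hold its copies.

    Copies are handed out to the disks in round-robin order, so with an odd
    number of disks the pairs wrap around and stagger.
    """
    placement = []
    slot = 0
    for _ in range(block_count):
        placement.append(tuple((slot + c) % disk_count for c in range(copies)))
        slot += copies
    return placement

# Three disks, first three blocks. Disks are printed starting from one.
for block, disks in enumerate(near_layout(3, 3), start=1):
    names = " and ".join(str(d + 1) for d in disks)
    print(f"block {block}: disks {names}")
```

With two disks, the same function puts every block on both disks, which is the plain mirror described earlier.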

In all cases, there are two copies of my data spread across the array.

Things will be safer once I reach four disks. At that point, I will be able to lose up to two disks without data loss. Unlike RAID 6, which two disks I lose is extremely important! Your odds of surviving the loss of two disks in a RAID 10 increase with drive count. With RAID 6, you can lose any two disks without losing data.
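
To put rough numbers on those odds, here is a Python sketch that counts which pairs of failed disks would take out both copies of some block. It assumes the same simplified round-robin placement of two copies per block, and it treats every pair of disks as equally likely to fail, which real drives won’t honor.

```python
from itertools import combinations

def fatal_pairs(disk_count):
    """Pairs of disks that hold both copies of at least one block."""
    pairs = set()
    # The placement pattern repeats after at most disk_count blocks.
    for block in range(disk_count):
        first = (2 * block) % disk_count
        second = (2 * block + 1) % disk_count
        pairs.add(frozenset((first, second)))
    return pairs

def raid10_two_disk_survival(disk_count):
    """Fraction of two-disk failures that a two-copy RAID 10 survives."""
    all_pairs = list(combinations(range(disk_count), 2))
    fatal = fatal_pairs(disk_count)
    survivable = [pair for pair in all_pairs if frozenset(pair) not in fatal]
    return len(survivable) / len(all_pairs)

for disks in (3, 4, 6, 8):
    odds = raid10_two_disk_survival(disks)
    print(f"{disks} disks: RAID 10 survives {odds:.0%} of two-disk failures, RAID 6 survives 100%")
```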

Isn’t your cost per terabyte higher with RAID 10?

Yes, and the comparison will continue to get worse as my array grows. I appreciate the extra performance of my RAID 10 array, but I could definitely live without it. The biggest advantage for me was the up-front cost.

On day one, it cost me $280 to have 4 TB of usable space. Assuming I used 4 TB drives, and I would have, it would have cost $560 for four drives to have at least that much space available with RAID 6, or only $420 if I cheated and started with a prefailed drive. I would have had double the usable space, but I didn’t feel this was necessary at the time.

Next year, I expect to have 8 TB of usable RAID 10 storage, and it will have cost me $460 or less.

As far as storage is concerned, at four drives, RAID 10 and RAID 6 would be a tie. Starting with the fifth drive, RAID 6 would have netted me twice as much storage per additional drive. Because of the pair of SSDs in my home server, I can’t use more than four hard drives without adding a PCIe SATA controller. I’m going to do my best to avoid that anyway!
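
Here is a quick Python sketch of how the two layouts compare as the drive count grows. It assumes identical 4 TB disks and two copies of every block for RAID 10; the function names are only for illustration.

```python
DISK_TB = 4

def raid10_usable_tb(disk_count, disk_tb=DISK_TB):
    """Two copies of every block: half of the raw capacity is usable."""
    return disk_count * disk_tb / 2

def raid6_usable_tb(disk_count, disk_tb=DISK_TB):
    """Two disks' worth of parity, no matter how many disks are in the array."""
    if disk_count < 4:
        raise ValueError("RAID 6 needs at least four disks")
    return (disk_count - 2) * disk_tb

for disks in range(4, 9):
    r10 = raid10_usable_tb(disks)
    r6 = raid6_usable_tb(disks)
    print(f"{disks} disks: RAID 10 = {r10:g} TB, RAID 6 = {r6:g} TB")

# Capacity gained by going from four disks to five.
print("RAID 10 gain per extra disk:", raid10_usable_tb(5) - raid10_usable_tb(4), "TB")
print("RAID 6 gain per extra disk: ", raid6_usable_tb(5) - raid6_usable_tb(4), "TB")
```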

Calculating data growth, age of disks, and whatnot

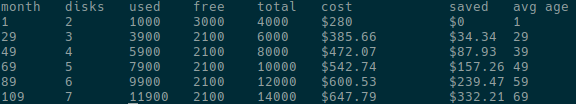

When I was growing my RAID 10 last year, I was already thinking about writing this blog post. I even wrote up a quick and dirty script to help predict some useful information: how often you’ll need to add a new drive, how much each new drive may cost, how much money you’ve saved so far, and the average age of the disks in your array.

My little Perl script is extremely dirty, and it isn’t nearly intelligent enough—that’s what happens when you spend less than 10 minutes on something like this!

I built in a concept something like depreciation. Each month, it assumes that a new drive will be slightly cheaper than the month before. I massaged that number to roughly align with my real-world experience, but that prediction will eventually fail.

4 TB drives will continue to drop in price right up until they stop manufacturing drives that small. Then the prices will start to slowly rise for a while. How do you build brains into the script to estimate when you should buy larger drives?!

I’m thinking about making the script a little smarter and converting it to JavaScript. We’ll see if I ever get around to it!
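
The original Perl script isn’t reproduced here, so what follows is a fresh Python sketch of the same idea rather than a port. It assumes a two-copy RAID 10 array, a fixed percentage price drop every month, and steady data growth. Every number in the example call is a placeholder, not a measurement, and the function and parameter names are hypothetical.

```python
def simulate(months, start_price, monthly_price_drop, disk_tb,
             start_disks, start_used_tb, growth_tb_per_month):
    """Step forward one month at a time, buying a disk whenever the array is full."""
    price = start_price
    ages = [0] * start_disks            # age of each disk, in months
    used_tb = start_used_tb
    spent = start_price * start_disks
    purchases = []                      # (month, price paid) for each added disk

    for month in range(1, months + 1):
        price *= 1 - monthly_price_drop     # the depreciation assumption
        ages = [age + 1 for age in ages]
        used_tb += growth_tb_per_month
        usable_tb = len(ages) * disk_tb / 2
        if used_tb > usable_tb:
            ages.append(0)
            spent += price
            purchases.append((month, round(price, 2)))

    return {
        "purchases": purchases,
        "spent": round(spent, 2),
        # Savings compared with buying every disk at the day-one price.
        "saved": round(start_price * len(ages) - spent, 2),
        "average_age_years": sum(ages) / len(ages) / 12,
    }

# Placeholder inputs: tune the price drop and growth rate to your own history.
result = simulate(months=60, start_price=140, monthly_price_drop=0.01, disk_tb=4,
                  start_disks=2, start_used_tb=1.0, growth_tb_per_month=0.08)
for key, value in result.items():
    print(key, value)
```

A constant monthly price drop has the same weakness described above: it never notices when a drive size is about to be discontinued.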

Conclusion

I feel like this post rambled on longer than it should have, so I guess it is time for my tl;dr!

I prefer being able to purchase my storage as needed, at least here at home. It saves me some money. More importantly, it keeps my hard drives fresher. The drives in your NAS are spinning around 24 hours a day. They’re going to wear out. The odds of a drive failure increase dramatically in the fourth and fifth years of service. It is best to keep your hard drives as young as possible!

Most importantly, please remember that RAID is not a replacement for backups! RAID helps prevent downtime. RAID does not keep your data safe.

Do you run ZFS? Do you fill your NAS with disks when you build it, or do you add storage as needed? Do you just hope to win one of Brian Moses’s NAS builds that is already stuffed full of hard drives? Tell me about it in the comments, or stop by [our Discord server][bw] to chat!
