A few weeks ago I decided on precisely how I was going to implement my own self-hosted cloud storage, and I even wrote up a blog entry describing what I was planning to do. I discovered Seafile the very next day, and I knew that I was going to have to throw my entire plan out the window.

Out of all the options I’ve investigated so far, Seafile is the most Dropbox-like. It can also be configured to keep a history of changes to your files. That means it can be used as a replacement for a significant portion of my backup plan.

Scaling back

I did make an attempt at syncing my entire home directory to Seafile. It was an epic failure. Seafile seemed to grow exponentially slower as it indexed more and more of my data. It took about six hours to index the first 19 GB. Eight hours later, and it was barely past the 20 GB mark.

Seafile slows down as the number of files in the library grows. My home directory has over 275,000 files, which seems to be about 200,000 files too many. Most of the solutions I investigated seem to have similar problems, so syncing my entire home directory is probably not going to be a viable option.

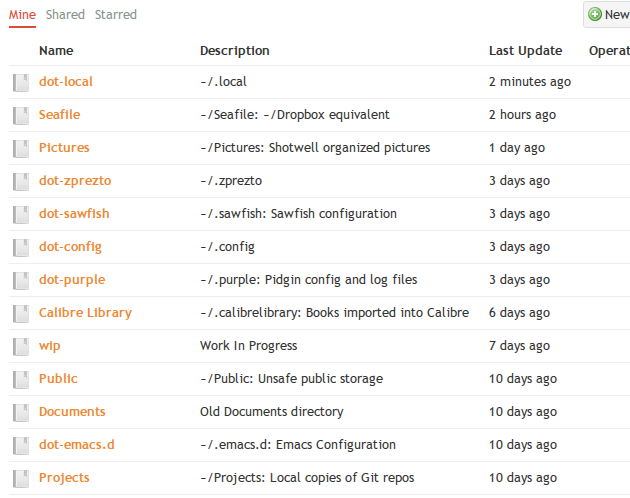

Seafile stores your data in “libraries”, and it lets you sync existing directories up to these libraries. I ended up creating a library for each of the directories that I currently back up on a daily basis, and I also created a more generic library that I called “Seafile”. I’m using the “Seafile” library much like I’ve been using my “Dropbox” directory.

These have been syncing for over a week now, and except for a few problems, I am very happy with the results. None of these libraries have more than about 25,000 files.
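
Before pointing a library at a directory, it can help to know how many files are in it. The following is a minimal sketch for that, not part of Seafile itself. The 25,000 default is only the rough size of my own largest library, not a documented Seafile limit, and the function names are made up for this example.

```python
import os
import sys


def count_files(root):
    """Count every non-directory entry under root.

    Symlinked directories are not followed, so a link loop cannot
    inflate the total.
    """
    total = 0
    for _dirpath, _dirnames, filenames in os.walk(root, followlinks=False):
        total += len(filenames)
    return total


def oversized(roots, limit=25_000):
    """Return {path: count} for each root holding more than `limit` files.

    The default limit is an assumption taken from my own libraries.
    """
    counts = {root: count_files(root) for root in roots}
    return {root: n for root, n in counts.items() if n > limit}


if __name__ == "__main__":
    # Print one count per directory named on the command line.
    for path in sys.argv[1:]:
        print(f"{count_files(path):>8}  {path}")
```

Run it against the directories you are thinking about syncing, and split up anything that comes back with a count far above what your existing libraries hold.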

Some things to watch out for with Seafile

I ran into two problems, but they are both easy to rectify.

Don’t auto-sync directories that change constantly

I am currently syncing approximately 6.5 GB of data. Four or five days after moving this data into my Seafile libraries, I nearly ran out of space on my Seafile virtual server. Seafile was eating up over 60 GB of space, and it had used a comparable amount of bandwidth.

I was syncing my Pidgin log files and configuration, and also my ~/.config directory. Most of the bandwidth and history space was being used by my constantly updating Pidgin logs and Google Chrome’s cookie database. When I disabled auto-sync on these libraries, the Seafile server dropped from a constant 200 to 300 KB per second of network usage to zero.

This was not a good permanent option, though. I definitely wanted to back up these two directories. Seafile’s command line tools don’t seem to have a way to force a sync on these libraries. I was able to cheat my way around that. I stole the URL from the “sync now” link in the local web interface, and I am querying it every 6 hours from a cron job.
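
A cron-driven request like that can be sketched in a few lines of Python. This is an illustration under assumptions, not the exact job I run: the script name and the URL in the usage note are hypothetical placeholders, and the real address has to be copied from the “sync now” link in your own client’s local web interface.

```python
import sys
import urllib.request


def trigger_sync(url, opener=urllib.request.urlopen, timeout=30):
    """Request `url` and return True if the answer was an HTTP 2xx.

    `opener` is injectable so the function can be exercised without a
    running Seafile client.
    """
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # urllib's URLError and HTTPError are both OSError subclasses,
        # so refused connections and HTTP errors end up here.
        return False


def main(urls):
    """Hit every URL once; return a non-zero exit status if any failed."""
    failed = [u for u in urls if not trigger_sync(u)]
    for u in failed:
        print(f"sync request failed: {u}", file=sys.stderr)
    return 1 if failed else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # URLs are passed as arguments so nothing is hard-coded here.
    sys.exit(main(sys.argv[1:]))
```

A crontab entry such as `0 */6 * * * python3 /path/to/seafile_sync_now.py 'URL-COPIED-FROM-SYNC-NOW-LINK'` would run it at the top of every sixth hour. Both the script path and the URL in that line are placeholders.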


Server side garbage collection

You have to periodically stop the Seafile server and manually run the garbage collector. That was the only way I could get rid of the 60 GB of cruft my server had collected. If I were still accumulating 10 GB of useless data every day, this might be problematic.

It now seems to be growing at a much more acceptable rate, though, so I’m not too worried about it. I’ll keep an eye on things for a month or so; then I’ll have a much better idea of how much bandwidth and disk space Seafile is going to eat up on me.

Performance

I didn’t think to run a stopwatch until I was syncing my final directory. It was 5 GB of photos. I kept an eye on the throughput numbers in Seafile’s local web interface. It would sit up at 4.5 MB per second for a while, and then it would idle for a while. Sometimes it would sit somewhere in between for a while.

I do know that the entire process from indexing to uploading all my photos came out to an average speed of around 2.5 MB per second. I’ve watched Dropbox.
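
To put those numbers in terms of wall-clock time, here is a small back-of-the-envelope sketch. It assumes decimal units (1 GB = 1,000 MB) and treats the 5 GB and 2.5 MB per second figures as exact, which they are not, so read the results as rough estimates.

```python
def average_mb_per_s(total_bytes, elapsed_seconds):
    """Average throughput in MB per second (decimal megabytes)."""
    return total_bytes / elapsed_seconds / 1_000_000


def seconds_needed(total_bytes, mb_per_s):
    """Time in seconds to move total_bytes at a steady mb_per_s."""
    return total_bytes / (mb_per_s * 1_000_000)


photos = 5 * 1_000_000_000  # "5 GB of photos", decimal units assumed

# Minutes at the observed 2.5 MB/s average, indexing included.
print(seconds_needed(photos, 2.5) / 60)

# Minutes if the transfer had held the 4.5 MB/s peak the whole time.
print(seconds_needed(photos, 4.5) / 60)
```

At the average rate the whole sync works out to a little over half an hour, against under twenty minutes if it had stayed at its peak.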

My Seafile control script

I put together a little script to make starting, stopping, and garbage collecting a little easier and safer. I was worried that I would have to run the garbage collector repeatedly, and I wanted to make sure I wouldn’t accidentally do that with the service running.
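
A rough sketch of a control script along these lines, written in Python for illustration. Several things here are assumptions and are not taken from my setup: the install path, the `seaf-gc.sh` collector name (the command differs between Seafile releases), and the `seaf-server` process name used to detect a running server. The point is the guard that refuses to collect garbage while the service is up.

```python
import subprocess
import sys

# Assumptions, adjust for your install:
SEAFILE_DIR = "/opt/seafile/seafile-server-latest"  # hypothetical path
GC_COMMAND = ["./seaf-gc.sh"]                       # assumed; varies by release

START = [["./seafile.sh", "start"], ["./seahub.sh", "start-fastcgi"]]
STOP = [["./seahub.sh", "stop"], ["./seafile.sh", "stop"]]


def server_running():
    """True if pgrep finds a process named exactly seaf-server (assumed name)."""
    result = subprocess.run(["pgrep", "-x", "seaf-server"],
                            stdout=subprocess.DEVNULL)
    return result.returncode == 0


def plan(action, running):
    """Return the commands for `action`; refuse gc while the server runs."""
    if action == "start":
        return START
    if action == "stop":
        return STOP
    if action == "gc":
        if running:
            raise RuntimeError("stop the Seafile server before running gc")
        return [GC_COMMAND]
    raise ValueError(f"unknown action: {action}")


def main(action):
    """Run the planned commands from SEAFILE_DIR, stopping at the first failure."""
    try:
        # Only gc needs to know whether the server is up.
        running = server_running() if action == "gc" else False
        commands = plan(action, running)
    except (RuntimeError, ValueError) as err:
        print(err, file=sys.stderr)
        return 1
    for cmd in commands:
        subprocess.run(cmd, cwd=SEAFILE_DIR, check=True)
    return 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

It takes a single argument, `start`, `stop`, or `gc`. Keeping the decision in `plan` separate from the code that actually runs commands makes the safety check easy to test without a Seafile install.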


My installation is running under nginx for SSL support. If you’re not running under a standalone web server, you will need to change ./seahub.sh start-fastcgi to ./seahub.sh start.