That title should be longer. I don’t want to exclude 4-bay and 8-bay USB SATA enclosures, but I didn’t want to waste so many extra words in the front of the title!

If you had asked me this question ten or twenty years ago, I would have laughed at you.

When I consolidated down from a desktop and a small laptop to a single beefy laptop sometime around 2008, I stuffed all my old 40-gigabyte IDE hard drives into individual USB 2.0 enclosures so I could continue to back up my important data to my old RAID 5 array. It did the job, but even with light use I would get timeouts and weird disk restarts fairly often. I wish I had a photo of this setup.

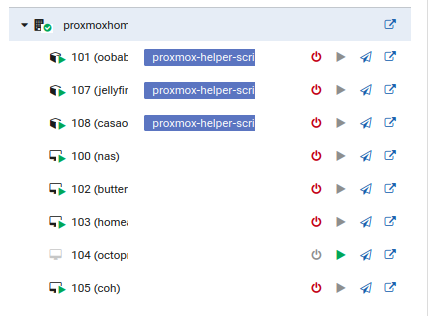

The Cenmate 6-bay enclosure is about half as wide as the Makerunit 6-bay 3D printed NAS case. My router-style N100 mini PC Proxmox server is my only mini PC that is WIDER than the Cenmate enclosure!

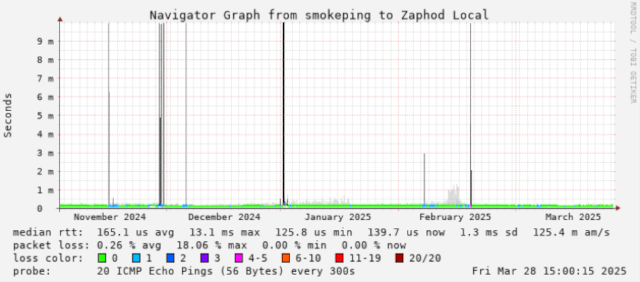

A lot of time has passed since those days, and USB has seen plenty of upgrades, updates, and improvements along the way. I have had a Raspberry Pi with a 14-terabyte USB drive at Brian Moses’s house since 2021, and I have also had a similar 14-terabyte USB hard drive set up as the storage on my NAS virtual machine since January of 2023. Both have been running without a single hiccup for years.

External USB hard drive enclosures are inexpensive, reasonably dense, and don’t look half bad. They also allow for a lot of flexibility, especially if you want to mix and match your homelab’s collection of mini PCs.

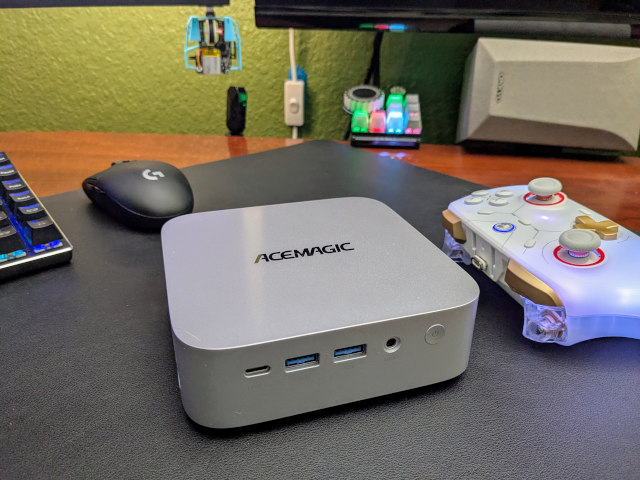

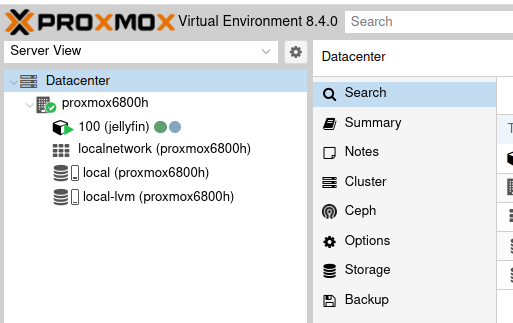

- Proxmox On My New Acemagician Ryzen 6800H Mini PC And Jellyfin Transcode Performance

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Cenmate 6-bay USB SATA Hard Disk Enclosure at Amazon

Let’s talk about the pricing!

A good way to compare storage servers is using price per 3.5” drive bay. I’ve usually said that anything under $200 per bay isn’t bad, and anything down at $100 per bay is quite frugal.

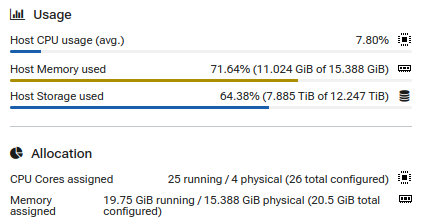

You can buy a two-bay toaster NAS with an Intel N100 for $230, which works out to $115 per bay. There is also the AOOSTAR 4-bay WTR PRO NAS with quite a bit of CPU horsepower for $539, and that’s $134 per bay. UGREEN has been pricing their small NAS boxes pretty aggressively. The UGREEN 4-bay regularly goes on sale for $500, and the 8-bay sells for $1350. That is $125 and $168 per bay, respectively, but you do get a CPU and network adapter upgrade out of the 8-bay model.

USB SATA enclosures seem to range from $25 to $40 per 3.5” drive bay. The 6-bay model that I ordered cost $182, which works out to $30 per bay. Of course, that isn’t directly comparable to a full NAS box from UGREEN or AOOSTAR on its own.

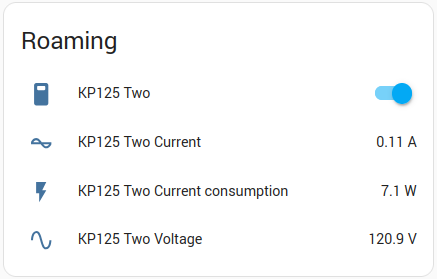

The Intel N100 mini PC that I plugged my enclosure into cost me $140. Adding that up works out to a delightfully frugal $53 per drive bay!

My most expensive mini PC is the Ryzen 6800H that I currently use for living-room gaming. Let’s assume we are buying a RAM upgrade to push that Acemagician M1 as far as it will go. We’d be up at about $450 with 64 gigabytes of RAM. That would put our 6-bay Ryzen 6800H NAS at $105 per bay. That is still a really good value.
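Price per bay is just the total cost of the hardware divided by the number of 3.5” bays. Here is a quick Python sketch of that arithmetic using the prices quoted above. The `price_per_bay()` helper and the labels are made up for this example, and it prints to the cent instead of rounding the way I did in the text.

```python
def price_per_bay(*costs: float, bays: int) -> float:
    """Add up every part of the build and divide by the number of 3.5-inch bays."""
    if bays <= 0:
        raise ValueError("bays must be a positive number")
    return sum(costs) / bays


# Prices quoted in this post, in US dollars.
builds = {
    "2-bay N100 toaster NAS": price_per_bay(230, bays=2),
    "AOOSTAR WTR PRO 4-bay": price_per_bay(539, bays=4),
    "UGREEN 4-bay on sale": price_per_bay(500, bays=4),
    "UGREEN 8-bay": price_per_bay(1350, bays=8),
    "Cenmate 6-bay on its own": price_per_bay(182, bays=6),
    "Cenmate 6-bay + $140 N100 mini PC": price_per_bay(182, 140, bays=6),
    "Cenmate 6-bay + $450 Ryzen 6800H": price_per_bay(182, 450, bays=6),
}

# Print a little table, one build per line.
for name, per_bay in builds.items():
    print(f"{name:36} ${per_bay:8.2f} per bay")
```

The N100 build comes out to $53.67 per bay and the Ryzen 6800H build to $105.33 per bay, which lines up with the numbers above give or take a little rounding.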

- Using A Ryzen 6800H Mini PC As A Game Console With Bazzite

- AOOSTAR WTR PRO 4-bay NAS at Amazon

- UGREEN NASync storage servers at Amazon

- Trigkey N100 Mini PC at Amazon

USB drive enclosures keep you flexible

When you buy or build a purpose-built NAS server, you wind up locking yourself in. If you choose a case with 5 drive bays, then you’re probably going to have to swap all your gear into a new case if you decide you need 8 bays.

As long as I have a free USB port, I can plug in another 6-bay or 8-bay enclosure when I run out of storage next year. Many of the options on the market can be daisy chained, so you can plug one enclosure’s USB cable into the previous enclosure. Even if they couldn’t, you could always buy a quality USB hub and have the same flexibility.

Daisy chaining or using a hub will limit your available bandwidth.

The Cenmate enclosure hanging out with a Ryzen 6800H mini PC, an Intel N100 mini PC, and a Seagate 14-terabyte USB hard disk while I was loading it up with disks. It is roughly the same width as the Acemagician M1.

Outgrow your Intel N100 mini PC? Swap in a new mini PC with a Ryzen 5700U or Ryzen 6800H. Is the mini PC running your NAS virtual machine acting up? Migrate the VM’s boot disk to another mini PC and move the USB enclosure. You aren’t locked into any single configuration.

Not only do I have those options, but all my computers run Linux. If something goes completely wrong, I could carry the USB enclosure from my network cupboard to my desk, plug it into my desktop PC, and have immediate, fast, direct access to all my data. If there’s a fire, I can wander out of the house with my laptop and the drive enclosure, and I will have all my extra data with me in a hotel ready to be worked with.

Piling things on top of a drive enclosure in the homelab is pretty reasonable. The enclosure is roughly as wide as all but my largest mini PC, and that mini PC is 80% heat sink. If you go with an 8-bay enclosure, you should be able to fit two stacks of mini PCs on top if you tip the enclosure on its side.

There is a limit to how many mini PCs, 6-bay hard drive enclosures, and small network switches you can stack in your homelab before it gets unwieldy, but unlike a full 19” rack, you can almost always balance just one more mini PC on top if you have to!

Which enclosures did I look at?!

The first one I liked was an older Syba model that is available in 4, 6, or 8 bays. It looks dated, it doesn’t support daisy chaining, and it uses the older 5-gigabit USB 3 standard. It also has enough unused space at the bottom that it could have fit another drive in the same footprint, and that feels like a bummer. Syba has some of the lowest pricing per bay, and they also have an eSATA port on some of their enclosures. I used to use eSATA pretty regularly, but USB 3.0 is faster, and I don’t have any eSATA ports available to plug it into.

Then I looked at various enclosures from Yottamaster. Their enclosures carried the highest price tags. They look attractive, but Yottamaster doesn’t seem to offer one model of enclosure in different sizes. They all look completely different. Some models have daisy chaining, some have 5-gigabit USB ports, and some have 10-gigabit USB ports. My favorite thing about these was that they were easy to find on AliExpress.

I decided to purchase an enclosure from Cenmate. Their 2-, 3-, 4-, 6-, and 8-bay enclosures all look identical aside from height. They support daisy chaining, and their newest models have 10-gigabit USB 3. You could save a few bucks and go with their older 5-gigabit enclosures, but I figured it would be better to start with a newer model.

- Cenmate 6-bay USB SATA Hard Disk Enclosure at Amazon

- Syba 8-bay USB SATA Enclosure at Amazon

- Yottamaster 5-bay USB SATA Enclosure at Amazon

Why did I choose a 6-bay enclosure?

I was trying to balance the amount of storage I would waste on parity against wasting SATA bandwidth.

Running a RAID 5 with a 4-disk enclosure would dedicate 25% of my storage to parity, while running a RAID 5 with a 6-disk enclosure would only eat up about 17%. It also helps that smaller disks tend to cost less per terabyte than larger disks, though I will be spending a bit more on electricity.
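RAID 5 always spends one disk’s worth of space on parity, so the parity share is simply one divided by the number of disks. This little sketch assumes every disk in the array is the same size; the function name is made up for the example.

```python
from fractions import Fraction


def raid5_parity_share(disks: int) -> Fraction:
    """Fraction of raw capacity RAID 5 spends on parity, assuming identical disks.

    Parity is striped across every member, but it always adds up to one disk's worth.
    """
    if disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return Fraction(1, disks)


for n in (4, 6, 8):
    share = raid5_parity_share(n)
    print(f"{n} disks: {share} of the raw space, or {float(share):.1%}, goes to parity")
```

That prints 25.0% for four disks, 16.7% for six, and 12.5% for an 8-bay enclosure.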

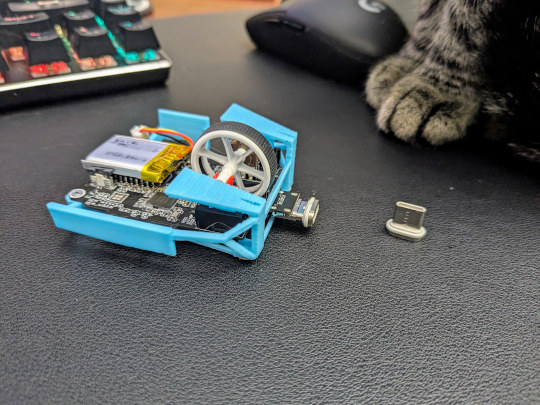

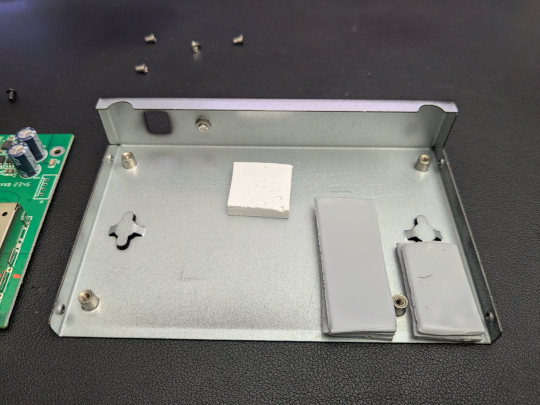

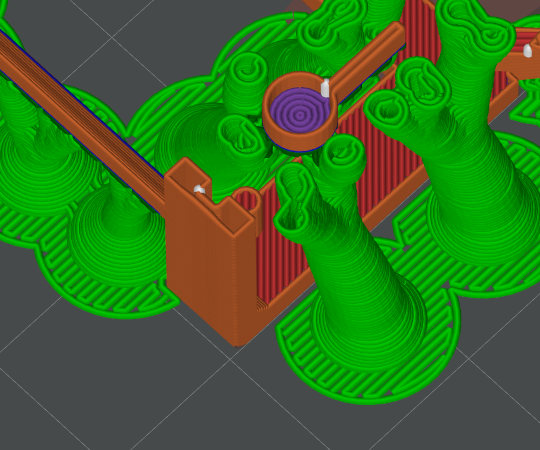

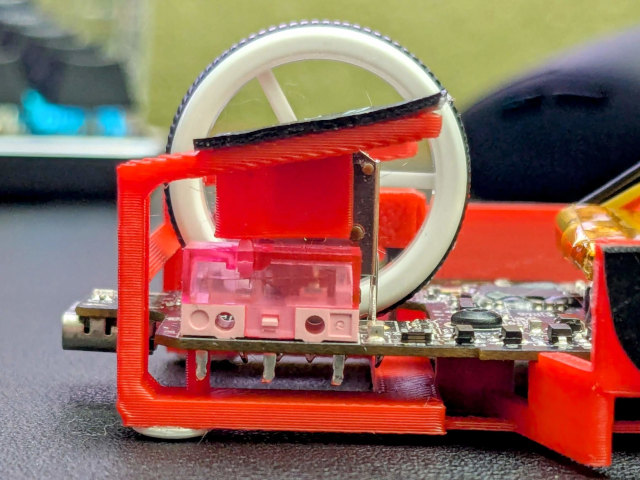

The Cenmate enclosure’s tool-free 3.5” trays are easy to use and the latch mechanism is quite satisfying to operate!

A 7200-RPM hard disk might top out at 250 megabytes per second on the outer tracks of the platter and drop as low as 120 megabytes per second on the inner tracks. A 10-gigabit USB 3 port can move somewhere around 1,000 megabytes per second in practice.

Split six ways, that leaves each drive with roughly 165 megabytes per second when all six drives have to be choochin’.
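Here is that bandwidth split as a rough Python sketch. It assumes the USB link is shared evenly between drives, uses the ballpark 1,000 megabytes per second figure from above, and caps each drive at its own platter speed. The helper name is hypothetical.

```python
def per_drive_mb_s(link_mb_s: float, drives: int, drive_max_mb_s: float) -> float:
    """Sequential throughput each drive gets when every drive streams over one shared USB link.

    Assumes the link is split evenly, and no drive can beat its own platter speed.
    """
    if drives <= 0:
        raise ValueError("need at least one drive")
    return min(link_mb_s / drives, drive_max_mb_s)


USB_10GBIT_MB_S = 1000  # rough real-world ceiling for a 10-gigabit USB port

for drives in (4, 6, 8):
    outer = per_drive_mb_s(USB_10GBIT_MB_S, drives, 250)  # fast outer tracks
    inner = per_drive_mb_s(USB_10GBIT_MB_S, drives, 120)  # slow inner tracks
    print(f"{drives} drives: {outer:.0f} MB/s each on outer tracks, {inner:.0f} MB/s on inner tracks")
```

With six drives, the USB link only holds the disks back while they are working on the outer tracks. By the time they reach the inner tracks, the platters are the bottleneck anyway. Daisy chaining a second enclosure onto the same port just bumps `drives` up while the link stays the same size.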

What do you think? Is that a reasonable compromise between maximum speed and wasted parity space? I think it is fine. My NAS virtual machine will be bottlenecked by my mini PC’s 2.5-gigabit Ethernet ports anyway.

Nothing even manages to be apples and oranges

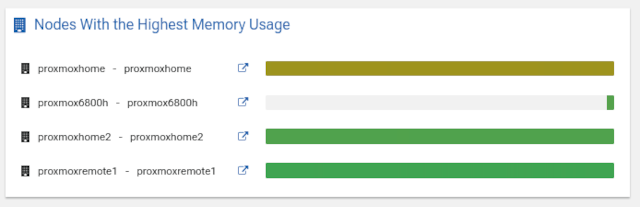

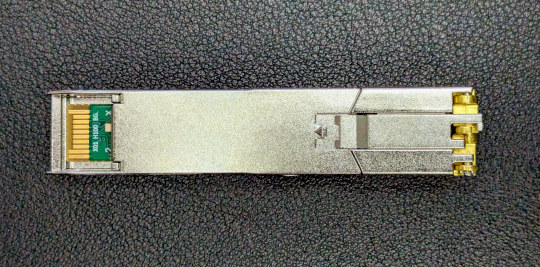

There’s a gap here. An N100 or N150 mini PC from Trigkey or Beelink can be found with a pair of 2.5-gigabit Ethernet ports just like a $500 4-bay UGREEN DXP4800, but the more costly 8-bay UGREEN NAS gets you an upgrade to a pair of 10-gigabit ports. Mini PCs with 10-gigabit ports tend to be some combination of rare, large, and expensive.

If you have a need for 10-gigabit Ethernet ports, then a 6-bay or 8-bay UGREEN NAS might work out to a better value. My suspicion is that the Venn diagram of people who need 10-gigabit Ethernet and the people who can get by using slow mechanical hard disks would be two circles that are barely even touching.

What are you planning on doing with your big hunk of bulk storage? I watch the occasional movie, but I can easily stream video to every screen in the house with 1-gigabit Ethernet. Sometimes I dump 30 gigabytes of video off my Sony ZV-1, but the microSD card is also way slower than 1-gigabit Ethernet. I run daily backups both locally and remotely, and my remote backups finish in a reasonable amount of time over my 1-gigabit Internet connection, so I won’t notice the difference between that and a 10-gigabit local backup.
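To put some numbers on that 30-gigabyte card dump, here is a back-of-the-envelope Python sketch. The 94% `efficiency` value is an assumed fudge factor for Ethernet and TCP overhead, not something I measured, and the function name is made up.

```python
def transfer_minutes(gigabytes: float, link_gbit_s: float, efficiency: float = 0.94) -> float:
    """Minutes to copy `gigabytes` (decimal GB) across a network link.

    `efficiency` is an assumed fudge factor for Ethernet/TCP overhead, not a measured value.
    """
    megabits = gigabytes * 1000 * 8
    usable_mbit_s = link_gbit_s * 1000 * efficiency
    return megabits / usable_mbit_s / 60


for link in (1, 2.5, 10):
    print(f"30 GB over {link}-gigabit Ethernet: {transfer_minutes(30, link):.1f} minutes")
```

Even over plain gigabit Ethernet, that works out to a little over four minutes, and the memory card is the slower part of the trip anyway.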

How do we know the USB enclosure won’t be junk?!

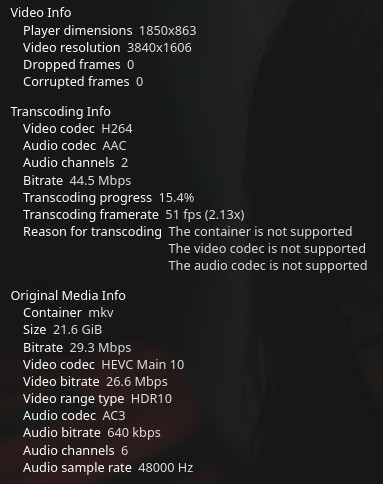

At the moment, we have no real idea! My Cenmate enclosure only just arrived, but I am working on being as mean to it as I can.

I stuffed it full of spare 4-terabyte SATA disks that I had lying around my office. I plugged the Cenmate enclosure into one of my mini PCs, I set up a RAID 5, and I attached that RAID 5 to a virtual machine. I made sure the virtual machine is light on RAM so not much will be cached.

I fired up tmux, and I have one window continuously looping over a dd job writing sequentially to a big, honkin’ file. I have another window running dd that will be forever reading the RAID 5 block device sequentially. I have a third window running an old-school bonnie++ disk benchmark.
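The dd loops only care about moving data, not about whether it comes back intact. If you want a loop that also checks the data, here is a rough Python sketch of the same idea: write a big file sequentially, fsync it, read it back, and compare SHA-256 hashes. The file name, chunk count, and function names are placeholders. Unless the file is much bigger than the VM’s RAM, the read-back may be served from the page cache instead of the disks, which is another reason to keep the VM light on RAM.

```python
import hashlib
import os
import tempfile

CHUNK = 1024 * 1024  # 1 MiB per write, roughly like dd bs=1M


def write_pass(path: str, chunks: int) -> str:
    """Write `chunks` MiB of random data sequentially, fsync it, and return its SHA-256."""
    digest = hashlib.sha256()
    with open(path, "wb") as f:
        for _ in range(chunks):
            data = os.urandom(CHUNK)
            f.write(data)
            digest.update(data)
        f.flush()
        os.fsync(f.fileno())  # ask the kernel to push the data out to the disks
    return digest.hexdigest()


def read_pass(path: str) -> str:
    """Read the file back sequentially and return its SHA-256."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(CHUNK):
            digest.update(chunk)
    return digest.hexdigest()


def torture(path: str, chunks: int, passes: int) -> int:
    """Repeat write-then-verify passes, raising as soon as a read-back doesn't match."""
    for n in range(passes):
        expected = write_pass(path, chunks)
        if read_pass(path) != expected:
            raise RuntimeError(f"pass {n}: read-back does not match what was written")
    return passes


if __name__ == "__main__":
    # Tiny demo on a temp file; point the path at the RAID 5 filesystem for a real run.
    with tempfile.TemporaryDirectory() as tmp:
        print(torture(os.path.join(tmp, "honkin.bin"), chunks=8, passes=2), "passes OK")
```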

I don’t care how fast any of this goes. The two separate sequential tasks will be fighting the benchmark task for IOPS, so it is all going to run very poorly. What I do care about is whether I can make any disks or the USB SATA chip reset or error out.
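Resets and errors end up in the kernel log, so one way to keep score is to count suspicious lines in `journalctl -k` or `dmesg` output. This Python sketch is only a starting point: the patterns are modeled on messages the xhci, uas, and block layers commonly print, the exact wording varies between kernel versions, and the sample lines are illustrative rather than copied from my machine.

```python
import re

# Patterns modeled on common kernel messages for USB storage trouble.
# Wording varies between kernel versions and drivers, so adjust as needed.
TROUBLE_PATTERNS = {
    "usb_reset": re.compile(r"reset (?:SuperSpeed|high-speed)\b.*USB device"),
    "uas_abort": re.compile(r"uas_eh_abort_handler"),
    "io_error": re.compile(r"I/O error, dev sd[a-z]+"),
}


def count_usb_trouble(log_lines):
    """Count how many kernel log lines match each trouble pattern."""
    counts = {name: 0 for name in TROUBLE_PATTERNS}
    for line in log_lines:
        for name, pattern in TROUBLE_PATTERNS.items():
            if pattern.search(line):
                counts[name] += 1
    return counts


# Illustrative sample lines, not real output from the Cenmate enclosure.
sample = [
    "usb 4-1: reset SuperSpeed USB device number 2 using xhci_hcd",
    "sd 2:0:0:0: [sdb] tag#5 uas_eh_abort_handler 0 uas-tag 6 inflight: CMD",
    "I/O error, dev sdb, sector 2048 op 0x0:(READ) flags 0x0 phys_seg 1 prio class 0",
    "md127: detected capacity change from 0 to 6442450944",
]
print(count_usb_trouble(sample))
```

Save the kernel log with something like `journalctl -k > kernel.log` and pass `open("kernel.log")` to `count_usb_trouble()`. All zeros after a week of abuse would be a very good sign.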

I will feel pretty good about it when it survives for a couple of days. I will feel great about it after it has been running for more than a week.

How are things going so far?

The follow-up to this blog post will be a more direct review of the Cenmate unit, but it seems appropriate to include what I learned on the first day with the enclosure!

I have a box with five 4-terabyte SATA drives. These used to live in the homelab server I built for myself in 2015. My plan was to stick those in the enclosure along with an underutilized 12-terabyte drive to build a 6-drive RAID 5.

One of those 4-terabyte disks is completely dead, and I haven’t extracted the 12-terabyte drive yet. I was impatient, so for today I set up a quick RAID 5 across the first terabyte of the four good drives.
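Linux md sizes every RAID 5 member to match the smallest one, so usable space is one less than the number of members times the smallest member. Here is a quick sketch of what that means for the planned array and for today’s quick test array. The function name is made up, and the sizes are in round decimal terabytes.

```python
def raid5_usable_tb(member_sizes_tb):
    """Usable space of a Linux md RAID 5: (members - 1) x the smallest member.

    Extra space on bigger members is not used by the array.
    """
    if len(member_sizes_tb) < 3:
        raise ValueError("RAID 5 needs at least three members")
    return (len(member_sizes_tb) - 1) * min(member_sizes_tb)


planned = [4, 4, 4, 4, 4, 12]  # five 4-terabyte disks plus the 12-terabyte disk
today = [1, 1, 1, 1]           # first terabyte of each of the four good disks

print(raid5_usable_tb(planned), "TB usable from the planned array")
print(raid5_usable_tb(today), "TB usable from today's quick test array")
print(12 - min(planned), "TB of the 12-terabyte disk left outside the array")
```

That works out to 20 terabytes for the planned array, 3 terabytes for today’s test array, and 8 terabytes of the big disk that the array won’t touch.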

The enclosure is plugged into a 10-gigabit USB port on my Trigkey N100 mini PC, and during the RAID rebuild, mdadm showed me hitting 480 megabytes per second of reads and 160 megabytes per second of writes. That is as fast as these old hard drives can go to build a fresh RAID 5 array. I also verified that smartctl is able to report on every drive bay.
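The 3-to-1 ratio between those numbers makes sense if mdadm did what its documentation says it does by default for a new RAID 5: create the array degraded and then rebuild onto the last member. That means three of the four members are being read while one is being written. Under that assumption, this small sketch splits the totals back out per drive; the function name is hypothetical.

```python
def per_drive_during_initial_build(total_read_mb_s: float, total_write_mb_s: float, members: int):
    """Split initial RAID 5 build throughput per drive.

    Assumes mdadm's default degraded-create behavior: every member but one is read,
    and the remaining member is written with the reconstructed blocks.
    """
    if members < 3:
        raise ValueError("RAID 5 needs at least three members")
    per_reader = total_read_mb_s / (members - 1)
    per_writer = total_write_mb_s  # only one member is being written
    return per_reader, per_writer


reads, writes = per_drive_during_initial_build(480, 160, members=4)
print(f"each source drive reads {reads:.0f} MB/s, the rebuilt drive writes {writes:.0f} MB/s")
```

Every disk moving about 160 megabytes per second at once adds up to 640 megabytes per second, well under what the USB port can handle, so the disks are setting the pace here.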

I pulled my spare 12-terabyte drive from my desktop PC and stuck it into a free bay in the Cenmate enclosure, and running dd to read data sequentially from five drives at once got me up to 950 megabytes per second. That is pretty much maxing out a 10-gigabit USB port.
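950 megabytes per second is 7.6 gigabits per second of actual disk data. A 10-gigabit USB port spends part of its signaling rate on 128b/132b line encoding and protocol overhead, so that is close to the ceiling. Here is a quick sketch of the conversion and how it stacks up against the 312.5 megabytes per second line rate of 2.5-gigabit Ethernet; the names are made up for the example.

```python
def mb_s_to_gbit_s(mb_per_s: float) -> float:
    """Convert megabytes per second (decimal) to gigabits per second."""
    return mb_per_s * 8 / 1000


MEASURED_MB_S = 950                  # five drives read in parallel with dd
ETHERNET_2_5G_MB_S = 2.5 * 1000 / 8  # 312.5 MB/s line rate, before any overhead

print(f"{mb_s_to_gbit_s(MEASURED_MB_S):.1f} Gbit/s of actual disk data over USB")
print(f"{MEASURED_MB_S / ETHERNET_2_5G_MB_S:.1f}x the line rate of 2.5-gigabit Ethernet")
```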

The Cenmate enclosure is louder than I hoped yet quieter than I expected. I usually measure the sound of my office with the meter sitting on the desk in front of me, because I care about what I can hear while working. Usually my idle PC’s quiet fans put me at around 36 dB.

The Cenmate is off to my side just barely in arm’s reach, and its fans push that up to 45 dB. I get a reading of 55 dB when I hold the meter up next to the unit. It isn’t ridiculously loud, but I will be happy to move it to my network cupboard at the end of the day!

The conclusion so far

So far so good! I paid around $30 per bay for a USB SATA enclosure with six 3.5” drive bays, and it is for sure able to move data about three times as fast as a 2.5-gigabit Ethernet port. It is cheap, fast, dense, and it even looks nice and clean. We’ll see if it winds up being reliable.

I have already moved the Cenmate enclosure to my network cupboard. Long-term testing is progressing, but it is progressing slowly. I keep finding out that my old hard disks are starting to have bad sectors or other weird problems, so I won’t be able to start properly beating on a full enclosure for a couple of weeks.

I believe that USB hard drive enclosures are a great way to add additional storage to your homelab, especially if you need space for big video files or more room for backups. The enclosures are inexpensive, extremely dense, and it sure looks like they’re going to wind up being reliable as well.

Have you been using a USB enclosure for your homelab’s NAS storage? Or are you a diehard SATA or SAS user? Join our Discord community! We’d love to hear about your successes or failures with USB storage!

- Proxmox On My New Acemagician Ryzen 6800H Mini PC And Jellyfin Transcode Performance

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Upgrading My Home Network With MokerLink 2.5-Gigabit Switches

- Mighty Mini PCs Are Awesome For Your Homelab And Around The House

- Cenmate 6-bay USB SATA Hard Disk Enclosure at Amazon