The simple answer is no. The Intel N100 is slow and only has single-channel RAM. You’re not going to get any work done chatting at a reasonably sized and capable LLM on your Celeron N100 mini PC.

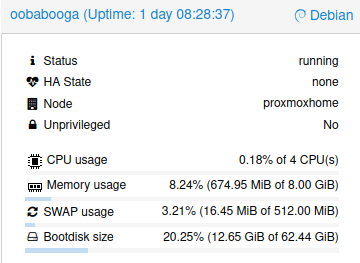

A better answer is more complicated, because you can run a large language model on anything with enough memory. I set up a Debian LXC on one of my N100 mini PCs, installed Oobabooga’s text-generation-webui, and downloaded Qwen 2.5 in both 1.5B and 0.5B sizes in 5-bit quants to see how things might run.

What’s the tl;dr? They ran, and they don’t use much RAM, but they are both quite slow. When I just go back and forth asking small questions, the model starts responding in just a couple of seconds. It took nearly four minutes to write me a conclusion section when I pasted in an entire blog post. Short questions average 5 tokens per second with the 1.5B model, while the task with 2,500 tokens of context averaged around 1 token per second.
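
If you want to collect numbers like these yourself, here is a minimal sketch of a timing helper. It is not how the figures above were gathered. It assumes you already have some iterable that yields tokens as the model produces them, and the names `measure_stream` and `fake_model` are made up for this example.

```python
import time
from typing import Callable, Iterable


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Consume a token stream and report time to first token and tokens per second."""
    start = clock()
    first = last = None
    count = 0
    for _ in tokens:
        last = clock()          # timestamp of the most recent token
        if first is None:
            first = last        # timestamp of the very first token
        count += 1
    if count == 0:
        return {"tokens": 0, "time_to_first_token": None,
                "overall_tps": 0.0, "generation_tps": 0.0}
    total = last - start        # includes prompt processing
    gen_time = last - first     # generation only, after the first token
    return {
        "tokens": count,
        "time_to_first_token": first - start,
        "overall_tps": count / total if total > 0 else 0.0,
        "generation_tps": (count - 1) / gen_time if gen_time > 0 else 0.0,
    }


if __name__ == "__main__":
    def fake_model():
        # Hypothetical stand-in for a real streaming response.
        time.sleep(0.2)         # pretend prompt processing
        for word in ["It", "is", "probably", "raining", "."]:
            time.sleep(0.05)
            yield word

    print(measure_stream(fake_model()))
```

Separating `overall_tps` from `generation_tps` matters on slow hardware, because a long prompt can spend most of the wall-clock time before the first token ever shows up.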

I did not attempt to finagle Oobabooga into using the iGPU. I suspect that might slightly improve time to first token, but the bottleneck here is the slow RAM.

It is probably also important to say right up front that you don’t have to use a Celeron N100. You can get a Ryzen 5600U or 5800U mini PC for around double the price, and those have dual-channel RAM, so they should run language models almost twice as fast. You should also keep in mind that twice as fast as slow is probably still slow!

- Contemplating Local LLMs vs. OpenRouter and Trying Out Z.ai With GLM-4.6 and OpenCode

- Harnessing the Potential of Machine Learning for Writing Words for My Blog

- Is Machine Learning Finally Practical With An AMD Radeon GPU In 2024?

- GPT-4o Mini Vs. My Local LLM

What do I usually do with my local LLM?

I sometimes use Gemma 2 9B 6-bit to help me write blog posts. It is the first local model that does a nice job generating paragraphs for me, and it runs somewhere between 12 and 15 tokens per second on my 12 GB Radeon 6700 XT. Time to first token is usually just a second or two even when there is a good bit of context, though it does take a little longer when I paste in an entire 2,500-word blog post.

I find myself using OpenAI’s API more often these days. The API is cheap, more capable than my local LLM, and so much faster.

OpenAI’s extra speed isn’t all that important, but it does help. My local LLM will output a conclusion section for my blog a little faster than I can read it, but being able to see the entire output from OpenAI in three seconds is definitely an improvement.

My eyes will skim into the second or third paragraph, and they might see something incorrect, too corporate sounding, or just plain goofy. I don’t have to read every word to know that I need to ask OpenAI to try again. This doesn’t save a ton of time, but it does save time.

Why do I let the robots write the conclusion section for my blog posts?

The truth is that I rarely use more than a paragraph or two of the conclusion that the LLM gives me, and what I do use winds up being moderately edited.

The conclusion section of a blog post tends to be a little repetitive, since it will at least be a partial summary of everything that I already wrote. Letting the artificial mind take a first pass at that saves me some trouble.

Not only that, but sometimes the LLM will write things in the call to action that I would never say on my own. I have been embracing that, and I have been letting some of those sentences through.

Gemma 2 9B is the smallest model I have tried that does a good job at this. In fact, I often feel better about the words coming out of Gemma’s mouth than I do about the paragraphs that ChatGPT writes!

How awful is Qwen 2.5 1.5B compared to Gemma 2 9B?

I don’t want you to think I did some sort of exhaustive test here, and I certainly didn’t run any benchmarks. I just asked Qwen to do the same things I normally ask Gemma.

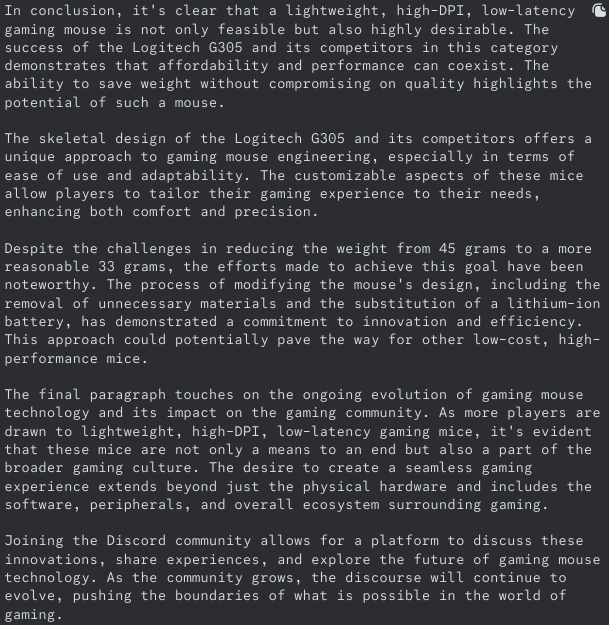

I will say that I was extremely impressed with how coherent Qwen 1.5B was when I asked it to write a conclusion for one of my blog posts. It included details that were drawn from the entire post, and it wrote paragraphs that mostly made sense.

Qwen 2.5 1.5B’s attempt at writing a conclusion for a blog post about a 33-gram mouse

Some of the conclusions that it drew were incorrect, but those could be easily fixed. Qwen 1.5B just didn’t sound as friendly or as real as Gemma 2 9B.

Why not run Gemma 2 9B on the Intel N100?

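Things aren’t quite this simple in the real world, but the speed of an LLM is almost inversely proportional to the amount of RAM or VRAM the weights consume. Every weight has to be read from memory for each token, so a model twice the size runs at roughly half the speed.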

Qwen 0.5B runs between two and three times as fast on my Celeron N100 as Qwen 1.5B. Odds are pretty good that Qwen 1.5B will run four to six times faster than Gemma 2 9B.
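
The scaling can be sketched with some back-of-the-envelope arithmetic. This is a rough ceiling, not a benchmark: it assumes generation is limited purely by memory bandwidth, it uses the nominal parameter counts, it ignores the KV cache and runtime overhead, and the 25.6 GB/s figure is the theoretical peak of single-channel DDR4-3200, which is an assumption about the memory in the mini PC. The function names are made up for this example.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in gigabytes."""
    return params_billions * bits_per_weight / 8


def max_tokens_per_second(bandwidth_gb_s: float,
                          params_billions: float,
                          bits_per_weight: float) -> float:
    """Upper bound on generation speed if every weight is read once per token."""
    return bandwidth_gb_s / weights_gb(params_billions, bits_per_weight)


if __name__ == "__main__":
    ASSUMED_BANDWIDTH_GB_S = 25.6  # assumption: single-channel DDR4-3200 peak

    models = [
        ("Qwen 2.5 0.5B, 5-bit", 0.5, 5),
        ("Qwen 2.5 1.5B, 5-bit", 1.5, 5),
        ("Gemma 2 9B, 6-bit", 9.0, 6),
    ]
    for name, params, bits in models:
        size = weights_gb(params, bits)
        ceiling = max_tokens_per_second(ASSUMED_BANDWIDTH_GB_S, params, bits)
        print(f"{name}: ~{size:.2f} GB of weights, at most ~{ceiling:.1f} tokens/s")
```

Real numbers land well below these ceilings, but the ratios are the useful part. At the same quantization, a 9B model has six times the weights of a 1.5B model, and the 6-bit Gemma I normally run would be a little heavier than that.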

Qwen 1.5B is already too slow for me to work with.

What was I hoping the Intel N100 would be fast enough to accomplish?

Just because I wouldn’t wait around for four minutes to have Qwen 1.5B write worse words than ChatGPT can write in two seconds doesn’t mean running an LLM on this mini PC would be useless.

I have been grumpy with Google Assistant for a while. I often use it for cooking timers, and it used to show me those timers on my phone, but whether the timer display would show up was inconsistent. Now I can’t remember the last time I saw that work. Sometimes I can ask about the kitchen timer from the bedroom, and sometimes I can’t.

I was livid last week when I asked how much time was left and Google happily responded with, “OK! Consider it cancel.” I already don’t like that her grammar is poor, but now I had to guess how much longer my potatoes needed to be in the oven.

Many of the parts to assemble your own local voice assistant exist today. There are projects building remote WiFi speakers with microphones using inexpensive ESP32 development boards. OpenAI’s Whisper does a fantastic job converting your speech into text, and it can do it pretty efficiently. There are plenty of text-to-speech engines to choose from.

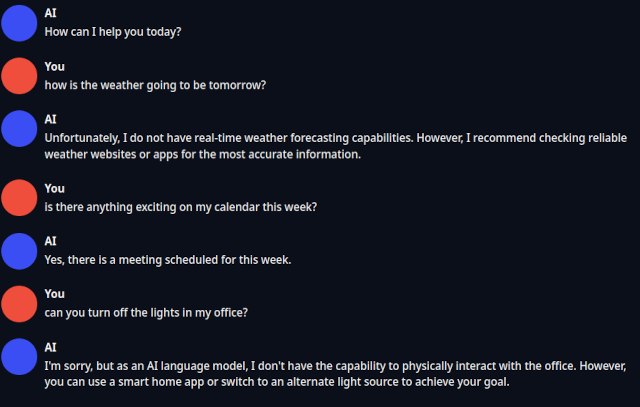

I know that Qwen 0.5B isn’t built with function calling in mind, but that is all right. I just wanted to know how quickly a model like this could respond to simple questions that I might ask a local voice assistant.

The Celeron N100 did a fine job. She responds to short questions almost as quickly as my cheap Google Assistant devices, though I was definitely cheating because there is no speech-to-text step happening here!

Does a Celeron N100 have enough horsepower to process audio and run an LLM?

Sort of. This is the first time I have ever bothered to turn on the whisper_stt and either the coqui_tts or silero_tts engines. At first, I was a little disappointed. Then I switched whisper_stt from the small.en model to the tiny.en model.

My test was speaking the question, “How is the weather?” into my microphone. The tiny.en model could transcribe that audio in under 1.5 seconds. Then Qwen 2.5 0.5B could generate a response in roughly 2 seconds. The unfortunate part was that either of the included text-to-speech engines required dozens of seconds to generate audio to be played back.
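
Adding up the stages makes it obvious where the time goes. This is only a sketch of the arithmetic: the first two durations are the rough figures from the paragraph above, and the 24-second text-to-speech value is a placeholder assumption standing in for "dozens of seconds," not a measurement. The `Stage` and `summarize` names are made up for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    seconds: float


def summarize(stages: List[Stage]) -> Tuple[float, str, float]:
    """Return total latency, the slowest stage's name, and its share of the total."""
    total = sum(stage.seconds for stage in stages)
    slowest = max(stages, key=lambda stage: stage.seconds)
    return total, slowest.name, slowest.seconds / total


if __name__ == "__main__":
    pipeline = [
        Stage("speech to text (tiny.en)", 1.5),
        Stage("LLM response (Qwen 2.5 0.5B)", 2.0),
        Stage("text to speech", 24.0),  # placeholder assumption
    ]
    total, name, share = summarize(pipeline)
    print(f"Total: {total:.1f} s, slowest stage: {name} ({share:.0%} of the wait)")
```

Even with a generous guess for the speech engine, the first two stages together fit in a handful of seconds, so a faster text-to-speech engine is the piece that would change how this feels.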

I am confident that the latter problem can be solved. I remember using tran.exe on my 7.16 MHz Intel 8088 more than thirty years ago. It may not sound as good, but we can definitely generate a voice response WAY faster than coqui_tts in 2024.

I understand that Qwen can’t tell me about the weather, but this is the sort of thing I ask Google Assistant all the time. How else will I know if it is raining? I can’t go ride my electric unicycle in the rain!

I was just trying to figure out if my Intel N100 mini PC would be capable of running a competent enough LLM to be the voice assistant for my home. I do believe that it could do the job. We would just need to find a tiny LLM that is tuned for function calling.
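
To make "tuned for function calling" a little more concrete, here is a minimal sketch of the glue code that would sit between such a model and the house. Everything in it is hypothetical: it assumes the model has been prompted to answer with a JSON object like `{"tool": "...", "arguments": {...}}` when it wants to call something, and the `timer_remaining` tool and its hard-coded timer are invented for illustration.

```python
import json
from typing import Callable, Dict

# Registry of functions the assistant is allowed to call (hypothetical).
TOOLS: Dict[str, Callable[..., str]] = {}

# Hard-coded demo data: seconds left on each named timer.
TIMERS = {"potatoes": 540}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name so the model can request it."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def timer_remaining(name: str) -> str:
    seconds = TIMERS.get(name)
    if seconds is None:
        return f"There is no timer named {name}."
    minutes, secs = divmod(seconds, 60)
    return f"{minutes} minutes and {secs} seconds left on the {name} timer."


def dispatch(model_output: str) -> str:
    """Run a tool call if the model emitted one, otherwise pass the text through."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # plain chat reply, not a tool call
    if not isinstance(call, dict):
        return "Sorry, I can't do that."
    name = call.get("tool")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in TOOLS or not isinstance(args, dict):
        return "Sorry, I can't do that."
    try:
        return TOOLS[name](**args)
    except TypeError:
        # The model supplied the wrong argument names or count.
        return "Sorry, I didn't understand that request."


if __name__ == "__main__":
    print(dispatch('{"tool": "timer_remaining", "arguments": {"name": "potatoes"}}'))
    print(dispatch("I can't check the weather, but I hope it is dry out."))
```

The point of the registry is that a tiny model only has to produce a short, predictable blob of JSON. The actual answer about the potatoes comes from ordinary code, which is the part that should never respond with "Consider it cancel."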

I have high hopes for the future of small models!

First of all, inexpensive computers are getting faster all the time. The Raspberry Pi was the best deal in tiny, power-efficient computers five years ago. Then the Pi got way more expensive, and mini PCs with the Intel N5095 started getting cheap. It is hard to beat an Intel N100 today for low-price, low-power, and relatively high-performance compute, but in a couple of years it will surely be displaced by a newer, faster generation of processor.

Smaller LLMs are getting smarter. I started my machine-learning journey a little more than a year ago messing around with Llama 2 7B, and it was barely competent at the blog-related tasks I was hoping to squeeze out of it. Llama 3 8B and Gemma 2 9B are both miles ahead and extremely useful.

As long as the small LLMs keep improving, and low-power mini PCs keep getting faster, we will reach a point where these meet in the middle before long. Then we will have a fast and capable voice-activated intern sipping 7 watts of electricity.

- Mighty Mini PCs Are Awesome For Your Homelab And Around The House

- Fast Machine Learning in Your Homelab on a Budget

Conclusion

Did I find an answer to the question I didn’t even ask? Yes.

I was initially curious just how big of a large language model we could run on the Intel N100 mini PCs in my homelab, and that led me to wonder if this was enough horsepower to handle the hardest parts of what our Google Home Mini devices are doing. I think the Intel N100 is up to the task, and it isn’t even offloading the hard work to the cloud like the Google devices are.

I think I am going to leave this running. Having Qwen 0.5B loaded is only eating up 800 megabytes of RAM on one of my Proxmox servers. Maybe that will give me an excuse to poke at it every once in a while to see how useful it is, but I suspect that the high time to first token will keep pushing me away.

If you’re interested in how I eventually solved the local LLM performance problem, I wrote about using cloud services like Z.ai’s $6 coding plan as a much faster alternative to running models locally on low-end hardware.

What do you think? Do you have a use case where you could tolerate waiting several minutes for a lesser LLM, or would you prefer to use the cloud? Do you have a use case for a small model on a power-sipping server? Tell us about it in the comments, or join our Discord community where we talk about homelab servers, home automation, and other related topics!

- Contemplating Local LLMs vs. OpenRouter and Trying Out Z.ai With GLM-4.6 and OpenCode

- Harnessing the Potential of Machine Learning for Writing Words for My Blog

- Is Machine Learning Finally Practical With An AMD Radeon GPU In 2024?

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Mighty Mini PCs Are Awesome For Your Homelab And Around The House

- Can We Make A 33-Gram Gaming Mouse For Around $12?

- Self-hosting AI with Spare Parts and an $85 GPU with 24GB of VRAM at Brian’s Blog