I am changing course again. I used to have a small 40-gigabit Infiniband network segment in my home office. This was necessary at the time because I stored video files on my NAS that I needed access to on my workstation, and video editing is a lot smoother when those files feel just as fast as if they were sitting on a SATA SSD installed in my workstation. It was also quite inexpensive and exceedingly cool.

I eventually decided to move my NAS and homelab server out of my office to cut down on the fan and hard disk noise in here. I didn’t have a good way to extend my 40-gigabit connection all the way to the other side of the house, so I wound up installing a 12-terabyte hard disk and a 1-TB SSD for lvmcache in my workstation. That let me keep a synced copy of my video files on my workstation, my NAS on the other side of the house, and my off-site server at Brian Moses’s house.

My home office’s new 4-port MokerLink 2.5-gigabit switch with two 10-gigabit SFP+ ports in its new 3D-printed home

While I was setting that up, I made a sort of informal decision that I would attempt to keep my file storage setup configured in such a way that I would never need more than a 100-megabit Ethernet connection. This was a good fit for the symmetric 150-megabit Internet connection I had at the time.

Things have changed since then. I have upgraded our home FiOS internet service to symmetric gigabit. Brian upgraded his service to symmetric 2-gigabit. I also swapped out my old homelab server and NAS for a mini PC with four 2.5-gigabit Ethernet ports. I knew it would only be a matter of time before I added a second device with 2.5-gigabit Ethernet ports.

That day has already passed, and I have enough USB 2.5-gigabit Ethernet dongles to upgrade two more machines. It seems like it is about time to upgrade my network cupboard to 2.5-gigabit Ethernet, and it also seems like it would be fun to connect my home office and my workstation back to the cupboard using 10-gigabit Ethernet.

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- Degrading My 10-Gigabit Ethernet Link To 5-Gigabit On Purpose!

- Six Months of lvmcache on My Desktop

- Eliminating My NAS and RAID For 2023!

- I Bought The Cheapest 2.5-Gigabit USB Ethernet Adapters And They Are Awesome!

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon

Here’s the tl;dr

Everything is working great. It took less than five minutes to swap out the switch in my office, and I was immediately seeing better than 2.3 gigabits per second between my desktop PC and my office’s Proxmox server via iperf3.

It didn’t take much longer to temporarily stash the 8-port managed MokerLink switch into my network cupboard. The web interface is basic but capable, and iperf3 is running just as fast in both directions between devices connected anywhere in my home.

The real summary is that the MokerLink 2.5-gigabit switches are exactly what they are supposed to be: boring! I plugged them in. They work. They are faster than the switches they replaced.

How fast is 2.5-gigabit Ethernet? Is it fast enough?

The speed of 2.5-gigabit Ethernet is just math, but math is boring, and it may not help us understand what these speeds are equivalent to. You want to know if the network is going to be your bottleneck, and you’d like to figure out if you could use the upgrade.

I put together a little table that describes what each Ethernet standard’s maximum performance is roughly equivalent to. The equivalents aren’t exact, but they’re reasonably close.

| Ethernet | Approx. megabytes/s | Rough Equivalent |
|---|---|---|
| 100 megabit | 12.5 | Slow |
| gigabit | 100 | 500 GB laptop hard disk |
| 2.5-gigabit | 250 | 3.5” hard disk or older SATA SSD |
| 5-gigabit | 500 | the fastest SATA SSDs |
| 10-gigabit | 1,000 | the slowest NVMe drives |
| 40-gigabit | 4,000 | mid-range NVMe drives |
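If you do want the math, here is a rough sketch of it. It converts each Ethernet line rate to megabytes per second, then estimates how much of that is left for TCP payload once Ethernet framing and headers are paid for. The assumptions are mine, not measurements: a 1500-byte MTU, IPv4 without options, TCP with the timestamp option, and no VLAN tags.

```python
# Rough model of how much TCP payload fits through an Ethernet link.
# Assumptions: 1500-byte MTU, IPv4 without options, TCP with the timestamp
# option enabled (a common Linux default), and no VLAN tags.
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + header + FCS + inter-frame gap
IPV4_HEADER = 20
TCP_HEADER = 32  # 20-byte base header + 12 bytes of timestamp option (with padding)


def tcp_efficiency(mtu: int = 1500) -> float:
    """Fraction of the line rate that ends up as TCP payload."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    on_the_wire = mtu + ETHERNET_OVERHEAD
    return payload / on_the_wire


def megabytes_per_second(megabits: float, efficiency: float = 1.0) -> float:
    """Convert megabits/s to megabytes/s (1 MB = 10**6 bytes)."""
    return megabits * efficiency / 8


if __name__ == "__main__":
    eff = tcp_efficiency()
    for name, megabits in [("100 megabit", 100), ("gigabit", 1_000),
                           ("2.5-gigabit", 2_500), ("5-gigabit", 5_000),
                           ("10-gigabit", 10_000)]:
        raw = megabytes_per_second(megabits)
        payload = megabytes_per_second(megabits, eff)
        print(f"{name:>11}: {raw:7.1f} MB/s raw, ~{payload:7.1f} MB/s of TCP payload")
```

By this estimate, 2.5-gigabit Ethernet carries about 294 MB/s of TCP payload, or roughly 2.35 gigabits per second, which is in line with the slightly-better-than-2.3 gigabits per second that iperf3 reports. File-sharing protocols like SMB or NFS take a little more off the top.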

What is wrong with these rough equivalents? Hard disks don’t have a steady throughput. They are faster at the outer edge of the platter and slow down as they approach the center. The largest modern 7200-RPM disks can reach a little over 300 megabytes per second on the fast end, but they’ll drop down to something a little below 150 megabytes per second on the slow end.
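Here is a back-of-the-envelope sketch of what that means when you copy files off a single NAS hard disk: the copy runs at whichever is slower, the disk or the network. The 300 MB/s and 150 MB/s disk figures come from the paragraph above; the 94% link efficiency and the 100 GB folder are assumptions for illustration.

```python
# A copy from one NAS hard disk over the network runs at the slower of the two.
# Assumptions: ~300 MB/s on the outer edge and ~150 MB/s near the center of a
# large 7200-RPM disk, and roughly 94% of the line rate left for file data.
DISK_OUTER_MB_S = 300.0
DISK_INNER_MB_S = 150.0
LINK_EFFICIENCY = 0.94  # assumed; real protocols (SMB, NFS) vary


def effective_mb_s(disk_mb_s: float, link_megabits: float) -> float:
    """Throughput of a disk-to-network copy, limited by the slower side."""
    link_mb_s = link_megabits * LINK_EFFICIENCY / 8
    return min(disk_mb_s, link_mb_s)


def copy_minutes(file_gb: float, rate_mb_s: float) -> float:
    """Minutes to move file_gb gigabytes (10**9 bytes) at rate_mb_s."""
    return file_gb * 1000 / rate_mb_s / 60


if __name__ == "__main__":
    for name, megabits in [("gigabit", 1_000), ("2.5-gigabit", 2_500),
                           ("10-gigabit", 10_000)]:
        fast = effective_mb_s(DISK_OUTER_MB_S, megabits)
        slow = effective_mb_s(DISK_INNER_MB_S, megabits)
        print(f"{name:>11}: {fast:6.1f} MB/s on outer tracks, {slow:6.1f} MB/s near the center")
        # Hypothetical 100 GB folder of video files.
        print(f"{'':>11}  100 GB takes {copy_minutes(100, fast):.0f} to {copy_minutes(100, slow):.0f} minutes")
```

In this model, 2.5-gigabit Ethernet roughly keeps pace with the fastest part of a single large disk, while a 10-gigabit link spends most of its time waiting on the disk.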

Your 10-gigabit Ethernet network also has a lot more latency than your local NVMe drive. While it is no trouble for a mid-range NVMe to manage 500,000 input/output operations per second (IOPS), adding a fraction of a millisecond of network latency plus an extra protocol like CIFS or NFS will severely impact your IOPS when accessing a remote NVMe drive over 10-gigabit or 2.5-gigabit Ethernet.
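Little's law makes the latency point concrete: the number of I/O operations per second is the number of requests in flight divided by how long each one takes. The sketch below uses made-up latency figures (80 µs for a local NVMe read, plus an assumed 200 µs of network round trip and SMB/NFS processing) and caps the result at the 500,000 IOPS mentioned above. It also assumes latency stays constant as queue depth grows, which real drives don't quite do.

```python
# Little's law for storage: IOPS = outstanding requests / time per request.
# The latency figures below are illustrative assumptions, not measurements.

def iops(latency_us: float, queue_depth: int = 1, device_max: float = 500_000) -> float:
    """I/O operations per second when each request takes latency_us microseconds,
    capped at the device's own maximum."""
    return min(queue_depth / (latency_us / 1_000_000), device_max)


LOCAL_NVME_US = 80.0      # assumed 4 KiB random-read latency for a local NVMe drive
NETWORK_EXTRA_US = 200.0  # assumed round trip plus SMB/NFS processing overhead

if __name__ == "__main__":
    for qd in (1, 32):
        local = iops(LOCAL_NVME_US, qd)
        remote = iops(LOCAL_NVME_US + NETWORK_EXTRA_US, qd)
        print(f"queue depth {qd:>2}: local ~{local:,.0f} IOPS, remote ~{remote:,.0f} IOPS")
```

At a queue depth of one, such as a single program issuing one request at a time, the extra latency in this sketch cuts IOPS by more than two thirds, no matter how fast the link is.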

Upgrading to 2.5-gigabit Ethernet is kind of a no-brainer these days. We are at or below $10 per switch port, USB 2.5-gigabit Ethernet dongles are under $10, and PCIe cards are in that ballpark as well. Not only that, but both 2.5-gigabit and 5-gigabit Ethernet will almost certainly work with the cables that are successfully carrying gigabit Ethernet signals.

I can tell you that one switch for each end of my house, three 10-gigabit SFP+ modules, a two-port 10-gigabit Ethernet card for my workstation, and a couple of USB 2.5-gigabit Ethernet adapters cost less than $200.

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon

Is 10-gigabit Ethernet worth the hassle?

This is a fantastic question! 10-gigabit Ethernet pricing is getting pretty good, but the price of the switches and network cards isn’t the only thing to consider.

Both 10-gigabit Ethernet switches and network interface cards tend to use a lot more electricity, so they also generate a lot more heat. 10-gigabit Ethernet over copper is also much pickier about cabling. Some people have success on shorter runs over Cat 5e cable. Officially, you only need Cat 6 cable for runs up to 55 meters, but some people have trouble even then, and they wind up trying multiple SFP+ transceivers in the hopes of getting a good connection.

I wouldn’t even be worrying about connecting my office to my homelab with 10-gigabit Ethernet if I weren’t writing a blog. I don’t need that extra performance, but it will be fun to tell you whether things work or not!

I helped Brian Moses run Cat 5e to every room in this house almost a decade before I bought the house from him. I know that there is somewhere around 50’ to maybe 60’ of Cat 5e cable between my home office and the switches in my network cupboard.

That is short enough that I may have success over Cat 5e. The RJ-45 transceivers I picked out also support 2.5-gigabit and 5-gigabit Ethernet, so it will still be a worthwhile upgrade even if I only manage half the speed.
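Here is a rough sketch for checking a copper run against the nominal reach figures commonly cited for 10GBASE-T (IEEE 802.3an) and 2.5GBASE-T (IEEE 802.3bz). Only a few combinations are listed, and anything not in the table is treated as unspecified. The 60-foot run is the upper end of my estimate above.

```python
# Rough check of a copper run against nominal twisted-pair reach.
# Only a few combinations are listed; anything missing is treated as unspecified.
METERS_PER_FOOT = 0.3048

# Nominal maximum channel length in meters. None means the standard does not
# specify that speed over that cable category.
NOMINAL_REACH_M = {
    ("Cat 5e", "2.5GBASE-T"): 100,
    ("Cat 5e", "10GBASE-T"): None,
    ("Cat 6", "10GBASE-T"): 55,
    ("Cat 6a", "10GBASE-T"): 100,
}


def check_run(category: str, standard: str, run_feet: float) -> str:
    """Describe how a run of run_feet compares to the nominal reach."""
    meters = run_feet * METERS_PER_FOOT
    reach = NOMINAL_REACH_M.get((category, standard))
    if reach is None:
        return f"{meters:.1f} m of {category}: {standard} not specified, so just try it"
    verdict = "within" if meters <= reach else "beyond"
    return f"{meters:.1f} m of {category}: {verdict} the nominal {reach} m for {standard}"


if __name__ == "__main__":
    for standard in ("2.5GBASE-T", "10GBASE-T"):
        print(check_run("Cat 5e", standard, 60))
```

Cat 5e at 10 gigabits falls into the "not specified" bucket, which is exactly why this is an experiment rather than a sure thing, and why the fallback to 2.5-gigabit or 5-gigabit matters.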

UPDATE: The trio of Xicom SFP+ modules arrived, and they are working great. The connection to the other side of the house is running from a 10-gigabit port on my office switch to the wall via a Cat 5 cable from the nineties, then through the attic on around 40 to 50 feet of Cat 5e cable Brian and I pulled in 2011, then connected from the patch panel in the network cupboard to the 10-gigabit port on the switch. It connected at 10 gigabits per second, and I managed to run enough simultaneous iperf3 tests over various 2.5-gigabit and 1-gigabit Ethernet connections to push around 6 gigabits per second over that link.
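One way to total up several simultaneous iperf3 tests is to have each client save a JSON report with `iperf3 -J` and add up the receiver-side throughput afterward. This is a minimal sketch that assumes TCP tests (it reads the `end.sum_received.bits_per_second` field) and that the tests really did overlap in time. It's worth checking the field names against your iperf3 version's output.

```python
import json
import sys
from pathlib import Path
from typing import Iterable


def received_gbps(report: dict) -> float:
    """Receiver-side throughput of one TCP test saved with `iperf3 -J`, in Gbps."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def load_report(path: str) -> dict:
    """Read one iperf3 JSON report from disk."""
    return json.loads(Path(path).read_text())


def aggregate_gbps(reports: Iterable[dict]) -> float:
    """Sum of several tests that ran at the same time across one shared link."""
    return sum(received_gbps(r) for r in reports)


if __name__ == "__main__":
    paths = sys.argv[1:]
    reports = [load_report(p) for p in paths]
    for path, report in zip(paths, reports):
        print(f"{path}: {received_gbps(report):.2f} Gbps")
    print(f"total: {aggregate_gbps(reports):.2f} Gbps")
```

For example, start `iperf3 -c <server> -t 30 -J > result-1.json` on each client at the same moment, then pass all of the JSON files to the script.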

- Xicom 10-gigabit SFP+ copper modules at Aliexpress

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?

When are you going to tell us what you ACTUALLY BOUGHT?!?!

MokerLink has three sizes of switch that I was considering, and each size is available in a managed or unmanaged variety. I waffled a lot on whether I was going to pay the extra $20 to put a managed switch in my network cupboard.

The number one reason on most people’s list for using a managed switch is to set up secure VLANs for their skeevy, untrusted Internet of Things hardware and oddball IP cameras with sketchy firmware that might phone home. They don’t want that janky gear being used as a foothold to hack their important computers.

I am not worried about that in the slightest. I don’t run anything important outside of my Tailscale network. As far as I am concerned, my private and protected VLAN is my Tailnet. That is all I need, but I bought a managed MokerLink switch for my cupboard anyway. I thought $20 was a small price to pay to let you know how I feel about MokerLink’s managed switches.

The three sizes on my radar were the 8-port and 5-port 2.5-gigabit Ethernet switches, each with a single 10-gigabit SFP+ port, and the 4-port model with a pair of SFP+ ports. I thought that I wanted two SFP+ ports in the cupboard so that future Pat would have more options available, but I realized that I could probably connect every in-use port in the house to a single 8-port switch. Math also told me that four 2.5-gigabit ports in the cupboard weren’t going to be enough.

I put a MokerLink 8-port managed 2.5-gigabit switch in the cupboard. Then I placed a 4-port unmanaged switch with a pair of SFP+ ports in my home office.

I only need a single 10-gigabit port in the cupboard to connect to my office. When the day comes that I need a second port, then it will definitely be time to pick up a dedicated 10-gigabit switch!

- Tailscale is Awesome!

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon

Fanless switches are awesome!

We put a pair of small, inexpensive, fanless gigabit Ethernet switches in Brian’s network cupboard in 2011. They are still running in my network cupboard today. Aside from power outages, they have been running 24/7 for 13 years.

They don’t use much power. They don’t generate much heat. Even more important, though, is that they don’t have any moving parts.

Moving parts like fans will eventually fail. It was important to me that my new switches have no moving parts, and these two MokerLink switches have no fans. There is a very good chance that both of these switches will still be in service in my house in another 13 years.

The network is the road that all of my computers have to travel on. I don’t want that road to be exciting, fancy, or even interesting. I want it to work, work well, and continue to function almost indefinitely.

Why MokerLink?

The homelab community in general seems to be pleased with MokerLink’s hardware, and I have friends who are already using some of their gear. The prices are pretty good, too!

I was extremely tempted to try one of the more generic-looking 2.5-gigabit switches. There are more than a few that look physically identical and have the same specs as MokerLink’s offerings, and those switches are 30% to 40% cheaper than MokerLink’s devices. The price gets even lower if you shop at Aliexpress.

I know that 40% sounds like a lot, but with these small switches, that winds up being only $25 or so.

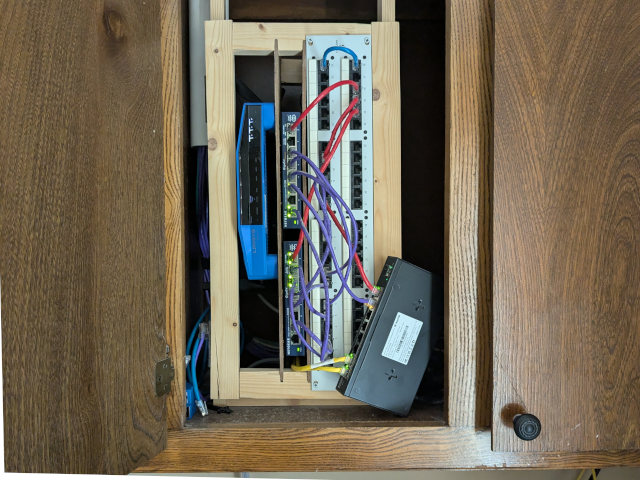

The 8-port MokerLink 2.5-gigabit switch is just hanging by its cables because I can’t install it until the 10-gigabit modules arrive!

MokerLink doesn’t have an 8-port 2.5-gigabit switch with TWO SFP+ ports. I did see some rather sketchy-looking and quite inexpensive 8-port switches that do, but I was weirded out by their odd faux-VLAN toggle button on the front of their hardware. This option seems similar to WiFi client isolation. I was tempted by the extra SFP+ port, but extremely turned off by the odd VLAN option.

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon

I am a dopefish and have a poor memory!

The back corner of where my two desks meet is REALLY far back there. I can’t reach that far unless I stand up and bend way over. It is a reasonable place to keep my office’s network switch. I can see if things are working, and I can reach back there if I have to when I am setting up a new laptop or server.

I designed a custom bracket to hold my old gigabit switch, and that bracket helps wrangle the patch cables and keeps everything tidy. I figured I should print a new bracket for my smaller MokerLink switch.

When I was working on that, I noticed that I was already out of switch ports, and I was kicking myself for not buying an 8-port switch for my office. I wound up redesigning the bracket to hold both the new MokerLink switch and my old gigabit switch.

The bracket is awesome, and I will leave it installed, but I didn’t realize until after I was finished installing it that I won’t need both switches.

I completely forgot that I would soon be using the two SFP+ ports! I’ll be moving the uplink cable from the wall to one of them, and I’ll be moving my workstation to the other. That will free up two ports, which is more than enough for testing things at my desk.

I also remembered why I didn’t order an 8-port switch for my office—MokerLink doesn’t even make a fanless 8-port switch with TWO SFP+ ports!

How is everything working out?

You have already read more than 1,500 words, and I haven’t even told you how the new hardware is working out so far. I will have to stick a tl;dr up at the top!

Everything is working great. I am getting slightly better than 2.3 gigabits per second via iperf3 in either direction. That holds between two devices connected to my office switch, and it also holds between a device on my office switch and a device on my network cupboard switch.

The web interface on the managed MokerLink switch is basic but quite functional. It is possible that I missed something, but I couldn’t find a way to limit MokerLink’s web management interface to particular ports or particular VLANs.

If you want to divvy up your network into VLANs for security purposes, I would expect that you’d want to make sure your guest WiFi, IoT, and DMZ VLANs can’t hammer away at the password to your management interface. I also wouldn’t expect that interface to be well hardened. It is up to you to decide whether this is a deal-breaker.

I don’t have enough 2.5-gigabit devices to simultaneously beat the crap out of every port on these switches, but ServeTheHome’s review of the unmanaged 8-port MokerLink switch seems to indicate that you can get quite close to maxing out every port.

I thought I would finally be able to max out Tailscale on the Intel N100 CPU!

I have a vague recollection of us learning that Tailscale’s encryption speed tops out at somewhere around 1.2 gigabits per second on the older Intel N5095 CPU. Depending on what you are measuring, the Celeron N100 is a 25% to 40% faster CPU. Surely it won’t quite be able to max out a 2.5-gigabit Ethernet port, right?!

My older but roughly comparable mini PC with a Ryzen 5 3550H CPU gets really close to maxing out all its CPU cores while reaching 2.15 gigabits per second via iperf3 over the encrypted Tailscale interface.

My Intel N100 mini PC manages 2.26 gigabits per second with a sizable chunk of CPU going unused. That is around 90 megabits short of the roughly 2.35 gigabits per second that iperf3 manages over the bare link, which is about how far short Tailscale falls when running over gigabit Ethernet as well.

I won’t have a good way to find the actual limit until my 10-gigabit hardware arrives in a couple of weeks, but I did skip ahead and set up a bonded LACP link using two of my unused ports on my router-style Proxmox server. We’ll see if that will help me max out Tailscale on the Intel N100 in a couple weeks!

UPDATE: I am able to get pretty close to 100% CPU utilization running iperf3 over Tailscale over my four bonded 2.5-gigabit ports. I am stuck at about 2.26 gigabits per second in the inbound direction, but I can reach 2.84 gigabits per second when going outbound.
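Some background on why bonding doesn’t simply multiply speed: link aggregation generally keeps every packet of a flow on one member link to avoid reordering, and it picks that link by hashing header fields (on Linux, the `layer3+4` transmit hash policy uses IP addresses and ports). Each end only hashes the traffic it sends, so the switch decides how inbound traffic gets spread. The toy model below uses SHA-256 as a stand-in hash, not the kernel’s actual formula, and the addresses are documentation-range placeholders. It is offered as one possible factor when pushing a single encrypted tunnel across a bond, not as an explanation of the numbers above.

```python
import hashlib
from collections import Counter


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Toy stand-in for a layer3+4 transmit hash: one flow always maps to one link."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % n_links


if __name__ == "__main__":
    links = 4
    # One long-lived UDP flow (41641 is Tailscale's default port), like a single
    # encrypted tunnel between two peers: every packet hashes to the same link.
    one_flow = {pick_link("192.0.2.10", "192.0.2.20", 41641, 41641, links) for _ in range(1000)}
    print("links used by one flow:", len(one_flow))
    # Many flows with different source ports (5201 is iperf3's default port)
    # can spread out across the members of the bond.
    spread = Counter(pick_link("192.0.2.10", "192.0.2.20", port, 5201, links)
                     for port in range(40000, 40064))
    print("flows per link for 64 flows:", dict(sorted(spread.items())))
```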

- Tailscale is Awesome!

- I Added A Refurbished Mini PC To My Homelab! The Minisforum UM350

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Mighty Mini PCs Are Awesome For Your Homelab And Around The House

Honorable mention

I picked up a 5-pack of CableGeeker’s 5’ flat-pack Cat 6 patch cables in a variety of colors. I dropped these cables down through a hole in the network cupboard to the table where our mini PCs and servers sit.

I am finally running out of good patch cables after more than two decades of hoarding. I have enough stock that I could crimp some fresh ones, but I thought it would be useful to color-code these cables that are difficult to trace, and I don’t know that I have enough colors on hand to do that.

I am using 10’ CableGeeker flat-pack cables all over my office now, and I have packed my various network toolkits and laptop bags with their cables. They are well made, and they have all done a good job of carrying 2.5-gigabit signals. We will find out in a couple of weeks whether they can also carry 10-gigabit Ethernet from the new SFP+ modules.

I am a big fan of flat network cables. They roll back up nicely, and they take up less room in your bag even if you’re not careful about how you wind them up. They’re also easier to hide along floorboards.

- Flat-pack 5-foot Cat 6 Ethernet cable multicolor assortment at Amazon

- Flat-pack 10-foot Cat 6 Ethernet cables at Amazon

What’s next?

I wasn’t going to publish this until after I had a chance to properly install the 8-port switch in my network cupboard, but I realized that I might need to bring it into my office for testing if I wind up having trouble with the 10-gigabit SFP+ modules. It isn’t going to be easy to fish it back out once installed in its proper home in the cupboard!

I have a three-pack of Xicom 10-gigabit SFP+ copper modules and a 2-port Intel 10-gigabit PCIe card ordered and on the way. UPDATE: The SFP+ modules have arrived, and they are working splendidly!

I did stick the corner of the new switch in between the two pieces of pegboard in my network cupboard, and the new switch will definitely fit where it needs to go. I am definitely relieved by this. Cutting a new swinging 19” rack would be a fun little CNC project, but the project that Brian and I worked on 14 years ago has historical significance. I’d like to see that simple rack still doing its job in another 14 years!

If you want to dive deeper into the world of home networking and connect with other tech enthusiasts, you should check out the Butter, What?! Discord community. We have a dedicated channel for homelab setups and discussions where you can share your experiences, ask questions, and get some helpful advice.

Leave a comment below and let me know what you think of this post!

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- Degrading My 10-Gigabit Ethernet Link To 5-Gigabit On Purpose!

- I Bought The Cheapest 2.5-Gigabit USB Ethernet Adapters And They Are Awesome!

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?

- It Is My Network Cupboard Now!

- I Added A Refurbished Mini PC To My Homelab! The Minisforum UM350

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Xicom 10-gigabit SFP+ copper modules at Aliexpress

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon