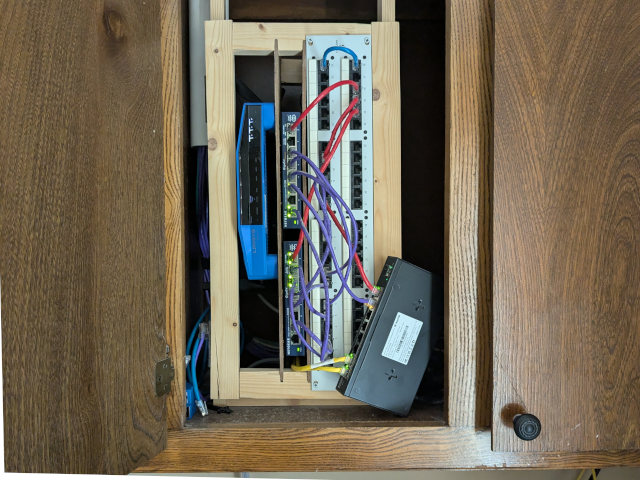

I ordered and installed a pair of MokerLink 2.5-gigabit Ethernet switches in my home about two weeks ago. I put an 8-port managed switch with one 10-gigabit SFP+ port in my network cupboard, and I put a 4-port unmanaged switch with two 10-gigabit SFP+ ports in my home office. I got that all running at 2.5-gigabit speeds immediately, but my intention was to set up a path from my workstation to the cupboard at 10 gigabits per second.

The MokerLink 2.5-gigabit switch sitting in its custom 3D-printed stand with all its 10-gigabit SFP+ ports running

The hardware to set up the required 10-gigabit Ethernet just wound up taking a little longer to arrive.

- Degrading My 10-Gigabit Ethernet Link To 5-Gigabit On Purpose!

- Upgrading My Home Network With MokerLink 2.5-Gigabit Switches

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?

- My Networking and NanoKVM Pouch For My Laptop Bag

- It Is My Network Cupboard Now!

Update: I’ve been having problems that I haven’t properly diagnosed!

I could write an entire 2,500-word blog post about what might be my problem, what I have done to troubleshoot, and why it is a pain in the butt to do the final bits of troubleshooting that might actually pinpoint my exact problem. I am going to try to keep it to three paragraphs.

This didn’t start happening immediately after publishing this blog, but at one point I started dropping packets on the 10-gigabit link across the house once a week. I’d switch to a 2.5-gigabit port for a few hours, swap back to the fast port, and everything would be fine for a week.

I decided that an overheating SFP+ module might be my problem. I swapped in my old 1-gigabit switch, took apart my MokerLink switch, and ordered a whole mess of thermal pads. When they arrived, I thermally connected the bottom of the PCB under the SFP+ module to the case, swapped the MokerLink back into service, and I could no longer get a stable 10-gigabit Ethernet connection for more than 20 seconds.

I left things at 2.5 gigabit for a month or so, then I decided to plug back into the 10-gigabit port a few days ago. Once again, I had no dropped packets for about a week. I had minor packet loss overnight, but everything was fine by the time I was here to troubleshoot.

My suspicion is that I have two problems. The 10-gigabit link wasn’t working at all during the coldest months of the year. Is a nearly freezing attic doing something to my Cat-5e cable? Are things better now because it has been warming up? We had a lot of rain, so maybe it is more humid now?

Maybe my intermittent problem of a few dropped packets is one of the SFP+ modules overheating. I added thermal pads to the MokerLink switch in my cupboard, but I didn’t modify the switch in my office. I had no need to try, because the link wasn’t working at all when the thermal pads arrived.

That was more than three paragraphs. I just wanted to post an update. This blog makes it seem like everything was sunshine and rainbows, and it was for a while. I’ve been avoiding this update because I wanted to be able to say something concrete. I don’t think I am there yet!

One last update!

I wound up buying a 5-gigabit SFP+ module to install in the MokerLink switch in my home office. I did switch back and forth between the 5-gigabit and 10-gigabit modules a few times, but I wound up sticking with the slower 5-gigabit module.

There is another Cat-5e cable in the same wall. It is connected to an RJ-45 jack in the bedroom on the other side. I have thought about digging into the wall to swap these around to see if the 10-gigabit link stays solid for more than a few months at a time, but I haven’t had the gumption to crawl around behind furniture to make that happen. There is a giant and fully loaded 5x5 Ikea Kallax blocking that port in the bedroom, and I am really not excited about trying to get behind it!

The 5-gigabit link has been absolutely rock solid.

- Lianguo 5-gigabit SFP+ module at Aliexpress

Let’s talk about the title of this blog!

Brian Moses and I ran Cat 5e cable to almost every room in this house in 2011. That was eight or nine years before I bought the place!

Both 2.5-gigabit and 5-gigabit Ethernet are designed to run over Cat 5e or Cat 6 cable with lengths up to 100 meters. 10-gigabit Ethernet over copper needs Cat 6A cable to reach that same 100 meters. I am not using any Cat 6A cable, but I also don’t have 328 feet of cable between my office and the network cupboard!

You can run 10-gigabit over pretty crummy cables if they’re short enough, but how short is short enough? I haven’t found any good data on this. I have mostly only read anecdotes, so I figured I should document my own anecdote here!

Some SFP+ modules only support 10-gigabit Ethernet. I decided to try these Xicom SFP+ modules because they also support 2.5-gigabit and 5-gigabit Ethernet. Even a 5-gigabit link would still be an upgrade, and the 3-pack of modules from Aliexpress cost about half as much as three of the cheapest SFP+ modules I could find on Amazon.

I had good luck. My connection across the house negotiated at 10 gigabits per second, and I haven’t seen any errors accumulating on the counters of the managed switch.

UPDATE: I added a bunch of RJ-45 couplers and extra Ethernet patch cables to make sure these Xicom SFP+ modules would actually attempt to negotiate a 5-gigabit Ethernet link between themselves. I was a bit worried that I’d be encouraging you to buy these in the hope that they could fall back if your wiring wasn’t up to snuff, only to have them fail to do what they were supposed to. I tested it. They dialed back to 5 gigabits per second just like we expected!

Exactly what kind of cabling am I working with here?

My house is a little over 50 feet wide, and the network jack in my office is almost that far away from the cupboard. The cable has to go up 8 feet to get to the attic, then it has to come back down 4 feet to reach the patch panel in the network cupboard. It isn’t a perfectly straight line through the attic, and Brian and I definitely didn’t pull the cable taut.

Let’s just say there might be 70 feet of Cat 5e between the port on the wall in my office and the patch panel.

The tube that collects all the cables leading to the 48-port patch panel in my network cupboard

The cable from the patch panel to the 10-gigabit SFP+ module is an extremely short color-coded patch cable that I crimped myself. It is probably Cat 5e.

The 10-foot cable connecting my office’s switch to the wall has some historical significance for me! It is a yellow Cat 5 cable that almost definitely somehow made the journey here from the days when I worked for Commonwealth Telephone in Pennsylvania. Almost all our patch cables there were yellow, and this cable is from the days before the Cat 5e standard even existed.

- Flat-pack 10-foot Category 6 Ethernet cables at Amazon

- Flat-pack 5-foot Cat 6 Ethernet cable multicolor assortment at Amazon
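For a sense of scale, here is a quick sketch that adds up the whole run and compares it with the rated lengths. The 70-foot in-wall estimate and the 10-foot yellow patch cable come from above. The 1-foot cupboard patch cable is a made-up stand-in for "extremely short," and the 55-meter Cat 6 limit for 10GBASE-T is the commonly cited figure from the standard, not something I measured.

```python
# Rough length of the office-to-cupboard run versus rated cable lengths.
IN_WALL_FT = 70        # estimate: Cat 5e from the office jack to the patch panel
OFFICE_PATCH_FT = 10   # the old yellow Cat 5 patch cable
CUPBOARD_PATCH_FT = 1  # hypothetical figure for the short hand-crimped patch cable

# Rated maximum lengths in meters. 10GBASE-T has no rated length over
# Cat 5e at all, which is why that combination isn't listed.
RATED_LENGTH_M = {
    ("2.5GBASE-T", "Cat 5e"): 100,
    ("5GBASE-T", "Cat 5e"): 100,
    ("10GBASE-T", "Cat 6"): 55,
    ("10GBASE-T", "Cat 6A"): 100,
}


def feet_to_meters(feet: float) -> float:
    return feet * 0.3048  # the international foot is exactly 0.3048 m


def total_run_m() -> float:
    return feet_to_meters(IN_WALL_FT + OFFICE_PATCH_FT + CUPBOARD_PATCH_FT)


run_m = total_run_m()
print(f"100 m is about {100 / 0.3048:.0f} ft")
print(f"Estimated run: {run_m:.1f} m")
for (phy, cable), limit_m in RATED_LENGTH_M.items():
    print(f"{phy} over {cable}: run is {run_m / limit_m:.0%} of the {limit_m} m rating")
```

That comes out to roughly 25 meters, or about a quarter of the 100-meter rating.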

What if I couldn’t get 10 gigabit out of these cables?

What would I personally have done? Me?! I would have swapped out that old Cat 5 cable from the nineties between the 4-port switch and the wall, and if I was still stuck at 5 gigabits per second, I would have stopped there. That is more speed than I actually need, anyway!

What could you do if you REALLY want that faster connection? The easiest thing to do would be to try a different brand of SFP+ module. They are not all identical. Maybe you could borrow a module or two from friends to see if you have better results.

The only relevant photo I could find. This is a stack of old 3Com switches on what was at one time my desk! Every one of these switches would have had 23 yellow patch cables plugged in.

I honestly expect that most people will be lucky enough to have 10-gigabit Ethernet work across old Cat 5 or Cat 5e wiring in their homes. I definitely don’t live in the biggest house around, but I also don’t live in a small house, and it is only a single story. That makes it a pretty wide house. Your longest cable run is likely to be shorter than mine if you are in a two-story house unless you’re approaching 5,000 square feet.

Maybe. I don’t know. I don’t live in your house!

I don’t think my MokerLink 8-port switch supports LACP, but I was able to bond four ports!

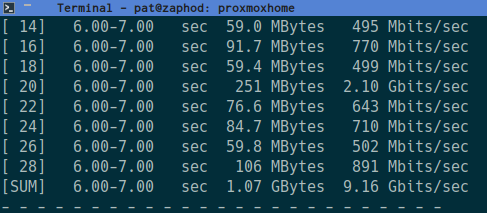

There are four 2.5-gigabit Ethernet ports on my router-style Intel Celeron N100 mini PC. I don’t really need to bond these ports together because a single 2.5-gigabit Ethernet port is about as fast as the 14-TB USB hard disk connected to my NAS virtual machine. Even so, trunking these ports made it easier to properly verify that I actually have 10 gigabits of bandwidth between my office and my network cupboard.
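Disks are usually rated in megabytes per second while network links are rated in gigabits per second, so here is the conversion behind that comparison. It is a minimal sketch of raw line rate only; Ethernet, IP, and TCP overhead aren't modeled, and it doesn't assume any particular speed for the USB disk.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Raw line rate in megabytes per second (1 MB = 10**6 bytes).

    Protocol overhead is ignored, so real file transfers land
    somewhat below these numbers.
    """
    return gbps * 1000 / 8


for label, gbps in [("single 2.5-gigabit port", 2.5),
                    ("four bonded 2.5-gigabit ports", 4 * 2.5)]:
    print(f"{label}: {gbps:g} Gb/s = {gbps_to_mb_per_s(gbps):.1f} MB/s")
```

That works out to 312.5 MB/s for one port and 1,250 MB/s for all four.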

The documentation for MokerLink’s 5-port managed switch has slightly different screenshots than what shows up in my 8-port switch’s trunk configuration. The 5-port switch lets you choose whether a trunk group is set to static or LACP. I can put any four ports in a single trunk group, but I don’t have a choice as to what sort of group it is, and the link doesn’t work when I set Proxmox to use LACP.

The trunk works great with Proxmox’s default setting of balance-rr for the bond0 device, and I am able to push data at 9.36 gigabits per second using iperf3 as long as I use at least four parallel connections. Sometimes I have bad luck, and two or more of those parallel connections get stuck on the same 2.5-gigabit interface, but bumping it up to six or eight parallel connections almost always breaks 9 gigabits per second.

That is part of the bummer about channel bonding. A single TCP connection will max out at the speed of just one network interface. You need multiple connections to fully utilize all the ports in the group, and they don’t always wind up attaching to the ideal interfaces.
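Here is a rough way to see why the number of parallel streams matters. This sketch assumes the switch’s static trunk pins each TCP flow to one member port, and the flow-to-port assignments are made up for illustration rather than read from the MokerLink. Under that assumption, the best case is one port’s speed times the number of distinct ports actually carrying a flow.

```python
from typing import Sequence

LINK_GBPS = 2.5  # speed of each member port in the four-port trunk


def bond_ceiling_gbps(flow_to_link: Sequence[int], link_gbps: float = LINK_GBPS) -> float:
    """Best-case aggregate throughput when every flow is pinned to one link.

    flow_to_link[i] is the (hypothetical) index of the member port that
    flow i landed on. A port tops out at link_gbps however many flows
    share it, so only the number of distinct ports in use matters.
    """
    return link_gbps * len(set(flow_to_link))


# Made-up flow-to-port assignments for illustration.
scenarios = {
    "one stream": [0],
    "four streams, lucky": [0, 1, 2, 3],
    "four streams, two collide": [0, 0, 2, 3],
    "eight streams": [0, 1, 2, 3, 0, 1, 3, 3],
}
for name, links in scenarios.items():
    print(f"{name}: up to {bond_ceiling_gbps(links):g} Gb/s")
```

These are ceilings before TCP/IP and Ethernet framing overhead, so even a perfect spread lands a little under 10 gigabits per second in iperf3. More streams don’t raise the ceiling; they just make it more likely that every port gets at least one.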

This isn’t a problem in the business environments where bonded network interfaces usually show up, because you probably have hundreds or thousands of clients sharing those two, three, or four network ports. When I want more speed at home, it is always between two clients.

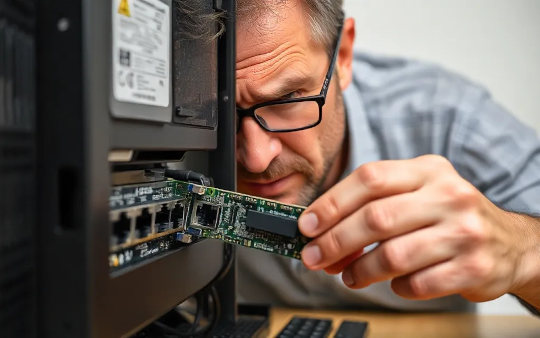

Troubles with my Intel X540-T2 PCIe card from Aliexpress

I could easily burn through 2,500 words explaining the problems that I have had with this 10-gigabit Ethernet card in great detail. I will probably dedicate an entire blog post to this card when I am confident that everything is going smoothly, but I will try to hit the important bits here.

I did not carefully choose this card. I had the 3-pack of Xicom SFP+ modules in my cart when I searched for 10-gigabit PCIe cards. I saw a dual-port card with an Intel chipset for $16 and almost immediately added it to my cart.

When I installed the card, my PC wouldn’t power on. I pulled the card, plugged it back in, and it booted just fine.

Then I noticed that I couldn’t get more than 6 gigabits per second out of the card, and dmesg said that the card was using two PCIe lanes. I wanted to reboot to check the BIOS, but the machine shut down instead and wouldn’t power back up. Many attempts at reinstalling the PCIe card failed to improve the situation.

I set the BIOS to x4 instead of auto for that slot, and I dug out an old computer for testing. The Intel card from Aliexpress worked just fine over and over again in that machine, so I gave it another shot in my PC. This time it worked on the first try, it is using four PCIe lanes, and I can reach over 9 gigabits per second.
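The lane count matters more than it might seem. This is a minimal sketch that assumes the card links at PCIe 2.x speed (5 GT/s per lane with 8b/10b encoding), which is what I’d expect from a chip of the X540’s vintage. It computes per-direction bandwidth after line encoding but before PCIe packet overhead.

```python
# Assumption: the card trains at PCIe 2.x speed.
PCIE2_GT_PER_S = 5.0               # transfers per second per lane, in billions
PCIE2_ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 data bits per 10 line bits


def pcie2_bandwidth_gbps(lanes: int) -> float:
    """Per-direction PCIe 2.x bandwidth after line encoding.

    Packet overhead (TLP headers, DLLPs) is not included, so usable
    throughput is lower still.
    """
    return lanes * PCIE2_GT_PER_S * PCIE2_ENCODING_EFFICIENCY


for lanes in (2, 4, 8):
    print(f"x{lanes}: {pcie2_bandwidth_gbps(lanes):g} Gb/s")
```

Two lanes give 8 Gb/s before packet overhead, so topping out around 6 gigabits per second on an x2 link isn’t surprising, while four lanes leave headroom for a single 10-gigabit port.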

Will it work when I reboot next week? I did get the Intel X540-T2 card working just fine, but I am somewhat less than 50% certain what my problem actually was!

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?

- Generic Intel X540T2 PCIe 10-gigabit network cards at Aliexpress

- Generic Intel X540T2 PCIe 10-gigabit network cards at Amazon

Should I have bought a different 10-gigabit PCIe card?

For my situation, I don’t think it makes much sense to spend more than $20 on a NIC for my workstation. There are no other 10-gigabit Ethernet hosts on my network. I can max out the 2.5-gigabit Ethernet ports on three devices and still hit 900 megabits per second in both directions at speedtest.net. That’s pretty neat, but not terribly useful.

This older Intel NIC is pretty neat at $16. That’s about what I would have to pay for a 2.5-gigabit Ethernet PCIe card anyway, so it felt like a no-brainer to give it a try. The Intel X540 is old enough that it predates the 2.5-gigabit and 5-gigabit Ethernet standards, so this card will only work at 1-gigabit or 10-gigabit and not anything in between.

I still have the 8-port switch dangling in the network cupboard. I need to set aside some time to unscrew one of the old 1-gigabit switches and get that thing mounted correctly!

The next step up would be more legitimate-looking Intel X540 cards on Amazon for $60. After trying this one, I am not excited about paying so much for another 17-watt NIC. Prices only go up from there.

Conclusion

I think it is definitely time to stop buying 1-gigabit Ethernet hardware. You can get 2.5-gigabit switches for quite a bit less than $10 per port now. So much hardware ships with built-in 2.5-gigabit network adapters these days, and you can sneakily upgrade some of your older machines with $7 2.5-gigabit USB dongles.

The 10-gigabit Ethernet stuff is fun, but it is both more AND less persnickety than I anticipated. I expected to have trouble with the inadequate wiring in the attic. I absolutely did not expect to have weird issues with an Intel network card, even if that network card might be made from discarded or unused enterprise network gear from a decade ago.

The 10-gigabit link between switches on opposite sides of my house is fantastic. It has turned this pair of switches into a single switch for all practical purposes, so it doesn’t matter which room my mini PC servers call home. They will always have a full 2.5-gigabit connection directly to any other server no matter how many of them are moving data at the same time.
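The arithmetic behind that is short. This sketch only counts the office switch’s four 2.5-gigabit ports sending across the uplink at once; it ignores anything plugged into the office switch’s other SFP+ port. A ratio of 1.0 or lower means every one of those ports can run at full speed across the link simultaneously.

```python
def oversubscription(port_count: int, port_gbps: float, uplink_gbps: float) -> float:
    """Worst-case offered load divided by uplink capacity.

    1.0 or lower: every port can cross the uplink at full speed at once.
    2.0: the uplink is shared 2:1 when all ports are busy.
    """
    return port_count * port_gbps / uplink_gbps


# The office switch has four 2.5-gigabit RJ-45 ports.
print(oversubscription(4, 2.5, 10))  # 10-gigabit SFP+ link
print(oversubscription(4, 2.5, 5))   # the 5-gigabit module from the update
```

Four 2.5-gigabit ports add up to exactly 10 gigabits, which is why the 10-gigabit link never becomes the bottleneck for them.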

I would very much love to hear from you in the comments! Are you running 10-gigabit Ethernet at home across older wiring? How old is that wiring, and how long do you think the runs are? What make and model of SFP+ module do you have on each end? It would be awesome if we could figure out which gear is working for people, and also what sorts of wiring they have managed to get a good connection on. If you are interested in chatting about DIY NAS and homelab shenanigans, then you should consider joining our Discord community. We are always chatting about servers, network gear, and all sorts of other geeky topics!

- I Bought The Cheapest 2.5-Gigabit USB Ethernet Adapters And They Are Awesome!

- Degrading My 10-Gigabit Ethernet Link To 5-Gigabit On Purpose!

- Upgrading My Home Network With MokerLink 2.5-Gigabit Switches

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?

- Mighty Mini PCs Are Awesome For Your Homelab And Around The House

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Xicom 10-gigabit SFP+ copper modules at Aliexpress

- MokerLink 4-port unmanaged 2.5-gigabit Ethernet switch at Amazon

- MokerLink 8-port managed 2.5-gigabit Ethernet switch at Amazon