I bought a couple of 2.5-gigabit Ethernet switches with 10-gigabit SFP+ ports, and if I wanted to use the 10-gigabit connection between my home office and the other side of the house, I needed a 10-gigabit Ethernet NIC for my PC. I did absolutely zero research. I saw a 2-port 10GbE PCIe card on Aliexpress for $17, so I added it to my cart alongside the three Xicom 10-gigabit SFP+ copper modules that I was already ordering.

The Intel X540 card has been working well, but I have had two problems. I am going to tell you about them so that you can make an informed decision. The only thing I learned while searching the Internet to see whether my problems were common is that the general vibe says these cards can be problematic. Not in any specific way. They just aren’t great cards.

I think it is important for me to say that I am currently having zero problems with my 10-gigabit Intel X540-T2 Ethernet card. It was a good gamble at $17, and I will buy another if I need more 10-gigabit cards in the near future.

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- Upgrading My Home Network With MokerLink 2.5-Gigabit Switches

Why are these network cards so cheap?

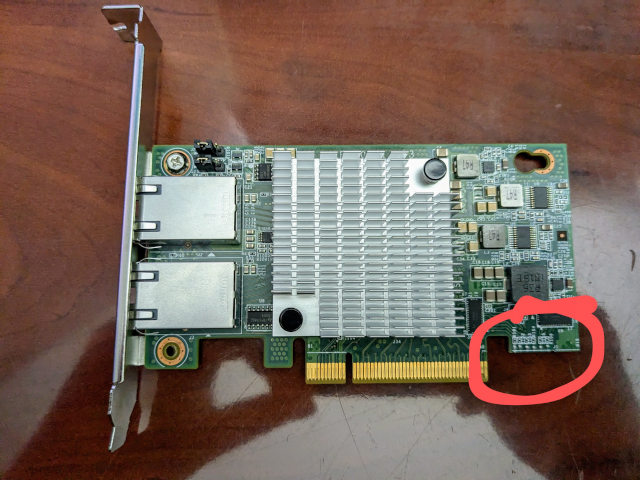

The cards on Aliexpress were originally intended for something not quite compatible with the PCIe standard. There is an extra connector in the back that has been cut off. You wouldn’t be able to put this card in a normal PCIe slot if that connector were still intact. I don’t know what sort of proprietary machine these were originally meant for.

The part circled in red shows where the PCB was cut at the factory after manufacturing. An image search turns up nearly identical cards that still have another edge connector at this location.

People on the Internet would like you to believe that these cards are assembled from salvaged components from used servers. I don’t think that is likely, but I wouldn’t be surprised if they’re assembled from Intel X540 chips that didn’t make the cut after testing by one of the reputable manufacturers.

The Intel X540 also happens to be a rather outdated 10-gigabit Ethernet chipset. It doesn’t support 2.5-gigabit or 5-gigabit Ethernet, and it uses quite a bit more power than something newer. My cable only had to run 10 feet to reach the switch, so I was confident that I would get a stable connection at full speed. You might want to find something that supports 5-gigabit Ethernet if your cabling situation is sketchier than mine.
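
Twisted-pair Ethernet ports auto-negotiate to the fastest speed both ends advertise, which is why the missing 2.5- and 5-gigabit modes matter on marginal cabling. A minimal sketch of that rule, assuming the speed sets below (the X540’s 100-megabit, gigabit, and 10-gigabit modes, plus illustrative switch ports). It is a simplification of what real PHYs do, and the `cable_limit` parameter is a hypothetical stand-in for a sketchy cable run:

```python
from __future__ import annotations

# Minimal sketch of Ethernet speed auto-negotiation: each end advertises the
# speeds it supports, and the link comes up at the fastest speed both share.
# Speeds are in megabits per second. These sets are illustrative assumptions,
# not values read from real hardware.
X540_SPEEDS = {100, 1000, 10000}                     # no 2.5G or 5G modes
RTL8126_SPEEDS = {10, 100, 1000, 2500, 5000}         # Realtek 5-gigabit card
SWITCH_2_5G_PORT = {10, 100, 1000, 2500}             # a 2.5-gigabit switch port
MULTI_GIG_10G_PORT = {100, 1000, 2500, 5000, 10000}  # hypothetical multi-gig 10G port


def negotiated_speed(local: set[int], remote: set[int]) -> int | None:
    """Fastest speed both ends advertise, or None if they share nothing."""
    common = local & remote
    return max(common) if common else None


def fallback_speed(local: set[int], remote: set[int], cable_limit: int) -> int | None:
    """Fastest shared speed that is also at or below what the cable can carry.

    `cable_limit` is a hypothetical stand-in for the highest speed a
    marginal cable run can hold reliably.
    """
    usable = {speed for speed in local & remote if speed <= cable_limit}
    return max(usable) if usable else None


if __name__ == "__main__":
    print(negotiated_speed(X540_SPEEDS, SWITCH_2_5G_PORT))     # 1000
    print(negotiated_speed(RTL8126_SPEEDS, SWITCH_2_5G_PORT))  # 2500
    # On a cable that can't hold 10 gigabits, the best the X540 can fall back
    # to is 1 gigabit, while a 5-gigabit card can still settle at 5 gigabits.
    print(fallback_speed(X540_SPEEDS, MULTI_GIG_10G_PORT, 5000))     # 1000
    print(fallback_speed(RTL8126_SPEEDS, MULTI_GIG_10G_PORT, 5000))  # 5000
```

Whether a card drops to a lower speed automatically when a 10-gigabit link won’t train depends on the hardware, but the X540 has no intermediate speed to land on.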

- Generic Intel X540T2 PCIe 10-gigabit network cards at Aliexpress

- Generic Intel X540T2 PCIe 10-gigabit network cards at Amazon

My unique and serious problem

I couldn’t find anyone else with this problem, and I am not even quite 50% certain what actually solved my problem. I don’t expect that you will encounter this problem, but I feel like I need to tell you about it.

When I first installed the X540-T2 in my workstation, I couldn’t get it to boot. My fans and lights would turn on for a second, then everything would shut off. I reseated the card, and everything was happy. At least, I thought everything was happy.

When I rebooted my computer a few days later to check a BIOS setting, it shut off and wouldn’t turn back on again. Reseating the card didn’t help this time, so I scrounged up an old computer and tried the Intel X540 card in there. It worked just fine. It worked over, and over, and over again.

The most likely cause of this symptom is inadequate power delivery. My 850-watt power supply’s fan was making a gentle ticking sound last month, so I decided to replace that fan with a quieter one than it came with. I have been running an old 500-watt power supply in the meantime, and since it has been working just fine with my CPU and GPU maxed out, I haven’t been in a hurry to swap the repaired PSU back in.

So I did the work to swap the correct power supply back into my case. While doing this, I noticed that the metal bracket on the Intel card wasn’t lining up well with the back of the case. I thought it might be pushing the x8 PCIe card slightly out of alignment in the x16 slot, so I gave the bracket a slight bend, and it fit much better.

A misalignment would explain why the computer wouldn’t boot, but it wouldn’t explain why the computer ran fine for days and then failed on a reboot. You would expect a misaligned card to run into a problem during those days of uptime.

I checked the 12- and 5-volt lines on a drive power cable of the old power supply with a multimeter, and they weren’t dipping at all when powering up the computer, so I am not terribly confident that swapping the power supply was the fix. Those were the only two rails I could easily test.
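
The ATX12V power supply design guide allows the +12 V and +5 V rails to stray ±5% from nominal, so a quick sanity check on multimeter readings is whether they stay inside that window. A minimal sketch, with hypothetical readings rather than real measurements. Keep in mind that a handheld multimeter updates only a few times per second, so it can miss a very brief dip at power-on:

```python
from __future__ import annotations

# Allowed tolerance for each ATX rail checked here, keyed by nominal voltage.
# The ATX12V design guide specifies +/-5% for both the +12 V and +5 V rails.
RAIL_TOLERANCE = {
    12.0: 0.05,
    5.0: 0.05,
}


def rail_window(nominal: float) -> tuple[float, float]:
    """Return the (minimum, maximum) voltage allowed for a rail."""
    tol = RAIL_TOLERANCE[nominal]
    return nominal * (1 - tol), nominal * (1 + tol)


def rail_ok(nominal: float, measured: float) -> bool:
    """True if a measured voltage falls inside the rail's allowed window."""
    low, high = rail_window(nominal)
    return low <= measured <= high


if __name__ == "__main__":
    # Hypothetical multimeter readings taken while powering on.
    readings = {12.0: [12.08, 12.02, 11.97], 5.0: [5.04, 5.01, 4.98]}
    for nominal, values in readings.items():
        low, high = rail_window(nominal)
        status = "OK" if all(rail_ok(nominal, v) for v in values) else "OUT OF SPEC"
        print(f"{nominal:>4} V rail: lowest {min(values):.2f} V, "
              f"allowed {low:.2f}-{high:.2f} V -> {status}")
```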

One of these two things definitely helped, because I have been rebooting and power cycling my computer over and over again without issue. I am sure someone with a keen eye will notice the missing screw on the PCIe bracket in one of the photos on this blog post. I can only assume that I took that photo while swapping out the half-height bracket that was installed at the factory. There have been two screws installed every time I have had the card installed!

These Intel X540 cards tend to overheat

At least, I think an overheating issue is what I have run into. Heat is the number one complaint from owners of these cards. I don’t care how hot my silicon runs, but it definitely needs to be running within the design specifications.

I noticed the other day that some of my pings in Glances were failing. I pulled up speedtest.net, and the results weren’t consistent. Reddit wasn’t loading full pages of content, and my iperf3 tests to one of my mini PCs were bouncing between 0, 500, and 2,200 megabits per second.
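
When throughput bounces around like this, iperf3’s per-second intervals tell the story better than the final average. A minimal sketch that reads the JSON report iperf3 writes with its `-J` flag and flags the seconds that fall below a threshold. The sample report is hand-written to mimic the 0/500/2,200 Mbit/s pattern (real reports carry many more fields), and the 2 Gbit/s threshold is an arbitrary assumption:

```python
from __future__ import annotations

import json

# A trimmed, hand-written stand-in for `iperf3 -c <host> -J` output.
# Only intervals[].sum is used here.
SAMPLE_REPORT = json.dumps({
    "intervals": [
        {"sum": {"start": 0.0, "end": 1.0, "bits_per_second": 2.2e9}},
        {"sum": {"start": 1.0, "end": 2.0, "bits_per_second": 0.0}},
        {"sum": {"start": 2.0, "end": 3.0, "bits_per_second": 5.0e8}},
        {"sum": {"start": 3.0, "end": 4.0, "bits_per_second": 2.2e9}},
    ]
})


def slow_intervals(report_json: str, threshold_bps: float) -> list[tuple[float, float, float]]:
    """Return (start, end, Mbit/s) for every interval below the threshold."""
    report = json.loads(report_json)
    flagged = []
    for interval in report["intervals"]:
        summary = interval["sum"]
        if summary["bits_per_second"] < threshold_bps:
            flagged.append((summary["start"], summary["end"],
                            summary["bits_per_second"] / 1e6))
    return flagged


if __name__ == "__main__":
    # Hypothetical threshold: anything under 2 Gbit/s counts as a bad second.
    for start, end, mbps in slow_intervals(SAMPLE_REPORT, 2.0e9):
        print(f"{start:4.1f}-{end:4.1f} s: {mbps:,.0f} Mbit/s")
```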

I temporarily fixed the problem in an odd way. I fired up enough openssl speed benchmarks to max out all my CPU cores to get the chassis fans choochin’. It didn’t take long before all my network problems just straightened themselves out.

I have yet to properly address this issue. It hasn’t happened again, but I plan to bump up my minimum chassis fan speeds next time I am in the BIOS. My fan speeds are set really low to keep the noise down in here when I am recording podcasts, but I don’t think an extra 5% to 15% will make an audible difference.

The fans are usually set to spin at the minimum possible RPM. This is enough to keep my Ryzen 5700X and my Radeon RX 6700 XT at or below 45°C when idle. That just doesn’t seem to move enough air past that poor Intel NIC.

The $60 Intel X540 cards on Amazon seem to ship with much larger heat sinks, and some cards ship with a tiny fan. I would expect the large heat sink to be a nice upgrade, but I definitely wouldn’t install a card with one of those tiny fans. I am trying to keep my office quiet, and I would expect to hear a high RPM fan like that.

- My New Radeon 6700 XT — Two Months Later

- Putting a Ryzen 5700X in My B350-Plus Motherboard Was a Good Idea!

- My Accidental Quest to Make My Gaming Computer Quieter

My ACTUAL solution to my overheating Intel X540T2

My 10-gigabit LAN connection started getting weird again. The small set of hosts that I track in Glances was showing timeouts again, so I ran an iperf3 test. It came back with the same weird results, bouncing between zero, a few hundred megabits, and several gigabits per second.

I know that I said in the previous section that I planned to bump up my minimum case fan speeds to help with this problem, but I wasn’t certain that I ACTUALLY went into the BIOS to make that change. I was about to boot into the BIOS to make those tweaks, but I changed my mind. I decided to pull the Intel NIC, pop off the heat sink, and see how things were doing in there.

The heat sink was getting really hot. That should have been a good sign, because it meant that heat was leaving the chip on the NIC and transferring into the heat sink. Even so, whatever thermal interface material they used was dry and cracking, and there wasn’t actually any thermal compound directly between the chip and the heat sink.

I slathered on way too much of my old but preferred thermal compound, which is a tube of dielectric grease from Autozone that I have been using since 2008. I will probably be using this stuff for the rest of my life unless I manage to lose this tube. I didn’t know how hard the springs press the heat sink to the chip, so I figured I should err on the side of too much thermal grease. That way there wouldn’t be any air gaps.

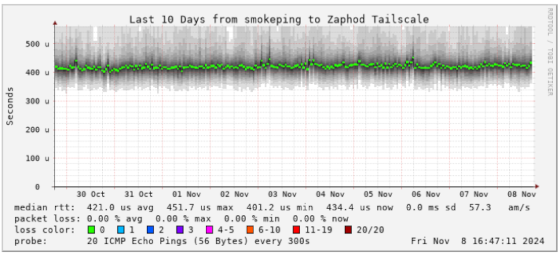

I honestly didn’t expect this to work, because the heat sink was already pulling what felt like a ton of heat out of the NIC. I have been monitoring the connection with Smokeping for more than ten days now, and I haven’t seen a single missed packet. I guess the upgrade to some nice, soft thermal compound was just enough to make the difference!
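
Ten days of clean Smokeping graphs covers a lot of probes. A rough count, assuming Smokeping’s stock `step = 300` and `pings = 20` settings (your own config may differ):

```python
# Rough count of how many probes one Smokeping target receives over a period.
# Assumes the stock settings of `step = 300` seconds and `pings = 20` per step;
# a different config changes the totals.
STEP_SECONDS = 300
PINGS_PER_STEP = 20


def probes_sent(days: float, step: int = STEP_SECONDS, pings: int = PINGS_PER_STEP) -> int:
    """Number of individual pings sent to one target over `days` days."""
    rounds = int(days * 86_400 // step)
    return rounds * pings


def loss_percent(lost: int, sent: int) -> float:
    """Packet loss as a percentage of pings sent."""
    return 100.0 * lost / sent if sent else 0.0


if __name__ == "__main__":
    sent = probes_sent(10)
    print(f"{sent:,} pings over ten days")  # 57,600 pings over ten days
    # Within a single 20-ping round, one lost ping is already 5% loss.
    print(f"{loss_percent(1, PINGS_PER_STEP):.0f}% loss for that round")  # 5% loss for that round
```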

I do wish that I had been smart enough to add my workstation to my Smokeping server BEFORE I repasted the heat sink.

Alternatives to the Intel X540

There’s no shortage of used Mellanox 10-gigabit Ethernet cards on Amazon between $25 and $50, but they all have SFP+ ports, so you will need to add a $20 transceiver to plug in your Cat 6 cables. I could write an entire blog post about retired enterprise 10-gigabit network equipment, but that is a bit out of scope here. This time I am trying to make use of the existing cables in my house instead of running short 40-gigabit connections between nearby computers.

Old Mellanox cards are probably the best option if you need 10-gigabit Ethernet and don’t want to use the inexpensive Intel X540 cards from Aliexpress.

| Ethernet | megabytes/s | Rough Equivalent |
|---|---|---|
| 100 megabit | 12.5 | Slow |
| gigabit | 100 | 500 GB laptop hard disk |
| 2.5-gigabit | 250 | 3.5” hard disk or older SATA SSD |
| 5-gigabit | 500 | the fastest SATA SSDs |
| 10-gigabit | 1,000 | the slowest NVMe drives |
| 40-gigabit | 4,000 | mid-range NVMe drives |
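
The megabytes-per-second column mixes two conversions: the 100-megabit row is the exact line rate divided by 8 bits per byte, while the faster rows appear to divide by 10, a common rule of thumb that leaves room for protocol overhead. A minimal sketch of both, using only the link speeds from the table:

```python
# Convert Ethernet line rates to megabytes per second two ways:
#   raw:   line rate / 8 bits per byte (the theoretical ceiling)
#   rough: line rate / 10, a common rule of thumb that leaves room for overhead
LINK_SPEEDS_MBIT = {
    "100 megabit": 100,
    "gigabit": 1_000,
    "2.5-gigabit": 2_500,
    "5-gigabit": 5_000,
    "10-gigabit": 10_000,
    "40-gigabit": 40_000,
}


def raw_mbytes(mbit: float) -> float:
    """Theoretical megabytes per second for a line rate in megabits."""
    return mbit / 8


def rough_mbytes(mbit: float) -> float:
    """Rule-of-thumb megabytes per second after protocol overhead."""
    return mbit / 10


if __name__ == "__main__":
    for name, mbit in LINK_SPEEDS_MBIT.items():
        print(f"{name:>12}: {raw_mbytes(mbit):>7,.1f} MB/s raw, "
              f"~{rough_mbytes(mbit):>6,.0f} MB/s rule of thumb")
```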

There are plenty of 5-gigabit PCIe Ethernet adapters on Aliexpress that use the Realtek RTL8126 chipset for about the same price as the older Intel X540 cards. The only disappointing thing about these cards seems to be the speed.

The Intel cards consume almost 20 watts of power, while the Realtek 5-gigabit cards claim to use less than 2 watts. That doesn’t seem like too big a deal when you only have one card, but I have mini PC servers in my homelab that use less than 10 watts.
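
On a machine that runs around the clock, that 18-watt difference adds up, and the Intel card alone draws roughly twice what one of those mini PCs does. A back-of-the-envelope sketch using the rough wattages above; the electricity price is a hypothetical placeholder:

```python
# Yearly energy difference between a ~20 W Intel X540 card and a ~2 W Realtek
# 5-gigabit card running 24/7. The wattages are the rough figures from this
# post; the electricity price below is a hypothetical placeholder.
HOURS_PER_YEAR = 24 * 365


def yearly_kwh(watts: float) -> float:
    """Kilowatt-hours used per year by a constant load."""
    return watts * HOURS_PER_YEAR / 1000


def yearly_cost(watts: float, price_per_kwh: float) -> float:
    """Cost per year of a constant load at a flat electricity rate."""
    return yearly_kwh(watts) * price_per_kwh


if __name__ == "__main__":
    intel_w, realtek_w = 20.0, 2.0
    price = 0.15  # hypothetical $/kWh; substitute your own utility rate
    extra_kwh = yearly_kwh(intel_w) - yearly_kwh(realtek_w)
    extra_cost = yearly_cost(intel_w, price) - yearly_cost(realtek_w, price)
    print(f"Extra energy: {extra_kwh:.1f} kWh/year")  # Extra energy: 157.7 kWh/year
    print(f"Extra cost:   ${extra_cost:.2f}/year at ${price}/kWh")
```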

Since the Realtek cards fit in a smaller x1 PCIe slot, they are easier to fit into your build than the bigger x8 Intel X540 cards.

I have a working 10-gigabit Ethernet card, and I am not going to put in work for a downgrade, but I think the right choice for me would have been buying a 5-gigabit Realtek card instead. Those cards just sip power, and I don’t have a use for the extra 5 gigabits today outside of running iperf3 tests to make sure the 10-gigabit link between the switch in my office and the switch in my network cupboard is actually running at 10 gigabits per second!

- 5-gigabit Ethernet PCIe card at Aliexpress

The problem with 40-gigabit and 56-gigabit network cards

The 40-gigabit Infiniband cards I was using a few years ago were fantastic. Those Mellanox cards could run either 10-gigabit Ethernet firmware or 40-gigabit Infiniband firmware, so they’re the same family of 10-gigabit Ethernet cards I recommended in the previous section, except that you can get 40 gigabits per second out of them when running Infiniband.

That sounds awesome, except it is extremely challenging to find enough fast PCIe lanes to max out even one of the two 40-gigabit ports on those cards. You’re going to be buying older enterprise hardware, so the maximum supported PCIe version of those cards will be a generation or two out of date. If you are anything like me, the servers in your homelab are often made up of old computers that you used to run at your desk.

Those older computers might have even older PCIe slots, and the newest machines on your Infiniband network might have a GPU in the only properly fast PCIe slot on the motherboard. The best I ever managed in my setup was 16 gigabits per second of PCIe bandwidth. That worked out to 12.8 gigabits per second via iperf3 on my IP-over-Infiniband link.
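
Slot bandwidth is easy to estimate from the PCIe generation and lane count. A minimal sketch using the standard per-lane transfer rates and line encodings for PCIe 1.x through 4.0; the slot configurations it prints are examples, not a claim about which slots were in these machines. The 16 gigabits per second above lines up with a PCIe 2.0 x4 or PCIe 1.x x8 link, and 12.8 is 80% of that, which is plausible once packet overhead is counted:

```python
# Usable PCIe bandwidth per direction after line encoding, before packet
# (TLP/DLLP) overhead, which trims real-world throughput further.
# Gen 1/2 use 8b/10b encoding; Gen 3/4 use 128b/130b.
PCIE_GENS = {
    1: (2.5, 8 / 10),      # (gigatransfers per second per lane, encoding efficiency)
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def pcie_gbps(gen: int, lanes: int) -> float:
    """Gigabits per second a PCIe link can carry in one direction."""
    gt_per_s, efficiency = PCIE_GENS[gen]
    return gt_per_s * efficiency * lanes


def can_feed(gen: int, lanes: int, port_gbps: float) -> bool:
    """True if the slot has at least as much bandwidth as the network port."""
    return pcie_gbps(gen, lanes) >= port_gbps


if __name__ == "__main__":
    for gen, lanes in [(1, 8), (2, 4), (2, 8), (3, 4), (3, 8)]:
        bw = pcie_gbps(gen, lanes)
        verdict = "enough" if can_feed(gen, lanes, 40) else "not enough"
        print(f"PCIe {gen}.0 x{lanes}: {bw:5.1f} Gbit/s, {verdict} for one 40-gigabit port")
```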

That 12.8 gigabits per second is only about 30% faster than 10-gigabit Ethernet, and I had no easy way to extend that connection from one end of the house to the other. By contrast, I can run plain old 10-gigabit Ethernet over the old Cat 5e cable in my attic.

My first 20-gigabit Infiniband setup was a fantastic deal in 2016. I was getting 8 gigabits per second over iperf, and my entire setup to connect two computers cost less than a single 10-gigabit Ethernet card.

10-gigabit Ethernet is priced much better in 2024.

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network

- Infiniband: An Inexpensive Performance Boost For Your Home Network (2016)

Conclusion

In the end, the Intel X540-T2 is tempting at such a low price, but it is a bit of a gamble. I suspect that you will most likely get lucky and have a perfectly stable card, but there is a chance that you will spend days wrestling with issues. While I personally wouldn’t hesitate to grab another X540 for myself, I think you should consider something like the $17 Realtek 5-gigabit cards unless you absolutely require 10-gigabit speeds. Just make sure the other end of your connection actually supports 2.5- and 5-gigabit Ethernet!

This journey into the world of 10-gigabit Ethernet has been a reminder that even seemingly simple hardware choices can come with unexpected challenges. It highlights the importance of thorough research and of understanding the nuances of different technologies, but also of not being afraid to experiment. After all, what’s the fun in building a homelab without a few learning curves along the way?

If you’ve had similar experiences with networking hardware, or if you have any insights to share about overcoming these challenges, please leave a comment below! I’d love to hear your thoughts and experiences. And for even more in-depth discussions about homelab gear and tech troubleshooting, join the Butter, What?! Discord community.

- I Bought The Cheapest 2.5-Gigabit USB Ethernet Adapters And They Are Awesome!
