I’m not new to the world of Infiniband. I bought a pair of 20-Gigabit Mellanox Infiniband cards in 2016 to connect my desktop PC to my virtual machine host. It has been chugging along just fine, though I’ve never gotten a full 20 gigabits per second out of this setup. We’ll talk more about that later.

My posts about my upgrade from gigabit Ethernet to Infiniband have always been solidly in my top ten posts according to my analytics, but that has only just barely been true lately. That got me thinking that my 20-gigabit setup is getting long in the tooth, and it might just be time for an upgrade!

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- Choosing an Intel N100 Server to Upgrade My Homelab

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Infiniband: An Inexpensive Performance Boost For Your Home Network (2016)

- Using the Buddy System For Off-Site Hosting and Storage

- I spent $420 building a 20TB DIY NAS to use as an off-site backup at Brian’s Blog

Do I really need to upgrade?

No. I do not need to upgrade. When files are cached in RAM on my NAS virtual machine, my 20-gigabit hardware can hit about 700 megabytes per second over NFS. That’s more than twice as fast as it can pull data off the hard disks or SSD cache. I’m most definitely not going to notice an upgrade to faster hardware.

That’s not the only reason to upgrade. You fine folks shouldn’t be buying 20-gigabit hardware any longer. The 40-gigabit gear is nicer, and it costs about what I paid for used 20-gigabit gear in 2016. I don’t like recommending things I’m not using myself, so an upgrade was definitely in my future.

My server side's Infiniband card has 8x PCIe 2.0 lanes available. I don't have either an 8x slot or PCIe 3.0 4x slot available in my desktop. Either of those would just about double my speeds, but I'm not willing to move my GPU out of its 16x PCIe 3.0 slot! pic.twitter.com/IqlsB5ztod

— Pat Regan (@patsheadcom) February 6, 2021

My old gear wasn’t limited by the Infiniband interface. It was limited by the card’s PCIe interface and the slots I have available in my two machines.

My server side has a 16x PCIe 2.0 slot available and my desktop has a 4x PCIe 2.0 slot. I knew I wouldn’t hit 40 gigabits per second with the new hardware, but as long as they negotiated to PCIe 2.0 instead of PCIe 1.1, my speeds would surely double!

- Infiniband: An Inexpensive Performance Boost For Your Home Network (2016)

- Can You Run A NAS In A Virtual Machine?

I had a lot of confusion about PCIe specifications!

When I wrote about my 20-gigabit Infiniband cards in 2016, I claimed that the 8 gigabits per second I was seeing was a limit of the PCIe bus. I was correct, but in rereading that post and looking at my hardware and the dmesg output on driver initialization, I was confused!

Mellanox claims my old 25408 cards are PCIe 2.0. When the driver initializes, it claims the cards are PCIe 2.0, but the driver also says they’re operating at 2.5 GT/s. That’s PCIe 1.1 speeds.

This isn’t relevant to the 40-gigabit or 56-gigabit hardware, but I think it is worth clearing up. All the cards in Mellanox’s 25000-series lineup follow the PCIe 2.0 spec, but half of the cards only support 2.5 GT/s speeds. The other half can operate at PCIe 2.0’s full speed of 5 GT/s.
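
Here is a rough sketch of the arithmetic behind that 8-gigabit ceiling. It assumes the 8b/10b line encoding used by PCIe 1.x and 2.0, where only 8 of every 10 bits on the wire are data. The function name and the lane counts are just my illustration, not anything the driver reports.

```python
def pcie_gbps(gt_per_sec: float, lanes: int) -> float:
    """Usable bandwidth in gigabits per second for a PCIe 1.x/2.0 link.

    Assumes 8b/10b encoding, so only 80% of the raw transfer rate is data.
    """
    return gt_per_sec * lanes * 8 / 10


# A card that only runs at 2.5 GT/s, sitting in a 4-lane slot
print(pcie_gbps(2.5, 4))  # 8.0
# The same 4-lane slot with a card that can run at 5 GT/s
print(pcie_gbps(5.0, 4))  # 16.0
```

The first number lines up with the 8 gigabits per second I was seeing on the old cards.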

Do you really need 10-, 40-, or 56-gigabit network cards?

We are starting to see a lot of 2.5 gigabit Ethernet gear becoming available at reasonable prices. This stuff will run just fine on the older Cat-5E cables running across your house, and 8-port switches are starting to go on sale for less than $20 per port.

Not only that, but inexpensive server motherboards are showing up with 2.5 gigabit Ethernet ports. The awesome little power-sipping [Topton N5105 NAS motherboard][ttp] that Brian Moses is selling in his eBay store has four 2.5 gigabit Ethernet ports.

Is 2.5 gigabit fast enough? It is faster than most SATA hard disks. It isn’t quite as fast as the fastest SATA SSDs, but 2.5 gigabit Ethernet is definitely playing the same sport.

It is a good bump in speed over gigabit Ethernet, and it is rather inexpensive.

- [I Am Excited About the Topton N5105 Mini-ITX NAS Motherboard!][ttb]

You might want to look at 56-gigabit Mellanox cards

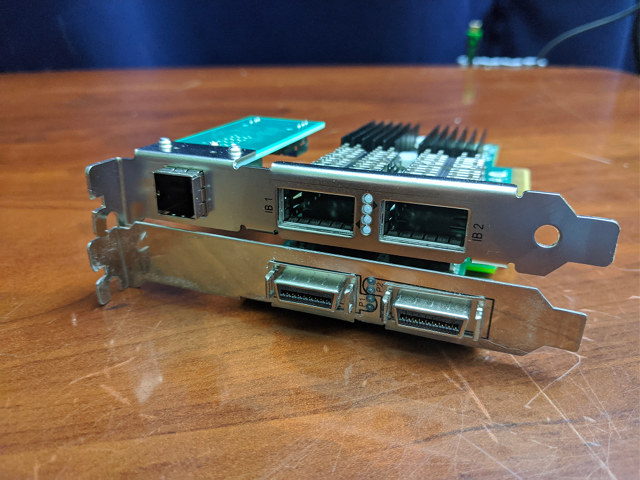

I looked at 56-gigabit Infiniband cards. I bought two, but I made a mistake. I accidentally ordered a pair of HP FlexibleLOM cards for only $25. FlexibleLOM is very close to being PCIe, but the pinout isn’t compatible and the form factor isn’t quite right. Actual PCIe 56-gigabit cards cost $80 on eBay.

I don’t know why I ordered FlexibleLOM cards. I think I was just super excited about 56-gigabit Infiniband cards for only $13 each. Don’t make my mistake.

No Infiniband upgrade for me today! I don't remember noticing that these aren't standard form factor PCIe cards when I ordered, but they sure are!

— Pat Regan (@patsheadcom) January 19, 2021

If this weren't for a blog post, I'd just make them work, but I'm going to reorder correct hardware. pic.twitter.com/xxJ1G1fzA9

NOTE: FlexibleLOM to PCIe adapters exist, and they might be a really good value, since you can get two FlexibleLOM 56-gigabit cards for $25 compared to $150 or more for a pair of PCIe cards. They didn’t seem easy to source, so I opted to go the easy route.

I wound up downgrading to 40-gigabit Mellanox ConnectX-3 PCIe cards. The 56-gigabit cards won’t run Infiniband any faster for me because my available PCIe slots are the real bottleneck here. If you’re running Infiniband, this will likely be true for you as well, and you can save yourself $80 or more.

If you want to run super fast Ethernet using these cards, it might be worth spending a few extra dollars. My 40-gigabit cards can only operate at 10 gigabits per second in Ethernet mode. The 56-gigabit Mellanox cards can operate as 40-gigabit Ethernet adapters.

Ethernet is easier to configure than Infiniband, especially if all you’re interested in is IP networking. I was hoping to test this out, because 40gbe would simplify my setup quite a bit. I opted to save the $80 and just continue routing to my virtual machines.

Did I mention that this is all used enterprise-grade hardware?

I’m not encouraging you to buy brand new Infiniband cards. You’ll pay at least twice as much for a single card as it would cost you to connect three machines with dual-port Infiniband cards from eBay.

The 20-gigabit Infiniband cards I bought in 2016 were already 10 years old when I started using them. The 40-gigabit cards I just installed are probably around 10 years old as well.

Can I run Infiniband across my house?

Not easily. I’m using a 1-meter QSFP+ cable to directly connect one Infiniband card to another. My desktop computer and KVM host both live in my office and they sit right next to each other. These QSFP+ cables can only be about 3 meters long.

Oh good. The Mellanox QSFP+ cable I ordered has a sticker that says FDR on it. That's an indication that I ordered the correct cable to match the pair of 56-gigabit FDR Infiniband cards that will be arriving Monday! pic.twitter.com/sMwCbcsY0k

— Pat Regan (@patsheadcom) January 16, 2021

If you need a longer run, you have to use fiber. I’m seeing some 50’ lengths of fiber with QSFP+ modules on each end for around $70. There are QSFP+ transceiver modules for $30. You’d have to find your own compatible fiber to plug into those modules.

What if I need to connect more than two machines?!

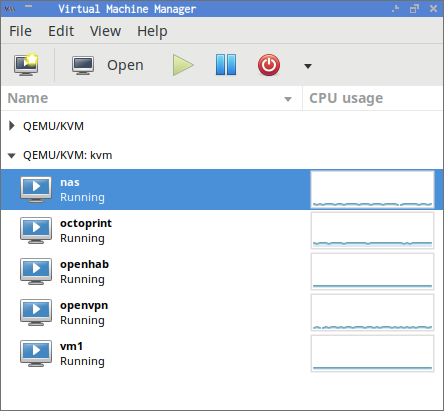

The vast majority of Infiniband cards on eBay have two ports. That’s enough ports to directly connect three machines. This is what my friend Brian did with his 10-gigabit Ethernet setup. In practice, our configurations are pretty similar. I just have one fewer machine on my super-fast network.

My desktop and VM server live on two different networks. They’re both connected to my home’s gigabit-Ethernet network, and they’re both plugged into my tiny Infiniband network. The Infiniband network has its own subnet, and I’m using the hosts file on my desktop to make sure the Infiniband connection is used to connect to any virtual machines that need super high-speed connections. This is especially important with my NAS virtual machine.
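
If you want to double-check which names in a hosts file will ride the fast network, something like this minimal sketch would do it. The subnet, hostnames, and addresses are all made up for the example; swap in your own.

```python
import ipaddress

# Hypothetical subnet for the Infiniband network
INFINIBAND_SUBNET = ipaddress.ip_network("10.42.0.0/24")


def on_infiniband(address: str, subnet=INFINIBAND_SUBNET) -> bool:
    """True when `address` falls inside the Infiniband subnet."""
    return ipaddress.ip_address(address) in subnet


def parse_hosts(text: str) -> dict:
    """Parse hosts-file text into {hostname: address}, ignoring comments."""
    hosts = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            # Keep the first address seen for each name
            hosts.setdefault(name, address)
    return hosts


# Made-up entries in the style of /etc/hosts
sample = """
127.0.0.1   localhost
10.42.0.2   nas        # reach the NAS VM over Infiniband
192.168.1.5 printer
"""

for name, address in parse_hosts(sample).items():
    network = "Infiniband" if on_infiniband(address) else "other"
    print(name, address, network)
```

Only the names that resolve to an address inside the Infiniband subnet will use the fast link. Everything else keeps going over gigabit Ethernet.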

What if I need to connect more than THREE machines, Pat?!

You could install even more Infiniband cards, but I wouldn’t recommend it.
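
To see why this gets out of hand, here is a small sketch of the math. It assumes every machine is cabled directly to every other machine with no switch, and that every card has two ports. The function names are mine.

```python
import math


def ports_per_machine(machines: int) -> int:
    """Ports each machine needs to connect directly to every other machine."""
    return machines - 1


def cards_per_machine(machines: int, ports_per_card: int = 2) -> int:
    """Cards needed in each machine for a switchless full mesh."""
    return math.ceil(ports_per_machine(machines) / ports_per_card)


def cables_needed(machines: int) -> int:
    """One cable for every pair of machines."""
    return machines * (machines - 1) // 2


for count in (2, 3, 4, 5):
    print(count, "machines:", cards_per_machine(count), "card(s) each,",
          cables_needed(count), "cable(s)")
```

Three machines fit on one dual-port card each with three cables. A fourth machine means a second card in every machine and six cables.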

This is where my old 20-gigabit DDR Infiniband adapters had the edge. It was easy to find 8-port 20-gigabit Infiniband switches on eBay for $100 or less.

There are a few small 40-gigabit QDR Infiniband switches, but most are huge 36-port beasts. They’re not expensive. Some are as low as $150, but most are closer to $250.

This is quite a bump in cost compared to plugging three machines directly into one another in a star topology, but using an Infiniband switch also simplifies the network configuration considerably. It is still a fraction of the price of 10gbe over CAT-6 cable.

Why are you using Infiniband?

It just sounds cool, doesn’t it? It often starts fun conversations too. When people are chatting about network stuff, and you mention that you run Infiniband at home, folks are often surprised. More often than not they’ve never even heard of Infiniband. You also get to say goofy things like, “To Infiniband and beyond!”

For me, Infiniband makes my NAS feel like a local disk installed in my desktop. The virtual machine host where my NAS VM lives isn’t exactly high-end these days, and I didn’t build it to saturate a 10-gigabit connection. It has a pair of mirrored 250 GB Samsung 850 EVO SSDs and four 4 TB 7200 RPM hard disks in a RAID 10. The SSDs are the boot volume and are also being used as lvmcache for the hard disks.

I usually see read and write speeds in the 300 megabyte-per-second range. Small random writes get propped up by the SSD cache, but most of what I hit the NAS for involves video editing. The storage in my cameras is much slower than this, and my disks are rarely the bottleneck when editing video.

The fastest disks in my server are the 850 EVO SSDs, and their top benchmarked speed is somewhere around 350 megabytes per second. The spinning RAID 10 probably tops out around there too. My disk access wouldn’t be any faster if they were installed directly in my desktop.

This just means I have room to grow. I could upgrade to faster solid-state drives for my lvmcache and triple the count of disks in my RAID 10, and I would still have a bit of extra room on the network. That’s awesome!

- IP Over InfiniBand and KVM Virtual Machines

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines

- Can You Run A NAS In A Virtual Machine?

What can you do with Infiniband that you can’t do with Ethernet?

Infiniband supports Remote Direct Memory Access (RDMA). This allows memory to be copied between hosts without much CPU intervention.

The most common use of RDMA is in conjunction with iSCSI devices. iSCSI normally operates over TCP/IP. When using iSCSI on Infiniband, the IP stack is bypassed and memory is transferred directly from one machine to another. This reduces latency and increases throughput.

If you’re connecting virtual machines to a Storage Area Network (SAN), this may be of interest to you.

I’ve really only ever used iSCSI to say that I’ve done it and to tell people how easy it is to do. I’m not interested in setting things up here at home to rely on iSCSI and a separate storage server.

How do I set up Infiniband on Linux?

Everything I wrote about setting up Infiniband in 2016 works today. Sort of. A few weeks ago I upgraded my KVM host from Ubuntu 16.04 to 18.04 and then immediately to 20.04. One of those upgrades decided to rename my Infiniband interfaces.

This goofed up my configuration in /etc/network/interfaces. Not only that, but the old network configuration using /etc/network/ has been deprecated on Ubuntu in favor of Netplan, which hands the configuration to NetworkManager or systemd-networkd.

I’m still using the old-style configuration on the server, and it works fine. All I did was pull the old 20-gigabit cards, install the new 40-gigabit cards, and all my configuration was just working on my first boot.

If you have a fresh install of Ubuntu 20.04 or any other distro that is using NetworkManager, I have to imagine that it is much easier to just use NetworkManager.

Using IPoIB with KVM virtual machines

There are two solutions for running regular network traffic over Infiniband. There’s Ethernet over Infiniband (EoIB), which runs at layer 2, and there’s IP over Infiniband (IPoIB), which runs at layer 3. EoIB is not in the mainline Linux kernel, while IPoIB is. IPoIB just works out of the box.

I wanted to avoid using EoIB because it requires installing software from Mellanox. What if I want to upgrade my desktop to a bleeding edge kernel that Mellanox doesn’t support? What if there’s a conflict between my Nvidia driver and the Mellanox EoIB driver? I don’t want to deal with any of that.

That created a new problem. Since IPoIB runs on layer 3, I can’t just bridge virtual machines to that device. Bridging happens at layer 2. This means I am forced to route from the Infiniband interface to my virtual machines.

I touched on this a bit earlier when I mentioned that 56-gigabit Mellanox cards could also be used as 40-gigabit Ethernet devices. If you want to use drivers in the mainline kernel AND be able to plunk your virtual machines onto a bridged interface, it may well be worth spending the extra cash on 56-gigabit cards. The Ethernet drivers will have no trouble with this.

This is already a long blog post. I wrote about my adventures in getting IPoIB to work well with the 20-gigabit Infiniband cards, and the configuration hasn’t changed. There are some gotchas in there, for sure.

You need to get your MTU up to 65520. If any interface in the chain is stuck at the default of 1500, you might experience extremely slow speeds to your virtual machines. I had a persnickety interface hiding on me.
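
A quick way to hunt for a hiding interface is to read the MTU of every interface out of sysfs. This is only a sketch. The interface names in the chain are hypothetical, so substitute whatever your Infiniband device, bridge, and virtual machine taps are actually called.

```python
from pathlib import Path

REQUIRED_MTU = 65520  # the MTU every hop in the chain needs


def read_mtus(sysfs_root="/sys/class/net"):
    """Map interface name -> MTU using the files Linux exposes in sysfs."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}  # not a Linux machine, nothing to read
    mtus = {}
    for iface in sorted(root.iterdir()):
        mtu_file = iface / "mtu"
        if mtu_file.is_file():
            mtus[iface.name] = int(mtu_file.read_text().strip())
    return mtus


def undersized(mtus, names, required=REQUIRED_MTU):
    """Return the interfaces from `names` whose MTU is below `required`."""
    return {name: mtus[name] for name in names if mtus[name] < required}


if __name__ == "__main__":
    # Hypothetical interface names; use the ones in your own chain.
    chain = ["ib0", "virbr1", "vnet0"]
    mtus = read_mtus()
    present = [name for name in chain if name in mtus]
    for name, mtu in undersized(mtus, present).items():
        print(f"{name} is stuck at MTU {mtu}")
```

Run it on the KVM host and again inside the virtual machine, since the interfaces on both sides count.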

Even with everything configured correctly, you’re going to lose a little throughput when routing. On the 20-gigabit Infiniband hardware, I was losing roughly one gigabit per second when talking to the virtual machines. I’m doing better with the 40-gigabit gear, so your mileage may vary here.

Let’s talk about performance!

This is the part I’ve been waiting for ever since I pulled the trigger on the new Infiniband cards. Here’s what I know.

I tend to see 300 megabytes per second when connected to my NAS VM with my old 20-gigabit Infiniband hardware. That’s about three times faster than gigabit Ethernet, and it is pretty much the top speed of my solid-state and hard drives. This isn’t going to be improved, which is a bummer.

Let’s start with what the logs say when the driver initializes the Infiniband cards:

If I had a PCIe 3.0 slot with 8 lanes available on each end, my maximum speeds would be around 64 gigabits per second. I’d need both ports to reach speeds like that!

In the server, there are 8 PCIe 2.0 lanes available giving us up to 32 gigabits per second. My desktop has 4 PCIe 2.0 lanes available, which is my limiting factor here. The only faster slot in my desktop is the 16x PCIe 3.0 slot where my Nvidia GPU lives. I’m just going to have to live with a 16-gigabit top speed.
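
The whole path is only as fast as its slowest piece. Here is a sketch that works that out, assuming 8b/10b encoding for PCIe 1.x and 2.0, 128b/130b encoding for PCIe 3.0, and the nominal 40-gigabit figure for the Infiniband link. The labels and function names are my own.

```python
# (transfer rate in GT/s, encoding efficiency) for each PCIe generation
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b
    2: (5.0, 8 / 10),      # 8b/10b
    3: (8.0, 128 / 130),   # 128b/130b
}


def slot_gbps(generation: int, lanes: int) -> float:
    """Usable gigabits per second for a PCIe slot."""
    rate, efficiency = PCIE_GENERATIONS[generation]
    return rate * lanes * efficiency


def bottleneck(links: dict) -> tuple:
    """Return the (name, gbps) pair of the slowest link in the path."""
    return min(links.items(), key=lambda item: item[1])


links = {
    "server slot (PCIe 2.0 x8)": slot_gbps(2, 8),
    "desktop slot (PCIe 2.0 x4)": slot_gbps(2, 4),
    "Infiniband link (nominal)": 40.0,
}

name, gbps = bottleneck(links)
print(f"{name}: {gbps:.0f} gigabits per second")
print(f"PCIe 3.0 x8: {slot_gbps(3, 8):.0f} gigabits per second")
```

The desktop slot comes out as the bottleneck at 16 gigabits per second. A PCIe 3.0 slot with 8 lanes lands at about 63 once the encoding overhead is counted, which is close enough to the round 64.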

Next up is the iperf benchmark. This will give me a more realistic top speed including all the IP network overhead.

The old cards topped out at 6.53 gigabits per second. The new cards are nearly twice as fast!

With the old cards, my iperf tests would run about 700 megabits per second slower when routing to my NAS virtual machine compared to testing directly against the KVM host. I was super hyped up when I saw the new numbers!

This is awesome! I’m losing zero to a couple of hundred megabits per second to my extra hop. That’s a big improvement!

iperf is fun. I get to throw around gigantic numbers that I can point at excitedly. That’s great, but I’m more interested in what these numbers mean for me on a day-to-day basis. What kind of speeds can my NFS server reach?

Caching and forcibly dropping those caches isn’t going well for me in my attempt to reproduce these tests. They’re all going too fast! Here’s the tweet with my original NFS tests:

As with the 20-gigabit cards, I'm getting about 270 megabytes per second on an uncached read over NFS. The 40-gigabit cards have brought a RAM cached read up to 1.2 gigabytes per second. A locally cached dd on my desktop is right where it always was at 9+ GB/s. pic.twitter.com/mO33YVeCGk

— Pat Regan (@patsheadcom) February 6, 2021

My unprimed, mostly uncached test copy of a 4 GB DVD image ran at 272 megabytes per second. That’s right around my usual speeds. It is limited by the SSD cache and the rather small number of ancient 4 TB mechanical drives in the server.

Then I dropped my local caches and transferred the same file again. There’s more than enough RAM in the NAS virtual machine to hold the entire DVD image in cache, so I should be testing the maximum throughput of my NFS server. You can see that I’m hitting 1.1 or 1.2 gigabytes per second. I’ve seen it hit 1.3 gigabytes per second just as often, so my NFS server is hovering right around the 10-gigabit-per-second mark. That’s not bad!

The most I’d ever seen out of the old 20-gigabit hardware over NFS was around 700 megabytes per second.

The last dd command winds up testing the local cache on my desktop. That can move the file at nearly 10 gigabytes per second. Isn’t it neat being able to move a file across the network at even 10% the speed of RAM?!
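
I keep hopping between megabytes and gigabits, so here is the conversion as a tiny sketch. It assumes decimal units, where a gigabit is 1,000 megabits. The function name is just for illustration.

```python
def mb_per_sec_to_gbps(megabytes_per_second: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return megabytes_per_second * 8 / 1000


for label, speed in [
    ("uncached NFS read", 272),
    ("old cards, cached NFS read", 700),
    ("new cards, cached NFS read", 1200),
]:
    print(f"{label}: {mb_per_sec_to_gbps(speed):.1f} gigabits per second")
```

The cached read at 1.2 gigabytes per second works out to 9.6 gigabits per second, and the occasional 1.3 gigabytes per second is 10.4.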

What does this mean for Pat?

This is pretty much what I’d predicted and exactly what I was hoping for. My Infiniband network speed has just about doubled. That’s fun!

I’m not going to notice a difference in practice. My disks were my bottleneck before, and I knew they would continue to be my bottleneck after the upgrade.

I’m actually maxing out my available PCIe slots. That’s exciting! Not only that, but my network is now truly faster than Brian’s 10-gigabit Ethernet. That’s even better!

For most home NAS builds, the gigabit Ethernet interface is the bottleneck. My tiny Infiniband network is rarely going to be using more than 25% of its capacity. I can grow into a lot more hard drives and faster SSD cache before I saturate this 40-gigabit hardware!

Conclusion

I’m pleased to be able to say that I feel the same way about the 40-gigabit Infiniband hardware as I did about the 20-gigabit hardware five years ago. At around $100 to connect two machines, it really is an inexpensive performance boost for your home network.

It may not have been a wise investment of time, effort, and $100 for me. I’m not going to see any real advantage over my old gear. If you’re already running 10gbe or 20-gigabit Infiniband, you’re probably in the same boat, and there isn’t much reason to upgrade. If you’re investing in faster-than-gigabit hardware for the first time, I think you should skip that stuff and go straight to 40-gigabit Infiniband or even 56-gigabit Infiniband cards that can do 40-gigabit Ethernet.

What do you think? Do you need to be able to move files around at home faster than the 100 megabytes per second you’re getting out of your gigabit Ethernet network? Is 40-gigabit Infiniband a good fit for you, or would you rather pay double for 40-gigabit Ethernet cards? Are you glad I paid for a useless upgrade just to publish my findings? Let me know in the comments, or stop by the Butter, What?! Discord server to chat with me about it!

- 10-Gigabit Ethernet Connection Between MokerLink Switches Using Cat 5e Cable

- Choosing an Intel N100 Server to Upgrade My Homelab

- My First Week With Proxmox on My Celeron N100 Homelab Server

- IP Over InfiniBand and KVM Virtual Machines

- Infiniband: An Inexpensive Performance Boost For Your Home Network (2016)

- Building a Cost-Conscious, Faster-Than-Gigabit Network at Brian’s Blog

- Maturing my Inexpensive 10Gb network with the QNAP QSW-308S at Brian’s Blog

- Building a Low-Power, High-Performance Ryzen Homelab Server to Host Virtual Machines

- Can You Run A NAS In A Virtual Machine?

- Using the Buddy System For Off-Site Hosting and Storage

- I spent $420 building a 20TB DIY NAS to use as an off-site backup at Brian’s Blog