I am quite grumpy about both cloud AI and local AI. I just can’t figure out where I want to land, and I don’t even want to choose just one or the other. I am happy to use the cloud where it makes sense, then use a local LLM or image generator where that might work out better. The trouble is that none of it makes sense!

I have been interested in the idea of grabbing a used server GPU on eBay for a long time. The 24 GB Tesla P40 used to be a great deal, but they increased in price from $150 to nearly $400 almost overnight. They still might be a reasonable deal at $400 if you really do need 24 GB of VRAM, but the even older 24 GB Tesla M40 can be had for less than $100.

This is an AI-generated image of me holding an imitation of my MSI Radeon 6700 XT, generated using OmniGen V1 at fal.ai. I tried to generate a picture of me with a Radeon Instinct Mi50, but that didn’t go so well!

My friend Brian Moses beat me to that, and his results were more than a little underwhelming. Don’t get me wrong here! What you get for $100 is fantastic, but my 12 GB Radeon 6700 XT in my desktop is significantly faster. I feel like I am better off dealing with the LLM startup times just so I can keep my performance.

- Contemplating Local LLMs vs. OpenRouter and Trying Out Z.ai With GLM-4.6 and OpenCode

- Is The $6 Z.ai Coding Plan a No-Brainer?

- Is Machine Learning Finally Practical With An AMD Radeon GPU In 2024?

- Self-hosting AI with Spare Parts and an $85 GPU with 24GB of VRAM

Update from Pat from almost a year after writing this post!

Every few months, I flip between being warm or cool towards 16-GB or 32-GB enterprise GPUs for local LLM related nonsense.

I do think there is a place for them. The models that fit in 16 GB are getting really, really smart. I wouldn’t want to use one to help me write code or write a blog post, but they are most definitely good enough to scan my receipts and other photos, or to tie into my Home Assistant voice assistant. I do not currently have a Home Assistant voice assistant, but we’re getting there.

I have been generally unimpressed with the $2,000 Ryzen AI Max+ 395 mini PCs for LLM use. They’re fast enough for 12B or maybe 27B models, but those won’t even eat up half of the available 128 GB of moderately fast RAM. The trouble is that a 70B model that can make use of that much RAM also runs really slowly on that hardware.

That seems to be changing. Qwen3 30B A3B is a pretty good model, and it runs at an extremely usable pace on a Ryzen AI Max+ 395 machine. Even better, it is looking like Qwen3 80B A3B might wind up running EVEN FASTER on the same hardware. That is a much bigger, much more capable model, and it will need almost every bit of that 128 GB of RAM.
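Here is a rough sketch of why an "A3B" model can be big and still quick. The "A3B" in the name means roughly 3 billion parameters are active for each token, so the whole model has to sit in RAM, but only a small slice of it gets read per token. The 8-bit quantization below is purely an assumption for illustration, and the sketch ignores KV cache and activations.

```python
# Rough illustration only: the 8-bit quantization is an assumption, and real
# runtimes also read KV cache and activations, which this ignores.
BITS_PER_WEIGHT = 8  # hypothetical quantization level

# (name, total parameters in billions, parameters active per token in billions)
MODELS = [
    ("dense 70B", 70, 70),
    ("Qwen3 30B A3B", 30, 3),
    ("Qwen3 80B A3B", 80, 3),
]


def gigabytes(params_billions: float, bits: int = BITS_PER_WEIGHT) -> float:
    """Size of that many weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits / 8


for name, total, active in MODELS:
    print(f"{name}: ~{gigabytes(total):.0f} GB resident, "
          f"~{gigabytes(active):.0f} GB read per token")
```

The dense model has to stream all of its weights for every token, while both A3B models stream about the same small amount no matter how large the full model is.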

So what are we looking at? Cheap 16 GB and 32 GB used GPUs for the simple, fast stuff that runs in the background. Maybe moderately expensive Ryzen AI Max+ 395 or 128 GB Apple M3 or M4 stuff to run Qwen3 80B A3B.

NOTE: At the time I am writing this, the code to support Qwen3 80B is slowly being merged into llama.cpp.

- GMKtec Ryzen AI Max+ 395 mini PC at Amazon

Why is the 16 GB Radeon Instinct Mi50 interesting to me?

The Tesla GPUs are awesome because they run CUDA. That makes everything related to machine learning so much easier. Some software won’t even run with AMD’s ROCm, and AI software tends to run slower on ROCm even when AMD’s hardware should be faster.

That means that there are things that just won’t currently run on an Instinct enterprise GPU, and getting the things that will work up and running will be more work. I am already running a ROCm GPU, so I am confident that the things that I already have working could be made to work easily enough on a server with a Radeon Instinct GPU.

I would love to have 24 GB of VRAM, but I am mostly happy being stuck with my current 12 GB, so the 16 GB Radeon Instinct ought to be comfortable enough. The Mi50 has a massive 1 terabyte per second of VRAM bandwidth. That is three times more bandwidth than my GPU or either of the reasonably priced used Nvidia Tesla GPU models. That should be awesome, because LLMs love memory bandwidth.

There are currently several 16 GB Instinct Mi50 GPUs listed on eBay for $135. They will require a custom cooling solution, but I have a 3D printer, so that shouldn’t be a problem. There are 32 GB Instinct Mi60 GPUs with slightly more performance starting at around $300. I think that is quite a reasonable price for 32 GB of VRAM, but I don’t even know if these Instinct GPUs will be suitable for my needs, so I have only been looking at the 16 GB cards.

Why is 16 GB enough VRAM for my needs?

I am using a 5-bit quantization of Gemma 2 9B to help me write this blog post. It is definitely up to the task. This model uses around 9 gigabytes of VRAM on ROCm with the context window bumped up to 12,800 tokens. Dropping down to a 4-bit quant and lowering the context window a bit would allow this model to fit comfortably on a GPU with 8 gigabytes of VRAM.
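A back-of-the-envelope sketch of where those gigabytes go, assuming exactly 9 billion weights and exactly 5 or 4 bits per weight. Both are simplifications: the real parameter count is a little higher, and real quantization formats usually average slightly more bits per weight than their name suggests.

```python
PARAMS = 9e9           # Gemma 2 9B, rounded to 9 billion weights (assumption)
REPORTED_VRAM_GB = 9   # figure quoted above, at a 12,800-token context


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Size of the quantized weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for bits in (5, 4):
    print(f"{bits}-bit weights: ~{weight_gb(PARAMS, bits):.1f} GB")

# Whatever is not weights is context (KV cache) plus runtime overhead.
leftover = REPORTED_VRAM_GB - weight_gb(PARAMS, 5)
print(f"left for context and overhead at 5-bit: ~{leftover:.1f} GB")
```

Dropping to 4-bit saves about a gigabyte of weights, and the rest of the savings needed to squeeze under 8 GB would have to come from a shorter context window.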

Older Stable Diffusion models run great on my current GPU, and my settings give me decent images at around 20 seconds each. I have to run Flux Schnell’s VAE on the CPU, and I can generate decent 4-step images in around 40 seconds. I believe I am using a 4-bit quant of Flux Schnell.

Squeezing all of Flux Schnell into VRAM would be a nice upgrade.

My Radeon 6700 XT has roughly 35% faster gaming performance than an Instinct Mi50 according to Tech Power Up’s rankings. My hope was that the Mi50 would process more tokens per second since LLMs are usually limited by memory bandwidth, and the Mi50 has boatloads of bandwidth.
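The reasoning behind that hope can be sketched with a simple ceiling: if every weight has to be read from VRAM once per generated token, then bandwidth divided by model size is the most tokens per second you could ever see. The 6700 XT bandwidth below is just the Mi50 figure divided by three, taken from the "three times" comparison earlier, and the weight size assumes 9 billion weights at 5 bits each. Real throughput lands well under this ceiling.

```python
def ceiling_tokens_per_second(bandwidth_gb_s: float, weights_gb: float) -> float:
    """Upper bound if every weight is read from VRAM once per generated token."""
    return bandwidth_gb_s / weights_gb


WEIGHTS_GB = 9e9 * 5 / 8 / 1e9   # ~5.6 GB: 9B weights at 5 bits (assumption)
MI50_GB_S = 1000                 # "1 terabyte per second" from earlier
RX6700XT_GB_S = MI50_GB_S / 3    # derived from the "three times" comparison

for name, bandwidth in (("Instinct Mi50", MI50_GB_S),
                        ("Radeon 6700 XT", RX6700XT_GB_S)):
    ceiling = ceiling_tokens_per_second(bandwidth, WEIGHTS_GB)
    print(f"{name}: at most ~{ceiling:.0f} tokens/s")
```

This only says the Mi50 has about three times the headroom. It says nothing about whether the software stack can actually use it.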

I keep waffling back and forth!

I just couldn’t bring myself to order an Instinct Mi50.

I do hate that I have to remember to spin up the Oobabooga webui every time I work on a blog post, and I have to remember to shut it down before playing games. I am also not good at remembering that I even need to spin it up before I start writing, so I wind up having to wait an extra 20 seconds while I am already prepared to paste in my first query.

I would like to have a dedicated GPU with reasonable performance in my homelab. Then I could just hit a key from Emacs and have some magic show up in the buffer five seconds later. I also feel that I would do a better job at even bothering to set things up to automatically query the LLM if I knew the LLM would always be there.
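As a minimal sketch of what "hit a key and have some magic show up" could call behind the scenes, here is a small script that posts text to an always-on LLM server. It assumes the server exposes an OpenAI-style chat completions endpoint. The URL, port, and function names are hypothetical placeholders, not anything from my actual setup.

```python
import json
import urllib.request

# Hypothetical address: assumes the LLM server has its API enabled and serves
# an OpenAI-style chat endpoint on this host and port.
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_request(text: str, instruction: str, max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat payload for one editing request."""
    return {
        "messages": [
            {"role": "system", "content": instruction},
            {"role": "user", "content": text},
        ],
        "max_tokens": max_tokens,
    }


def ask_llm(text: str, instruction: str, url: str = API_URL,
            timeout: float = 60.0) -> str:
    """POST the payload and return the text of the first reply."""
    body = json.dumps(build_request(text, instruction)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With a server running, something like `ask_llm(paragraph, "Tighten this paragraph.")` would return the rewritten text, and an editor keybinding could pipe the current region through a script like this.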

What’s wrong with the Instinct Mi50? It has no fans, yet it needs to be cooled. I would have to choose and buy a blower fan, and I would have to find or design a 3D-printed duct.

I don’t have any PCIe slots in my homelab any longer, because I have downsized to mini PCs. I can definitely plug my old FX-8350 server back in, but I am not confident that there is enough room for the length of an Instinct Mi50 with a blower fan clamped to the end. I can for sure make it work, but it might require swapping to a different case.

I have been on a repeating cycle in my brain for weeks. “I want an LLM available 24/7!” “The Instinct Mi50 is so cheap!” “I don’t want to do the work only to find out it is too slow!” “I want an LLM available 24/7!”

I managed to find a cloud GPU provider with a 16 GB Instinct Mi50 GPU available to rent. That meant that I could test my potential future homelab GPU without spending hours getting the hardware assembled and installed in my homelab.

Trying out the Radeon Pro VII at Vast.ai

I was disappointed at first, because I didn’t see any Instinct GPUs in the list of currently available machines. I only saw some goofy Radeon Pro VII. What the heck is that?

It turns out these are the workstation version of the Instinct Mi50. Same cores. Same VRAM. Same clock speeds. They are almost identical except for the cooler and the extra video output ports. While the Instinct cards rely on a rackmount server’s own airflow to keep them cool, the 16 GB Radeon Pro VII has its own fan. You can get these on eBay, but they cost more than a 32 GB Instinct Mi50. I don’t know about you, but I’d rather put in the effort to finagle a fan onto the 32 GB card than pay the same for a 16 GB card!

The Xeon Gold CPU in the available server is a pretty good match for my old FX-8350. They have similar single-core performance, while the Xeon has twice as much multi-core performance. That is because my old FX-8350 has half as many cores!

My Radeon Pro VII testing was disappointing

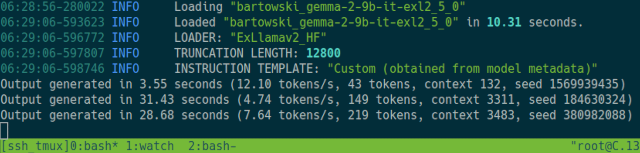

I used Vast.ai’s Ubuntu 22.04 image with ROCm 6.2 preinstalled. I cloned Oobabooga’s text-generation-webui repository, and I copied up the exact same Gemma 2 9B 5-bit model that I use locally.

Everything installed fine. My model loaded right up. I also saw that rocm-smi had me at 71% VRAM usage, and it was peaking at 236 watts during inference. Everything seemed to check out. My LLM was running on the GPU, and the GPU was running at full speed.

I pasted in a blog-editing session from my local Oobabooga install’s history, and I got the Radeon Pro VII into precisely the same state as my local machine. Then I had them both regenerate the conclusion section for my last blog post, since that is my most successful use case for my local LLM so far. It also uses as much of the context window as I am likely to ever need to use.

In fact, I clicked the regenerate button three or four times just to be sure the results were consistent.

My Radeon 6700 XT managed to crank out results at 14.75 tokens per second. The Radeon Pro VII’s best run managed 7.64 tokens per second.

I think it is fair to say that the Instinct Mi50 equivalent machine that I rented at Vast.ai was roughly half as fast as my own GPU. I tried poking around with the model settings, but I didn’t manage to coax any extra performance out of it.
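To put "roughly half as fast" into wall-clock terms, here is the arithmetic on the two measurements. The 300-token reply length is a made-up example, not something I measured.

```python
LOCAL_TPS = 14.75    # Radeon 6700 XT, measured above
RENTED_TPS = 7.64    # Radeon Pro VII best run, measured above
REPLY_TOKENS = 300   # hypothetical length of one regenerated section


def seconds_for(tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply of the given length."""
    return tokens / tokens_per_second


ratio = RENTED_TPS / LOCAL_TPS
print(f"Radeon Pro VII runs at {ratio:.0%} of the 6700 XT's speed")
print(f"{REPLY_TOKENS} tokens: {seconds_for(REPLY_TOKENS, LOCAL_TPS):.0f} s locally, "
      f"{seconds_for(REPLY_TOKENS, RENTED_TPS):.0f} s rented")
```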

Some posts in r/LocalLlama lead me to believe that I might have been able to push something closer to 40 tokens per second with an Instinct Mi50 GPU with a model like Gemma 2 9B. I had high GPU utilization. I was consuming the right amount of VRAM. It is possible something else is going wrong, and it might be something out of my control, but I don’t have a good guess as to what that could be.

I don’t think I have much use for a 24/7 LLM server today if it can only manage 7 tokens per second. I was already having trouble convincing myself that I needed to buy an Instinct GPU to try out. Even though I am concerned that this could just be a flaw in the cloud-GPU setup, this still just might be how fast this GPU will be. I don’t want to put in the effort with a used GPU just to wind up with an LLM that is too slow to be useful.

I have only nice things to say about Vast.ai so far

I have not tried any other cloud GPU services, and I definitely need to tell you that I didn’t do a ton of comparative research here. Vast.ai was on one of three or four price lists that I checked. They are at just about the lowest price point of any cloud GPU provider, but that means they are also one of the more manual options. People were saying nice things about Vast.ai all over Reddit, the prices are great, and most importantly, they had the GPU I was most interested in testing available.

How is Vast.ai more manual than some of their pricier competition? There are companies that will let you fire up a preconfigured LLM, Stable Diffusion, or Flux Schnell service in a matter of seconds. You have to bring your own Docker configuration to Vast.ai, though they do have a library of basic templates that you can choose from.

I added $5 to my account a few days ago, and I have $4.78 left over. It only cost me three cents to do my little benchmark of the Gemma 2 9B on the Radeon Pro VII.

I spent the other 19 cents messing around with the Forge webui and Flux Schnell on a middle-of-the-road 16 GB Nvidia A4000 GPU. Why I chose this GPU will probably be a good topic for another blog post, but telling you how things went will fit well in this blog post!

I was generating nice 4-step 1152x864 images at around 20 seconds per image. That’s half the time it takes to generate a 640x512 image on my Radeon 6700 XT. The A4000 didn’t go any faster at lower resolutions. That was a bit of a surprise but is also kind of neat! It is unfortunate that this is still miles away from sites like Mage.space that will generate similar Flux Schnell images in 3 seconds.

Spending 20 cents per hour to generate Flux Schnell images in 20 seconds seems like a good deal compared to Mage.space’s $8 per month subscription fee. The problem is that it took 15 minutes for my Forge Docker image to download Flux Schnell from Hugging Face. This is mostly a problem with Hugging Face, because I have the same complaint here at home.

You can avoid most of that 15-minute wait by storing your disk image at Vast.ai with Forge and Flux Schnell already installed, but that would cost almost $9 per month. Why pay $9 per month for slower image generation when you can pay Mage.space to manage everything for you at $8 per month?

This is even more of a bummer since I discovered that Runware.ai added an image-generation playground to their API service. I can pop in there and generate all the Flux Schnell images that I need for a blog post in less time than it takes a server at Vast.ai to boot. Booting the machine at Vast.ai costs me a nickel. Spending the same time generating images at Runware.ai costs a fraction of that, and I don’t have to wait 15 minutes to get started.
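The nickel falls straight out of the numbers above: 15 minutes of waiting at 20 cents per hour. Here is a small sketch of what a whole rented session costs in dollars and minutes, assuming the hourly rate is billed for the download wait as well as for the generating.

```python
HOURLY_RATE = 0.20          # dollars per hour for the A4000, from above
STARTUP_SECONDS = 15 * 60   # the Flux Schnell download wait described above
SECONDS_PER_IMAGE = 20      # 4-step 1152x864 images, from above


def session(images: int) -> tuple[float, float]:
    """Return (dollars, minutes) for one rented session producing `images`."""
    seconds = STARTUP_SECONDS + images * SECONDS_PER_IMAGE
    return HOURLY_RATE * seconds / 3600, seconds / 60


startup_cost, _ = session(0)
print(f"startup alone: ${startup_cost:.2f}")
for count in (5, 10, 20):
    dollars, minutes = session(count)
    print(f"{count} images: ${dollars:.3f} and {minutes:.1f} minutes")
```

The dollars stay tiny either way. The minutes are the part that hurts when you only need a handful of images.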

I am excited that I bought some credits to use at Vast.ai. When I can’t get something cool to work locally, I can spend a few nickels or dimes messing around on someone else’s GPU. That is a cheap way to find out if it is something that I can’t live without having locally. I can always decide that I just have to buy a 24 GB Radeon 7900 XTX or Nvidia 4090 later, right?!

If you’re interested in how I eventually solved the local LLM performance problem, I wrote two follow-up posts: one about contemplating local LLMs vs. OpenRouter and trying out Z.ai with GLM-4.6 and OpenCode, and another about whether the $6 Z.ai coding plan is a no-brainer. Both cover much faster alternatives to running models locally on hardware like the Radeon Instinct Mi50.

The costs are small

I am grumpy about all of this. The prices on everything short of a current-generation GPU with 24 GB of VRAM are almost inconsequentially small.

I can add sluggish but not glacially slow LLM hardware to my homelab for $135, and I can power that new hardware for $70 per year. I don’t know how you want to amortize those dollars out, but let’s call it $17 per month for the first year and $6 per month for every year after. That is peanuts if you ignore the work of configuring and maintaining the software.
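Here is that amortization spelled out, under the simple assumption that the whole purchase price gets charged to the first year.

```python
GPU_PRICE = 135       # used 16 GB Instinct Mi50, dollars
POWER_PER_YEAR = 70   # electricity, dollars per year


def monthly_cost(year: int) -> float:
    """Monthly cost when the purchase price is charged entirely to year one."""
    hardware = GPU_PRICE if year == 1 else 0
    return (hardware + POWER_PER_YEAR) / 12


print(f"first year: ${monthly_cost(1):.2f}/month")
print(f"later years: ${monthly_cost(2):.2f}/month")
```

Spreading the $135 over more years would flatten the first-year number, but it is small enough that the choice barely matters.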

That is about what it would cost to pay for a service like Mage.space for image generation, and they’ll do the work of installing new models and keeping the software up to date for you. I can hit OpenAI’s API as hard as I know how to, and it will only cost me pennies a month. Both of these services are faster than anything I can run locally even if I shelled out $1,800 on an Nvidia RTX 4090.

The cost of keeping a disk image ready to boot at Vast.ai would be about the same. There is a ton of flexibility available with this option, but they still can’t boot a machine instantly, because they have to copy my disk image across the globe to the correct server. It will still take two or three minutes at best for me to start generating images with Flux Schnell.

You can be flexible, cheap, and slow at home. You can give up instant 24/7 availability to be flexible, cheap, and fast at Vast.ai. Or you can give up flexibility to be fast and cheap with services like Mage.space and OpenAI’s API.

AI in the cloud is almost free when you pay by the image!

Everything in this blog post fit together really well when I started writing it, because a Mage.space subscription, storing a disk image at Vast.ai, or idling your own machine-learning server at home would all cost a bit less than $10 per month.

I didn’t discover that Runware.ai implemented an image-generation playground until I was almost finished writing this entire blog post. Runware.ai didn’t have a playground a month ago!

This fits my needs so much better. I can generate 4-step 1024x1024 Flux Schnell images at Runware.ai in about 8 seconds, and I can log in and start typing the prompt for my first image in about 10 seconds. They will let me generate 166 Flux Schnell images for a dollar. All of this is fantastic, and it meets my personal needs so well.
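A quick sketch of what 166 images per dollar works out to, including how many images it would take in a month before a flat $8 subscription became the cheaper option. The ten-image blog post is just an example workload.

```python
IMAGES_PER_DOLLAR = 166     # Runware.ai Flux Schnell rate quoted above
MAGE_SPACE_MONTHLY = 8.00   # subscription price quoted earlier in the post


def runware_cost(images: int) -> float:
    """Dollars spent for a given number of pay-per-image generations."""
    return images / IMAGES_PER_DOLLAR


print(f"one image: ${runware_cost(1):.4f}")
print(f"ten images for a blog post: ${runware_cost(10):.2f}")

breakeven = MAGE_SPACE_MONTHLY * IMAGES_PER_DOLLAR
print(f"images per month before an $8 subscription is cheaper: {breakeven:.0f}")
```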

I don’t think it is possible for me to use up more than 20 cents a month in OpenAI credits, and I would be surprised if I ever manage to use up an entire dollar’s worth of Runware.ai credits in any given month. Both of these services are five to ten times faster and cheaper than running local LLMs or local image generation.

Runware.ai’s playground isn’t as flexible as Mage.space. There are fewer models to choose from, and there is no way to automatically run a matrix of models, prompts, and scales like I can locally. It is tough to complain about any of that given Runware.ai’s price and performance.

I did some more searching just before publishing this blog post when I wanted to try out OmniGen. I figured it’d be fun to combine a photo of myself with a GPU in an odd location, and that was fun! My search helped me discover that Fal.ai has much fancier Flux Schnell image generation features than Runware.ai. Fal.ai costs a bit more, but it is still tiny fractions of a penny per image.

I don’t know why I didn’t figure this out sooner. I remember when Brian was using Fal.ai to train a LoRA to generate weird images of himself!

What about sharing an LLM server with your friends?!

I don’t have any good plans here, but I thought the idea was worth mentioning. Tailscale would make it ridiculously easy to share an Oobabooga text-generation webui and a Forge image-generation webui with a bunch of friends. Splitting the energy costs of a small server and the price of a used Tesla M40 or Instinct Mi50 GPU would quickly approach the price of a cheap cup of gas-station coffee every month with only a handful of friends contributing.

That handful of three or four friends could easily make a used 24 GB Nvidia 3090 in a shared server approach the cost of the cloud services, and that would be fast enough to be properly competitive with OpenAI or Runware.ai.
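Here is a rough sketch of the cost-splitting idea using the Instinct Mi50 numbers from earlier in the post ($135 for the card, $70 per year for power). It assumes an even split and leaves out the cost of the server the GPU plugs into. I have no price for a used 3090 in this post, so that case is left out.

```python
GPU_PRICE = 135       # used Instinct Mi50, dollars (from earlier in the post)
POWER_PER_YEAR = 70   # dollars per year (from earlier in the post)


def share_per_month(people: int, first_year: bool = True) -> float:
    """Each person's monthly share when costs are split evenly."""
    yearly = POWER_PER_YEAR + (GPU_PRICE if first_year else 0)
    return yearly / 12 / people


for people in (2, 4, 6):
    print(f"{people} people: ${share_per_month(people):.2f}/month in year one, "
          f"${share_per_month(people, first_year=False):.2f}/month after")
```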

Just about the most interesting reason to run your AI stuff locally is to protect your privacy. Running on a GPU at your friend’s house may or may not be something you consider private. Is it better to be one of millions of queries passing through OpenAI’s servers, or would I prefer to have all my queries logged by Brian Moses?

Conclusion

I started writing this conclusion section an hour before I discovered that Runware.ai has a playground, and my original plan was to explain how it makes me grumpy that both local and cloud machine-learning services have roughly equal pros, cons, and costs. Now I am suddenly happy to learn that I can run both LLM queries AND generate images with cloud services faster than I ever could at home for literal pocket change.

I am still excited about local LLMs. They have gotten quite capable at sizes that will run reasonably fast on inexpensive hardware. There is a good chance I will still wind up buying an Instinct Mi50 to run a local LLM, but I am waiting until I actually NEED something running 24/7 here in my home.

I would love to replace my Google Assistant devices around the house with something that runs locally, and an in-house LLM that can respond in a few seconds would be a fantastic brain to live near the core of that sort of operation. I would want this to be tied into Home Assistant, so I certainly wouldn’t want it to rely on a working Internet connection.

What do you think? Are you running AI stuff locally or using cloud services? Do you keep flip-flopping back and forth on what you run in the cloud vs. what you run locally? Tell us what you prefer doing in the comments, or join the Butter, What?! Discord community and tell me all about your machine-learning shenanigans. I look forward to hearing about your ML adventures!

- Harnessing the Potential of Machine Learning for Writing Words for My Blog

- Cloud GPU rental at Vast.ai