The title of this blog post borders on nonsense. You need at least a few qualifiers to determine if one thing is better than another. If you can’t let your information leak outside of your organization, then using a large language model (LLM) in the cloud isn’t even an option for you.

This blog post isn’t about you, and it isn’t going to be an attempt to provide recommendations that apply to you no matter your situation. This is going to be about my experience, my choices, and what has been working for me. I will also touch on where I think the future is going for my use cases.

- Harnessing the Potential of Machine Learning for Writing Words for My Blog

- Is Machine Learning Finally Practical With An AMD Radeon GPU In 2024?

What am I using LLMs for?!

I don’t want to get too far ahead of myself, but I think it is safe to say that I am using LLMs for more and more small tasks. During most of my time using local and remote LLMs, I was usually smarter and more efficient than the artificial brains.

If I wanted to find the coordinates of a point on an existing line in OpenSCAD, I would punch a few searches into Google, then craft my own function using the information I found. Today I can tell DeepSeek R1 to write me a function, and it will probably give me exactly what I want in under a minute.
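The kind of helper in question is tiny. Here is a minimal sketch, written in Python rather than OpenSCAD, of finding a point some fraction of the way along a line. The name `point_on_line` and the parameter `t` are hypothetical, and this is not the function DeepSeek R1 produced.

```python
def point_on_line(start, end, t):
    """Return the point a fraction t of the way from start to end.

    t = 0 gives start, t = 1 gives end, and t = 0.5 gives the midpoint.
    Works for 2D or 3D points as long as both have the same length.
    """
    return tuple(a + (b - a) * t for a, b in zip(start, end))


# A quarter of the way from (0, 0) to (10, 20).
print(point_on_line((0, 0), (10, 20), 0.25))  # (2.5, 5.0)
```

The same linear interpolation translates almost directly into an OpenSCAD function, since vectors there support addition and scalar multiplication.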

My biggest use case for LLMs is still writing conclusion sections for blog posts. I never use the text verbatim, but the robots include phrases that I would never write on my own. They will include sentences that brag a little too much, or have a call to action that feels more enthusiastic than I would ever write.

I might let a third of those ridiculous sentences through now with minor edits. It feels like I have a colleague talking up my work for me. There is someone to blame for these words that isn’t me!

Local LLMs are getting smarter, but massive LLMs are outpacing them

I was using Gemma 2 9B to help me write blog posts for a long time. It was the first local model that I could ask for a conclusion section for a blog post, and it would give me back words that didn’t sound entirely unlike something I would write.

I would occasionally pass the same queries through one of OpenAI’s mini models, and I wouldn’t say that they were doing a noticeably better job than my local 9B LLM. Those mini models may be much larger than my local LLM, but they weren’t giving me significantly better results for my purposes.

DeepSeek R1 excels at writing conclusions for blog posts. It ties together concepts that are a dozen paragraphs apart. It picks up on all sorts of details that smaller models miss. It doesn’t always draw the best conclusions, and it doesn’t always highlight things that actually matter.

Sometimes it calls out things that I wouldn’t have thought to, and those things actually work out. Sometimes I just drop whole paragraphs and put my own words in. Sometimes I ask DeepSeek to remove the bits I don’t like and try again.

I will not have the RAM or VRAM to run anything close to DeepSeek R1 locally for a long time. Even if I did, my local hardware would be an order of magnitude slower than the 80 tokens per second I usually see via OpenRouter.ai.

I deleted all my local models

I pretty much had to put a few dollars in my OpenRouter.ai account when DeepSeek R1 dropped. Once that happened, I had access to a plethora of fast, inexpensive models to choose from.

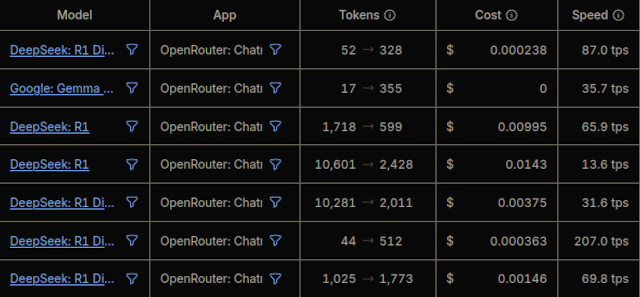

They have free yet slow providers for full DeepSeek R1 and Gemma 2 9B. At the time I am writing this, OpenRouter.ai’s free DeepSeek R1 is reasonable at around 20 tokens per second, but the latency is more than 15 seconds. You can pay a dollar or two per million tokens to reduce that latency to less than two seconds, and you can pay $7 per million tokens to also double or triple your token throughput.

This guy is supposed to be deleting files. I’m not sure that he is!

Either price is effectively free at my volume, and the most expensive options for DeepSeek R1 through OpenRouter.ai are still a fraction of the price of OpenAI’s reasoning model.

Gemma 2 9B, the same model I was running locally, is currently 8 cents per million tokens at 80 tokens per second with less than half a second of latency. That may as well be free, and it is six to eight times faster than running Gemma 2 9B on my $300 Radeon 6700 XT GPU. It also comes with a much larger context window.
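To put the speed difference in seconds, here is a rough back-of-the-envelope sketch. The 500-token reply length is a made-up number for illustration, and the local speeds are only inferred from the "six to eight times faster" figure above, not measured.

```python
def response_seconds(tokens, tokens_per_second, latency_seconds=0.0):
    """Rough wall-clock time: wait for the first token, then stream the rest."""
    return latency_seconds + tokens / tokens_per_second


REPLY_TOKENS = 500  # ASSUMPTION: an illustrative reply length, not a measurement

# Remote Gemma 2 9B: 80 tokens per second with about half a second of latency.
remote = response_seconds(REPLY_TOKENS, 80, latency_seconds=0.5)

# "Six to eight times faster" implies roughly 80/6 to 80/8 tokens per second locally.
local_best = response_seconds(REPLY_TOKENS, 80 / 6)
local_worst = response_seconds(REPLY_TOKENS, 80 / 8)

print(f"remote: {remote:.2f} s")
print(f"local:  {local_best:.2f} s to {local_worst:.2f} s")
```

This ignores prompt processing time on the local GPU, so the real gap is probably a little wider.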

I put $10 into my OpenRouter.ai account three weeks ago. I have only managed to spend 8.4 cents so far.

Why work hard to run a slow local model when a remote model costs less than the change in my couch cushions?
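The arithmetic behind "effectively free" is simple enough to sketch. This assumes roughly 1.3 tokens per English word, which is only a rule of thumb that varies by tokenizer, and it treats input and output tokens as one flat price, which real providers usually don't.

```python
def cost_usd(tokens, usd_per_million_tokens):
    """Cost in dollars of processing `tokens` tokens at a per-million price."""
    return tokens / 1_000_000 * usd_per_million_tokens


# ASSUMPTION: about 1.3 tokens per English word; the real ratio depends on the tokenizer.
TOKENS_PER_WORD = 1.3
post_tokens = round(2_500 * TOKENS_PER_WORD)  # a 2,500-word blog post

# Prices are the per-million-token figures quoted in this post.
for label, price in [("Gemma 2 9B", 0.08), ("DeepSeek R1, fastest provider", 7.00)]:
    per_post = cost_usd(post_tokens, price)
    print(f"{label}: ${per_post:.5f} per post, {10 / per_post:,.0f} posts for $10")
```

Even at the $7 rate, feeding in a whole blog post costs a couple of cents.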

I am still excited about local models!

I tested some very small 0.5B and 1.5B models on one of my Intel N100 mini PCs. I am amazed at how good the latest small models are. They don’t hog much RAM, and I was impressed by how quickly they ran on such an underpowered mobile processor.

Oobabooga’s web UI uses a really slow text-to-speech engine, and each step in the process waits for the previous step to complete, so you have to wait for the LLM to output an entire response before it even begins converting the response to audio. If you could swap out that text-to-speech engine with something simpler, then you could easily converse with an LLM on my $140 N100 mini PC at least as quickly as you could with a Google Home Mini or Alexa device.
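One way around that wait is to hand text to the speech engine a sentence at a time while the LLM is still generating. Here is a minimal sketch of just the splitting step. The function name `sentences_from_stream` is hypothetical, the sentence detection is deliberately naive, and none of this is Oobabooga's actual API.

```python
import re

# A sentence ends at ., !, or ? followed by whitespace. This is naive on purpose:
# abbreviations like "Dr. Smith" will be split in the wrong place.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks):
    """Yield each sentence as soon as it is complete instead of waiting for the whole reply."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Everything but the last part is a finished sentence; keep the tail buffered.
        for sentence in parts[:-1]:
            yield sentence
        buffer = parts[-1]
    # Flush whatever is left when the stream ends.
    if buffer.strip():
        yield buffer.strip()


# Stand-in for tokens arriving from an LLM a few characters at a time.
fake_stream = ["It is ", "sunny. Bring", " a hat! Bye"]
for sentence in sentences_from_stream(fake_stream):
    print(sentence)  # each line could go to a text-to-speech engine right away
```

With something like this in the middle, the speech engine can start talking after the first sentence instead of after the last one.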

Small models keep getting better. Cheap mini PCs keep getting faster. The software that runs your LLMs keeps getting small performance boosts. I have extreme confidence that I will have a useful local LLM running on a cheap mini PC that responds to voice commands within the next year or two.

She won’t be fast enough to read a 2,500-word blog post in a reasonable amount of time, and she won’t be smart enough to write me a good conclusion section. That will still be a job for the big guns in a remote datacenter.

She will be smart enough to instantly tell me about the weather, if I have any appointments, and the title of the next book in the series that I am currently reading.

Maybe she will be able to handle 99% of my queries while being smart enough to know where to route the remaining 1%.
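The routing idea could start out as something very dumb. This is a toy sketch, assuming a hand-written keyword list decides what stays local. The names `LOCAL_TOPICS` and `route` are hypothetical, and a real assistant would more likely let the small model itself decide when to punt.

```python
# ASSUMPTION: a hand-picked list of topics the small local model can handle.
LOCAL_TOPICS = ("weather", "appointment", "calendar", "next book")


def route(query):
    """Return "local" for simple lookups and "remote" for everything else."""
    text = query.lower()
    if any(topic in text for topic in LOCAL_TOPICS):
        return "local"
    return "remote"


print(route("What is the weather like today?"))
print(route("Write a conclusion section for my blog post."))
```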

My feelings on cost have changed over time

I have always understood on an intellectual level that OpenAI’s API is so cheap at my volume that it may as well be free. I know how to do basic arithmetic, but that just didn’t matter. My gut still felt better about running random things through a local LLM.

Watching pennies slowly drain from my OpenAI, Fal.ai, Runware.ai, and OpenRouter.ai accounts has helped me feel just how ridiculously cheap the cloud API services are.

Be sure not to add too much cash to your OpenAI or OpenRouter.ai account. I only used twenty or thirty cents of the $6 I loaded into my OpenAI API account at the end of 2023, and those credits expired after 12 months. OpenRouter.ai says that they MAY expire credits after 12 months as well, so I am assuming any credits I don’t use there will disappear in a year.

I only lost $6 to OpenAI, and I am only risking $10 with OpenRouter.ai. That’s not a big deal. I don’t want you to get excited, load $500 into your account, and see you lose $498 in unused credits next year!

Don’t be scared away by the API!

You don’t have to use the API with any of the four service providers that I mentioned above. They all have playgrounds where you can set up separate chats or generate all the images you want.

The APIs are there. You can use them if you want. You can easily tie any of the fancy text editors into OpenAI or OpenRouter.ai. You don’t have to, but you definitely can!
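If you are curious what using the API looks like, here is a minimal sketch using only the Python standard library. It assumes OpenRouter.ai's OpenAI-style chat completions endpoint and the `deepseek/deepseek-r1` model name, so check their documentation for the current values. The `OPENROUTER_API_KEY` environment variable and the function names are my own placeholders.

```python
import json
import os
import urllib.request

# ASSUMPTION: OpenRouter's OpenAI-compatible endpoint; verify against their docs.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt, model="deepseek/deepseek-r1", api_key=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # ASSUMPTION: the key lives in an environment variable of this name.
    api_key = api_key or os.environ.get("OPENROUTER_API_KEY", "")
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_request(prompt, **kwargs)) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

Calling `ask("Write a conclusion section for this blog post: ...")` with a valid key should return the model's reply as a string. Swapping the `model` argument is all it takes to try a different model.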

DeepSeek R1 does impressive things that I haven’t seen before

I came back to write this section after I had DeepSeek write the first pass at the conclusion section for this blog post. Wait until you see what it did! I am not saying that the LLM is a mind with an actual understanding of anything, but it acted like it knew I was talking about it in this blog post, and it called attention to that. It even bragged about itself at the end.

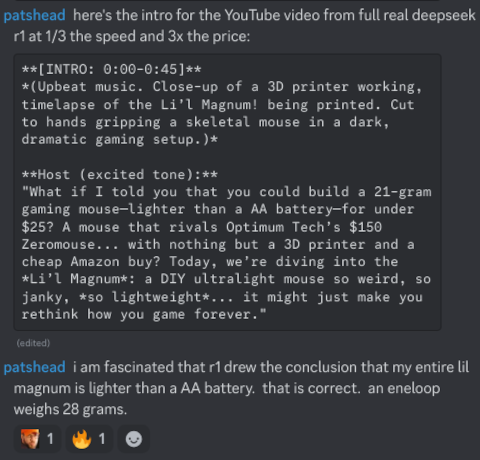

I also had DeepSeek R1 and DeepSeek R1 Distilled Llama 70B write a script for a YouTube video for me. I fed them both three entire blog posts about my Li’l Magnum! 3D-printed mouse project. Both models did a good job writing scripts. They don’t feel like anything I would ever say out loud with my own voice, but they’re both interesting starting points, and they very much feel like high-energy YouTube videos.

What impressed me is that DeepSeek R1 drew a conclusion about my Li’l Magnum! mouse that I hadn’t even managed to realize on my own. My Li’l Magnum! mouse weighs 20.63 grams, and DeepSeek R1 mentioned in the video that the mouse weighs less than a AA battery. It is absolutely correct. The AA Eneloop that powers my heavy Logitech G305 weighs 28 grams.

That is a delightful piece of information, and it is an amazing way of explaining just how light my mouse is. I didn’t manage to come up with that on my own.

In my experience up until now, an LLM will usually draw an incorrect conclusion if it even manages to come to a conclusion like this at all. I’m not saying this is revolutionary, but it feels like a big step forward!

Conclusion: Embracing the Best of Both Worlds

My journey with LLMs has been a dance between the convenience of cloud-based power and the promise of local potential. While DeepSeek R1 and OpenRouter.ai handle the heavy lifting today—crafting conclusions, solving code snippets, and saving me pennies on the dollar—I’m still rooting for the underdogs. Local models may no longer be helping me write my blog conclusions, but their progress hints at a future where even my $140 mini PC could become a snarky, weather-reporting, appointment-remembering sidekick.

The beauty of this era? You don’t have to choose. Cloud APIs offer absurdly affordable and fast brainpower for the tasks that matter, while local models inch closer to everyday usefulness.

Join the conversation! Whether you’re a cloud convert, a local-model loyalist, or just curious about where these “robot colleagues” might take us, hop into our Discord community. Share your own LLM experiments, gripe about latency, or brainstorm ways to make your local hardware useful again. Let’s geek out about the future—and blame the AI for any overly enthusiastic calls to action.

Crafted with a little help from my AI colleagues, who still can’t resist a good brag. 🚀

NOTE: Yes. Really. I did modify the words that came out of the LLM, but DeepSeek R1 added that brag completely on its own!

- Harnessing the Potential of Machine Learning for Writing Words for My Blog

- Should You Run A Large Language Model On An Intel N100 Mini PC?

- Is Machine Learning Finally Practical With An AMD Radeon GPU In 2024?

- GPT-4o Mini Vs. My Local LLM

- Self-hosting AI with Spare Parts and an $85 GPU with 24GB of VRAM at Brian’s Blog