I’m not going to make you wait until the end to learn the answer. I’m going to tell you what I think right in the first paragraph. I believe you should subscribe to the Z.ai Coding Lite plan even if you only write a minuscule amount of code every month. This is doubly true if you decide to pay for a quarter or a full year in advance at 50% off.

I’m only a week and seven million tokens deep into my 3-month subscription, but I’m that guy who only occasionally writes code. I avoided trying out Claude Code because I knew I would never get $200 worth of value out of a Claude Pro subscription. I also now know that I could have paid for a full year of Z.ai for less than the cost of two months of Claude Pro.

I saw a Hacker News comment suggesting that GLM-4.6 on Z.ai’s coding plan is about 80% as good as Claude Code. I don’t know how to quantify that, but OpenCode paired with GLM-4.6 has been doing a good job for me so far. Z.ai claims that you get triple the usage limits of a Claude Pro subscription, but what does that even mean in practice?

UPDATE: Recent changes to plans and pricing

Most of what I wrote here still applies, but prices are drifting upwards and limits are shrinking across the board.

Z.ai added GLM-5 to their subscription. This is a huge upgrade over GLM-4.7, but it isn’t on their Lite plan yet. They are no longer offering 50% off, and their Lite plan has gone up in price to $10. They say they will be adding GLM-5 to the Lite plan in the near future, but that hasn’t happened yet. Z.ai deducts three times as much usage from your 5-hour limit when you use GLM-5, so the limits feel smaller now.

Chutes.ai no longer offers what they refer to as frontier models on the $3 plan. I can’t access GLM-5, Kimi K2.5, or MiniMax M2.5 on my plan right now. You have to move up to the $10 plan to use these models. They have not shrunk the limits on any of their plans, so the $10 plan seems to be the best value out there right now.

Synthetic bumped the price of their base plan to $30. The limit hasn’t changed, but they did raise the allowance for lower-cost tool calls to 500, which means you can run 500 small requests without impacting your quota. They used to have a $60 plan with 10x the limits of the old $20 base plan, but that is gone now.

Let’s start with the concerns!

Z.ai is based in Beijing. Both ethics and laws are different in China than they are in the United States or Europe, especially when it comes to intellectual property.

I’m not making any judgments here. You can probably guess just how much concern I have based on the fact that I’m using the Z.ai Coding Plan while working on this blog post. I just think this is important to mention. Do you feel better or worse about sending all your context to OpenAI, Anthropic, or Z.ai?

Is Z.ai having performance problems with their subscription service?

This blog post has been up for a month, and I sure seem to be recommending a paid service here. A few people in our Discord community are using the service, so I asked how things are going for everyone there. I’ve also been keeping an eye out for people complaining in places like Reddit.

I had a weekend where I was getting connection errors every half dozen prompts or so, but the service was running at its usual speed. It was a pain having to hit the up arrow in OpenCode and send the same prompt again, but it didn’t really slow me down.

There are a few posts on Reddit complaining that the Z.ai GLM service has gotten so slow that it is unusable. The replies usually have a few people saying things are sometimes a little slower than usual.

Are these growing pains due to a large influx of new users snapping up the $2.40 per month rate? Will their capacity grow to meet their demand? Will things settle down on their own? Are things even all that bad? Will the increasing RAM and GPU prices make it hard for Z.ai to increase capacity? We don’t know, but these are the questions I’d ponder a bit before paying up front for a 12-month subscription.

I am only one anecdote, but everything is still completely usable for my limited needs. I am still happy that I am subscribed, and I would still risk $29 on a full year’s subscription to the Coding Lite plan to lock in at $2.40 per month for the first year.

Are the limits actually more than twice as generous as Claude Pro?

I assume that Z.ai’s claim is true. The base Claude Pro subscription limits you to 45 messages during each 5-hour window, while the Z.ai Coding Lite plan has a 120-message limit in the same window. That is about 2.7 times as many messages, but are those messages actually equivalent?

I haven’t managed to hit the limit on the Coding Lite plan, which should tell you just how light a user I am!

I suspect that this is one of those situations where your mileage may vary. We know that Claude Opus is a more advanced model than GLM-4.6. Opus is more likely to get things right the first time, so it may need fewer iterations to reach the correct result.

I’d bet that they’re comparable most of the time, and you really do get nearly three times as much work out of Z.ai’s plan. However, I would also assume there are times when you might eat through some extra prompts trying to zero in on the correct results. If you’re curious about how GLM-4.6 stacks up against other affordable options, I’ve since written a comparison of Devstral 2 with Vibe CLI vs. OpenCode with GLM-4.6 that looks at how these tools feel in practice for casual programmers.
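Here is a minimal Python sketch that puts rough numbers on that trade-off. Only the 45-message and 120-message limits come from this post. The prompts-per-task figures are made-up assumptions, so plug in your own.

```python
# Message limits per 5-hour window, as quoted in this post.
CLAUDE_PRO_MESSAGES = 45
ZAI_LITE_MESSAGES = 120


def tasks_per_window(message_limit: int, prompts_per_task: float) -> float:
    """Tasks that fit in one window if each task needs `prompts_per_task` messages."""
    return message_limit / prompts_per_task


def effective_ratio(claude_prompts: float, glm_prompts: float) -> float:
    """Z.ai Lite tasks per window divided by Claude Pro tasks per window."""
    return tasks_per_window(ZAI_LITE_MESSAGES, glm_prompts) / tasks_per_window(
        CLAUDE_PRO_MESSAGES, claude_prompts
    )


if __name__ == "__main__":
    # Hypothetical: both models need 3 prompts per task (the raw 120/45 ratio).
    print(f"{effective_ratio(3, 3):.2f}")
    # Hypothetical: GLM-4.6 needs 4 prompts where Opus needs 3.
    print(f"{effective_ratio(3, 4):.2f}")
```

With those made-up numbers, the advantage drops from about 2.67x to 2x. GLM-4.6 would need roughly 2.7 prompts for every one Opus needs before the two plans came out even.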

I’m not sure that an accurate answer to this question matters, since Claude subscriptions cost three or six times as much.

What have I done with OpenCode and Z.ai?

My Li’l Magnum! gaming mouse project is written in OpenSCAD. I have a simple build script that should have been a Makefile, but instead it is a handful of for loops that run sequentially. This wasn’t a big deal early on, but now I am up to three variations of eight different mice. Running OpenSCAD 24 separate times is taking nearly four full minutes.

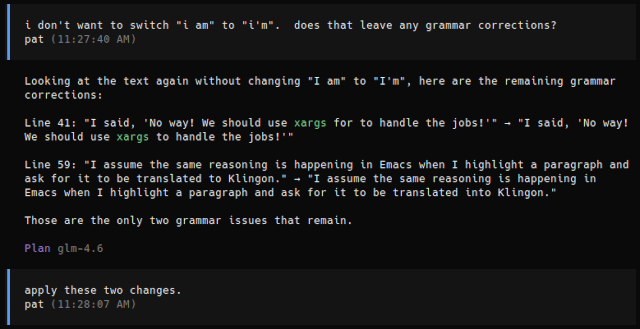

Instead of converting this to a Makefile, I decided to ask OpenCode to make my script parallel. OpenCode’s first idea was to build its own job manager in bash. I said, “No way! We should use xargs to handle the jobs!” GLM-4.6 agreed with me, and we were off to the races.
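The real build script is bash, and it started out built around xargs. This is a minimal Python sketch of the same pattern. You hand a pool a list of independent commands, and it keeps a fixed number of them running at once, roughly what `xargs -P` does. The `BUILD_DEBUG` switch and the file names in the usage note are hypothetical and are not taken from the actual project.

```python
from __future__ import annotations

import os
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Hypothetical switch: set BUILD_DEBUG=1 to print each command before it runs.
DEBUG = os.environ.get("BUILD_DEBUG") == "1"


def run_job(cmd: list[str]) -> int:
    """Run one build command and return its exit code."""
    if DEBUG:
        print("running:", " ".join(cmd), file=sys.stderr)
    return subprocess.run(cmd).returncode


def run_parallel(commands: list[list[str]], jobs: int | None = None) -> list[int]:
    """Run independent commands with at most `jobs` in flight, like `xargs -P`.

    Exit codes come back in the same order as `commands`.
    """
    jobs = jobs or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return list(pool.map(run_job, commands))
```

You would call it with something like `run_parallel([["openscad", "-o", "mouse-a.stl", "mouse-a.scad"], ["openscad", "-o", "mouse-b.stl", "mouse-b.scad"]])`. Threads are fine here because each job is a separate OpenSCAD process, so Python's global interpreter lock doesn't limit the parallelism.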

I watched OpenCode set up the magic with xargs. I eventually asked it to combine its large number of functions into fewer functions by passing variables around. I also had OpenCode add optional debugging statements so I could verify that the openscad commands looked the way they should.

We ran into a bug at some point, so OpenCode started calling my build script to make sure the STL and 3MF files showed up where they belonged. What OpenCode didn’t know was that my script only builds files that have been modified since the last build. Once I told it that it needed to touch the *.scad files before testing, it started trying and testing lots of things. This is probably a piece of information that belongs in this project’s agents.md file!
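The "only build what changed" rule is the same one make uses, and it explains why touching the *.scad files mattered. Here is a minimal Python sketch of that check, assuming each source file maps to a single output file. `touch` and `needs_rebuild` are hypothetical helper names, not functions from the actual build script.

```python
import os
import tempfile
from typing import Optional


def touch(path: str, mtime: Optional[float] = None) -> None:
    """Create `path` if needed and set its modification time, like `touch`."""
    with open(path, "a"):
        pass
    if mtime is None:
        os.utime(path)  # set to the current time
    else:
        os.utime(path, (mtime, mtime))


def needs_rebuild(source: str, output: str) -> bool:
    """Make-style rule: rebuild if the output is missing or older than its source."""
    if not os.path.exists(output):
        return True
    return os.path.getmtime(source) > os.path.getmtime(output)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        src = os.path.join(d, "mouse.scad")  # hypothetical file names
        out = os.path.join(d, "mouse.stl")
        touch(src, 1_000)
        touch(out, 2_000)
        print(needs_rebuild(src, out))  # the STL is newer, so nothing to do
        touch(src)  # bump the source to "now", like `touch *.scad`
        print(needs_rebuild(src, out))  # now a test run really rebuilds
```

Without the touch, a test run silently skips every mouse whose STL is already newer than its source. From the agent's point of view, the build "succeeds" without exercising any of the code it just changed.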

I had something I was happy with during my first session, but I wound up asking OpenCode for more changes the next day. We lost the xargs usage at some point, but I didn’t pay attention to when!

There is still a part that isn’t done in parallel, but it is kind of my own fault. I have one trio of similar mice that share a single OpenSCAD source file. I have some custom build code to set the correct variables to make that happen, and OpenCode left those separate just like I did.

I’m pleased with where things are. Building all the mice now takes less than 45 seconds.

You can wire Z.ai into almost anything that uses the OpenAI API, but the Z.ai coding plan is slow!

I almost immediately configured LobeChat and Emacs’s gptel package to connect to my Z.ai Coding Lite plan. I was just as immediately disappointed by how slow it is.

Everything seems pretty zippy in OpenCode. Before subscribing, I was messing around with GLM-4.6 using the lightning-fast version hosted by Cerebras. I am sure Cerebras is faster in OpenCode too, but the difference isn’t obvious. OpenCode sends up tens of thousands of tokens of context, and it does that over and over again between my interactions.

Emacs and LobeChat are a different story. I wasn’t able to disable reasoning in LobeChat, so I wind up waiting 50 seconds for 1,000 tokens of reasoning even when I just ask it how it is doing. I assume the same reasoning is happening in Emacs when I highlight a paragraph and ask for it to be translated into Klingon.

I assume the Coding Plan is optimized for large contexts, so I wound up keeping Emacs and LobeChat pointed at my OpenRouter account. Each of these interactive sessions only eats up the tiniest fraction of a penny, so I am not saving a measurable amount of money by using my free subscription tokens here.
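If you are wiring up a client by hand instead of through LobeChat or gptel, here is a minimal standard-library sketch of an OpenAI-style chat request with reasoning turned off. Three things in it are assumptions to verify against Z.ai's API documentation: the coding-plan base URL, the `glm-4.6` model ID, and the `thinking` field, which appears to be GLM's reasoning toggle. The function only builds the request. Nothing is sent until you pass it to `urlopen`.

```python
import json
import urllib.request

# Assumption: Z.ai's OpenAI-compatible coding-plan endpoint; check their docs.
ZAI_BASE_URL = "https://api.z.ai/api/coding/paas/v4"


def build_chat_request(
    prompt: str,
    api_key: str,
    model: str = "glm-4.6",  # assumption: model ID
    base_url: str = ZAI_BASE_URL,
    disable_thinking: bool = True,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if disable_thinking:
        # Assumption: GLM's reasoning toggle. Other OpenAI-compatible
        # providers may ignore or reject this field.
        body["thinking"] = {"type": "disabled"}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, use something like `json.load(urllib.request.urlopen(req))["choices"][0]["message"]["content"]`. This assumes the response follows the usual OpenAI chat-completions shape.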

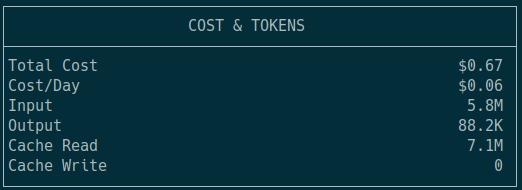

Six million input tokens would have cost at least $6 at OpenRouter, and I am only two weeks into my first month!

Tools like OpenCode, Claude Code, and Aider are where you need to make sure you’re on a flat-rate subscription. I can easily eat through two million tokens using OpenCode, and that could cost me anywhere from $1.50 to $10 on OpenRouter, depending on which model I point it at!
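The math behind those numbers is just tokens divided by a million, times the per-million price. This Python sketch works backward from the figures in this post to the blended rates they imply: $0.75 and $5.00 per million for the two-million-token range, and $1.00 per million for the six-million-token figure. These are not quotes from OpenRouter's price list, which charges input and output tokens at different rates.

```python
def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of `tokens` at a blended per-million-token price."""
    return tokens / 1_000_000 * usd_per_million


def tokens_for_budget(budget_usd: float, usd_per_million: float) -> int:
    """How many tokens a budget buys at a blended per-million-token price."""
    return round(budget_usd / usd_per_million * 1_000_000)


if __name__ == "__main__":
    # Rates implied by this post, not OpenRouter's published prices.
    for rate in (0.75, 5.00):
        print(f"2M tokens at ${rate:.2f}/M: ${cost_usd(2_000_000, rate):.2f}")
    print(f"6M tokens at $1.00/M: ${cost_usd(6_000_000, 1.00):.2f}")
```

Run it the other way around with `tokens_for_budget`. At an assumed $1.00 per million, a $3 monthly budget buys about three million tokens, which is less than half of what OpenCode went through in my first couple of weeks.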

I am using OpenCode with Z.ai Coding Lite right now!

I messed around with Aider a bit just before summer. It was neat, but I was hoping it could manage to help me with my blog posts. It seemed to have no idea what to do with English words.

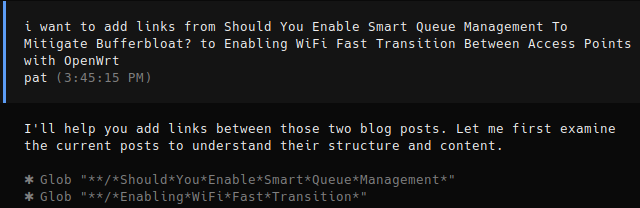

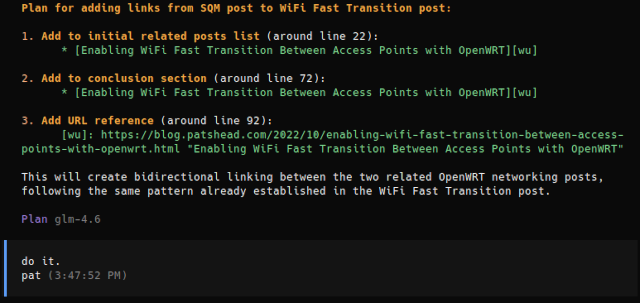

How well OpenCode worked with my Markdown blog posts using Cerebras’s GLM-4.6 was probably the thing that pushed me over the edge and made me try a Z.ai subscription. I can ask OpenCode to check my grammar, and it will apply its fixes as I am working. I can ask it to add links to or from older blog posts, and it will do it in my usual style.

I can ask OpenCode if I am making sense, and I can ask it to write a conclusion section for me. I already do some of these things either from Emacs or via a chat interface, but it has always been a very manual process, and I would have to paste in the LLM’s first pass at a conclusion myself.

I could never burn through $3 in OpenRouter tokens in a month using chat interfaces—I probably couldn’t do it in a year even if I tried! Even so, OpenCode is saving me time, and I will use it for writing blog posts several times each month. That is worth the price of my Z.ai Coding Lite subscription.

Do you need the Z.ai Coding Pro or Coding Max plan?

If you do, then you probably shouldn’t be reading this blog! I am such a light user, and I suspect my advice will apply much better to more casual users of LLM coding agents.

That said, the more expensive plans look like a great value if you are indeed running into limits all the time. The Coding Pro plan costs five times as much as Coding Lite, and you get five times the usage limit. You also supposedly get priority access with 40% faster results from the models, plus support for image and video inputs. The Coding Max plan seems like an even better value, because it costs only twice as much as Coding Pro but has four times the usage.
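A quick Python sketch shows why Max looks like the better deal per dollar. It uses only the multipliers described above, and every price and limit is relative to Coding Lite. No actual dollar amounts are assumed.

```python
# (price, usage) relative to Coding Lite, from the ratios described above.
PLANS = {
    "Lite": (1, 1),
    "Pro": (5, 5),    # five times the price, five times the usage
    "Max": (10, 20),  # twice Pro's price, four times Pro's usage
}


def usage_per_dollar(plan: str) -> float:
    """Usage per unit of spend, with Coding Lite defined as 1.0."""
    price, usage = PLANS[plan]
    return usage / price


if __name__ == "__main__":
    for name in PLANS:
        print(f"{name}: {usage_per_dollar(name):.1f}x Lite's usage per dollar")
```

Pro buys more headroom at the same rate as Lite. Max doubles what each dollar gets you, but only if you actually use that headroom.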

Z.ai has built a pricing ladder that actually provides some value for your money. Even so, the best deal is to pay only for what you ACTUALLY NEED!

I would also expect that if you’re doing the sort of work that has you regularly hitting the limits of Z.ai’s Coding Lite plan, then you might also be doing the sort of work that would benefit from the better models available with a Claude Pro or Claude Max subscription. I have this expectation because I assume you are getting paid to produce code, and even a small productivity boost could easily be worth an extra $200 a month.

Conclusion

The Z.ai Coding Lite plan offers exceptional value for casual coders and writers like myself. At just $6 per month (or $3/month with the current promotional discount), you get access to an extremely capable AI coding assistant. While it may not match Claude’s raw power, it is more than useful enough to justify its price, even if you only use it a few times a month.

The integration with OpenCode, which is ridiculously easy to set up, creates a seamless workflow that is easily worth $6 per month, and the generous usage limits mean I am unlikely to worry about hitting caps. For light users, hobbyists, or anyone looking to dip their toes into AI-assisted coding without breaking the bank, Z.ai’s Coding Lite plan is genuinely a no-brainer. If you use my link, I believe you will get 10% off your first payment, and I will receive an equivalent amount in future credits. Don’t feel obligated to use my link, but I think it is a good deal for both of us!

Want to join the conversation about AI coding tools, share your own experiences, or get help with your setup? Come hang out with us in our Discord community where we discuss all things AI, coding, and technology!
