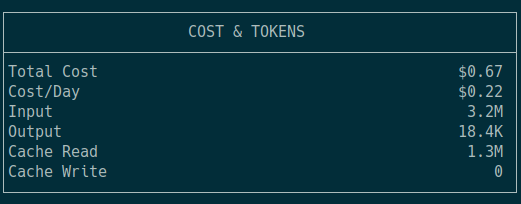

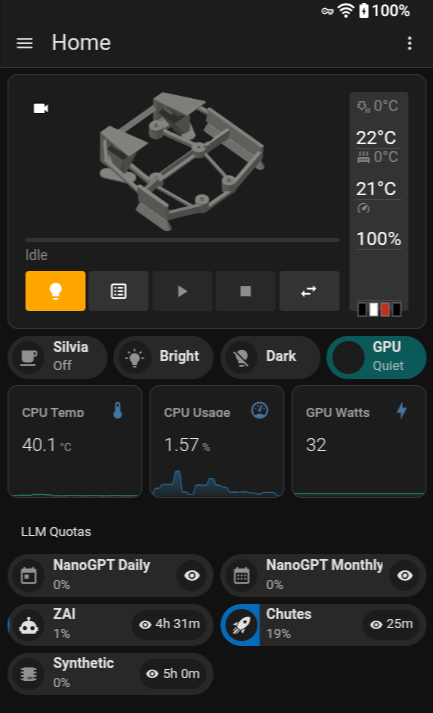

I can’t help myself. I don’t need to try another coding plan. My needs are simple. I never reach the quota on my Z.ai Coding Lite plan, though this may be tighter now that Z.ai is charging three times the quota for GLM-5. Even so, I couldn’t help myself. I signed up for a $3 per month plan at Chutes.ai. I had so much fun there that I wound up also signing up for a $20 per month subscription from Synthetic.new.

I will definitely pare this back down to one or two subscriptions, but I figured I should write about these while I have all three subscriptions active.

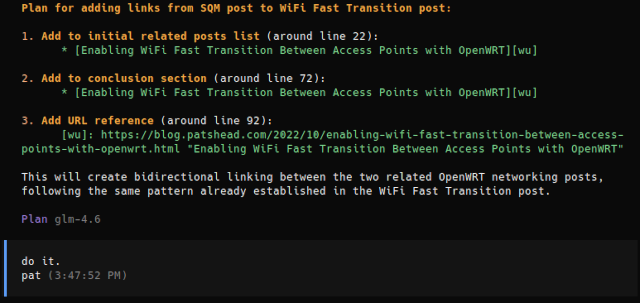

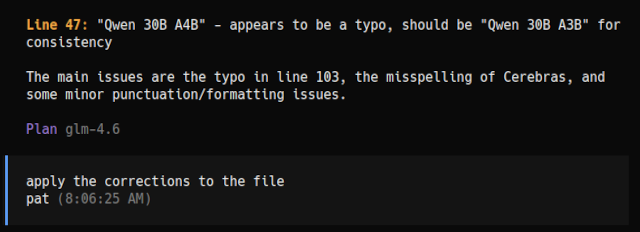

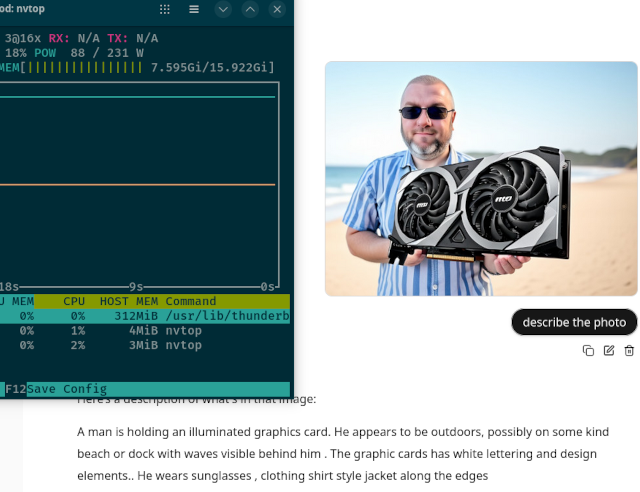

I added my coding plan quota status to my Home Assistant macropad dashboard on my desk. Isn’t that neat?!

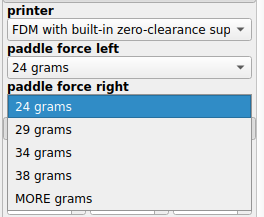

I am going to assume that you are like me. Just a guy at home. Maybe you have a homelab. You write the occasional script to glue things together. You use OpenCode to help you set up fancy things in Home Assistant. You use the robots to help you model things in OpenSCAD.

That means I am going to focus on the cheaper end of every service’s pricing chart. We don’t need 1,350 LLM requests per hour. We aren’t developing software eight hours each day. We have a busy day if we manage to burn through 120 requests.

I am not going to attempt to benchmark the speed of these services with any precision. I am not going to try to figure out which service has stronger models. I am only going to tell you about my experiences. Benchmarks are hard work!

Comparing coding plans isn’t straightforward

Some plans have better models. Some have quotas that reset every five hours, while others reset once a day. Some plans that aren’t in this comparison have a separate weekly quota in addition to the five-hour limits.

That reset timing might matter more than you’d think. Maybe you only sit down to crank out code for a couple of hours each day, so you’d like to burn through the 300 requests you’re allotted for the entire day all at once. But if you’re the type to chip away at projects throughout the day, you might get more for your money with a quota of 120 requests that reset every five hours.
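To make that trade-off concrete, here is a rough sketch in Python. It uses the 120-per-window and 300-per-day figures from this post, and it assumes a single continuous work session that starts right as a five-hour window resets. The function names are made up for illustration and don't come from any provider's API.

```python
import math

def windowed_budget(per_window: int, session_hours: float, window_hours: float = 5.0) -> int:
    """Max requests available in one continuous session on a five-hour-window plan.

    Assumption for illustration: the session starts right as a window resets.
    """
    windows_touched = max(1, math.ceil(session_hours / window_hours))
    return per_window * windows_touched

def daily_budget(per_day: int) -> int:
    """A daily plan lets you spend the whole allotment in one sitting."""
    return per_day

for hours in (2, 6, 12):
    print(f"{hours}h session: windowed={windowed_budget(120, hours)}, daily={daily_budget(300)}")
```

Under these assumptions, a two-hour session gets 120 requests from the windowed plan and 300 from the daily plan. The windowed plan only pulls ahead once your work spans three windows.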

Then there’s the whole model situation. Some services give you one family of models, and that’s it. Others hand you the full assortment of Kimi, DeepSeek, and MiniMax. Having choices is great, but I’ll admit I sometimes waste five minutes just deciding which model to use. Sometimes you just get things done when you don’t have a choice.

Oh, and don’t get me started on the pricing. One subscription includes useful MCP servers, some providers are faster, and some are in the US. Privacy policies seem alright across the board, but their verbiage is all different. By the time you factor it all in, you realize comparing these things is like comparing apples to… slightly different apples.

I started writing this post based on incorrect information!

I signed up for Synthetic.new because I figured that I had to try it out. Surely you get something when you pay seven times more for comparable quotas, right? You do, but it isn’t quite as impressive as I first thought.

On my first evening, Synthetic’s Kimi K2.5 throughput was three times faster than Chutes’ or OpenCode Zen’s temporarily free Kimi K2.5. What I didn’t find out until a few days later is that Synthetic was having trouble getting Kimi K2.5 up and running on their hardware, so they were outsourcing inference to another party.

Synthetic is running Kimi K2.5 on their own network now.

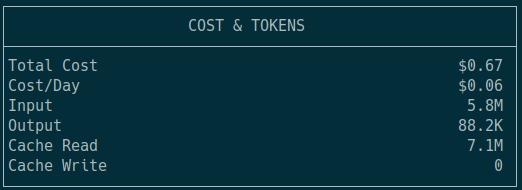

| Run | Chutes | Synthetic | OpenCode Zen (free) |
|---|---|---|---|
| 1 | 471 | 26.5 | 63 |
| 2 | 146 | 53 | 159 |
| 3 | 71 | 29 | 65 |
| 4 | 60 | 153 | 39 |
| 5 | 120 | 74 | 29 |

NOTE: The table shows the number of seconds each run took to check the grammar on this blog post using Kimi K2.5.

Don’t take this table as being proper science. I decided to point my grammar-checking swarm at three different Kimi K2.5 providers, and I ran a grammar check of this blog post at random times. I’m not averaging runs. I’m not running this pseudobenchmark every ten minutes. All that I can say for sure is that the numbers match my experiences.
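Five runs are too few for an average to mean much, but the spread is easy to see. This small Python sketch only restates the table's numbers as fastest, median, and slowest per provider. The `spread` helper is a made-up name, and nothing here is a proper benchmark.

```python
from statistics import median

# Seconds per grammar-check run, copied from the table in this post.
runs = {
    "Chutes": [471, 146, 71, 60, 120],
    "Synthetic": [26.5, 53, 29, 153, 74],
    "OpenCode Zen free": [63, 159, 65, 39, 29],
}

def spread(seconds):
    """Return (fastest, median, slowest) for one provider's runs."""
    return min(seconds), median(seconds), max(seconds)

for provider, seconds in runs.items():
    fastest, middle, slowest = spread(seconds)
    print(f"{provider}: fastest {fastest}s, median {middle}s, slowest {slowest}s")
```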

We’ll talk about Chutes and Synthetic in more detail soon, but I do want to make a quick note about OpenCode Zen’s free Kimi K2.5. It won’t be free forever, and I have had instances where it rejected my prompts because the service was over capacity. When that happens, it has taken 90 seconds or more for a prompt to go through.

Z.ai’s coding plan

Z.ai has gotten a lot of criticism since I signed up for my Z.ai coding plan. Their service got slower for a while when they released GLM-4.7. I assume the hype of the release drove up usage and subscriber count, and I wouldn’t be surprised if it took them a while to figure out how to balance their GLM-4.6 and GLM-4.7 split on their inference servers.

They recently announced that they were going to be limiting the number of new users who can subscribe so they don’t go too far over capacity. Even so, their service is slower than ever. It is possibly too slow to reach the quotas of their Pro plan, and not nearly fast enough to properly utilize a Max plan.

Enough readers of my blog have used my Z.ai referral code that I wound up upgrading to the Pro plan. I’ve never managed to use more than 3% of my quota, but I also never work for an entire five-hour period.

Z.ai’s plan only gives you access to Z.ai’s models. GLM-4.7 is a capable model that works great with OpenCode, and their newly released GLM-5 feels like it might actually be a little better than Kimi K2.5.

The biggest perk of Z.ai’s coding plan is probably the MCP servers that they offer. I have them all configured in OpenCode. The work I do doesn’t hit them all that often, but I do rack up several dozen uses of the WebFetch and WebSearch MCP servers each month. They also offer a vision MCP with OCR and their Zread MCP for searching indexes of documentation for open-source repositories.

Z.ai’s Lite coding plan has a quota of 120 requests every five hours. There is no weekly quota, but they have been saying one is coming. It might already be here, but I can’t see it on my legacy account.

There is a big problem with Z.ai’s Lite plan at the time I am writing this. Their excellent new GLM-5 model is available on the Pro and Max plans, but not on the Lite plan. Z.ai says they are working to add capacity so they can add GLM-5 to the Lite plan, but they haven’t said when that will happen. Until they do, this gives a big advantage to the next provider on the list.

- Is The $6 Z.ai Coding Plan a No-Brainer?

- OpenCode with Local LLMs — Can a 16 GB GPU Compete With The Cloud?

Chutes.ai

I have been searching Google for inexpensive LLM subscriptions every week since signing up for my Z.ai plan. I didn’t learn about Chutes until I was reading a random Reddit thread where somebody mentioned them. Their service is well hidden!

I was immediately intrigued. $3 per month with a quota of 300 requests per day. They give you access to all sorts of open-weight models including GLM-5, Devstral 2, Kimi K2.5, MiniMax M2.5, and DeepSeek V3.2.

That is a real $3. They aren’t telling you this is 50% off like Z.ai’s marketing has been doing for the last several months. It is just listed at $3. Even better, the next step up the ladder jumps to nearly seven times the daily quota for $10.

| Model | Z.ai | Chutes | Synthetic |
|---|---|---|---|
| GLM-5 | Pro | Yes | |
| GLM-4.7 | Yes | Yes | Yes |
| Kimi K2.5 | | Yes | Yes |
| MiniMax M2.5 | | Yes | Yes |
| Devstral 2 | | Yes | |
| DeepSeek V3.2 | | Yes | Yes |
| GPT-OSS-120B | | Yes | Yes |
| Gemma 3 27B | | Yes | |
| MCP Servers | Yes | | |

There’s a lot of value here. You get access to all the same models that Z.ai offers and then some. You can probably squeeze a bit more value out of Z.ai if you manage to burn through your Z.ai quota during three five-hour periods in the same day, but Chutes gives you more than double Z.ai’s five-hour quota for the entire day, and they’ll let you burn through all of them in a single session. This works better for me, and Chutes’ smallest plan is less than half the price of Z.ai’s offering.

While Z.ai has gotten slow over the last two months, Chutes has been slightly unreliable. Sometimes my OpenCode session will just seem to get stuck. I have to cancel the current operation and ask it to continue.

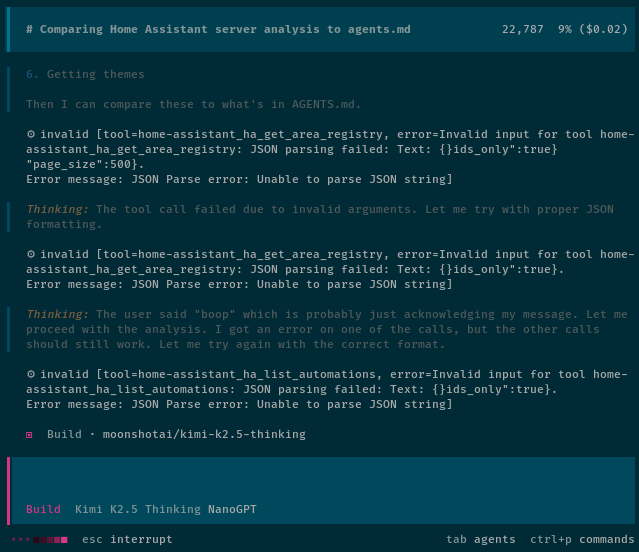

Is this a deal-breaker for you? I don’t mind sending a “boop” message every once in a while.

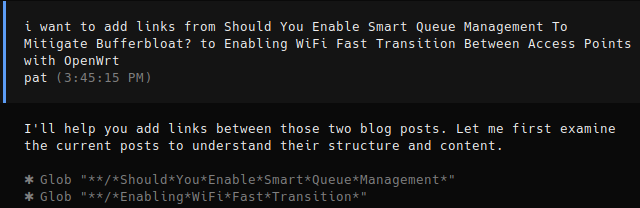

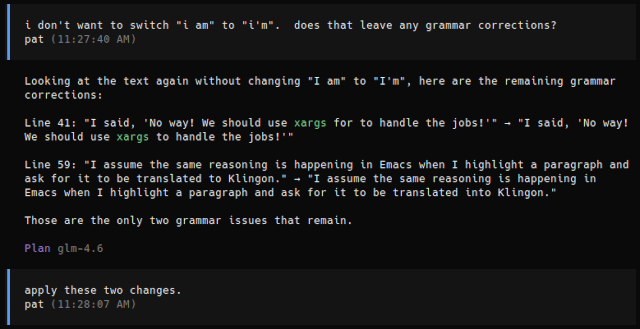

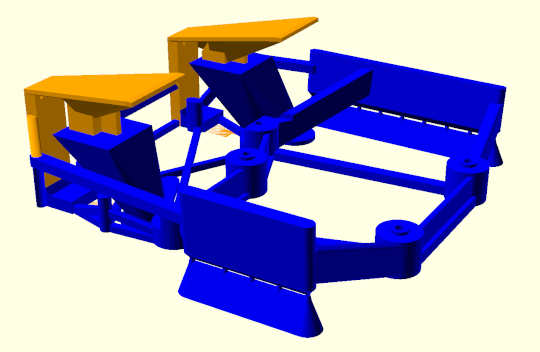

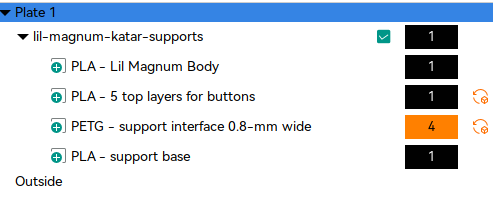

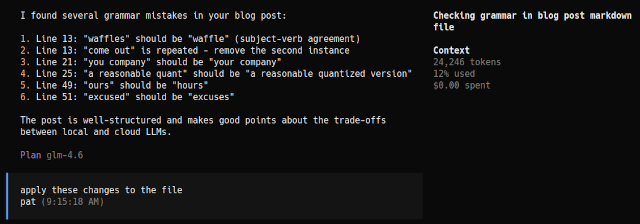

I’ve come to rely on Chutes for my blogging workflow. I have an OpenCode skill that launches a swarm of subagents to check my grammar. Each subagent uses a different model, and the main model collects the suggestions and shows me the ones that two models agreed on. I am using GLM-4.7 via my Z.ai subscription, and GPT-OSS-120B and Gemma 3 27B via my Chutes subscription. These three different lineages of models sometimes provide me with very different results.
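The consensus step can be sketched in a few lines of Python. This is not the actual skill. The function name, the string-matching rule, and the sample suggestions are all hypothetical, and the sketch assumes each model's suggestions arrive as plain strings.

```python
from collections import Counter

def consensus(suggestions_by_model: dict[str, list[str]], min_votes: int = 2) -> list[str]:
    """Keep suggestions that at least `min_votes` different models made.

    Hypothetical matching rule: two suggestions are the same if they are
    equal after trimming whitespace and lowercasing.
    """
    votes = Counter()
    for suggestions in suggestions_by_model.values():
        # Use a set so a model repeating itself only counts once.
        votes.update({s.strip().lower() for s in suggestions})
    return sorted(s for s, n in votes.items() if n >= min_votes)

# Made-up example data, not real model output.
example = {
    "glm-4.7": ["'alot' -> 'a lot'", "Remove the comma after 'plan'"],
    "gpt-oss-120b": ["'Alot' -> 'a lot'"],
    "gemma-3-27b": ["Split the long sentence", "'alot' -> 'a lot' "],
}
print(consensus(example))
```

Real suggestions from different models rarely match word for word, so an exact string comparison like this one is only a stand-in for whatever judgment the main model applies when it merges the results.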

Synthetic.new

Synthetic.new is priced in a different category than the other two. Their cheapest plan is $20 per month, yet its quota is similar to that of Z.ai’s $7 plan. They are based in the United States, and my understanding is that they do all their inference in the clouds of large providers in the United States.

Synthetic has a more professional and enterprise-grade feel than Chutes or Z.ai. The more time I spend flipping back and forth between the same models on Synthetic and Chutes, the more I think that professional veneer doesn’t matter.

Synthetic really needs to be as good as or better than an OpenAI Codex subscription at this price.

The first thing I did was fire up two simultaneous OpenCode sessions with Kimi K2.5, one on Chutes and one on Synthetic.new. I gave them the same code to work on and exactly the same prompt. It was obvious that Synthetic.new was running significantly faster.

They did NOT come back with identical plans, so comparing the completion times isn’t entirely fair or precise. That said, Synthetic.new can be three times faster than Chutes. Even so, sometimes Synthetic is slower than everyone else. I do feel that Synthetic is more consistent, but I don’t know if that small potential boost in speed is worth seven times the price.

I don’t feel that Synthetic.new fits all that well into this comparison. When I saw the pricing, I immediately thought their $60 plan would be a fantastic deal for professionals who are using Claude Max. The offerings from both Synthetic.new and Anthropic are definitely targeted at someone with bigger workloads than mine.

If Kimi K2.5, GLM-5, and Sonnet 4.6 were students, they would attend the same classes and all three would be getting passing grades. I suspect Sonnet 4.6 would be doing a little better, but they’re definitely peers. Maybe you would want to subscribe to Claude Max 5x at $100 per month to use your 75 or so requests with Opus for the planning phase, then use the 1,350 requests with Kimi K2.5 on your Synthetic.new Pro plan for implementation. You only get 900 Sonnet requests with a $200 Claude Max subscription.

NOTE: I am not sure how Anthropic does their math. Claude Max 5x gives you 225 Sonnet requests per five-hour window, and it is my understanding that they count one Opus request as three Sonnet requests. I can’t find any official documentation that says this. If you’re already using Claude Max, then you probably already have an idea of how much Opus use you get each day.

Z.ai and Chutes are good for the workhorse requests when using Claude, Codex, or Gemini for planning, but Synthetic.new’s service and privacy policy seem to match the three big providers more closely.

NOTE: I am not a legal expert. I’m not convinced that Synthetic’s privacy policy or terms of service are stronger than Z.ai’s or Chutes.ai’s, but there is something about Synthetic that feels more trustworthy to me.

There is something unique about Synthetic.new’s quotas!

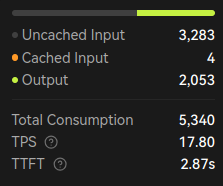

Synthetic gives you a limited number of LLM requests during a 5-hour window, just like almost every competing LLM subscription. What is unique about Synthetic is that they don’t charge you a full request for small prompts.

They charge you 0.1 of a request for tiny tool-call requests. I see these tiny requests go past my OpenCode window quite often. It is normal for me to see a dozen of them go by in a 20-minute OpenCode session.

Remember when I said that it is difficult to directly compare coding plans? Add this to the list of reasons. In practice, you’re probably getting more like 160 or 180 requests every five hours.
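The arithmetic behind that estimate can be sketched as follows. The 135-request quota and the 0.1 charge come from this post. The share of requests that count as tiny is a guess, and the function name is hypothetical.

```python
def effective_requests(quota: float, small_share: float, small_cost: float = 0.1) -> float:
    """Estimate how many actual requests fit in a quota when a share of them
    is billed at a fraction of a request.

    small_share: assumed fraction of requests that are tiny tool calls (0..1).
    """
    if not 0.0 <= small_share <= 1.0:
        raise ValueError("small_share must be between 0 and 1")
    # Average charge per request: tiny ones cost small_cost, the rest cost 1.
    average_cost = small_share * small_cost + (1.0 - small_share)
    return quota / average_cost

for share in (0.0, 0.2, 0.25):
    print(f"{share:.0%} tiny requests -> about {effective_requests(135, share):.0f} requests")
```

With a tiny-request share of around 20 to 25 percent, the 135-request quota stretches into the 160 to 180 range.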

How much does speed matter?

I keep wanting to use the word performance, but most people are comparing the quality of the output when talking about the performance of an LLM regardless of how long it takes. This blog post is mostly focused on Kimi K2.5 or at least models that perform similarly enough. Speed of the delivery of tokens is what I am noticing here.

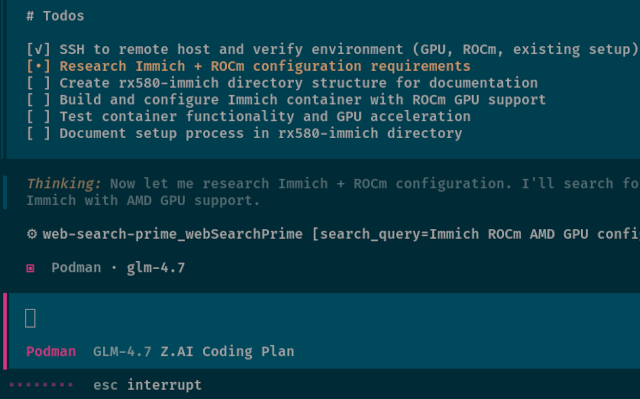

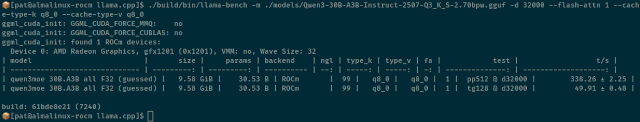

I was working on my blog post about local LLMs on a used Radeon RX 580 GPU the week I signed up for Chutes. I had OpenCode creating Podman containers on a remote host over SSH. I want to say that I averaged around 100 requests for each test container I created.

The speed of the LLM wasn’t a significant bottleneck. OpenCode was waiting for images to download and for llama.cpp to compile. It didn’t help that my test server was a high-performance gaming PC in 2013. A machine like that is a little slow these days!

I am enjoying the extra speed of Synthetic.new, even if it isn’t always faster than Chutes. When Synthetic is faster, it is almost twice as fast. That isn’t a game changer for me, but it is nice!

While it doesn’t make much difference for me, the extra speed might be a huge selling point for you!

- OpenCode with Local LLMs — Can a 16 GB GPU Compete With The Cloud?

- Fast Machine Learning in Your Homelab on a Budget

Is there a difference in quality of responses from different providers?

Yes, but I am not the right person to attempt to conduct the science to figure out exactly how well each provider is doing.

You can quantize models to fit in less VRAM. That means you can fit larger models on smaller GPUs, like how I squeezed a 3-bit quant of GLM-4.7-Flash onto my 16 GB 9070 XT GPU. A smaller quant could also be used to squeeze more parallel requests onto the same GPU. The smaller the quant, the lower the quality of the output.

Kimi K2.5 isn’t a model that a provider is likely to be squeezing down into a smaller quant. It is a massive model with 1 trillion parameters, but it is already natively running at 4 bits. That is between one-half and one-quarter of the native size per weight of most other models.
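The back-of-the-envelope math for weight storage looks like this. It is a sketch that counts only the weights. It ignores the KV cache, activations, and any per-block quantization overhead, so real memory use is higher. The function name is hypothetical.

```python
def weight_gigabytes(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (10**9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9

ONE_TRILLION = 1e12  # Kimi K2.5's parameter count as stated in this post

for bits in (16, 8, 4):
    print(f"{bits}-bit: about {weight_gigabytes(ONE_TRILLION, bits):,.0f} GB")
```

At 4 bits, the weights alone come to roughly 500 GB, versus about 1,000 GB at 8 bits and 2,000 GB at 16 bits. That is the one-half to one-quarter ratio described above.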

I have been signing up for more services specifically to try Kimi K2.5, so I am less likely to notice if one provider is serving degraded models.

I have swapped back and forth between Z.ai and Chutes for GLM-4.7 and GLM-5 quite a bit, and I haven’t noticed Chutes performing any worse. This is not science. This is only my anecdote.

In the end, quantization doesn’t matter. The model you’re using doesn’t matter. What matters is that the model on the service you choose gives you results that you’re pleased with at a price you can afford.

Which provider should you choose?

Whatever you choose, I would suggest that you start small. Don’t just prepay for a year of Z.ai’s Max plan without trying the Lite or Pro plan first. Upgrading later is easy.

Synthetic.new is the answer if you want to support an American company with a good privacy policy or if a little extra speed, reliability, and consistency are more important than price. Odds are good that Synthetic is serving higher quality quants or unquantized models.

I am not sure that Synthetic is worth the price, though, when you can pay a bit less for OpenAI Codex and have access to even stronger and faster models.

NOTE: I have had a couple of nights where Kimi K2.5 was extremely slow on Synthetic, Chutes, and OpenCode Zen’s free tier all at once. Synthetic does SEEM to perform better more often, but their service isn’t perfect.

Z.ai is a good choice if you need their MCP servers, or if you want to show your support to one of the companies that is releasing fantastic open-weight LLMs. Z.ai is the creator of all the models that it hosts. Maybe the lack of choice in models is a bonus for you. GLM-5 is a fine model, and GLM-4.7 works well with the build agent. You won’t be tempted to waste time trying to figure out which open-weight coding model is ideal for your use case if you don’t have them in your arsenal.

| Provider | Price per month | Request quota (per 5 hours unless noted) | MCPs |
|---|---|---|---|
| Z.ai (Lite) | $3 | 120 | Yes |
| Z.ai (Pro) | $15 | 600 | No |
| Z.ai (Max) | $30 | 2,400 | No |
| Chutes.ai (Base) | $3 | 300/day | No |
| Chutes.ai (Plus) | $10 | 2,000/day | No |
| Chutes.ai (Pro) | $20 | 5,000/day | No |
| Synthetic.new (Standard) | $20 | 135 | No |
| Synthetic.new (Pro) | $60 | 1,350 | No |

NOTE: Z.ai is shaking up their plans a little, so I am not sure if the requests in the quota are actually the same today as they were when I made this table. I do know that Z.ai says they are charging 3 requests for every GLM-5 message.
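Here is what that multiplier does to the quotas in the table, as a quick sketch. It assumes the table's per-window numbers are still current and that every message in the window goes to GLM-5. The function name is hypothetical.

```python
def glm5_messages(window_quota: int, cost_per_message: int = 3) -> int:
    """Whole GLM-5 messages that fit in one five-hour window."""
    return window_quota // cost_per_message

# Per-window quotas from the table in this post. GLM-5 is not on the Lite
# plan at the time of writing, so only Pro and Max are listed.
for plan, quota in {"Pro": 600, "Max": 2400}.items():
    print(f"Z.ai {plan}: {quota} requests -> {glm5_messages(quota)} GLM-5 messages")
```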

Chutes is probably the best value. Chutes has all the best open-weight models that are currently available. Their pricing is the lowest. Their speed is adequate. If you told me to cancel everything and pick just one provider today, I would be choosing Chutes.

They are all inexpensive enough that you could try them all. You get a discount on Z.ai if you use my referral link, though I am not sure how much money you save. You definitely get $10 off your first month at Synthetic.new when using my referral code. Chutes doesn’t have a referral program.

You could spend less than $30 all at once and immediately have a ton of new toys to play with for the next month, or you could space out your testing over two or three months like I did. It is nice to have a week or two of overlap between services so you can see them operating side by side!

- Is The $6 Z.ai Coding Plan a No-Brainer?

- Squeezing Value from Free and Low-Cost AI Coding Subscriptions

- Synthetic.new

What about NanoGPT?

This is another service that was tough to find! NanoGPT gives you 30,000 requests per month at $8 per month, and they have all the latest open-weight models. Pricing, models, and usage are comparable in value to the $10 plan from Chutes.

I keep reading about problems with NanoGPT. I came very close to just explaining that the reviews were all poor, and the vibe didn’t seem right, and that I had no interest in trying the service. I couldn’t do that. I had to sign up and give it a try. Just stay away.

Kimi K2.5, GLM-5, and MiniMax M2.5 are all slow. I don’t think I’ve had a single successful tool call with Kimi K2.5 or GLM-5 in half a million tokens. I tried the same prompt with GLM-4.7, but it just kept coming back with no response. I did manage to see some successful tool calls with MiniMax M2.5, but it is going at an absolute snail’s pace.

Sometimes Kimi K2.5 via NanoGPT will just say that it is going to investigate, but it just stops after that statement.

These two issues don’t always happen, but when they’re happening with a model, they do not stop happening. I assume it depends on which provider NanoGPT is routing your requests through at that time. I have been having better luck using models that only have one provider on NanoGPT, like MiniMax M2.5. If I have to stick to such a limited selection of models, why should I use NanoGPT at all?

They have my $8. I’m not going to try to get it back, but my NanoGPT subscription is nearly useless with OpenCode.

Conclusion

At the end of the day, any of these services will get the job done for casual coding, tinkering, and creating Podman or Docker containers. You don’t need to overthink it. I have been happily using Z.ai for months, and I only started experimenting with the others because I enjoy comparing tools and finding good deals. I imagine that most people would be perfectly content picking one service and sticking with it.

I didn’t write this blog post to do an accurate, exhaustive head-to-head comparison of these services. It took me months to learn that Chutes and NanoGPT even existed, and judging by the threads in r/OpenCodeCLI, most people using OpenCode don’t know about these subscriptions. I just want you to be aware that they exist, and I want you to understand where they might fit into your workflow.

If you have questions or want to share your own experiences, come hang out with us in our Discord community. We are a friendly group of people who are all figuring this stuff out together. We aren’t just talking about machine learning in there. We have a good overlap of homelabbers, 3D printing enthusiasts, and gamers.

- Is The $6 Z.ai Coding Plan a No-Brainer?

- OpenCode with Local LLMs — Can a 16 GB GPU Compete With The Cloud?

- Devstral with Vibe CLI vs. OpenCode: AI Coding Tools for Casual Programmers

- Squeezing Value from Free and Low-Cost AI Coding Subscriptions

- Fast Machine Learning in Your Homelab on a Budget

- Synthetic.new