Last year, I built a reasonably fast and energy-efficient server to host KVM virtual machines in my office. I included a pair of 250 GB Samsung EVO 850 solid-state drives. That gives me a fast RAID 1 for the operating system, and I was hoping to use the rest of the available space for dm-cache.

dm-cache (a.k.a. lvmcache) is a block device caching layer that was merged into the mainline Linux kernel back in April of 2013. It is a “hotspot” cache that lets you use fast SSDs to cache reads and writes to your slower, old-school spinning media.

When I set up my little server, I installed Ubuntu 14.04 LTS. This seemed like a good idea, since I didn’t want to have to touch the host operating system very often. Unfortunately, the dm-cache tools that shipped with the 2014 version of Ubuntu weren’t very advanced; you had to do your own sector-level math when setting up your cache.
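
To give a flavor of that sector-level math, here is a minimal Python sketch that assembles a raw device-mapper `cache` table line from byte sizes. It assumes the table format in the kernel's dm-cache documentation (`<start> <length> cache <metadata dev> <cache dev> <origin dev> <block size> <#feature args> <features> <policy> <#policy args>`), with everything counted in 512-byte sectors. The device paths, the 2 TiB origin, and the 64 KiB block size are hypothetical placeholders.

```python
# Minimal sketch: build a device-mapper "cache" target table line.
# Device-mapper expresses lengths and block sizes in 512-byte sectors.
SECTOR_BYTES = 512

# The kernel docs require the cache block size to be a multiple of
# 64 sectors (32 KiB), between 64 sectors and 2097152 sectors (1 GiB).
MIN_BLOCK_SECTORS = 64
MAX_BLOCK_SECTORS = 2097152


def to_sectors(n_bytes: int) -> int:
    """Convert a byte count to 512-byte sectors, rejecting partial sectors."""
    if n_bytes % SECTOR_BYTES:
        raise ValueError(f"{n_bytes} bytes is not a whole number of sectors")
    return n_bytes // SECTOR_BYTES


def cache_table(origin_bytes: int, block_bytes: int,
                metadata_dev: str, cache_dev: str, origin_dev: str,
                mode: str = "writeback", policy: str = "smq") -> str:
    """Return a one-line table suitable for `dmsetup create NAME --table ...`."""
    length = to_sectors(origin_bytes)
    block = to_sectors(block_bytes)
    if block % MIN_BLOCK_SECTORS or not MIN_BLOCK_SECTORS <= block <= MAX_BLOCK_SECTORS:
        raise ValueError("cache block size must be a multiple of 32 KiB, from 32 KiB to 1 GiB")
    # One feature argument (the cache mode), then the policy with zero policy args.
    return (f"0 {length} cache {metadata_dev} {cache_dev} {origin_dev} "
            f"{block} 1 {mode} {policy} 0")


if __name__ == "__main__":
    # Hypothetical 2 TiB origin, 64 KiB cache blocks, placeholder device paths.
    print(cache_table(2 * 1024**4, 64 * 1024,
                      "/dev/mapper/meta", "/dev/mapper/ssd", "/dev/mapper/origin"))
```

LVM now does this arithmetic for you, which is a big part of why it is so much easier to set up today.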

I tried to get dm-cache going anyway, but I didn’t do a very good job. It seemed to be caching, but my spinning disks were being written to at a constant 1 MB/s for days, and my benchmarks didn’t show any improvement. This seemed like a failure, so I turned off dm-cache.

The Ubuntu 16.04 release is now only a few months away, so I decided to upgrade early to properly test out dm-cache.

Should I be talking about dm-cache or lvmcache?

I haven’t figured this out yet. When I started researching this last year, I don’t remember seeing a single mention of lvmcache. This year, you almost exclusively use lvm commands to set up and control your dm-cache.

I don’t think you can go wrong using either name. It is dm-cache in the kernel, and lvmcache in user space.

What was I hoping to get out of dm-cache?

I had a feeling dm-cache wouldn’t meet all of my expectations, but I thought it was worth giving it a shot. Here are some of the benefits I was hoping to see when using dm-cache.

- Faster sequential reads and writes

- Faster random reads

- Much faster random writes

- Power savings from sleeping disks

Performance improvements

dm-cache is a hotspot cache, much like ZFS’s L2ARC and ZIL. These hotspot cache technologies may not improve your synthetic benchmark numbers, as my friend Brian recently discovered. At first, I didn’t find any performance improvements either, but this was my own fault.

When I upgraded from Ubuntu 14.04 to 16.04, I didn’t notice that I was still running the old 3.19 kernel. There’s been a lot of dm-cache progress since then, and I wasn’t able to get the smq cache policy to work with the older kernel. Once I upgraded the kernel and switched to smq, things improved dramatically.


In my benchmarks, smq has been a really big win for me. Cached writes are 75% faster than writes to the uncached disks; in fact, they are almost as fast as writes to the solid-state drives. The read performance is even more impressive: the cached mirror is 50% faster than the solid-state drives alone!


My cache is over 200 GB. I haven’t even managed to fill half of that, and I’m certain it would work adequately at a fraction of that size. My virtual machines occupy about 2 TB, but the vast majority of that data is taken up by backups and media on my NAS virtual machine. The data that gets accessed on a regular basis easily fits in the cache.

What about power savings?

Most people don’t care about spinning down the hard drives in their servers. My little homelab virtual machine server is very idle most of the time. Spinning down a couple of 7200 RPM hard disks will only save me six or seven watts, but I think it’d be a nice bonus if I could make that happen.

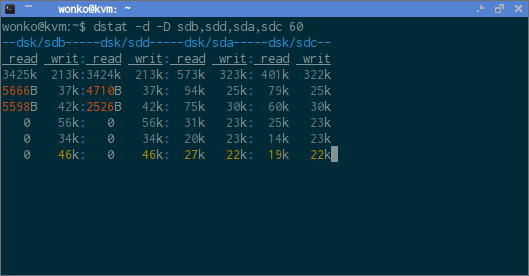

No matter what I try, I can’t get dm-cache to stop writing to the cached disks completely. Hours go by throughout the day with no reads on the magnetic media, but with the default smq settings, there is a slow but constant stream of writes to the cached disks. Most of those writes seem to be caused by OpenHAB’s log file.
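
One way to watch for that trickle of writes is to sample the kernel's per-disk I/O counters twice and compare them. Here is a minimal Python sketch that reads `/sys/block/<dev>/stat`, whose leading fields are read I/Os, read merges, sectors read, read ticks, write I/Os, write merges, and sectors written (always in 512-byte units). The device name `sdb` and the 60-second interval are assumptions for illustration.

```python
import time
from typing import NamedTuple


class DiskCounters(NamedTuple):
    reads: int            # read I/Os completed
    sectors_read: int     # 512-byte sectors read
    writes: int           # write I/Os completed
    sectors_written: int  # 512-byte sectors written


def parse_stat(text: str) -> DiskCounters:
    """Parse the contents of /sys/block/<dev>/stat."""
    f = [int(x) for x in text.split()]
    # Field order: read I/Os, read merges, read sectors, read ticks,
    #              write I/Os, write merges, write sectors, ...
    return DiskCounters(reads=f[0], sectors_read=f[2],
                        writes=f[4], sectors_written=f[6])


def read_counters(dev: str) -> DiskCounters:
    with open(f"/sys/block/{dev}/stat") as fh:
        return parse_stat(fh.read())


def delta(before: DiskCounters, after: DiskCounters) -> DiskCounters:
    """Field-by-field difference between two samples."""
    return DiskCounters(*(a - b for a, b in zip(after, before)))


if __name__ == "__main__":
    dev = "sdb"  # hypothetical: one of the cached (origin) disks
    first = read_counters(dev)
    time.sleep(60)
    d = delta(first, read_counters(dev))
    print(f"{dev}: {d.reads} reads, {d.writes} writes "
          f"({d.sectors_written * 512 // 1024} KiB written) in the last minute")
```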

I’ve tried cheating by setting migration_threshold to zero, and low nonzero values seem to work too. This often silences the writes to the cached disks, so at least I’m on the right track, but sometimes the cached disks still see several small writes each minute.
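
migration_threshold is measured in 512-byte sectors; the kernel's dm-cache documentation gives a default of 2048 sectors (1 MiB). As a minimal sketch, the Python below converts a byte budget into that unit and builds an `lvchange --cachesettings` argument list. The volume name `vg0/vms` is a hypothetical placeholder, and the command is only printed, not executed.

```python
import shlex

SECTOR_BYTES = 512


def migration_threshold_sectors(budget_bytes: int) -> int:
    """Round a byte budget down to whole 512-byte sectors."""
    if budget_bytes < 0:
        raise ValueError("budget must be non-negative")
    return budget_bytes // SECTOR_BYTES


def lvchange_argv(cached_lv: str, budget_bytes: int) -> list[str]:
    """Build an lvchange invocation that sets migration_threshold on a cached LV."""
    sectors = migration_threshold_sectors(budget_bytes)
    return ["lvchange", "--cachesettings",
            f"migration_threshold={sectors}", cached_lv]


if __name__ == "__main__":
    # Hypothetical cached logical volume; 0 bytes mirrors the "set it to zero" experiment.
    argv = lvchange_argv("vg0/vms", 0)
    # Printed for inspection; running it would need root, e.g. subprocess.run(argv, check=True).
    print(shlex.join(argv))
```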

Even when I manage to get dm-cache to keep the cached disks in a very idle state, something is still preventing my disks from going to sleep—even when they haven’t been accessed in over an hour! Google tells me the usual culprits are smartctl, smartd, or hddtemp. I’ve ruled all of these out. I can manually put the disks into standby with hdparm -y, and they will stay asleep for hours.

It would have been nice to get the drives to spin down. They don’t use much power (six or seven watts works out to roughly 26 to 31 kWh every six months), but they are the noisiest thing in my office. Fortunately for me, they don’t need to be in standby to be quiet.
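
A quick back-of-the-envelope check of what a constant six or seven watts adds up to over six months, assuming the disks would otherwise be spun up around the clock:

```python
# Half of an average year (365.25 days), in hours: about 4383 hours.
HOURS_PER_SIX_MONTHS = 365.25 * 24 / 2


def kwh(watts: float, hours: float) -> float:
    """Energy in kilowatt-hours for a constant load."""
    return watts * hours / 1000


for w in (6, 7):
    print(f"{w} W for six months = {kwh(w, HOURS_PER_SIX_MONTHS):.1f} kWh")
```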

Why not skip the ancient spinning disks?

Solid-state drives are nice, but they’re still small and expensive. At a minimum, I would need a pair of 2 TB SSDs in my homelab server. Those 2 TB solid-state drives cost over $600 each. One of those drives costs almost as much as my entire server, and my server has twice as much storage.

The majority of the data my virtual machine server touches regularly fits very comfortably in my 200 GB dm-cache—it has a read cache hit rate of 84.9%. That means my server almost always has the responsiveness and throughput of solid-state drives, while still retaining the benefits of the large, slow, cheap mechanical disks.
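
The read hit rate comes from counters that dm-cache exposes through `dmsetup status`. Here is a minimal Python sketch that pulls those counters out of one status line, assuming the field order in the kernel's dm-cache documentation: after `<start> <length> cache` come the metadata block size, used/total metadata blocks, cache block size, used/total cache blocks, read hits, read misses, write hits, and write misses. The sample line is invented for illustration and does not come from my server.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    block_sectors: int   # cache block size in 512-byte sectors
    used_blocks: int
    total_blocks: int
    read_hits: int
    read_misses: int
    write_hits: int
    write_misses: int

    @property
    def read_hit_rate(self) -> float:
        lookups = self.read_hits + self.read_misses
        return self.read_hits / lookups if lookups else 0.0

    @property
    def used_fraction(self) -> float:
        return self.used_blocks / self.total_blocks if self.total_blocks else 0.0


def parse_status(line: str) -> CacheStats:
    """Parse one `dmsetup status` line for a dm-cache target."""
    f = line.split()
    # dmsetup prints <start> <length> <target type> before the target's own status.
    if len(f) < 11 or f[2] != "cache":
        raise ValueError("not a dm-cache status line")
    s = f[3:]
    used, total = (int(x) for x in s[3].split("/"))
    return CacheStats(block_sectors=int(s[2]), used_blocks=used, total_blocks=total,
                      read_hits=int(s[4]), read_misses=int(s[5]),
                      write_hits=int(s[6]), write_misses=int(s[7]))


if __name__ == "__main__":
    # Invented sample line, for illustration only.
    sample = ("0 4294967296 cache 8 1296/54272 128 700000/1638400 "
              "900 100 300 700 10 20 5 1 writeback 2 migration_threshold 2048 smq 0 rw -")
    st = parse_status(sample)
    print(f"read hit rate {st.read_hit_rate:.1%}, cache {st.used_fraction:.1%} full")
```

The same used/total block counts are a convenient way to see how much of the cache is actually in use.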

I used to have an SSD and a mechanical drive in my desktop, but I had to decide which data to store on each drive. The best part about dm-cache is that I no longer have to make that decision, because dm-cache makes it for me!

Was dm-cache worth the effort?

For a virtual machine host, it was definitely worth the effort. My KVM host is performing much better since enabling dm-cache—almost as well as if I’d only used solid-state disks for my purposes! This is a great value to me, and I can easily and inexpensively add more rotating disks in the future to expand my storage capacity.

I could have saved $50 to $100 had I used smaller solid-state drives, but I’m pleased with my decision to use the 250 GB Samsung EVO 850 SSDs. They have a larger RAM cache than the 120 GB model, but they are still rated for only 41 GB of writes per day, while the 500 GB Samsung EVO is rated for 82 GB per day. I won’t be needing the extra endurance of the 500 GB model, and the 120 GB model is just too small for me to make use of anywhere else in the future.
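
Those per-day figures line up with spreading a total-terabytes-written (TBW) rating evenly over a warranty period. The sketch below assumes Samsung's endurance ratings of 75 TBW for the 250 GB 850 EVO and 150 TBW for the 500 GB model, over a five-year warranty; treat those inputs as assumptions to check against the spec sheet.

```python
WARRANTY_DAYS = 5 * 365  # assumed five-year warranty, ignoring leap days


def gb_per_day(tbw: float, days: int = WARRANTY_DAYS) -> float:
    """Average daily writes (decimal GB) that use up a TBW rating over `days`."""
    return tbw * 1000 / days


# Assumed endurance ratings, in terabytes written.
for model, tbw in (("250 GB", 75), ("500 GB", 150)):
    print(f"{model}: {gb_per_day(tbw):.0f} GB per day")
```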