I don’t know about you, but I grew up in a house with incandescent light bulbs. In those dark days, we had multiple 60-watt light bulbs in every room. Not 60-watt-equivalent bulbs, but bulbs that actually consumed 60 watts of electricity, and they really weren’t all that bright! We were using the most advanced technology available at the local K-Mart.

My entire homelab and home-network setup uses less energy than one of those light bulbs. At least, I think it does. I have never measured the power consumption of my two extra WiFi access points around the house! I probably squeak in at just under 60 watts even if we count those, but it is possible I am going over by a couple of watts!

I doubt that all of the tips in this blog will apply to your particular home-server setup, but I suspect at least one or two could be useful for you!

- Pat’s Mini PC Comparison Spreadsheet

- Do Refurbished Hard Disks Make Sense For Your Home NAS Server?

- My Thoughts On Brian Moses’s DIY NAS 2025 Edition

- My First Week With Proxmox on My Celeron N100 Homelab Server

- Choosing an Intel N100 Server to Upgrade My Homelab

- How Efficient Is The Most Power-Efficient NAS?

Use fewer hard disks!

This might be some sort of a conundrum.

Larger hard disks tend to cost more per terabyte, but each additional hard disk will increase your electricity bill. Even though power is rather cheap here in Texas, each 5400-RPM 3.5” hard drive running for five years will cost me around $45. That will wipe out any savings I might see from buying two 10 TB drives instead of a single 20 TB drive.

There are a lot of places where electricity costs two or even three times as much. It would probably be a no-brainer to buy the biggest disks available in San Francisco or somewhere in Germany!
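Here is a minimal Python sketch of that math. The 8-watt average draw and the $0.13 per kWh rate are assumptions chosen to land near the $45 figure, not measurements, so swap in your own drive's numbers and your own rate.

```python
HOURS_PER_YEAR = 24 * 365


def running_cost(watts, years, dollars_per_kwh):
    """Cost in dollars of a device drawing `watts` around the clock."""
    kwh = watts * HOURS_PER_YEAR * years / 1000
    return kwh * dollars_per_kwh


if __name__ == "__main__":
    drive_watts = 8.0  # assumption: average draw of one 3.5" hard drive
    base_rate = 0.13   # assumption: dollars per kWh
    # Compare the base rate against places that pay two or three times as much.
    for multiplier in (1, 2, 3):
        cost = running_cost(drive_watts, 5, base_rate * multiplier)
        print(f"{multiplier}x rate: ${cost:.2f} per drive over five years")
```

If the per-drive cost at your rate is bigger than the price gap between two small disks and one large disk, the single large disk probably wins.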

You are probably running a RAID on your NAS, so some of your storage is being used as parity. If you have a 3-disk RAID 5 or RAID-Z1, then 33% of your capacity is devoted to parity. If you have a 6-disk RAID 5 or RAID-Z1, then only 17% of your total capacity will be parity data. When you look at your storage from this angle, there is a big advantage to using as many smaller disks as you can.

You might need to do some math to figure out what makes the most sense for your situation.
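A small sketch of the parity side of that math might look like this. It only counts whole disks, so it ignores filesystem overhead and anything specific to ZFS or mdadm, and the 10 TB disk size is just an example value.

```python
def parity_fraction(total_disks, parity_disks=1):
    """Fraction of raw capacity spent on parity (RAID 5 / RAID-Z1 use one disk)."""
    if not 0 < parity_disks < total_disks:
        raise ValueError("need at least one data disk and one parity disk")
    return parity_disks / total_disks


def usable_tb(total_disks, disk_tb, parity_disks=1):
    """Usable capacity in terabytes, ignoring filesystem overhead."""
    if not 0 < parity_disks < total_disks:
        raise ValueError("need at least one data disk and one parity disk")
    return (total_disks - parity_disks) * disk_tb


if __name__ == "__main__":
    for disks in (3, 4, 6, 8):
        pct = parity_fraction(disks) * 100
        print(f"{disks} disks: {pct:.0f}% parity, {usable_tb(disks, 10)} TB usable")
```

Weigh the capacity you gain from a wider array against the electricity each extra spinning disk will burn.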

I don’t use RAID with mechanical disks anymore. I have less than 10 terabytes of data to store, and that doesn’t grow by much more than 1 terabyte each year. I am fortunate because the largest available hard disks are getting bigger even faster than my data is growing.

I have three large mechanical hard drives. One is in my workstation, one is attached to my N100 homelab server, and one is attached to a Raspberry Pi over at Brian Moses’s house. All my data starts syncing within 30 seconds of being saved, and it gets replicated between those three machines as fast as my network will allow.

It is not entirely unlike having a 3-way mirror spread over five miles. It is now cheap to attach a mini PC to every large hard disk, and I have the minimum number of disks to maintain three separate copies. I think I might be doing a good job here, but I wouldn’t mind having one more backup copy!

- How Efficient Is The Most Power-Efficient NAS?

- Using the Buddy System For Off-Site Hosting and Storage

- Self-Hosted Cloud Storage with Seafile, Tailscale, and a Raspberry Pi

Run fewer servers! Even if they’re not as efficient!

There was a time when your company would have had a room full of specialized servers. The database servers would have been pushing their disks as hard as they could go, but probably had lots of idle CPU power and network bandwidth. Your file servers would have been maxing out their network ports, but they had plenty of CPU power and disk bandwidth being underutilized.

Even ignoring that server hardware costs money, every server you run pays a tax just by being powered on. If you have a busy datacenter, and you can somehow squeeze multiple services with different bottlenecks onto the same hardware, you can save a lot of money. The same can be true in your homelab.

My little N100 mini PC with its 14-terabyte USB hard disk uses about 20 watts of electricity. If I fill that machine up with as much RAM as it will hold, and I fill that meager 32 gigabytes of RAM up to the brim with virtual machines, then I might have to buy a second mini PC to run even more virtual machines. At that point, I will be up at 40 watts.

One of our friends in Discord has a Ryzen 5600G machine in his homelab. If you subtract out the watts from his short stack of 3.5” hard disks, his Ryzen server averages around 25 to 30 watts. His Ryzen 5600G is three times as fast and can hold four times as much RAM as my N100 mini PC.

NOTE: I would trust my math, the measurements, and my memory more if I personally tested the Ryzen 5600G build. It should be accurate enough for demonstration purposes!

I am doing way better as long as I only need one mini PC, but the scales tip in his favor if I outgrow my little machine. Not only that, but his build would idle about the same even with a beefier CPU.
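The tipping point can be sketched in a few lines of Python. This assumes RAM is the only thing that forces you to buy another mini PC, and that every additional mini PC draws the same 20 watts as the first. Keep in mind that the 20-watt figure includes a USB hard disk while the Ryzen figure excludes its disks, so this is a rough comparison at best.

```python
import math

MINI_PC_WATTS = 20    # from the post: N100 mini PC plus its USB hard disk
MINI_PC_RAM_GB = 32   # from the post: the most RAM the N100 box will hold
RYZEN_WATTS = 30      # from the post: upper end of 25 to 30 watts, disks excluded
RYZEN_RAM_GB = 128    # from the post: four times the RAM of the N100


def mini_pc_watts(ram_needed_gb):
    """Total draw of enough mini PCs to hold `ram_needed_gb` of virtual machines."""
    count = max(1, math.ceil(ram_needed_gb / MINI_PC_RAM_GB))
    return count * MINI_PC_WATTS


if __name__ == "__main__":
    for ram in (16, 32, 64, 128):
        print(f"{ram} GB: mini PCs {mini_pc_watts(ram)} W, Ryzen {RYZEN_WATTS} W")
```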

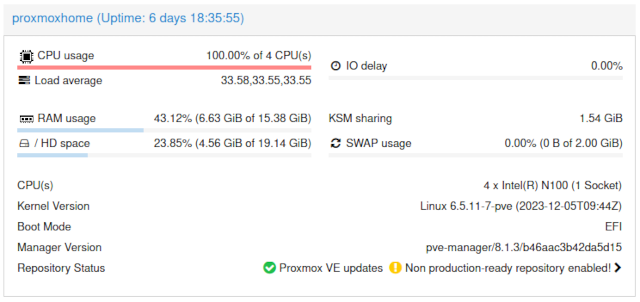

I am in no danger of outgrowing my N100 mini PC.

Don’t scale up too far!

This could be a 2,500-word blog post all by itself. Buying single, bigger, faster machines is a really good value right up until it isn’t! At some point, you will save more money by scaling out.

For every generation of Ryzen CPU so far, it has been about the same price or a little bit cheaper to build two 8-core machines instead of one 16-core machine, and that usually includes loading each 8-core machine with as much RAM as the single 16-core machine.

The 16-core Ryzen machines are not twice as fast as an 8-core. They both have the same size memory bus, so the bigger chip is usually fighting hard to keep busy. A 16-core Ryzen 5950X is only roughly twice as fast as a 6-core Ryzen 5600, even though it has nearly three times as many cores.

Tune your CPU governor

The more powerful your CPU, the more this will help. I wrote a lot of words about experimenting with the conservative CPU governor on my ancient, power-hungry FX-8350 build. That CPU could pull an extra 200 watts out of the wall when it would spin up to full speed. Anything that made the CPU spin up prematurely incurred a pretty big efficiency penalty, so tuning the governor helped quite a bit here!

I focused on Tailscale while tuning all the various knobs. I wanted to make sure that the CPU would spin up to the maximum during a long data transfer, but it didn’t need to ramp up much at all for something that would finish in two or three seconds. I wound up setting the up_threshold for the governor just high enough to make sure I would hit full speed after around 10 seconds of sustained maximum CPU utilization.
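To get a feel for how the knobs interact, here is a very simplified model of the conservative governor. While load stays above up_threshold, the governor raises the clock by freq_step percent of the maximum frequency once per sampling_rate interval. The frequencies and tunable values below are hypothetical, not the settings used on the FX-8350, and a real CPU snaps to whatever frequency steps the hardware exposes, so treat the result as a ballpark.

```python
import math


def steps_to_max(min_khz, max_khz, freq_step_pct=5):
    """Number of upward steps needed to climb from min_khz to max_khz."""
    step_khz = max_khz * freq_step_pct / 100
    return math.ceil((max_khz - min_khz) / step_khz)


def ramp_seconds(min_khz, max_khz, freq_step_pct, sampling_rate_us):
    """Rough time to reach full speed while load stays above up_threshold."""
    steps = steps_to_max(min_khz, max_khz, freq_step_pct)
    return steps * sampling_rate_us / 1_000_000


if __name__ == "__main__":
    # Hypothetical values: 1.4 GHz floor, 4.0 GHz ceiling, 5% steps,
    # and one sample every 750,000 microseconds.
    seconds = ramp_seconds(1_400_000, 4_000_000, 5, 750_000)
    print(f"about {seconds:.2f} seconds to reach full speed")
```

On a machine running the conservative governor, these tunables live under the cpufreq directory in sysfs, and the exact location depends on whether your kernel exposes them globally or per policy.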

My new N100 mini PC homelab server is on the exact opposite end of the spectrum. The difference between absolute 100% CPU utilization at maximum clock speeds compared to idling at the lowest clock speed is only 12 watts. Occasional short spikes that use an extra 12 watts add up to almost nothing.

The bigger the gap between your CPU’s idle power consumption and its full-tilt consumption, the bigger an impact the CPU governor can have. Tuning the governor on my FX-8350 had almost zero impact on apparent performance, but it saved me almost as much power as my new N100 homelab box consumes each day.

Choosing power-efficient peripherals

It is easy to make good macro-level choices in your NAS or homelab build. It is pretty obvious that a mobile N100 CPU will use less electricity than a 96-core AMD EPYC server CPU, and 3.5” mechanical hard disks are all pretty similar as long as they are spinning at the same RPM.

Matt Gadient did a lot of work testing components to push his home server’s power consumption to the limits. He even went as far as disabling the keyboard to save an extra watt. He also tested multiple SATA cards with different chipsets, and he even learned that these cards used different amounts of power when plugged into x4 and x16 PCIe slots.

Trying out multiple SATA cards would be both time-consuming and somewhat costly. Sure, the cards don’t actually cost that much, but buying extra PCIe cards that might wind up in a box in the closet might wipe out any potential savings you will see on your electric bill!

Should you buy a more efficient power supply?

You should do the math to verify this, but the best power supply to use is the one you already have. The most efficient power supplies aren’t THAT much more efficient than the worst power supplies. You already spent $40 to $80 or so on the PSU that you already own, and you’d have to spend another $50 to $100 to buy something more efficient. You will never make up the difference on your electric bill.

You will see a bunch of 80 PLUS ratings for power supplies. The fancier the metal used in the name, the more efficient the power supply will be. The trouble is that these power supplies are most efficient at 50% load, and the graphs you will usually find for these power curves don’t even show you the efficiency below 20% load.

There is a very good chance your home server spends most of its day closer to 5% of your power supply’s maximum load. Pretty much every power supply is inefficient when it is barely utilized.

Let’s put this into perspective with some questionable math. If your server is idling at 30 watts with an 80% efficient power supply, and you upgrade to a 90% efficient power supply, then your server will idle at about 27 watts. That would save one kilowatt hour every two weeks. That is less than $4 per year where I live.
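The same questionable math as a Python sketch, for anyone who wants to plug in their own numbers. The $0.13 per kWh rate is an assumption, and real power supplies are usually less efficient at very low loads than their rating suggests.

```python
HOURS_PER_YEAR = 24 * 365


def wall_watts(dc_load_watts, efficiency):
    """Power pulled from the wall to deliver `dc_load_watts` to the components."""
    return dc_load_watts / efficiency


def upgrade_savings_watts(old_wall_watts, old_efficiency, new_efficiency):
    """Watts saved at the wall by swapping to a more efficient power supply."""
    dc_load = old_wall_watts * old_efficiency
    return old_wall_watts - wall_watts(dc_load, new_efficiency)


if __name__ == "__main__":
    saved = upgrade_savings_watts(30, 0.80, 0.90)
    kwh_per_year = saved * HOURS_PER_YEAR / 1000
    rate = 0.13  # assumption: dollars per kWh
    print(f"saves {saved:.2f} W, {kwh_per_year:.1f} kWh and ${kwh_per_year * rate:.2f} per year")
```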

You might be able to make some significant gains here if you are able to use a PicoPSU matched with an efficient GaN power supply, but either half of that combination costs as much as or more than a regular PC power supply. I suspect you would wipe out any monetary savings even in a region with extreme electricity costs.

If you can get your hard disks to go to sleep, that can save a ton of power!

This is tricky. The first problem is that parking the heads and spinning the motors up and down causes extra wear and tear on the hard drive. This isn’t as big of a problem as it was 15 years ago, but it still isn’t exactly fantastic.

If your hard drives are only spinning up and down three or four times each day, that’s great. If they are spinning up and down a dozen times an hour, then you may wind up wasting all the money you saved on your electric bill replacing dead hard drives.
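A quick count shows how far apart those two scenarios are. The five-year lifespan is an assumption, and the sketch deliberately doesn't guess at a rating. Compare the totals against the start/stop or load/unload cycle rating on your own drive's datasheet.

```python
DAYS_PER_YEAR = 365


def spin_cycles(cycles_per_day, years):
    """Total spin-up/spin-down cycles over the life of the drive."""
    return round(cycles_per_day * DAYS_PER_YEAR * years)


if __name__ == "__main__":
    years = 5  # assumption: how long the drive stays in service
    print("four times a day:", spin_cycles(4, years))
    print("a dozen times an hour:", spin_cycles(12 * 24, years))
```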

The other problem is that it is challenging to get hard disks to spin down using any traditional RAID setup. You could probably do a good job here if you use Unraid, but if you’re using Linux’s mdadm RAID or ZFS, this isn’t something you will be able to do a good job of optimizing.

I tried pretty hard to tune lvmcache to the extreme to keep my old homelab server’s RAID 10 from spinning up, but it didn’t work out nearly as well as I had hoped.

- Do Refurbished Hard Disks Make Sense For Your Home NAS Server?

- Using dm-cache / lvmcache On My Homelab Virtual Machine Host To Improve Disk Performance

You are paying a power tax as soon as you boot your first virtual machine

This has gotten way better over the years. In ancient times, booting the most basic and barebones virtual machine on your laptop would absolutely demolish your battery life. Just having a virtual machine idling along still has an impact today, but it isn’t quite as bad.

I am looking at my notes on my N100 mini PC, and I am not confident in how I was notating things the first few days that I was testing. I hope this is correct, because I don’t have an easy way to retest any of this today!

It looks like my mini PC averaged 9 watts of power consumption with Proxmox installed but no virtual machines or containers installed. That goes up to 12 watts as soon as I booted a couple of completely idle virtual machines. Three watts doesn’t feel like much, but that is an extra 33%!

You sort of only pay this idling tax on the first virtual machine. Sure, every virtual machine you boot will be running a kernel that will be waking up the CPU a few hundred times each second, and adding more machines will make it even harder for the CPU to reach and stay in its deeper sleep states. Even so, you pay the biggest penalty booting that first virtual machine. More mostly idle virtual machines are not a big deal.

You won’t be able to prevent this. It is just something to keep in mind when comparing your NAS build to a friend’s NAS build. If you are running a handful of virtual machines, but your friend is just sharing files, then this may very well be the reason you’re burning an extra 10 or 15 watts!

Too much cooling can add up to quite a bit of power!

My anecdote on this topic is about the time I pulled two unnecessary 120 mm case fans out of my old homelab box. These were plugged directly into 12-volt power, so they were spinning at full speed, which means they were using as much power as they could.

Those two fans were using 6 watts all day long. My N100 mini PC idled at between 6 and 7 watts before I installed any virtual machines.

This little guy has FIVE NVMe slots!

If you built your own homelab server using consumer-grade parts, then I don’t expect you will be able to save 6 watts, but I bet dialing back your fan curves could make a small impact.

I suspect this could have a bigger impact if you are using older rack-mount server hardware. Especially with the high-pressure fans in 1U and 2U servers!

Your CPU doesn’t need to be ice cold. It will run just as well at 30C as it will at 85C. There might be a few dollars to save if you aren’t trying to overcool your servers.

Rack-mount network gear is usually a power hog!

This isn’t always true. You should always check the specs, but it is true the majority of the time. Especially if you’re buying used enterprise gear on eBay.

I prefer my home network gear to be small, relatively low power, and fanless. The heart of my home network lives in my network cupboard. I helped Brian Moses build out that cupboard in 2011, and we picked out a pair of small 8-port gigabit Ethernet switches. I bought the house from Brian six years ago, and those same two switches are still chugging along.

I don’t remember what I might have been troubleshooting when I left that long blue patch cable in there, but I am not using it any longer!

Devices have fans when they tend to generate a lot of heat. Devices generate heat because they use a lot of electricity. Not only that, but fans tend to fail when they run 24 hours every day for a decade. I expect my fanless gigabit Ethernet switches will still be able to do their job a decade from now, but they will likely be replaced with fanless 2.5 gigabit Ethernet switches before then!

The pair of old switches and the Linksys WRT3200ACM running OpenWRT that handles my symmetric gigabit fiber Internet connection consume a combined total of 18.75 watts. That includes any small overhead from the small APC 425VA UPS. There isn’t a single fan to be found in the cupboard.

I may be saving twice as much money as I think I am!

I am sitting in Plano, Texas. We run the air conditioning nine months out of the year. Every watt that goes into my computers turns into heat, and I have to spend even more watts powering the air conditioner to pull that additional heat back out of the house.
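How much extra you save depends on how efficient the air conditioner is. Here is a rough sketch using the coefficient of performance (COP), which is the number of watts of heat moved per watt of electricity consumed. The COP values are assumptions for illustration, not measurements of any particular air conditioner, and the sketch ignores the months when the extra heat might actually be welcome.

```python
def savings_multiplier(cop, cooling_fraction):
    """Total watts saved per watt removed from the computers, averaged over a year."""
    if cop <= 0:
        raise ValueError("cop must be positive")
    if not 0 <= cooling_fraction <= 1:
        raise ValueError("cooling_fraction must be between 0 and 1")
    return 1 + cooling_fraction / cop


if __name__ == "__main__":
    cooling_fraction = 9 / 12  # from the post: A/C runs nine months of the year
    for cop in (1.0, 2.0, 3.0, 4.0):  # assumption: plausible range to explore
        multiplier = savings_multiplier(cop, cooling_fraction)
        print(f"COP {cop}: each watt saved is worth {multiplier:.2f} watts")
```

The less efficient the air conditioner, the closer the multiplier gets to two.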

The heat used to be a more direct problem for me. In our old apartment, my home office was on the second floor, and heat rises. I was on the south-facing side of the building, so I got a lot of heat from the sun. I also had one of the weakest vents in the apartment.

My home office would get quite toasty on July afternoons whenever I fired up Team Fortress 2. Any watt not being spent in my office was a watt not making me warmer!

But Pat! I need a cluster of mini PCs for educational purposes!

You should think about using virtual machines for testing purposes even if electricity isn’t a consideration.

You can run nested virtual machines, so you could build up a Proxmox test environment on your Proxmox server. You can set up three or four Proxmox virtual machines and treat them as if they were physical boxes. You can cut the virtual power cord to test what happens when a node fails. You can disable network interfaces to simulate pulling patch cables out of the wall. You can write junk data to one of the virtual disks to see what happens when a disk goes sideways.
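Nested virtualization has to be enabled in the host's KVM module before any of this works. Here is a small Python sketch that checks the module parameter on a Linux host. It assumes the standard kvm_intel and kvm_amd sysfs locations, and the helper names are made up for this example.

```python
from pathlib import Path

# Standard locations of the KVM "nested" module parameter on Linux.
NESTED_PARAM_PATHS = (
    Path("/sys/module/kvm_intel/parameters/nested"),
    Path("/sys/module/kvm_amd/parameters/nested"),
)


def parse_nested_flag(text):
    """The parameter reads as Y/N or 1/0 depending on the kernel and module."""
    return text.strip().upper() in {"Y", "1"}


def nested_virtualization_enabled(paths=NESTED_PARAM_PATHS):
    """Return True or False, or None if no KVM module parameter was found."""
    for path in paths:
        if path.exists():
            return parse_nested_flag(path.read_text())
    return None


if __name__ == "__main__":
    print("nested virtualization enabled:", nested_virtualization_enabled())
```

A result of None usually means the KVM module isn't loaded, or you are not running this on a Linux host.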

You could build a Kubernetes cluster using a stack of virtual machines on a single Proxmox host. You could build some sort of virtual Docker cluster. You can build whatever you want on a single host as long as it has enough RAM.

One of the biggest advantages to using a single, big virtual machine host to test your clustering skills is that you can always add one more node to your cluster for free. If you buy three mini PCs, then you have three mini PCs. If you want to add a fourth node, you will have to buy a fourth mini PC.

This setup would be to help you learn things. It doesn’t matter if your three-node Proxmox cluster has that single point of failure that can take the whole thing down. You aren’t trying to run a big company’s IT department. You are running a test cluster.

Conclusion

There is some low-hanging fruit here that is definitely worth picking, especially if your new home server will be running every hour of every day for the next five years. You just have to figure out where to draw the line.

Maybe you enjoy squeezing every ounce of efficiency out of your setup. If you are having fun, then keep going! If you are only trying to save a few bucks, just remember that your time isn’t free. If it takes you five hours to save $20 per year on your electric bill, then that might not be enough savings to justify the time spent, and maybe you should have spent that time doing something fun! Only you can figure out the balance.

If you’re curious how my Linux workstation setup has evolved beyond just efficiency, I recently wrote about five weeks with Bazzite on my workstation.

What does your homelab look like? Do you have a full 42U rack full of beefy servers and enterprise-grade network gear? Do you have a tight little cluster of mini PCs? Or maybe you are just using an old workstation packed full of extra hard disks and RAM?! Tell me about your homelab in the comments, or stop by the Butter, What?! Discord server to chat with me about it!

- Can You Save Money By Changing the CPU Frequency Governor on Your Servers?

- Should You Buy An Intel X540-T2 10-Gigabit Ethernet Card?