I am sure there will be somewhere around 2,000 words by the time I get done saying everything I want to say, but I most definitely will not make you read them all to learn the answer to this question.

The answer is yes. At least on my own aging homelab server, in my own home, with my particular workload. I am probably going to be saving just a hair under $10 per year by switching to the conservative CPU governor with some minor tweaks to the governor settings.

You don’t even have to wait to see my extra tweaks. Here’s the script I run at boot to switch to the conservative governor and tweak its settings:

My motivation

I moved my homelab server out of my office to the opposite side of the house. It now lives on a temporary table underneath my network cupboard. This network cupboard used to belong to my friend Brian Moses, but it is mine now. Should I write up what I’ve done with it since acquiring the house?!

I had to unplug the server before moving it and its UPS across the house, so I figured I could plug it into a Cloudfree outlet and monitor its power usage in Home Assistant. Once that happened, I couldn’t help but monitor power usage at various maximum clock frequencies, during benchmarks, and while testing all sorts of other things.

Power is heat, and heat is the enemy here in Texas

I am quite a few years late for this change to have a significant impact on my comfort. In our old apartment, my home office was on the second floor on the south side of the building, and that room had particularly poor airflow from the HVAC system. Heat rises, the sun shines from the south, and you need airflow to stay cool.

I know we didn’t get to the numbers yet, but my changes may have dropped my heat generation by nearly 60 BTUs. That would have made a noticeable impact on the temperature of my old office in July and August.

My new office at our house has fantastic airflow. The only time I get warm is when I close the door and turn off the air conditioning to keep the noise down while recording a podcast.

That was the real motivation for moving the homelab server out of the room. Sure, that got 300 unnecessary BTUs out of here, but the important thing is that there are now four fewer hard drives and nearly as many fans spinning away near my microphone.

Here in Plano, TX, we wind up running our air conditioning eight or nine months of the year. I wouldn’t be surprised if we would spend $5 per year to cool the heat that would have been generated by that extra $10 of electricity.

The specs of my homelab server

I wrote a blog post in 2017 about upgrading my homelab server to a Ryzen 1600. I almost made a similar upgrade to my desktop the next year, but instead I decided to save some cash and I just swapped motherboards. My desktop machine is a Ryzen 1600 now, and my homelab is an old AMD FX-8350. Here are the specs:

- AMD FX-8350 at 4.0 GHz

- 32 GB DDR3 RAM

- Nvidia GT710 GPU

- 2x 240 GB Samsung 850 EVO drives

- 4x 4 TB 7200 RPM drives in RAID 10

When the FX-8350 was in my desktop machine, I had it overclocked to 4.8 GHz. I don’t think I have the exact numbers written down anywhere, but I recall that squeezing the last 300 MHz out of the chip would use an extra 90 or 100 watts on the Kill-A-Watt meter. The first thing I did on the homelab was turn the clock down to 4 GHz in the BIOS. I think it is supposed to be able to boost to 4.2 GHz when only two cores are active, but I had boost disabled when I was overclocking, and it is still disabled today.

This is what I know from measuring power over the last few weeks and scouring old blog posts for power data. My power-hungry FX-8350 machine never goes below 76 watts at the smart outlet. Old blog posts suggest that about 20 watts of that is going to the four hard disks, and up to another 19 watts could be consumed by the overly complicated GPU.

I am not done collecting data. I will clean up this table when I am finished. In the meantime, though, here are all the things I know so far:

All the power numbers are measured at the Cloudfree smart plug.

I am not really saving $10 per year

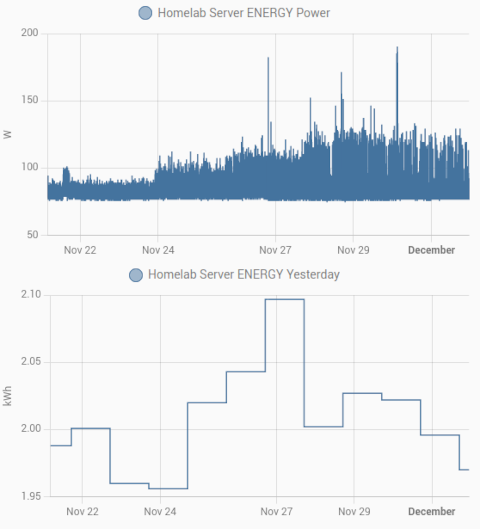

I have had the clock speed of my FX-8350 capped at 2.0 GHz ever since I removed the Infiniband cards from my network. You can probably see from the chart that this is only 0.02 kWh per day, and I have learned that 0.02 kWh per day for a year only works out to about $0.50.

I was already saving $9.50 per year by capping the CPU speed at the absolute minimum, but I was also slowing everything down. Switching to the conservative governor and making a few tweaks both made my homelab server faster and saved me the next $0.50. I think that is a nice win!

The motivation for wanting a faster homelab server

My personal network and computing environment is heavily reliant on Tailscale. Tailscale is a mesh VPN that effectively makes my computers seem like they’re all on the same local network no matter where each machine is located in the world. I have been trying to leverage the security aspect of this more and more as time goes on, and one of the things I have been doing is locking down my network services so they are only available on my Tailnet.

NOTE: The first graph is misleading, because pixels are so wide! The graph always shows both the highest and lowest reading during that time period, but it can’t show you just how little time the server spent at the peak.

I have almost entirely eliminated my reliance on my NAS, but every once in a while I need to move some data around the network. As you can probably see in my charts, Tailscale tops out at around 350 megabits per second when I limit the server to 2.0 GHz. It is capable of going twice as fast as this, and even though that isn’t saturating my gigabit Ethernet port, it is still faster!

My testing methodology

My Cloudfree smart outlet runs the open-source Tasmota firmware. Tasmota keeps track of the previous day’s total power usage. I don’t know if you can set the time of day when this resets, but my outlets all cross over to the next day at 5:00 p.m. This is a handy time of day for checking results and setting new values for the next run.

All that data is stored in Home Assistant, so I can always go back and verify my numbers.

All of the most important tests were run for a full 24 hours. Some of the numbers in the middle are probably lazy. If I didn’t get a chance to adjust the governor until 6:00 p.m., I figured that extra hour at the previous setting wouldn’t skew the data significantly.

I would always wait for a full day when switching between the extreme ends of the scale.

NOTE: You probably shouldn’t buy an old-school Kill-A-Watt meter today. Lots of smart outlets have power meters, and you can set those up to log your data for you, and you can even check on them remotely. They also cost less than a Kill-A-Watt. The Cloudfree plugs that I use are only $12 and ship with open-source firmware.

My goals when tweaking the conservative CPU governor

I wanted to make it difficult for the CPU to sneak past the minimum clock speed. If something was really going to need CPU for a long time, I most definitely wanted the CPU to push its clock speed up.

I don’t have a good definition for what constitutes a long time. I figured that if I am going to scp a smaller volume of data around, I don’t really care if the task runs for 10 seconds at 1.4 GHz instead of 5 seconds at 4.0 GHz.

I will probably start to care if a 20-minute file copy takes 40 minutes.

I wasn’t able to plot a precise point on that continuum. I was able to slow down the clock speed increase by raising the sampling_rate, but when I pushed it too far, my iperf tests were never able to get past 2.8 GHz.

My tweaks to the conservative governor settings

I raised both the down_threshold and up_threshold above the defaults. I figured this would add some friction on the way up while making the trip back down to 1.4 GHz a little faster.

I bumped the sampling_rate from the default of 4,000 microseconds to 140,000 microseconds. In my tests, anything at 180,000 microseconds or higher wouldn’t let the CPU reach full speed. I may try lowering this value, but it takes 24 hours to verify the results.
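If you would rather apply these settings from Python than from a shell script, a minimal sketch might look like the one below. It assumes the usual cpufreq sysfs layout, with the conservative tunables in one system-wide directory. The sampling_rate value comes from the paragraph above, but the two threshold values are hypothetical placeholders, because the exact numbers are not stated here. The function name and the `cpu_root` parameter are made up for illustration.

```python
from pathlib import Path

# Usual cpufreq location in sysfs. This sketch assumes the conservative
# tunables are system-wide; some kernels expose them per policy instead.
CPU_ROOT = Path("/sys/devices/system/cpu")

# sampling_rate is the value from the text. The thresholds are hypothetical:
# the text only says they were raised above the defaults.
TUNABLES = {
    "sampling_rate": 140000,  # microseconds
    "up_threshold": 90,       # hypothetical value
    "down_threshold": 40,     # hypothetical value, must stay below up_threshold
}


def apply_conservative(cpu_root: Path = CPU_ROOT, tunables: dict = TUNABLES) -> None:
    """Select the conservative governor on every core, then write its tunables."""
    # Switch the governor first. The tunables directory only exists while
    # the conservative governor is active.
    for governor in sorted(cpu_root.glob("cpu[0-9]*/cpufreq/scaling_governor")):
        governor.write_text("conservative\n")

    tunable_dir = cpu_root / "cpufreq" / "conservative"
    for name, value in tunables.items():
        (tunable_dir / name).write_text(f"{value}\n")
```

Calling `apply_conservative()` needs root on a real machine. Switching governors resets these tunables, so the whole function has to run again after any governor change, not just the first half.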

Every time I made a change to the conservative governor, I would run three consecutive iperf tests. Why did I run three 10-second tests instead of a single 30-second test?

It seemed like the small delay when reconnecting between the tests would allow the CPU to clock down a notch or two. That seemed like a helpful simulation of what the real world might be like.

I didn’t use a stopwatch. I didn’t set up a script to watch the clock speed to let me know when we were reaching the maximum. I just ran cpufreq-aperf and counted hippopotamuses before seeing 4,000,000 in the output. I guess it helps that cpufreq-aperf updates once every second!

I didn’t really need an exact number, but it was easy to see how quickly the CPU was ramping up. I think I wound up at a point where the CPU bumps up one frequency notch faster than once every two seconds, but slower than once per second.

That means my iperf test reaches full speed in about five seconds. It also doesn’t dip more than one notch between runs.
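Counting hippopotamuses works fine, but if you want a number, the ramp-up time can be roughly timed with a small polling loop. This is only a sketch under a few assumptions: it reads `scaling_cur_freq` for core 0 from sysfs, which reports the frequency the kernel last requested in kHz and is not the same measurement that cpufreq-aperf makes, and it watches a single core even though the load may land on a different one. The function names and defaults are made up for illustration.

```python
import time
from pathlib import Path

# Frequency of core 0 in kHz, as exposed by the cpufreq sysfs interface.
CUR_FREQ = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")


def read_khz(path: Path = CUR_FREQ) -> int:
    """Return the frequency currently reported for this core, in kHz."""
    return int(path.read_text().strip())


def seconds_to_reach(target_khz: int, path: Path = CUR_FREQ,
                     interval: float = 0.25, timeout: float = 60.0):
    """Poll until the core reports target_khz or more.

    Returns the elapsed seconds, or None if the timeout passes first.
    """
    start = time.monotonic()
    while time.monotonic() - start < timeout:
        if read_khz(path) >= target_khz:
            return time.monotonic() - start
        time.sleep(interval)
    return None
```

Start an iperf run in one terminal and call `seconds_to_reach(4_000_000)` in another. A result of None means the CPU never reached full speed within the timeout, which is the failure mode described above for very high sampling_rate values.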

I think that is pretty reasonable. I can reach or at least approach 600 megabits per second via Tailscale in less than 10 seconds while only using 0.01 kWh more throughout the day than if I locked the machine at 1.4 GHz.

Is all this work worth $10 per year?!

The important thing is that you don’t have to do all this work. I spent a few hours making tweaks and recording data to learn that just switching to the conservative governor would save me 75% as much as forcing my lowest clock speed, and I spent even more hours tweaking the governor to claw back the next 24%.

All you have to do is spend two or three minutes switching governors or applying my changes. You don’t have to hook your server up to a power meter to see if it is actually working, but you aren’t running my ancient and power-hungry FX-8350, so your mileage will almost certainly vary.

I imagine this helps less if you already have an extremely efficient processor.

Why wouldn’t you want to make this change?

My ancient server is overkill for my needs. I run a handful of virtual machines that are sitting idle most of the day, and they could all manage to do their job just fine with the smallest mini PC.

Maybe you run servers that need to be extremely responsive. Google measures your web server response time as part of their search rankings. If your web server is locked at full speed, it might be able to push your blog up a notch in the search rankings, and that would be worth so much more than $10 per year!

Most of us aren’t going to notice things slowing down. Especially if you just switch governors instead of applying my extreme tweaks. It isn’t like your CPU is going to be in sleep states more often with the conservative governor. It can still do work while cruising at a low speed.

The best server hardware is almost always the hardware you already have

Every time my home server gear starts getting old, I start thinking about buying upgrades. This old FX-8350 box is eating up $93 in electricity every year. $73 of that is the compute side, and about $20 is the storage.

If I wait for a deal, I could swap out the old 4 TB hard drives for a 14 TB hard drive for around $200. If we ignore the fact that I need more storage and these drives are getting scary old, I can save $14 per year here. That’d pay for the new hard drive sometime in the next decade.

NOTE: We post hard drive, SSD, and NVMe deals in the #deals channel on the Butter, What?! Discord server almost every day!

When I do this math, I always assume I am going to be buying something bigger, faster, and better. A new motherboard will be $100. A new CPU will be at least $200. New RAM will be at least $150. I am assuming I can reuse my case and power supply.

Even if this magical new machine uses zero electricity, it would take six years to pay for itself in power savings. If it only uses half as much power, it will take 12 years.

I think this is the first year where I can pay for a server in energy savings!

My next upgrade might be very different! I am very seriously considering replacing my homelab server with the slowest Beelink mini PC you can buy. The Celeron N5095 model sometimes goes on sale for $140. I have some free RAM here to upgrade it, and the little Beelink would probably use less than $10 in electricity every year.

It would cost me $340 for a Beelink and a 14 TB USB hard drive to hang off the back. The two pieces of hardware combined might only cost me about $16 per year in electricity. That would completely pay for itself in power savings in about 4.5 years. Maybe less than three years if we include the costs of cooling.
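The payback math in this section is just the purchase price divided by the yearly savings. Here is a small sketch using the dollar figures quoted above. The function name is made up, and cooling costs are left out.

```python
def payback_years(hardware_cost: float, old_annual: float, new_annual: float) -> float:
    """Years until the yearly electricity savings equal the purchase price."""
    savings = old_annual - new_annual
    if savings <= 0:
        raise ValueError("new hardware must cost less to run than the old hardware")
    return hardware_cost / savings


# Beelink plus 14 TB USB drive: $340 up front, $93 per year today,
# an estimated $16 per year afterwards.
print(round(payback_years(340, 93, 16), 1))    # 4.4

# New motherboard, CPU, and RAM ($100 + $200 + $150) against the $73
# compute share, first at zero power and then at half the power.
print(round(payback_years(450, 73, 0), 1))     # 6.2
print(round(payback_years(450, 73, 36.5), 1))  # 12.3
```

These line up with the rounded figures in the text: roughly six and 12 years for a conventional upgrade, and about four and a half years for the Beelink and drive together.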

I don’t like lumping in the 14 TB hard drive with the Beelink. I am quickly running out of storage on my server, and I am planning on replacing those four drives with a single 14 TB drive after my next inevitable disk failure. I will be retiring those hard drives soon whether I retire the FX-8350 or not!

The Beelink would pay for itself in electricity savings in two years. No problem.

It is exciting that this is even a possibility, but it is a bummer because this is a downgrade in many ways. The Beelink doesn’t have six SATA ports and the bays to hold those drives. The Beelink doesn’t have PCIe slots for upgrades. My FX-8350 is 50% faster than the N5095, but it is possible that the N5095’s AES instructions would give it a significant Tailscale boost!

But the Beelink is tiny, quiet, and capable of doing the work I need. I am excited that it is literally small enough to fit in the network cupboard!

There are more capable Beelink boxes. The Beelink model with a Ryzen 5 5560U would be a pretty good CPU and GPU upgrade, but I don’t need more horsepower, and that $400 Beelink wouldn’t save me enough power to pay for itself before I’d likely retire it.

Of course this gets complicated because I have no idea how to account for prematurely turning my FX-8350 server into e-waste.

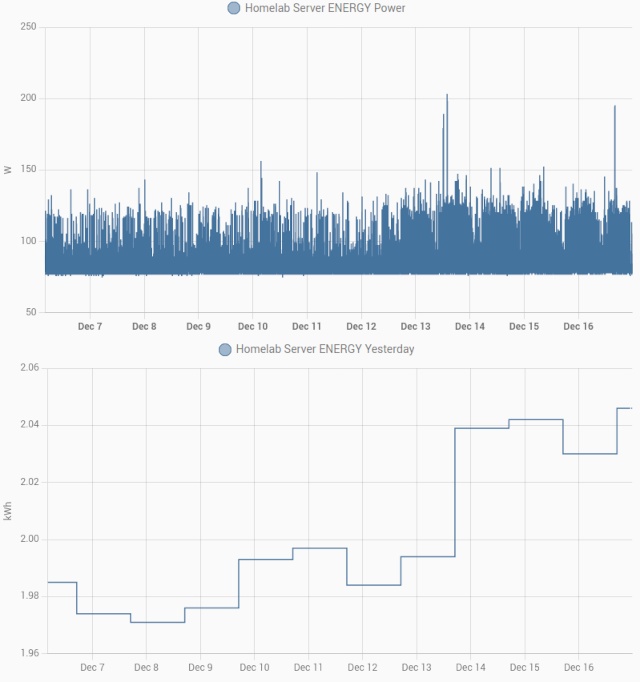

UPDATE: Power use is up a bit, and I am not sure why!

You can see two spikes there on December 13. The first one was when I upgraded to the noticeably faster Tailscale release. I was excited to see my speeds go from 612 megabits to 785 megabits per second. An hour later I realized that the numbers might go up a bit if I switched back to the ondemand CPU governor. That got me up to 810 megabits per second.

I did remember to switch back to the conservative governor, but I noticed that my power graphs didn’t drop back down. That’s when I learned that switching governors also resets the tweaks I made to some of the knobs on the conservative governor.

It wasn’t until I wrote the two previous paragraphs that I noticed that my monkeying around with Tailscale isn’t the problem. The graph got all sorts of chonky about 24 hours before that!

If you’ve ever carried virtual machines around on your laptop, then you probably already know that VMs and power savings don’t usually fit together well. Sometimes things work out alright, but other times it is easy for one of those machines to keep the CPU awake, and your 8-hour battery on your laptop winds up lasting only 3 hours.

I will report back when I know more, but I figured it was worth noting that we have to keep an eye on our CPU governor tweaks! Things aren’t really going all that badly. The ondemand governor used 2.2 kWh each day. The good days on the conservative governor used 1.97 kWh. My worst day so far since December 13 is at 2.05 kWh.

I am still closer to the low end than the high end!
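To turn daily kWh readings like these into yearly dollars, multiply by 365 and by your electricity rate. The sketch below uses the three daily figures above. The rate of $0.12 per kWh is an assumption for illustration only, so substitute the number from your own bill.

```python
def annual_cost(kwh_per_day: float, dollars_per_kwh: float) -> float:
    """Yearly electricity cost for a steady daily energy use."""
    return kwh_per_day * 365 * dollars_per_kwh


RATE = 0.12  # assumed price per kWh, not a figure from this post

readings = {
    "ondemand": 2.2,               # kWh per day
    "conservative, good day": 1.97,
    "conservative, worst day": 2.05,
}

for label, kwh in readings.items():
    print(f"{label}: ${annual_cost(kwh, RATE):.2f} per year")

# Yearly difference between ondemand and a good conservative day.
saved = annual_cost(2.2, RATE) - annual_cost(1.97, RATE)
print(f"saved: ${saved:.2f} per year")
```

The result scales linearly with the rate, so a cheaper or pricier power plan shifts every line by the same proportion.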

Conclusion

I am just one guy testing his one ancient homelab server. I’ll probably find a way to do a comparable test on at least one more piece of hardware, but this is still just me. I want to hear from you!

Are you going to try this out? Do you have an old Kill-A-Watt meter or a smart outlet capable of measuring power usage? If you happen to do a before-and-after test with and without the conservative Linux CPU governor, I would absolutely love to hear about it! You can leave a comment, or you can stop by the Butter, What?! Discord server to chat with me about it!