This blog won’t have any how-to documentation. I don’t even know for sure if everything I think I know about my new AMDGPU software setup is actually correct, and I certainly don’t know that I am doing anything in any sort of a recommended way. The Radeon RX 6700 XT that I bought last month is the first AMD GPU I have owned since running Linux on a Dell Inspiron laptop with a Core 2 Duo processor. I believe I was still using that laptop when I started this blog in 2009!

I figured I ought to write down how I feel about things, and document some of the things that I have learned, and probably talk about the mistakes that I have already had to correct.

- Oh No! I Bought A GPU! The AMD RX 6700 XT

- My New Radeon 6700 XT — Two Months Later

- MSI Radeon RX 6700 XT at Amazon

The tl;dr

If you only care about gaming, you should buy an AMD Radeon GPU. AMD’s pricing is so much better than Nvidia’s. You almost always get more VRAM from a comparable AMD Radeon. Everything related to gaming works great out of the box with an AMD GPU using open-source drivers on Linux.

If you need OpenCL to run DaVinci Resolve, you may currently be out of luck with a 7900 XT or 7900 XTX. At least for now. If you have an older AMD GPU, you should be able to get OpenCL installed, but it isn’t going to just work right out of the box.

If you’re interested in machine learning stuff, then you should probably be considering an Nvidia GPU. The situation here is improving, but it is improving slowly.

NOTE: I believe the recent ROCm 5.5 release has support for RDNA3 GPUs!

Six of one and half a dozen of the other

Everyone on Reddit says that the Linux experience with an AMD Radeon GPU is far better than with Nvidia GPUs. I can’t say that they are completely wrong, but they aren’t entirely correct.

I don’t know about every Linux distribution, but Ubuntu makes it really easy to get up and running with the proprietary Nvidia drivers. I am pretty sure Nvidia’s proprietary driver ships with the Ubuntu installer.

Absolutely everything works once the Nvidia drivers are installed. You will have accelerated video encoding. Your games will run fast. You will be able to run Stable Diffusion with CUDA, and OpenCL will function. DaVinci Resolve will work.

If I replaced my Nvidia GTX 970 with any newer Nvidia GPU up to the RTX 4090, I wouldn’t have had to do a thing. I would have already had the drivers installed, and everything would have just continued to work.

It definitely looked like I was in good shape immediately after swapping in my new 6700 XT. My machine booted up just fine. Steam fired up. Games were fast!

Then I noticed that DaVinci Resolve wouldn’t open. I didn’t have OpenCL libraries installed. The documentation about this is contradictory, so I am assuming something changed here fairly recently. I thought I had to install the AMDGPU-PRO driver instead of the open-source AMDGPU driver to get OpenCL to work. Don’t do that.

That is what I did at first, because I thought I had to, and it was horrible! The proprietary AMDGPU-PRO driver is much slower than the open-source AMDGPU driver. I quickly figured out that you can use AMD’s tooling to install their ROCm and OpenCL bits, and they will happily install and run alongside your AMDGPU driver.
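If you want a quick sanity check that an OpenCL implementation is registered at all, here is a minimal sketch. It assumes the conventional Linux ICD loader layout, where each vendor drops a small `.icd` file into `/etc/OpenCL/vendors`. The function name `list_opencl_icds` is made up for this example, and your distro or install method may put things elsewhere.

```python
from pathlib import Path


def list_opencl_icds(vendors_dir="/etc/OpenCL/vendors"):
    """Return {icd_file_name: library_name} for each registered OpenCL ICD.

    Assumption: the usual Linux ICD loader layout, where every *.icd file
    contains the name or path of one vendor's OpenCL library.
    """
    directory = Path(vendors_dir)
    if not directory.is_dir():
        return {}
    icds = {}
    for icd_file in sorted(directory.glob("*.icd")):
        text = icd_file.read_text().strip()
        # Only the first line matters; an empty file maps to an empty string.
        icds[icd_file.name] = text.splitlines()[0].strip() if text else ""
    return icds


if __name__ == "__main__":
    found = list_opencl_icds()
    if not found:
        print("No OpenCL ICDs registered; OpenCL apps will probably not start.")
    for name, library in found.items():
        print(f"{name}: {library}")
```

An empty result only tells you that nothing is registered. A non-empty result doesn't prove that the library actually loads or that Resolve will be happy with it.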

At that point I was in pretty good shape. DaVinci Resolve worked. My games ran well. I believe I had working video encoder acceleration with VAAPI. I didn’t stop here, though, so my setup is currently a little quirky. When I upgraded Mesa, I lost hardware video encoding support.

I am pretty sure that if you have an RDNA3 card, like the 7900 XT or 7900 XTX, then you will not be able to have working ROCm or OpenCL at this time.

AMD is way ahead of Nvidia on performance per dollar

This isn’t specific to Linux. How much value an AMD GPU provides kind of depends on how you are looking at things.

If you choose a GPU from each vendor with similar performance in most games, the AMD GPU will benchmark much worse than the Nvidia GPU as soon as you turn ray tracing on. That makes the AMD card seem like it isn’t all that great.

Except that the AMD GPU is going to be quite a bit cheaper.

Things look so much better if you choose an AMD GPU and an Nvidia GPU with similar ray-tracing performance. The AMD GPU will probably still cost a few dollars less, have more VRAM, and it will outperform the Nvidia GPU by a huge margin when ray tracing isn’t involved.

One of the problems here is that the AMD RX 7900 XTX is the fastest AMD GPU available, and its performance in ray-tracing games falls pretty far behind Nvidia’s most expensive offerings. If you just have to have more gaming performance than the 7900 XTX has to offer, AMD doesn’t have anything available for you to buy.

As far as gaming is concerned, I am starting to think that the extra VRAM you get from AMD is going to be important.

I didn’t buy a top-of-the-line GPU, so I am not unhappy that my 6700 XT only has 12 GB of VRAM. It is looking like the 12 GB of VRAM on the Nvidia RTX 4070 is going to make the card obsolete long before its time, but the RTX 4070 also costs nearly twice as much as my 6700 XT.

Support for machine learning with AMD GPUs isn’t great

AMD will sell you a GPU with 24 GB of VRAM for not all that much more than half the price of a 24 GB RTX 4090. This should be such an amazing GPU for running things like LLaMa!

AI is dominated by CUDA, and CUDA belongs to Nvidia. It is possible to get some models up and running with ROCm or OpenCL, but it will be challenging to make that happen. It was pretty easy to get Stable Diffusion going on my 6700 XT, but that seems to be just about the only ML system that is relatively easy to shoehorn onto an AMD GPU.

I have been keeping an eye on the Fauxpilot and Tabby bug trackers. Nobody is even asking for support on non-Nvidia GPUs.

UPDATE: There is now some information about getting Fauxpilot running on a Radeon GPU in the Fauxpilot forums!

If AI is your thing today, then you probably already know that you will just have to spend more money and buy an Nvidia card. I have a lot of hope for the future. As soon as LLaMa was leaked, Hacker News was going crazy with articles about porting it to work with Apple’s integrated GPU. I feel that this bodes well for all of us who can’t run CUDA!

Having Stable Diffusion running locally has been fantastic. I can give it a goofy prompt, ask it to generate 800 images for me, then walk away to make a latte. It will probably be finished by the time I get back, and I can shuffle through the images to see if there is a funny image I can stick in a blog post!

Installing bleeding-edge Mesa libraries and kernels

It is awesome that AMDGPU is open source, but it is also pretty tightly woven into your distribution. Everything runs better with a more recent kernel, and you may even need a newer kernel than your distro ships if you want to run an RDNA3 card. Maybe. I am not even sure that an RDNA3 card will run on Ubuntu 22.04 without updating Mesa using a PPA.

I have been running Xanmod kernels for years, so I was already ahead here.

I wanted to try the latest Mesa libraries. At the time, I thought I needed them to enable ray tracing. I don’t think that was correct, but I am quite certain that ray tracing performance is better with the latest version compared to whatever ships with Ubuntu 22.04 LTS.

First I ran the Mesa libraries in Oibaf’s PPA. I had random lockups or my X11 session would crash once every two or three days. I have since switched to Ernst Sjöstrand’s PPA, and things have been completely stable ever since.

Using either PPA broke VAAPI on my Ubuntu 22.04 LTS install. I don’t think any of the up-to-date Mesa PPAs ship an updated libva for 22.04. They do ship an updated version of libva for more recent Ubuntu releases. I assume this is where my problem lies.

This all feels less clean than just running the latest Nvidia driver.

I got OBS Studio working with VAAPI using Flatpak

I wound up installing OBS Studio and VLC using Flatpak. The Flatpak release of OBS Studio is a couple of versions ahead of what I was running, which is nice, and it is linked to some other Flatpak packages that contain VAAPI.

I mostly use OBS Studio to capture a safety copy of our podcast recordings on Riverside.fm. I record those at a rather low bitrate, and I mainly use them to help me make sure all the participants’ recordings are lined up on my timeline. I could get away with CPU encoding here.

I have recorded some 3440x1440 games at 1920x1080@60 with good quality, and it uses a pretty minor amount of GPU horsepower. I don’t quite know how to measure that correctly. There are a lot of different segments of GPU performance that radeontop measures, and I don’t know which are most important while gaming!

I do know that VAAPI is not using the dedicated video encoding hardware. That is a bummer. It seems to be using shader cores.

I don’t consider that to be even close to a deal breaker. Sure, I am wasting some of my GPU gaming performance on video encoding, but if that were problematic I could have bought a 6800 instead of a 6700 XT, and I still would have gotten a better value than buying an Nvidia card.

DaVinci Resolve and OpenCL

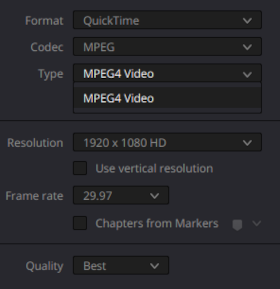

I have lost some options with Resolve. I used to be able to export h.264 and h.265 video with my Nvidia GPU. Now I am stuck with only a rather generic MPEG4 option, and even its highest quality setting has a rather low bitrate.

YouTube will let me upload DNxHR, so this shouldn’t be a huge problem.

I have no way to benchmark anything related to DaVinci Resolve, and I don’t have several GPUs on hand even if I did. I do suspect that Nvidia GPUs perform significantly better than AMD GPUs when running Resolve. I don’t know by how much, but if video editing is your goal, then you might want to consider an Nvidia GPU.

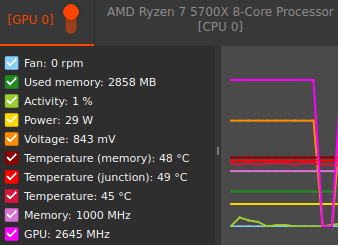

Power consumption!

The graph in CoreCtrl for power is pretty much a flat line while I write this blog. The GPU is using 28 watts. That is kind of a lot, but that is less than my Nvidia GTX 970 was using.

The GTX 970 idled at around 60 watts, but it was driving two 2560x1440 monitors at 102 Hz. I hear the Nvidia driver doesn’t idle well with two monitors.

My RX 6700 XT is idling at 28 watts while driving a single 3440x1440 monitor at 144 Hz. That drops to 17 watts if I set the monitor to 60 Hz.
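If you would rather grab the number without a GUI, here is a rough sketch that reads it from sysfs. It assumes the amdgpu driver’s hwmon interface, which reports `power1_average` in microwatts. The `card0` path is a guess that may be `card1` on your machine, and the function name `read_gpu_power_watts` is just something I made up for the example.

```python
from pathlib import Path


def read_gpu_power_watts(device_dir="/sys/class/drm/card0/device"):
    """Return the GPU's reported average power in watts, or None.

    Assumption: the amdgpu hwmon layout, <device>/hwmon/hwmon*/power1_average,
    with the value given in microwatts. Some GPUs or kernels may expose a
    different file, in which case this simply returns None.
    """
    for sensor in sorted(Path(device_dir).glob("hwmon/hwmon*/power1_average")):
        try:
            return int(sensor.read_text().strip()) / 1_000_000
        except (OSError, ValueError):
            # Unreadable or malformed sensor file; try the next one.
            continue
    return None


if __name__ == "__main__":
    watts = read_gpu_power_watts()
    print("No power sensor found" if watts is None else f"GPU power: {watts:.1f} W")
```

Running this once at each refresh rate should be enough to compare idle numbers, though a single reading is noisier than watching a graph for a while.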

Why did I choose the Radeon 6700 XT? Was it a good choice?

My Nvidia GTX 970 has been a bottleneck for a while. I did some pretty fuzzy and hand-wavy math. I wanted to roughly double my frame rates in the games that were just barely worth playing. That would take about twice as much GPU, and that would have gotten me up over my old monitor’s 102-Hz refresh rate.

I assumed I would be upgrading from a 2560x1440 monitor to a 38” 3840x1600 ultrawide. That would be about 67% more pixels. Doubling performance again would help with the extra pixels and leave room for turning up the visual settings in most games.

My actual upgrade to a 34” 3440x1440 monitor only adds 34% more pixels, so I bought myself a little margin there. When I started doing my math to find my minimum viable GPU upgrade, there wasn’t much support for things like FidelityFX SuperResolution (FSR). Now that I can use this sort of fancy scaling technology in nearly every game, I am much less worried about having enough GPU to render in my monitor’s native resolution.
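The pixel math is easy to check for yourself. This is just a small sketch of the arithmetic using the three resolutions mentioned above. The helper name `pixel_increase_percent` is mine, and it assumes GPU load scales roughly with pixel count, which is only a rule of thumb.

```python
def pixel_increase_percent(old, new):
    """Return how many percent more pixels `new` has than `old`.

    Both arguments are (width, height) tuples in pixels.
    """
    old_pixels = old[0] * old[1]
    new_pixels = new[0] * new[1]
    return (new_pixels / old_pixels - 1) * 100


OLD_MONITOR = (2560, 1440)
CANDIDATES = {
    "38-inch 3840x1600": (3840, 1600),
    "34-inch 3440x1440": (3440, 1440),
}

for label, resolution in CANDIDATES.items():
    extra = pixel_increase_percent(OLD_MONITOR, resolution)
    print(f"{label}: {extra:.0f}% more pixels than 2560x1440")
```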

I was tempted to splurge and upgrade to a 7900 XT, but some of the things I already mentioned scared me off. There was no ROCm or OpenCL support from AMD yet, so DaVinci Resolve wouldn’t work, and I can’t edit podcast footage without Resolve. I also wasn’t sure how smoothly things would go upgrading Mesa with a PPA.

I figured that if I wasn’t going to go with an RDNA3 card, then I really should stick with the minimum viable upgrade. The 6700 XT seemed to hit a sweet spot on price-to-performance ratio, and it was definitely more than enough GPU to keep me gaming for a while!

The 6700 XT is kind of comparable in performance to the RTX 3070, but the 6700 XT has an extra 4 GB of VRAM and costs about $150 less.

I am most definitely pleased with my choice. Most of my games are running at better than 100 frames per second with the settings cranked up to high or ultra. My only regret is that Control doesn’t quite hit 60 frames per second with ray tracing enabled. I never expected the 6700 XT to give me enough oomph for ray tracing, but I do believe a 6800 XT would have given me the juice for that.

I did get to play through Severed Steel with ray tracing enabled. It is a minor but really cool update to the visuals in the game!

It would have been easy to talk myself into more GPU. The RX 6800, RX 6800 XT, and even the RX 6950 XT provide performance upgrades comparable to their increases in price. It would be easy to add $60 to $100 at a time three or four times and wind up buying a 7900 XT.

I didn’t want to do that, so I stayed at the lower end. Sometimes it is best to aim for the top. Sometimes it is better to settle for what you actually need. I don’t expect to wait eight years for my next GPU upgrade, so I think settling will work out better in the long run.

- Oh No! I Bought A GPU! The AMD RX 6700 XT

- MSI Radeon RX 6700 XT at Amazon

- Severed Steel is My New Favorite First-Person Shooter

Conclusion

We may have gotten a little off track in the last section. You don’t really need to know how my choice of an upgrade went to discuss the advantages and disadvantages of AMD vs. Nvidia on Linux, except that I feel it highlights one of the advantages. I am glad I didn’t spend $20 more on a slower Nvidia RTX 3070 with only 8 GB of VRAM.

I think my conclusion just needs to call back to the tl;dr. If you only need your GPU for gaming on Linux, then I feel that an AMD GPU is a no-brainer. If you are focused on machine learning, then an Nvidia GPU is a no-brainer. If you are anywhere in between, then you are just going to have to weigh the advantages and disadvantages.

What do you think? Are you running an AMD GPU on Linux? Are you mostly gaming, or do you need GPU acceleration for video editing or machine learning? How is it working out for you? Let me know in the comments, or stop by the Butter, What?! Discord server to chat with me about it!