Hello everyone! My name is Pat, and I have been using Linux since 1995. The last Windows machine I had at home was a Cyrix P200 that dual booted Windows 95 and Slackware Linux. Before that I spent many years running MS-DOS and DESQview 386. My professional life after that definitely included configuring and maintaining Windows servers, but I always did my best to keep that to a minimum, and the last time I even touched a Windows server it was running Windows Server 2003.

I thought I did a good job, but the charging port on the laptop is pointing down! pic.twitter.com/upzVmPgZFr

— Pat Regan (@patsheadcom) May 10, 2022

Last month I bought an Asus Vivobook Flip 14. It shipped with Windows 10. It immediately offered to upgrade to Windows 11, and I let it go ahead and do its thing—why postpone the inevitable? I’ve been doing my best to make this into a comfortable device for this long-time Linux user. I think I am doing a reasonable job, but that depends on how you measure things.

Why am I running Windows 11 on my new 2-in-1 convertible tablet?

My hope was that I could treat this machine like an appliance. As far as I am concerned, this may as well be an overgrown Android tablet, except it should also be able to run Emacs, DaVinci Resolve, and a real web browser. As a bonus, the Ryzen 5700U has a rather capable GPU, so I can even do some light gaming on this thing.

I should be able to use WSL2 and WSLg to haul enough of my usual Linux environment over to be comfortable enough. There’s even the Windows Subsystem for Android available to let me run Android apps and games if need be.

And Windows 11 is supposed to be pretty well optimized for use with a touch screen. That should be a bonus, right?

Native Emacs or WSL2 with WSLg?

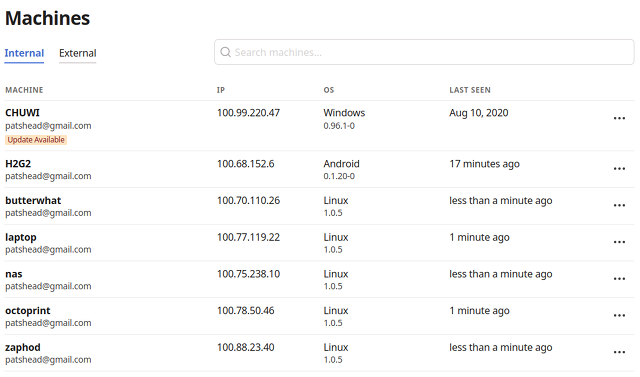

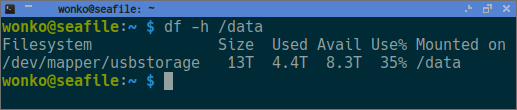

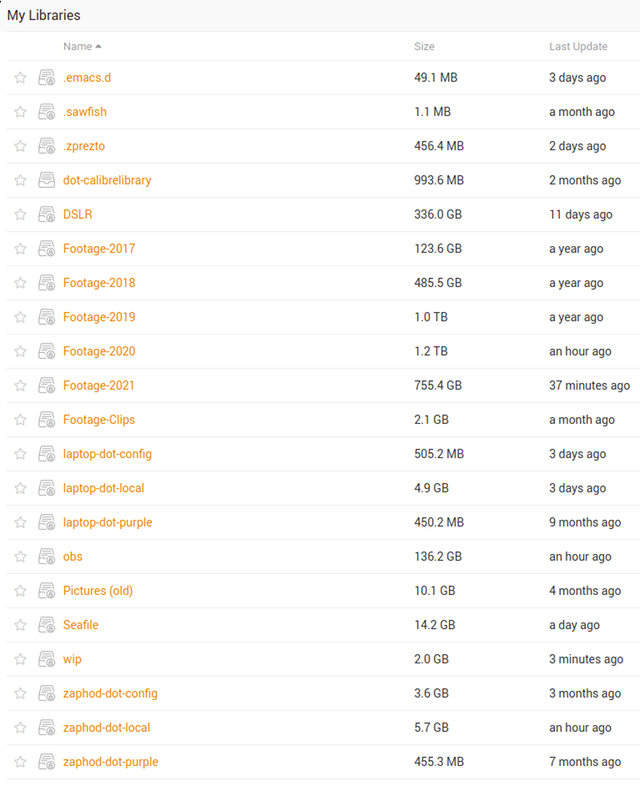

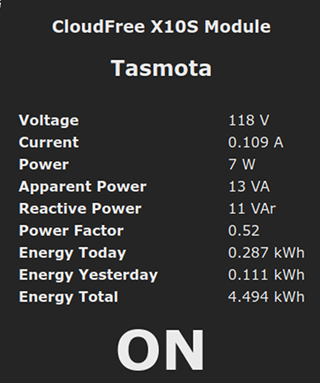

The first thing I did was install Seafile so I could sync my Emacs configuration library. I don’t know if C:\Users\patsh was the correct place to sync my .emacs.d, but that’s where I put it. I fired up Ubuntu 22.04 under WSL2, installed Emacs, and symlinked my .emacs.d to the appropriate place.

I heard accessing the host file system from WSL2 was slow, but I didn’t realize it would be this slow. My Emacs daemon on my desktop starts in about a second. This setup on my laptop was taking tens of seconds!

I wound up installing the Seafile GUI applet in WSL2 and syncing everything I need to my home directory inside the WSL2 environment. This was a huge improvement, and it is definitely usable.

Since my Seafile libraries are encrypted, I have to run the Seafile GUI. I had to work quite hard to get the Seafile GUI and Emacs to start up when I log in. I don’t know why my initial attempts weren’t working. I wound up adding some random sleep commands to my little shell script that the Windows Task Scheduler was invoking, and it seems to work most of the time. It feels like such a hack, and I have no idea why it works.
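One plausible explanation, offered as an assumption rather than a diagnosis, is that the sleeps work because they give WSLg’s display server time to come up before the GUI apps try to connect. If that is right, a bounded poll is less fragile than fixed sleeps. Here is a minimal Python sketch of that idea. It assumes WSLg exposes its X socket at /tmp/.X11-unix/X0, and the seafile-applet and emacs entries in STARTUP_COMMANDS are hypothetical stand-ins for whatever the real login script launches.

```python
import os
import subprocess
import time


def wait_for_path(path, timeout=60.0, interval=0.5):
    """Poll until `path` exists or `timeout` seconds elapse.

    Returns True if the path appeared in time, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(path):
            return True
        time.sleep(interval)
    # One last check so a path that shows up right at the deadline still counts.
    return os.path.exists(path)


# Assumption: WSLg exposes the X server for DISPLAY=:0 at this socket path.
WSLG_X_SOCKET = "/tmp/.X11-unix/X0"

# Hypothetical command list; substitute whatever your own script starts.
STARTUP_COMMANDS = [
    ["seafile-applet"],
    ["emacs"],
]


def launch_when_ready(commands, ready_path=WSLG_X_SOCKET, timeout=60.0):
    """Start each GUI command only after the display socket exists."""
    if not wait_for_path(ready_path, timeout=timeout):
        raise TimeoutError(f"{ready_path} did not appear within {timeout} seconds")
    # Popen returns immediately, so the GUI apps keep running in the background.
    return [subprocess.Popen(cmd) for cmd in commands]
```

The Task Scheduler job would then run a small wrapper inside WSL2 that calls launch_when_ready(STARTUP_COMMANDS). The timeout means a broken WSLg session produces an error instead of a login script that hangs forever.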

If this didn’t work, my next step would have been attempting to install native Emacs on Windows. I tried doing this on my old Windows 10 tablet, but I never really drove that one daily. The Chuwi Hi12’s Atom CPU and lack of RAM made it a pretty awful machine to use for all but the lightest tasks, but I did learn that I didn’t enjoy maintaining the sprinkling of if statements that my Emacs config needed to work on Windows and Linux.

Running Emacs in the WSL2 Ubuntu virtual machine is much easier.

WSLg breaks automatic sleep for me

My laptop stopped going to sleep on its own. I don’t know exactly when this started, but I assume it was after I figured out how to get my Emacs GUI to launch on login. I wasn’t sure how to troubleshoot the problem, but Google suggested that I run powercfg /requests, and that showed me that mstsc.exe was keeping my screen awake.
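When the list of requesters gets long, it can help to script the check. This is a rough Python sketch, assuming the usual English-language powercfg /requests layout, where each category name such as DISPLAY or SYSTEM sits on its own line ending in a colon and is followed by its requesters or the word None. The parser simply collects every non-empty line under each header, so any extra description lines end up as separate entries.

```python
import subprocess


def parse_power_requests(output):
    """Group `powercfg /requests` text into {category: [lines under it]}."""
    requests = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue
        # Category headers look like "DISPLAY:" (assumed English output).
        if line.endswith(":") and line[:-1].isupper():
            current = line[:-1]
            requests[current] = []
        elif current is not None and line != "None.":
            requests[current].append(line)
    return requests


def current_power_requests():
    """Run powercfg and parse it (Windows only; needs an elevated prompt)."""
    result = subprocess.run(
        ["powercfg", "/requests"], capture_output=True, text=True, check=True
    )
    return parse_power_requests(result.stdout)
```

Calling current_power_requests().get("DISPLAY") from an elevated Python prompt on the Windows side should surface something like the mstsc.exe entry described above.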

What on Earth was I doing with terminal server? It turns out that’s how WSLg puts X11 windows onto your Windows 11 desktop.

The relevant bug report that I found on GitHub suggests that the blog word counter script I use with Emacs might be causing the problem. That doesn’t seem to be the case, because even when I close Emacs and Seafile, my laptop still won’t go to sleep on its own.

I could probably fix the problem by never running Emacs again, but that isn’t an option, so I guess I will just have to live with this one.

Firefox was slow and using a ton of battery

I made a small change. I opened about:config and set gfx.webrender.all to true. Scrolling seems smoother, videos might be playing back better, and my runtime on battery is greatly improved. That last part is the most important to me.

Am I doing a good job? My plan was to eat that frog and just get the frame of the pick and place assembled, but @OpuloInc snuck two stepper motors, a limit switch, and the umbilical mount into that first step! Is it legal to use hashtag #arduino on this? pic.twitter.com/b9dSQGz03Q

— Pat Regan (@patsheadcom) May 14, 2022

I think Edge still wins if you want to maximize your time away from a power outlet, but I won’t be away from power long enough for this to matter. It takes less than 20 minutes to charge the Asus Vivobook Flip 14’s battery from 18% to 50%, so I don’t even need a long pit stop to put quite a few hours of use back into the tank.
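The pit-stop math is easy to sanity-check. This sketch assumes the battery drains linearly and borrows the 6 to 8 hour runtime mentioned later in this post, so treat the result as a ballpark rather than a measurement.

```python
def percent_per_minute(start_pct, end_pct, minutes):
    """Average charge rate in percentage points per minute."""
    return (end_pct - start_pct) / minutes


def runtime_from_charge(charge_pct, full_runtime_hours):
    """Hours of use a given charge buys, assuming a linear drain."""
    return full_runtime_hours * charge_pct / 100.0


gained = 50 - 18                        # percentage points added in the pit stop
rate = percent_per_minute(18, 50, 20)   # "less than 20 minutes", so a lower bound
low = runtime_from_charge(gained, 6)    # pessimistic 6-hour full runtime
high = runtime_from_charge(gained, 8)   # optimistic 8-hour full runtime

print(f"{rate:.1f} points/min, {low:.2f} to {high:.2f} hours of use")
```

With those assumptions, 20 minutes on the charger works out to at least 1.6 percentage points per minute and roughly two to two and a half hours of use.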

If you told me I was going to be stuck without a power outlet for 24 hours, I would likely fire up Edge just so I could watch Netflix for 8 hours instead of 6 hours.

I have auto brightness, auto rotation, and keyboard problems

Have you tried rebooting it?

I gather that Windows 11 tries to do something smart with auto brightness. I see plenty of posts from people with Microsoft Surface tablets having the same problem, so I assume this is a Windows 11 issue. Most of the time everything is fine, but I randomly have a problem where Windows adjusts my brightness every time I rotate the tablet.

Sometimes it wants to be really bright. Sometimes it wants to be dim. It doesn’t stop doing goofy things until I reboot.

Sometimes my screen just doesn’t want to auto rotate. This is quite problematic on a tablet! Usually putting the tablet to sleep and waking it back up fixes this issue.

On other occasions, Windows doesn’t want to disable the keyboard and mouse when I flip the screen around into tablet mode. Sometimes putting the tablet to sleep helps. Sometimes I have to reboot.

I have managed to mitigate the rotation and keyboard problems, but I think the solution is stupid: I just make sure I take my time. If I wake up the device, unlock it, switch to tablet mode, and rotate as quickly as I would like, there’s a good chance something will go wrong. I’ve been going slow for the last few days, and I haven’t been able to trigger a problem since.

I can’t be the only one who thinks this is dumb.

UPDATE: I am not sure when it happened, but it has been about three weeks since I wrote this blog, and these three problems seem to have completely gone away.

Remapping capslock to control

I doubt that I did this correctly. I don’t even remember where I found the instructions. I pasted some sort of registry hack into PowerShell, and now my capslock key is a control key. My control key is still a control key.

I worry that if I ever have to play any FPS games, I won’t be able to map the two control keys to different actions, but I also have no idea what I would do with the original control key anyway.

If there’s a correct thing I should be doing here, I would love to hear about it!
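One common way to do this, and possibly what that pasted snippet did, is the Scancode Map value under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Keyboard Layout, a binary table that Windows reads at boot to translate one key’s scan code into another’s. This Python sketch only builds that table. The write_scancode_map helper is a hypothetical wrapper around the standard winreg module; it is Windows-only and needs an administrator account.

```python
import struct

# Set 1 scan codes for the two keys involved.
LEFT_CTRL = 0x1D
CAPS_LOCK = 0x3A


def build_scancode_map(remaps):
    """Build the REG_BINARY payload for the "Scancode Map" registry value.

    `remaps` is a list of (new_code, original_code) pairs: the key that
    normally sends `original_code` will be reported as `new_code`.
    """
    data = struct.pack("<II", 0, 0)             # header: version and flags, both zero
    data += struct.pack("<I", len(remaps) + 1)  # entry count, including the terminator
    for new_code, original_code in remaps:
        data += struct.pack("<HH", new_code, original_code)
    data += struct.pack("<I", 0)                # null terminator entry
    return data


def write_scancode_map(data):
    """Hypothetical helper: store the table in HKLM (Windows only, needs admin)."""
    import winreg  # imported here so the rest of the sketch runs on any OS

    key_path = r"SYSTEM\CurrentControlSet\Control\Keyboard Layout"
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path, 0, winreg.KEY_SET_VALUE) as key:
        winreg.SetValueEx(key, "Scancode Map", 0, winreg.REG_BINARY, data)


caps_to_ctrl = build_scancode_map([(LEFT_CTRL, CAPS_LOCK)])
print(caps_to_ctrl.hex(" "))  # inspect the bytes before writing anything
```

The remap takes effect after a reboot. It also explains the FPS worry: capslock now sends exactly the same scan code as the left control key, so a game can’t tell the two apart, while the right control key still has its own distinct code.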

PowerToys is bumming me out

FancyZones sounds super handy, and it should have been a quick and easy way for me to at least partially mimic the automatic window sizing and placement I have on my Linux desktop using custom Sawfish scripts. The first problem I wanted to solve was that when Emacs starts up it is a few columns and two rows too small. I figured I could just set up a FancyZone to drop Emacs in!

Except FancyZones doesn’t work with WSLg windows yet.

At least Always On Top will come in handy.

Having Ubuntu 22.04 in WSL2 and on my desktop is handy!

I was able to set Windows Terminal to use Solarized Dark. I was able to install zsh and use Seafile to sync my zsh config on the laptop, and my configuration for things like htop and git came along for the ride.

I was able to just rsync my .rbenv over to the WSL2 environment, sync my blog-related Seafile library to the correct place, and all my Octopress scripting just works.

I also think it is awesome that my laptop and desktop are both 64-bit x86 machines. That means I can copy binaries around and they just work. There are a few binaries of minor importance in my /usr/local/bin that have just continued to work.

A lot of people are excited about how the Apple M1 hardware is so fast and power efficient. I agree that this is exciting, but all my workstations and servers run on x86 hardware. Things are likely to go more smoothly and be more efficient overall if I stick to the same architecture.

I have more feelings about the Asus 2-in-1 than I do about Windows!

I didn’t buy the fastest, lightest, or fanciest 2-in-1, but I think the Asus Vivobook Flip 14 has been a fantastic compromise. It was one of the lowest-priced 2-in-1 ultrabooks, and that price does come with some trade-offs. Even so, the Ryzen 5700U performs quite well while still giving me 6 to 8 hours of battery life in this 3.2-pound package.

I am absolutely sold on the idea of a convertible laptop. I consume Hacker News, Reddit, and Twitter in tablet mode most mornings. I’ve been able to stand the little Asus up like a book to follow the assembly directions during the build of my LumenPNP pick-and-place machine. I get to fold the tablet back while propped up at a slight angle to watch YouTube while I roast coffee beans in the kitchen.

I expect all my future laptops will be convertible 2-in-1 models, even if I wind up with another jumbo machine like my old 18.4” HP laptop.

Is it legal to tip the tablet screen back like this and stick it between the keyboard and monitors?! Asking for a friend. pic.twitter.com/4roJbnWKZm

— Pat Regan (@patsheadcom) May 19, 2022

I can definitely see the appeal of the removable keyboard on the Surface Pro. It would be nice to leave 6 ounces at my desk when I read Twitter in my recliner, but I didn’t want a 2-in-1 with a kickstand. There are only a limited number of ways to fit a Surface Pro with a kickstand on your lap while typing.

I am typing this from the recliner right now. I am sitting in about the third different pose this session. The right knee is bent with the laptop on that side of my lap, and my left foot is under my right knee. I don’t have a good way to take a picture of this, but I know I wouldn’t be able to balance a Surface Pro in this position.

There is no proper way to lounge in a recliner with a laptop, so I tend to move around a lot. I also don’t tend to sit and type for long periods of time over there.

Gaming is bumming me out, but probably not for the reason you think!

I was curious how the Ryzen 5700U compares to my overclocked Ryzen 1600 desktop with its Nvidia GTX 970. My desktop has the edge in single-core performance, but not by a ton, and with two more cores than my desktop’s CPU, the 5700U is quite comparable on multi-core performance.

I play a lot of Team Fortress 2. This is an ancient game and not terribly taxing. The Asus of course had no trouble maintaining 60 FPS with the default settings. I hope I am never stuck having to play Team Fortress 2 on a 14” screen, but it is nice to know it is an option.

#TF2 on #Linux is stuck using the old OpenGL renderer, and my FPS have often been dropping under my monitor's 102 Hz when things get busy and there's lots of hats. I tried out one of the hacky FPS things and accidentally my lighting now looks like Minecraft?! pic.twitter.com/SOPe3n9xnA

— Pat Regan (@patsheadcom) March 31, 2022

NOTE: The clip of Team Fortress 2 in this tweet was NOT recorded on my new laptop. I just felt like this section needed some sort of example to spice things up. Maybe I will have something more appropriate to drop in its place soon!

Then I tried Sniper Ghost Warrior Contracts 2. It is one of the most modern games I’ve played this year. With the lowest settings I can muster and using AMD’s FSR to scale up from 1280x720, my desktop usually sits between 80 and 100 FPS. With similar settings, my Ryzen 5700U laptop can only manage an unplayable 22 FPS.

Is that disappointing? I don’t think so. My desktop draws something like 300 watts at the power outlet to get 80+ FPS. I am impressed that my tiny, inexpensive laptop has even 20% as much gaming muscle at around 30 watts!
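The arithmetic behind that is worth spelling out. Using the rough numbers above (80 to 100 FPS at about 300 watts for the desktop, 22 FPS at around 30 watts for the laptop), here is a quick performance-per-watt comparison. It ignores that the two machines were not running identical settings, so it is a back-of-the-envelope sketch, not a benchmark.

```python
def fps_per_watt(fps, watts):
    """Frames per second delivered for each watt drawn."""
    return fps / watts


desktop_low = fps_per_watt(80, 300)    # desktop, low end of its 80-100 FPS range
desktop_high = fps_per_watt(100, 300)  # desktop, high end of that range
laptop = fps_per_watt(22, 30)          # laptop at roughly 30 watts

print(f"desktop: {desktop_low:.2f} to {desktop_high:.2f} FPS per watt")
print(f"laptop:  {laptop:.2f} FPS per watt")
print(f"laptop efficiency: {laptop / desktop_high:.2f}x to {laptop / desktop_low:.2f}x the desktop")
print(f"laptop frame rate: {22 / 100:.0%} to {22 / 80:.1%} of the desktop")
```

By this rough measure the laptop delivers between 22% and 27.5% of the desktop’s frame rate on about a tenth of the power, which makes it somewhere around 2.2 to 2.75 times as efficient per watt.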

Why am I disappointed? I am bummed out about the almost total lack of touch-friendly games that I have to play on my Windows tablet. I already complained about this in the previous blog about this laptop. Hopefully I find some fun games over the next few months!

Conclusion

I am still very much at the beginning of this experiment. Today is May 23, and my notes say this laptop was delivered on May 4. That means I am only just coming up on three weeks. I am certain there will be more to learn over the coming months, and it is very likely that I have forgotten to mention something important in this blog!

What do you think? Are you a long-time Linux user trying out a Windows machine? Are you as excited about 2-in-1 convertible laptops as I am? Are you using a nicer 2-in-1 than my budget-conscious Asus Vivobook Flip 14, like the new Asus Zenbook S13 Flip OLED that I am already drooling over even though the Ryzen 6800U model isn’t out yet? Let me know in the comments, or stop by the Butter, What?! Discord server to chat with me about it!