I am a fan of Dropbox-style file sync services. I’m not a fan of letting someone else have the keys to access my encrypted data. Back in 2013, I tested every open-source self-hosted cloud-storage solution I could get my hands on.

At the time, Seafile was the only Dropbox-style option that could meet my needs. Other software might work today, but Seafile hasn’t failed me in 7 or 8 years.

In 2013, my Seafile server lived in a virtual machine on a physical server I already had colocated in a data center. In 2018, my last colocated server started having hardware issues, so I started moving services to the cloud. I wound up subscribing to Prometeus.net’s Seafile service in Romania.

Some things have changed since then, and I’ve decided it is time to bring Seafile back in house.

The parts list

I completely forgot to list the parts I used when putting together my little Raspberry Pi server. Sure, I mentioned all the parts in various places, but it would be easier for you if I made a list. Wouldn’t it?!

This isn’t quite what I ordered. I borrowed parts from the Pi-KVM setup that Brian gave me. Aside from the case being transparent, this sure looks like the kit from my Pi-KVM. I kept the 32 GB microSD card with my KVM and used a random 8 GB microSD card in this Seafile build.

Aside from those two small differences, this seems to be exactly what I’m using. The 4 GB Pi is definitely overkill. You can absolutely save yourself $20 and use a 2 GB model. You can even use an older Pi. Seafile and Tailscale aren’t terribly heavy!

You should MOST DEFINITELY shop around for a hard drive. When I bought the 14 TB drive it was on sale for $230. There’s always a USB hard drive on sale somewhere. Just keep your eyes open!

Why Seafile?

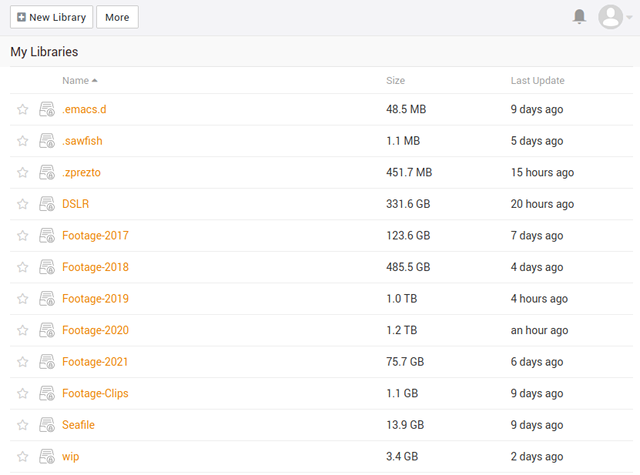

In 2013, Seafile was the only piece of software I could find that was both encrypted on the client and could scale to tens of thousands of files. My hope was to sync my entire home directory. At the time, Seafile couldn’t handle that many files in a single library well. I also decided it would be cumbersome to literally sync my actual home directory, but I did manage to sync every important subdirectory into its own Seafile library.

Seafile encrypts my data before it leaves my local machines, and the server doesn’t have the keys to unlock my files. If you’re paranoid, though, you need to be careful. If you access an encrypted library in Seafile’s web interface, your keys are stored in memory on the server for about an hour.

This means that if someone hacks into my Seafile server, the attacker won’t have the ability to read any of my data. If a thief steals my server, they won’t have access to my data.

I want Dropbox-style file sync between my desktop, laptop, and a few other machines. I want a centralized server in a remote location storing an encrypted copy of my data. Seafile also stores historical snapshots of my data on the server, so if I accidentally delete or corrupt all my files, I can always download them again.

Seafile is at the heart of my backup strategy.

Why am I bringing Seafile back in house?

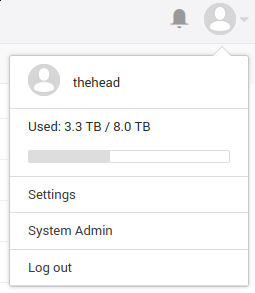

When I signed up at Prometeus.net, the bulk of my data was in the RAW photos from my DSLR. At the time, they consumed less than half the space in Prometeus’s 400 GB plan. Today I’m at around 380 GB, and Prometeus doesn’t have a larger tier.

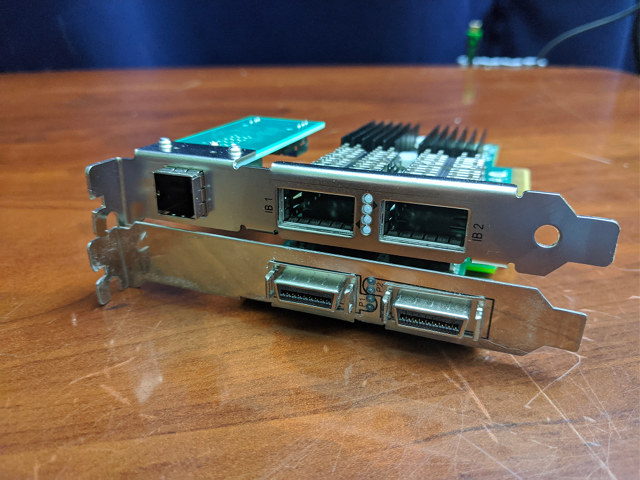

I also have nearly three terabytes of video, and that sometimes grows by hundreds of gigabytes in a month. Most of it is GoPro footage from my FPV drones. The video is stored on a RAID 10 on my NAS virtual machine. I don’t have a backup plan for it. If my NAS dies, my GoPro footage will be gone.

I’m not terribly concerned about this. I’m probably never going to look at the older footage. Even so, if I’m addressing my lack of storage, then I may as well include this data in my plans.

Why not use Google Drive or Dropbox?

Let’s just forget my paranoia and assume I’m not worried about Dropbox peeking at my private data. Let’s ignore the fact that Dropbox stopped working on some Linux file systems. We can also ignore the fact that Google doesn’t even have a file sync client for Linux.

I was mostly looking at price. Google Drive is $100 per year for 2 TB, and Dropbox is $120 per year for 2 TB. I’m paying $43 per year for 400 GB of Seafile storage from Prometeus. Prometeus was quite a bit cheaper in 2018, but Google One and Dropbox have adjusted their pricing since then.

Neither service offers a large enough plan for my needs, but I’m just going to assume I could buy 4 TB of storage for double the price of 2 TB. I’m looking to sync a little over 3 TB, so a 4 TB plan makes sense to me.

I’d be paying $240 per year to Dropbox or $200 per year to Google. Wait until you see what my do-it-yourself setup cost.
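The arithmetic behind those numbers is easy to check. Here is a minimal Python sketch, assuming (as above) that both services could sell storage in whole 2 TB blocks at their 2 TB price; neither actually offers a 4 TB plan, so the block pricing is hypothetical.

```python
import math

# Annual prices for a 2 TB plan, taken from the comparison above.
PRICE_PER_2TB_PER_YEAR = {"Google One": 100, "Dropbox": 120}


def yearly_cost(service: str, needed_tb: float) -> int:
    """Annual cost, ASSUMING storage is sold in whole 2 TB blocks
    at the 2 TB plan's price (neither service actually does this)."""
    blocks = math.ceil(needed_tb / 2)
    return blocks * PRICE_PER_2TB_PER_YEAR[service]


if __name__ == "__main__":
    needed = 3.3  # a little over 3 TB to sync
    for service in PRICE_PER_2TB_PER_YEAR:
        print(f"{service}: ${yearly_cost(service, needed)} per year")
```

Rounding up to whole blocks is why 3.3 TB gets billed like a full 4 TB plan.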

I wouldn’t have even considered hosting Seafile myself again without Tailscale

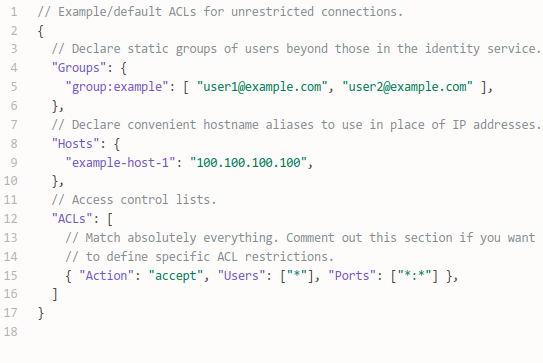

Tailscale is fantastic. Tailscale is a mesh VPN service built on top of WireGuard. You install the Tailscale client on every one of your machines, and each computer will connect directly to every other computer on your Tailscale network using WireGuard whenever a direct connection is possible. Tailscale manages all the authentication and encryption keys for you.

One of the things I hated about hosting my own Seafile server on the public Internet was security updates. I had to constantly make sure my operating system was up to date. If Seafile or Nginx had a serious security patch, I had to race to update it as soon as possible.

Tailscale is hiding my Seafile server from the public. My Raspberry Pi server will be sitting behind Brian’s firewall, and I blocked every port on the Ethernet interface except for Tailscale’s port. I can only ssh in through the encrypted Tailscale network interface, and I can only access the Seafile services on that interface.

I won’t have the entire internet banging away at my Seafile server. The only computers with access will be computers that I control. It will be so much less stressful!
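The firewall policy described above (deny everything arriving on the LAN interface except Tailscale’s UDP port, and trust everything arriving over the Tailscale interface) can be sketched as a list of ufw commands. This is a minimal Python sketch, not the rules running on my Pi: the interface name `eth0` is an assumption, `tailscale0` is Tailscale’s usual interface name on Linux, and 41641 is Tailscale’s default UDP port. The function only builds the commands; it does not run them.

```python
# Hypothetical defaults: adjust these for your own Pi.
LAN_IFACE = "eth0"              # ASSUMPTION: the Pi's wired interface
TAILSCALE_IFACE = "tailscale0"  # Tailscale's usual interface name on Linux
TAILSCALE_UDP_PORT = 41641      # Tailscale's default WireGuard UDP port


def ufw_commands(lan_iface: str = LAN_IFACE,
                 ts_iface: str = TAILSCALE_IFACE,
                 ts_port: int = TAILSCALE_UDP_PORT) -> list:
    """Build (but do not run) ufw commands for a Tailscale-only server."""
    return [
        # Drop anything inbound that no rule below explicitly allows.
        "ufw default deny incoming",
        "ufw default allow outgoing",
        # Everything arriving over the encrypted Tailscale interface is
        # allowed: ssh, Nginx in front of Seafile, and so on.
        f"ufw allow in on {ts_iface}",
        # On the LAN side, only Tailscale's own UDP port is reachable.
        f"ufw allow in on {lan_iface} to any port {ts_port} proto udp",
        # Enable last, after the allow rules already exist.
        "ufw enable",
    ]


if __name__ == "__main__":
    for cmd in ufw_commands():
        print("sudo " + cmd)
```

Printing the commands rather than executing them leaves a chance to review them before enabling the firewall on a headless Pi, where a mistake means pulling the SD card to fix it.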

Not only that, but I’ll be able to share my Seafile server with my wife using Tailscale’s machine-sharing feature.

Use the unstable release of Tailscale!

The stable release of Tailscale in their Raspbian repositories is version 1.2. I switched my Pi to the unstable repository, and that installed version 1.5.4. This doubled my network performance over Tailscale!

I wasn’t smart enough to investigate this until after the initial upload of my 3.3 terabytes of data. This would have saved me considerable time!

Why am I using a Raspberry Pi?

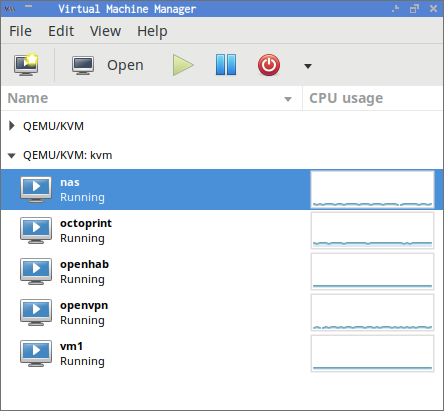

I got this idea shortly after Brian gave me a full Pi-KVM setup for Christmas. I immediately plugged the whole setup into my virtual machine host to test it out. As soon as I saw that it was working, I installed Tailscale on the Pi-KVM. That got the wheels turning.

The next week, I ordered a 2 GB Raspberry Pi 4 and a 14 TB Seagate USB hard drive. The hard drive was on sale for $230, and I overpaid a bit at $54 for the Pi with a power supply from Amazon. I figured it was worth it for the 2-day shipping.

Don’t just buy the hard drive I bought. There’s always an external USB hard drive on sale somewhere!

The Pi-KVM setup was using a 4 GB Raspberry Pi 4, but the entire setup was using only about 200 megabytes of RAM. I swapped the 2 GB Pi into the Pi-KVM setup, and I stole the 4 GB Pi for the Seafile project. Even the 2 GB Pi is overkill for the Seafile server, but I figured that I’m more likely to find a use for the extra RAM on the Seafile server.

I could have saved a bit more money. There’s no reason for this to be a Raspberry Pi 4. Any old Pi would do the job. I ordered a Pi 4 to swap into the Pi-KVM because some of the accessories are USB-C, and older Pi models don’t have USB-C ports.

At full price, my 14 TB Pi Seafile server would be just a bit over $300. With the sale on the hard drive, I paid a little under $300.

Why are you only using a single hard drive?!

I have to admit that I was extremely tempted to buy a second hard drive to set up a RAID 1 array. It was easy enough to talk myself out of it.

Nearly doubling the price of the project wasn’t exciting to me. Price per available terabyte would still compare quite favorably to Google One or Dropbox, but there’s nearly 10 TB that I’m not even using. With a second hard drive, it would take 3 years of Google One subscription fees, instead of 18 months, to add up to what I spent on hardware. Still reasonable, but not as interesting.
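The 18-month and 3-year figures come from dividing the hardware cost by the monthly cost of a subscription. A minimal Python sketch, assuming a hypothetical 4 TB Google One plan at double the 2 TB price ($200 per year) and assuming the mirror roughly doubles the build to about $600:

```python
GOOGLE_ONE_4TB_PER_YEAR = 200  # ASSUMPTION: double the $100 2 TB price


def break_even_months(hardware_cost: float, yearly_service_cost: float) -> float:
    """Months of subscription fees that add up to the hardware cost."""
    return hardware_cost * 12 / yearly_service_cost


single_drive = 300  # roughly the Pi plus one 14 TB drive
mirrored = 600      # ASSUMPTION: a second drive roughly doubles the price

print(break_even_months(single_drive, GOOGLE_ONE_4TB_PER_YEAR))  # 18.0
print(break_even_months(mirrored, GOOGLE_ONE_4TB_PER_YEAR))      # 36.0
```

This ignores electricity and replacement drives, so treat it as a lower bound on the real break-even time.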

Recently I’ve started saying that I have a redundant array of inexpensive computers. There’s a copy of my data on the Seafile server. There’s a copy of my data on my desktop. There’s a copy of some of my data on my NAS. There’s a copy of my data on my laptop.

Any one of these machines counts as my backup. If that cheap 14 TB drive in my new Seafile Pi server fails, I can replace it and reupload my data. Sure, losing out on Seafile’s sync services for a few days will be an inconvenience, but it will be a minor one. These days I can always use Tailscale to access files on another machine in an emergency like this.

If I did buy another 14 TB Seagate drive, I would install it in my NAS instead of building a mirror on the Seafile server. That additional drive could be another sync point for a Seafile client, or I could do an rsync backup of the Seafile server. Either option would be a better value to me than mirroring the Seafile server’s storage drive.

You should have an off-site backup

My Seafile server has been my off-site backup since 2013. At first, it lived in a data center 20 miles south of my house. Then it lived in some random data center in Romania. My new Raspberry Pi Seafile server is going to live 6 miles away at Brian Moses’s house.

The server is still in my house today, but I’m treating it as if it were already living somewhere else. Everything on the local interface is already firewalled off, and I am uploading my data to Seafile using Tailscale.

Don’t worry about my data. I still have my account at Prometeus.net, and they still have a rather recent copy of my data. If my house burns down, I might lose a few days’ worth of writing.

I told Brian he needs to colocate a Raspberry Pi server at my house. We should be making full use of the buddy system!

How are things working out so far?

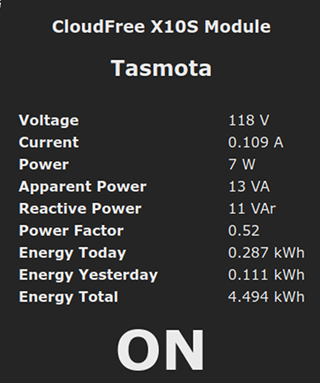

Everything is slower than I expected, but this is kind of my own fault. I did an iperf test of the Pi 4’s gigabit Ethernet and saw 940 megabits per second. I ran cryptsetup’s benchmark and saw that the Pi could manage roughly 100 megabytes per second while encrypting the disks.

Tailscale wound up being the slowest piece of the puzzle. Tailscale doesn’t use the Linux kernel’s WireGuard implementation. It uses a Go implementation. The Go implementation of WireGuard is slower than the kernel on Intel, and I wouldn’t be surprised if the performance gap is bigger on Arm. This isn’t a big deal.

Over Tailscale, iperf averages around 65 megabits per second. Much of the time, my Seafile upload has been staying pretty close to this number.

Not always, though. There’s a lot of encrypting and decrypting going on. Tailscale is decrypting WireGuard’s ChaCha20-Poly1305 encryption, then Nginx is decrypting TLS. That’s two extra layers of encryption on top of data that was already encrypted by the Seafile client on my desktop. Then the kernel is encrypting the blocks that are written to disk.

Writing to a USB hard drive is also a rather CPU-intensive task.

During the first part of my upload, all four of the poor little Pi’s CPU cores were maxed out, and I was averaging somewhere around 35 megabits per second. I’m assuming this is because of the quantity of smaller files that were being synced one at a time.

I have nearly two terabytes left to sync. I haven’t looked at the average speed, but we’ve been up over 50 megabits per second the entire time I’ve spent writing the last four paragraphs. We’re down to RAW photos and huge GoPro videos, so I’m not too surprised they’re going faster.

My Internet upload and download speeds are both 200 megabits per second, and Brian has 1 gigabit up and down. This would sync just as quickly if the server were already at his house.
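Putting the measurements above side by side makes the bottleneck obvious. This minimal Python sketch takes the throughput numbers from the text (converting cryptsetup’s roughly 100 megabytes per second to 800 megabits per second), picks the slowest stage, and estimates how long 3.3 TB would take at that rate. It assumes decimal terabytes and a perfectly steady transfer rate, which real syncs never quite manage.

```python
# Approximate throughput of each stage in megabits per second,
# taken from the measurements described above.
STAGES_MBPS = {
    "gigabit Ethernet (iperf)": 940,
    "dm-crypt disk encryption (cryptsetup benchmark)": 100 * 8,
    "home upload link": 200,
    "Tailscale (iperf)": 65,
}


def bottleneck(stages: dict) -> tuple:
    """Return the name and speed of the slowest stage."""
    name = min(stages, key=stages.get)
    return name, stages[name]


def upload_days(terabytes: float, mbps: float) -> float:
    """Days to move `terabytes` (decimal TB) at a steady `mbps`."""
    bits = terabytes * 1e12 * 8
    return bits / (mbps * 1e6) / 86400


name, mbps = bottleneck(STAGES_MBPS)
print(f"Slowest stage: {name} at {mbps} Mbps")
print(f"3.3 TB at {mbps} Mbps: about {upload_days(3.3, mbps):.1f} days")
print(f"3.3 TB at 35 Mbps: about {upload_days(3.3, 35):.1f} days")
```

Treating the stages as independent is a simplification: they all share the same four CPU cores, which is why the real rate can fall well below the slowest stage, as it did during the small-file part of the upload.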

Are you going to CNC or 3D-print an enclosure?

I have to say that I was really tempted to design something! The biggest problem is that USB hard drives are significantly cheaper than SATA drives even though they’re usually plain old SATA disks on the inside.

If I were buying a bare 3.5” disk and a stand-alone SATA-to-USB adapter, then designing an enclosure would have been a no-brainer. Since I’m buying a USB hard drive, the SATA-to-USB adapter is already included, and the whole thing is wrapped up in a nice enclosure.

I am using a Pi enclosure from Amazon. It is the one Brian gave me with the Pi-KVM. I DO want to design a custom case for that one! I just used some 3M Dual Lock to attach the Pi case to the Seagate hard drive. I also used some Velcro cable ties and a couple of pieces of sticky-back Velcro to manage the USB cables.

I think it is reasonably clean, and it shouldn’t look out of place sitting next to Brian’s OctoPi. If the hard drive fails, I don’t have to worry about taking a replacement drive apart to fit the bare SATA drive into some sort of custom case. I’ll just put some Dual Lock on the new drive, plug it in, and get going right away.

I’m also not accidentally voiding the 1-year warranty on my 14 TB Seagate drive.

It is difficult to compare apples to oranges

On one hand, I’m getting 14 TB of Dropbox-style cloud storage for $300. That would cost me $700 per year from Google Drive, right?!

My hardware might fail and need to be replaced. I have to handle updates myself. Brian’s gigabit FiOS connection isn’t going to be as reliable as Google, but at least it is already paid for.

Google and Dropbox both have access to your files. It is policy and not capability that keeps employees from sneaking a peek at your data. I’ve worked in a lot of IT departments, and every one of them had one guy who was proud and excited that he could read everybody’s email. I’d much rather keep my own files to myself.

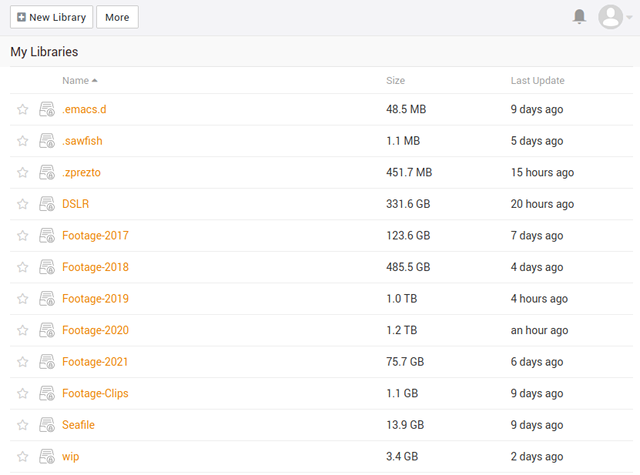

NOTE: I’m not quite finished uploading in that screenshot, and I’m already using 2.9 terabytes more storage than I currently have available at Prometeus.net.

Saying I have 14 TB of cloud storage feels disingenuous. I’m going to be using 3 TB, and I’ll probably accrue up to one TB of fresh data every year. It would be a long time before a service like Google One would actually cost me $700 per year.
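To put a number on “a long time,” here is a minimal Python sketch of the yearly Google One bill, assuming usage starts at 3 TB, grows by exactly 1 TB per year, and is billed in hypothetical 2 TB blocks at $100 per block per year.

```python
import math


def google_one_yearly(tb: float, price_per_2tb: int = 100) -> int:
    # ASSUMPTION: storage sold in whole 2 TB blocks at $100 per block per year.
    return math.ceil(tb / 2) * price_per_2tb


def projected_costs(start_tb: float = 3, growth_tb_per_year: float = 1,
                    years: int = 12) -> list:
    """Yearly bill for each year, with usage growing linearly."""
    return [google_one_yearly(start_tb + growth_tb_per_year * y)
            for y in range(years)]


costs = projected_costs()
first_year_at_700 = next(year for year, cost in enumerate(costs, start=1)
                         if cost >= 700)
print(costs)
print(f"First year billed $700: year {first_year_at_700}")
print(f"Total for the first 3 years: ${sum(costs[:3])}")
```

Under these assumptions, the $700-per-year bill doesn’t show up until year 11, when usage reaches 13 TB, although the first three years of fees alone already add up to more than the roughly $300 of hardware.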

For me, keeping my data on my own hardware has quite a bit of value. My wife and I will be saving a total of $86 per year, and I’m pushing an extra 2 TB or more to Seafile right now for free. I’ll have paid for the hardware in a little over 3 years, and I’m considering my labor to be the cost of keeping my data private and safe.

Don’t forget that hosting my own Seafile server on a Raspberry Pi gives me the opportunity to write this blog post. I will see more clicks from Google. I might make tens of dollars per year on Amazon affiliate sales. I’m also having fun writing this, and I had fun cobbling together this little server.

Setting up Seafile was way too complicated!

I can already see that this blog post is going to be approaching 3,000 words by the time I’m done, so I don’t have room here to document how I got things working. I stumbled quite a few times, but I didn’t document anything. I also cut a few corners, and I wouldn’t want you to follow me there.

The instructions for setting up Seafile on a Raspberry Pi didn’t quite work. Seahub just didn’t want to start, and its log file was empty. I bet I futzed around with that for two hours. I don’t even remember what actually fixed it.

Then I had to put Nginx in front of Seafile to add SSL. I was hoping to use Let’s Encrypt for my cert, but using Let’s Encrypt for a host with a private IP address looked like it was going to be a real pain in the neck, so I just set up a self-signed cert. This was lazy, but it works.

I don’t have a monitor and keyboard plugged into my Pi. I goofed up the firewall rules twice. That meant I had to power off the Pi, plug the SD card into my computer, fix the rules, and try again. This was slow going.

I didn’t even have to write my own iptables rules, but I did. Tailscale already has documentation describing how to set up your firewall using ufw. I could have just copied from their example.

I’d like to write about each of these things. I have an extra Pi, so I can replicate these processes, and I can do them correctly next time.

You don’t have to do this my way!

You can and should set up a Raspberry Pi Seafile server. Maybe you don’t like Seafile, though, and you’d rather use Nextcloud. In either case, you should look at using Tailscale so you can access this little micro server from anywhere in the world.

Maybe you don’t want to use the buddy system. Maybe you want to keep your Seafile or Nextcloud server at home. That’s fine, but I still recommend the buddy system. The best backup is an off-site backup!

There’s plenty of free RAM on my Pi. You could definitely host other services on there. It is almost too bad Bitwarden only charges $10 or $15 per year, because this machine would be a good place to host my Bitwarden server.

Conclusion

This project hasn’t even really hit the ground running yet. My giant collection of GoPro flight videos is still syncing, and the Seafile server is still here in my house. I have confidence in its success, though, because I ran my own Seafile server for quite a few years.

What do you think? Am I making a mistake by going back to hosting my own cloud-storage server? Should I just pay someone instead? Or is this going to be a fantastic value? Are you already using Tailscale for a project like this? Tell me about it in the comments, or stop by the Butter, What?! Discord server to chat with me about it!