Early in November, I decided that we should give our 13-year-old nephew, Caleb, a full FPV quadcopter setup for Christmas: a 5” racing drone, 6 batteries, a LiPo charger, FPV goggles, and a Taranis X9D+ transmitter. My wife was worried that we’d be spending too much on his Christmas gift, but I convinced her that it was an excellent idea.

Cost isn’t the only obstacle, though. Flying FPV racing quads can actually be an inexpensive hobby. I always compare it to golfing: my driver cost more than a full set of entry-level FPV quadcopter gear. We bought him a better-than-entry-level transmitter, so our whole setup did end up costing more than a driver, but I think we did alright!

I had other worries

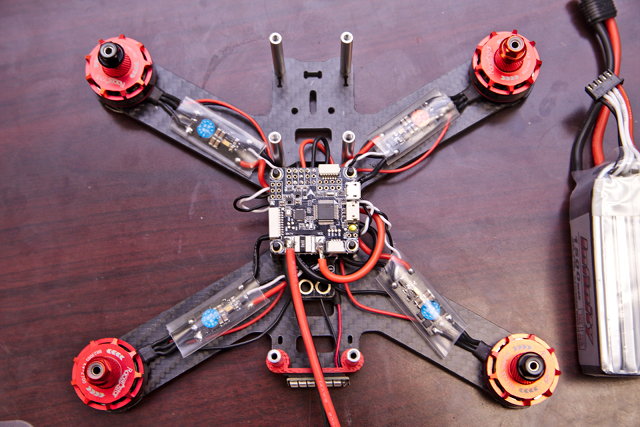

Racing quads are not toys. The relatively inexpensive BFight 210 quad we got for Caleb can easily exceed 80 miles per hour. A crash could smash through somebody’s windshield or send someone straight to the hospital. Safety is paramount.

I often tell people that we spend as much time building and repairing drones as we spend flying them. There’s some hyperbole in that statement, but it helps drive home the message that you need to enjoy working on electronics if you’re going to have a good time flying FPV racing quads. If you don’t think you’ll enjoy spending an hour soldering replacement parts onto your quadcopter when you get home, this hobby might not be for you.

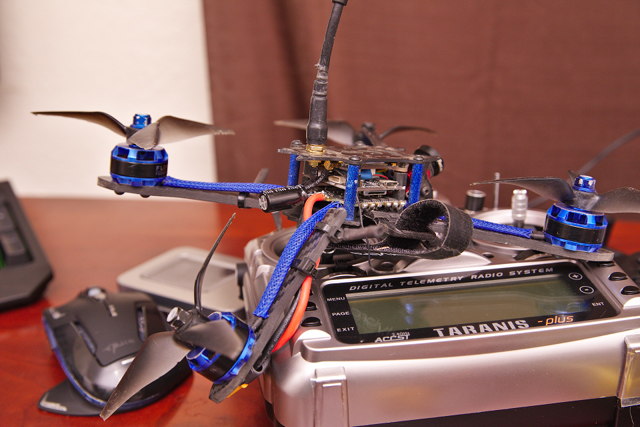

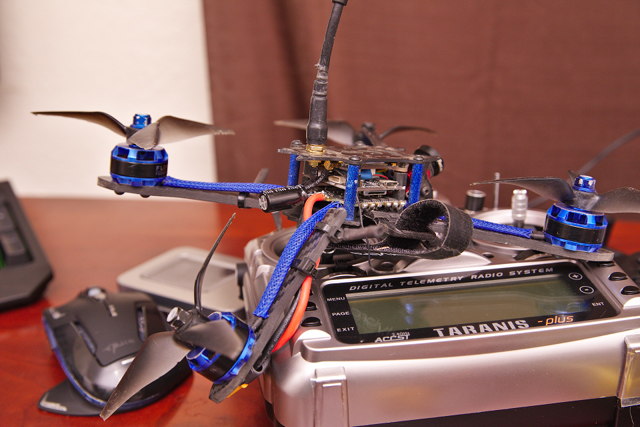

Even ignoring the hardware and soldering, configuring all the software involves a steep learning curve. Binding a new drone to a Taranis radio has proven problematic for many new pilots, and that’s the first and simplest step in the process. Many also have trouble configuring Betaflight, the software that runs on your quadcopter’s flight controller.

We’re constantly running into people who are inches away from being able to fly their new quadcopter but have missed one or two minor configuration details along the way. Even if they’ve managed to get their new quadcopter in the air, they might have missed an important setting that makes it fly poorly. It is a complicated hobby, and everything goes more smoothly if you have help!

Someone needs to know what they’re doing!

If the recipient of your gift has to learn everything the first day, they’re going to be in trouble! I did most of the difficult work before we gift-wrapped all the hardware.

I mapped switches to auxiliary channels on Caleb’s Taranis X9D Plus radio and bound the radio to the quad. I configured Betaflight and assigned all the important modes to appropriate switches. I picked a channel on his VTX and tuned his goggles to the same channel. I also installed the props in the correct orientation and took the quad out for a quick test flight.

These are all things he will eventually need to learn how to do. If he’d had to spend hours on all of this before he got to fly, I think he would have been disappointed. And if he hadn’t set it all up well, his early flights might have been poor.

The simulator is invaluable

Caleb and his family flew into town on Christmas Day. As soon as he was done opening his presents, I plugged his Taranis X9D Plus into my laptop, fired up Liftoff, and he and his father practiced flying for about an hour.

It was raining the next day, so we couldn’t go out flying. I put them both on the simulator again and had them practice exactly what they would need to do on their first day at the park. I wanted them to climb into the air, not too high but not so close to the ground that they’d accidentally crash. Then I had them fly big circles around the map and come home for a landing.

The next day, we repeated that exercise, but this time we did it with a real quadcopter! We went out and they flew laps around a big open field. They did a fantastic job, too. Most of my friends learned to fly without a simulator, and we didn’t do nearly as well on our first flights!

That same day, Caleb watched me fly my quad through a drone gate several times. He wanted to learn how to do that, too. When we got home, I fired up Liftoff and loaded a race with lots of drone gates. Caleb and his father practiced flying through the drone gates for about an hour.

The next day, we went back to the same park and I set up one of my drone gates—a pop-up soccer goal with the net cut out. It is much smaller than the gates in the simulator, but Caleb did a fantastic job flying through it. I was amazed! It probably took me a month before I managed to fly well enough to do that!

He did a better job hitting the drone gates on his first battery, though. He was flying slow and smooth. The more successful he was, the faster he would attempt to go, and his successes then became fewer and fewer. He was mostly doing the right thing, because it is important to push your abilities a little past your comfort zone. You have to find a balance between crashing and flying. Flying is more fun than walking to recover your crashed drone!

The list of gear

You need a lot of hardware to get flying. I’m going to type up a list of the things we gave Caleb to get him started. I cheated a little. The only things I had to buy were the BFight 210 drone, the Taranis X9D+ radio, and a FrSky XM+ receiver. The rest was all stuff that I already had around the house.

Total: $460

Battery total: $180

We gave him a lot of batteries. I fly higher-quality 1500 mAh packs now, and I have more of them than I want to carry, so I gave Caleb all my old 1300 mAh packs. That’s more batteries than he’ll need, especially with the long flight times of the BFight 210.

Since I upgraded to a much better bag, I also sent him home with my old tactical backpack.

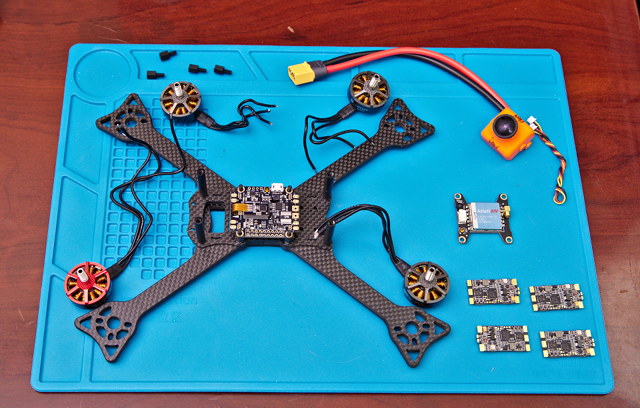

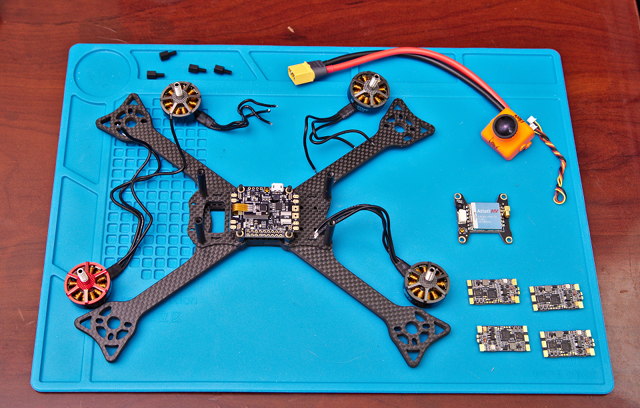

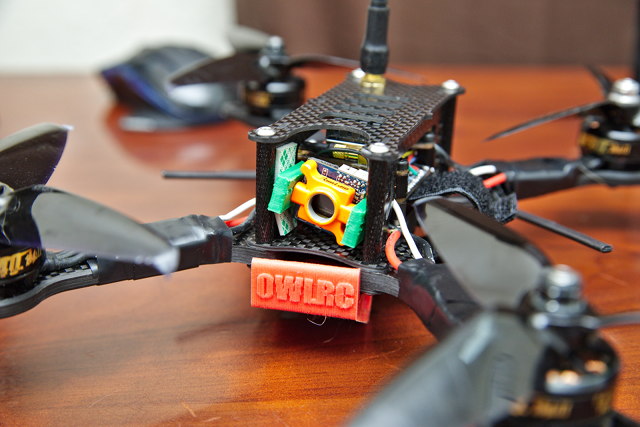

The BFight 210 FPV Racing Quad

Back in October, my friends at Gearbest sent me a BFight 210 to review. It is an awesome 5” racing quad for the price. It isn’t perfect—no $140 racing quad will be perfect. Its problems are mostly minor.

The FrSky- or Spektrum-compatible receivers that ship with the bind-and-fly versions don’t work all that well. I’d highly recommend installing your own receiver. I use a FrSky R-XSR receiver in mine, and I installed a FrSky XM+ in Caleb’s BFight 210.

My biggest complaint about the BFight 210 is that the frame is somewhat fragile. It is obviously modeled on the lightweight Hyperlite FLOSS frame, and both frames have narrow arms. Thankfully, replacement arms and complete replacement frames are inexpensive.

My friend Brian and I regularly receive quadcopters from Gearbest for review. He’s gotten a pair of Furibee racing drones since I first reviewed the BFight 210. Both Furibee drones are better equipped than the BFight 210, but they don’t seem as solid and reliable.

But you got your BFight 210 for free! Can your opinion be trusted?

Shortly after my BFight 210 arrived, I went out to the park with my friend Brian. I let him fly it, and he ordered one for himself a few days later. Soon after that, I ordered a BFight 210 for Caleb, and around the same time, our friend Mike from TheLab.ms makerspace ordered one, too.

They’re all still airworthy. I did have a big crash, and I broke one of my BFight 210’s arms. I moved my BFight’s motors and electronics to an OwlRC Dragon frame, and it is all still flying well. I fly it every time I go to the park.

We are all pleased with the value of our BFight 210 racing quads!

Did I have to spend this much?

No, I didn’t, but just about the only place to save any money is on the radio. I chose the Taranis X9D Plus because it is my favorite radio, and it will be easier to provide tech support if his radio matches mine.

I could have saved $80 or so if I had gone with the Taranis Q X7. If you want to spend less, that’s the radio I would recommend. It is just as good as the X9D+ in almost every way: both have the same range and channel count, and you can plug either one into your computer’s USB port to use it with a simulator such as Liftoff.

The Taranis X9D Plus comes with a battery and charger. The Taranis Q X7 needs a set of AA batteries or a compatible battery pack.

The least expensive radio worth buying is the FlySky FS-i6. It doesn’t feel as nice as the Taranis radios, and it doesn’t have as much range, but it is more than enough radio for a beginner. The FlySky FS-i6 and receiver combo only costs $50. That would bring the total cost down under $300 before batteries!

NOTE: Almost all quadcopter pilots in the US use Mode 2 transmitters. Mode 2 radios have the throttle control on the left stick. Most transmitters are available in either Mode 1 or Mode 2. Make sure you choose Mode 2!

This still sounds like a lot of money

Everyone keeps telling me that flying drones is an inexpensive hobby. It is one of the cheapest hobbies I’ve ever been involved in, and it certainly can be inexpensive, but I can’t seem to stop spending money because I keep buying and building more quadcopters.

I always go back to the comparison with golfing, even though I haven’t played in years. There’s a reasonably priced golf course a few miles down the road from me. Depending on the time of day, it would cost me $20 to $40 to play a round of golf. I’m not very good, so I’d expect to lose a few dollars’ worth of golf balls.

Once you’ve bought your quadcopter and other related equipment, it doesn’t cost anything to fly. I usually need to replace a prop or two every time I go flying, but props cost less than golf balls.

You can use the same batteries, charger, goggles, and transmitter with a whole fleet of racing quads.

Every now and then, you will have a more serious crash. You might break a motor, ESC, or frame. Replacement parts usually cost less than $20. You can get an extremely durable OwlRC Dragon frame for less than $60.

This does sound reasonable. How many batteries do I need?

Depending on how powerful and efficient your quadcopter is, each 1300 to 1500 mAh 4S LiPo battery will keep you flying for anywhere from about 3 minutes to more than 10 minutes. If I’m heavy on the throttle, I can burn through an entire 1500 mAh battery on my most powerful quad in under 3 minutes. If I’m just cruising around, I can get just over 11 minutes of flight out of my BFight 210.

Most of the time, you’ll have flights somewhere in between those times. Five-to-six-minute flights are very common, unless you’re carrying the extra weight of a GoPro action cam.

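One battery is definitely not enough, but how many do you really need? I wouldn’t recommend carrying fewer than four. Four packs at five or six minutes each is only 20 to 24 minutes in the air, but it is very difficult to spend all your time flying: you’ll be swapping packs, walking to recover crashed quads, and resting between flights. That ought to stretch four batteries across 30 or 40 minutes at the park, especially if you bring a friend and take turns.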

Four batteries is probably enough, but having more is even better!
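The arithmetic behind those flight times and battery counts is simple enough to sketch. The Python example below isn’t taken from any flight controller or charging tool; it’s a back-of-the-envelope estimate that assumes you only use about 80% of a pack’s rated capacity (a common LiPo rule of thumb) and that you know, or can guess, your quad’s average current draw. The function names and the current figures in the example are hypothetical.

```python
import math

# Assumption: a common LiPo rule of thumb is to land with roughly 20% of the
# pack's capacity left, so only about 80% of the rated mAh is treated as usable.
USABLE_FRACTION = 0.8


def flight_minutes(capacity_mah: float, avg_current_a: float) -> float:
    """Rough flight time for one pack at a given average current draw (amps)."""
    usable_amp_hours = capacity_mah / 1000 * USABLE_FRACTION
    return usable_amp_hours / avg_current_a * 60


def packs_needed(air_minutes_wanted: float, minutes_per_pack: float) -> int:
    """Fully charged packs needed to spend the requested time in the air."""
    return math.ceil(air_minutes_wanted / minutes_per_pack)


if __name__ == "__main__":
    # Hypothetical average currents: gentle cruising vs. heavy on the throttle.
    print(round(flight_minutes(1500, 12), 1))  # 6.0 minutes
    print(round(flight_minutes(1500, 30), 1))  # 2.4 minutes
    # Four packs cover about 20 minutes of air time with 5.5-minute flights.
    print(packs_needed(20, 5.5))  # 4
```

Real flight times depend on the quad, the props, the age of the packs, and your throttle hand, so treat numbers like these as a starting point rather than a promise.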

What if this is too expensive? What if I don’t trust my kid with something this powerful?

A proper FPV racing setup is expensive, especially if you’re not sure that the recipient of this wonderful gift will maintain an interest in the hobby. Maybe they’ll have trouble learning to fly. Maybe they’ll have a big crash and be unable to repair the quad. Maybe they’ll just have trouble taking regular trips to a safe location for such a fast and dangerous piece of equipment.

There are ways to get into the hobby without breaking the bank. One of the best options is the Eachine Q90C Flyingfrog bundle. I haven’t tried the Flyingfrog yet, but I just ordered one. It is available with or without goggles. Make sure you choose the correct one!

It is a brushed micro FPV drone—an overgrown Tiny Whoop—that comes with a radio, goggles, and one battery. Add some spare batteries to your order, and you’ll have a complete FPV racing setup for around $100.

I think it is a great value, but you get exactly what you pay for. The drone uses brushed motors, and they start to lose their pep after 30 or 40 flights. At least brushed motors are cheap!

The radio that comes with the Flyingfrog is far from the best, but it is as close as you’ll get to a real radio at this price point. There are some other FPV bundles available, but the radio that comes in the Flyingfrog kit can be plugged into a computer and works with all the popular simulators.

The goggles that come with the kit may be rather low-end, but they’re also the only part of the kit that you’ll be able to take with you on your progression into more advanced FPV racing gear. They’ll work with any standard FPV racing drone, including the BFight 210 that I bought for our nephew.

Is it scary handing the controls over to a 13-year-old?!

It was absolutely terrifying! I’m nervous every time we help an adult fly a racing quad for the first time. Are they going to hit a car in the parking lot? Are they going to slice their finger open? Will they get their drone stuck in a tree? Are they going to fly out into traffic and cause an accident?

I recently published a collection of tips for beginner FPV quadcopter pilots. They’re definitely worth reading over if you’re concerned about the potential pitfalls of this hobby!

These are the questions that keep going through my head, and those worries are only amplified when the new pilot isn’t an adult. This time, though, I was using the trainer mode on our Taranis radios. I had my radio plugged into Caleb’s radio, and I could take over at the first sign of danger.

I knew Caleb was going to be in town for a week, and I didn’t want to send him home with an 80 MPH guided projectile unless both he and his father could safely fly around our big, open park.

After only about thirty seconds of flight time, my worries faded away. I knew I wasn’t going to have to take control, and I knew I wouldn’t need to connect my radio to his as a trainer for his second battery.

Everything went fine. Caleb’s time in the simulator paid off. He could have gone home right then, and I wouldn’t have worried about him and his quadcopter. I’m happy that we had another day of flying before he left, though!

The simulator will fail to teach you the most important lesson

When you’re flying in the simulator, you just fly until you crash. When you crash, the game resets itself, and you start flying again.

In real life, you will have an arming switch on your transmitter. You have to arm your quad before you start flying, and disarm it again when you’re finished. This isn’t required in the simulator.

That switch is by far the most important control on your transmitter. If you’re about to enter a dangerous situation, that’s the switch you want to flip. It will turn off all the motors, and gravity will quickly intervene and bring your quadcopter to the ground.

You need to be able to flip this switch without thinking about it. If you hit a tree, and your quadcopter starts flying in the direction of people, you should immediately disarm. If you’re heading towards the road, you should disarm. Any time you don’t feel in control, you should disarm.

Since he didn’t get to practice this in the simulator, I was worried that Caleb wouldn’t learn this instinct quickly enough. I must have drilled it into his head quite a bit, though, because he was very good at disarming whenever he bumped into the drone gate.

As you get more skilled, you won’t need to disarm in as many situations, but when in doubt, disarm anyway. It is better to have a broken prop or quad than a broken car or person!

It is a fun and inexpensive hobby to share

I know I keep talking about how inexpensive this hobby is. For me, it has been anything but. Buying into the hobby is the most expensive hurdle. The expensive parts aren’t even the ones flying through the air, which is awesome.

No individual part of a quadcopter like the BFight 210 costs more than about $30, and most components are under $15. A day of flying is usually cheaper than going to the driving range or an arcade. Do kids still go to arcades? We had a good time taking Caleb to the Free Play Arcade when he was in town, so I’m going to assume that they do!

Flying quads is much more fun with a friend. When we go flying, we usually take turns in the air. Flying batteries back-to-back is tiresome. Caleb’s father is going to have just as much fun flying as Caleb, and I won’t be surprised if they take turns flying.

If you’re buying this sort of setup for a kid, they’re going to need some sort of support system. They’ll need someone to keep an eye on their activities and to help when things go wrong. Flying alone is a terrible idea, and the FAA says it is against the rules.

If you’re like me, and you’re an uncle buying this setup for your nephew, then you probably know that you aren’t just giving your nephew a fun, exciting gift. You’re giving both your nephew and his father an awesome gift, and you’re also giving his father some extra responsibilities! Make sure his parents are ready, willing, and able to take on that responsibility.

If you’re a parent interested in getting your kids into FPV quads, I think that’s awesome, especially if you’re interested in the hobby yourself!

What’s the right age to start flying FPV?

I am not a parent, and I don’t know much about kids, so I’m probably the wrong person to answer that question. Caleb is 13, and he is old enough. I imagine even younger kids could handle it.

Toy packaging always lists recommended ages. When I was young, all my favorite toys were labeled for kids older than I was. You know your kids better than I do, so use your best judgment. Can they be trusted to handle an 80 mile per hour guided missile? That’s entirely up to you!

Final thoughts

The gear required to fly FPV is an awesome gift, but it isn’t for everyone. The hobby requires a big time investment, and the younger the recipient of that gift, the more help and guidance they will need from an adult. You need to remember that flying is only part of the hobby. They might have to spend almost as much time building and repairing quads as they do flying, and they may need a lot of assistance!

This is a great hobby for a parent and child to enjoy together. It is plenty of fun for adults, and it is a lot of fun for kids. Flying an FPV quad is a lot like playing a video game, except that it isn’t a game. You get to fly an actual aircraft in the real world. How amazing would that have been when you were a kid?

Do you fly FPV with your kids? If you do, please leave a comment. I’d love to hear about your experiences!