UPDATE: Everything in this blog post is still relevant today, but I’ve since upgraded to 40-gigabit InfiniBand cards. When I bought the used 20-gigabit InfiniBand gear, it was already 10 years old. Today, the 40-gigabit gear is 10 years old, and it costs about what I paid for the 20-gigabit hardware. You should skip the 20-gigabit InfiniBand hardware and go straight to 40-gigabit.

Gigabit Ethernet ought to be fast enough for anybody, right? It usually is, at least for me. Heck, even Wi-Fi is fast enough for most of my local streaming and Internet needs. I am impatient every now and again, and when I’m impatient, I think about what I might do to upgrade my home network.

My friend Brian decided to add 10 Gigabit Ethernet to his home network. I would enjoy having a faster link between my desktop computer and virtual machine server, and I didn’t want Brian to leave me behind in the dark ages, so I decided to give InfiniBand a try.

- 40-Gigabit Infiniband: An Inexpensive Performance Boost For Your Home Network

- Building a Cost-Conscious, Faster-Than-Gigabit Network at Brian’s Blog

- Can You Run A NAS In A Virtual Machine? at patshead.com

- Pat’s NAS Building Tips and Rules of Thumb at Butter, What?!

Why InfiniBand?

As it just so happens, used InfiniBand hardware is fast and inexpensive. It isn’t just inexpensive; it’s downright cheap! I bought a pair of 2-port 20-gigabit InfiniBand cards and a pair of cables on eBay for less than $70. That’s roughly $20 per card and $15 per cable.

If that’s not fast enough for you, 2-port 40-gigabit cards don’t cost a lot more. I figured I’d save a few bucks on this experiment, since I don’t have fast enough disks to outpace a single 20-gigabit port. In fact, the RAM in my little server probably can’t keep up with a pair of 20-gigabit ports!

I decided to be optimistic, though. I bought two cables just in case 20 gigabits wasn’t enough.

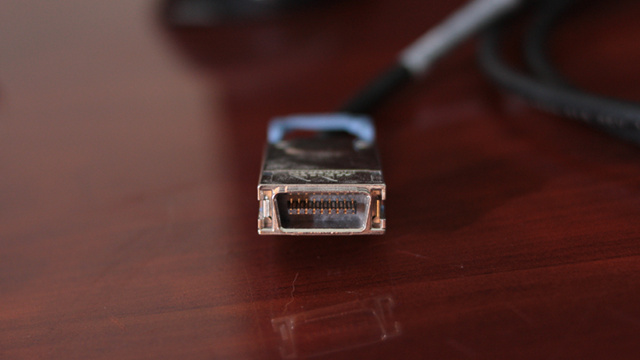

I bought InfiniBand cards with CX4 ports. The cards aren’t always labeled well on eBay, though. Most of the CX4 Mellanox-brand cards will have XTC in their model number. My Mellanox cards were rebranded as HP, so they don’t have those helpful part numbers. Luckily for us, pictures of the cards make it terribly obvious.

You can buy used InfiniBand gear at Amazon or eBay. I usually prefer the convenience of Amazon, but the prices are quite a bit better at eBay.

- InfiniBand Cards at Amazon

- InfiniBand CX4 Cables at Amazon

How is InfiniBand different than 10 Gigabit Ethernet?

10 Gigabit Ethernet is another incremental upgrade to the Ethernet protocol, and it works with TCP/IP in exactly the manner you’d expect. You can run IP over InfiniBand, but that isn’t what it is designed for—you lose the advantages of InfiniBand’s Remote Direct Memory Access (RDMA). I knew before ordering my InfiniBand cards that this might cost me some performance, but I didn’t know how much.

I can run iSCSI over RDMA, and these InfiniBand cards should be fast enough that I wouldn’t be able to tell if an SSD were plugged into the local machine or the server on the other side of the InfiniBand cable.

Even though I don’t currently have a need for iSCSI, I did initially plan to test its performance. However, you need to install all sorts of third-party OFED packages to run iSCSI over RDMA. I don’t need any of those packages to use IP over InfiniBand (IPoIB), so I didn’t think it was worthwhile to pollute my desktop and homelab server with extra cruft.

Performance is much better than Gigabit Ethernet, but I have a bottleneck somewhere

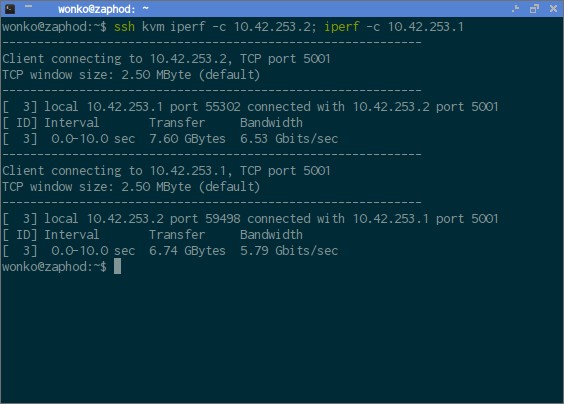

IP over InfiniBand (IPoIB) was easy to set up. The InfiniBand network interfaces default to datagram mode, which was extremely slow for me. Putting the interfaces in connected mode greatly improved my speeds. I am able to push around 5.8 or 6.5 gigabits per second between my little server and my desktop computer, depending on which direction the traffic is flowing.
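On Linux, the IPoIB driver exposes the datagram/connected switch as a per-interface sysfs attribute named `mode`. Here is a minimal Python sketch of flipping it by hand, assuming the interface is called `ib0` and that you run it as root. The `sysfs_root` parameter is only there so the function can be pointed at a scratch directory for testing.

```python
from pathlib import Path


def set_ipoib_connected(iface: str = "ib0", mtu: int = 65520,
                        sysfs_root: str = "/sys/class/net") -> None:
    """Switch an IPoIB interface to connected mode, then raise its MTU.

    Assumes root privileges and the standard sysfs layout, where
    <sysfs_root>/<iface>/mode accepts "datagram" or "connected".
    """
    dev = Path(sysfs_root) / iface
    # Datagram mode caps the MTU near the InfiniBand link MTU, so the
    # 65520-byte MTU is only accepted after switching to connected mode.
    (dev / "mode").write_text("connected\n")
    (dev / "mtu").write_text(f"{mtu}\n")
```

Calling `set_ipoib_connected("ib0")` on each host does at runtime what a persistent network configuration would do at boot.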

I had no idea how much network performance I’d be giving up to the extra memory-copying requirements of IPoIB. 6.5 gigabits per second is well short of the theoretical maximum speed of 16 gigabits per InfiniBand port, but it is already a HUGE improvement over my Gigabit Ethernet ports.
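The 16-gigabit figure comes from the line encoding. A 4x DDR port signals at 5 gigabaud on each of its four lanes (20 gigabits raw), and 8b/10b encoding spends 2 of every 10 bits on overhead. A quick worked example in Python:

```python
def ib_data_rate_gbps(lanes: int, gbaud_per_lane: float,
                      payload_bits: int = 8, coded_bits: int = 10) -> float:
    """Usable data rate of an InfiniBand link that uses 8b/10b encoding.

    SDR, DDR, and QDR links all use 8b/10b, so 8 of every 10 bits on
    the wire carry data.
    """
    raw = lanes * gbaud_per_lane  # raw signaling rate in Gb/s
    return raw * payload_bits / coded_bits


ddr_4x = ib_data_rate_gbps(lanes=4, gbaud_per_lane=5.0)   # 16.0
qdr_4x = ib_data_rate_gbps(lanes=4, gbaud_per_lane=10.0)  # 32.0
print(f"4x DDR: {ddr_4x:.1f} Gb/s, 4x QDR: {qdr_4x:.1f} Gb/s")
print(f"IPoIB result as a fraction of DDR: {6.5 / ddr_4x:.0%}")  # 41%
```

The same math is why a "40-gigabit" QDR port tops out at 32 gigabits of actual data.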

At first, I had assumed my speed limitation was caused by the low-power CPU in my KVM server; I certainly didn’t choose it for its performance. During all my early testing, iperf’s CPU utilization was always precisely 50%. While writing this blog post, however, I’m seeing 55% utilization when testing in one direction and 74% in the other.

I’ve also tested RDMA and rsocket performance, which should both be much faster. The RDMA benchmark tools were no faster than my IPoIB iperf tests, and my librspreload.so tests using iperf yielded identical performance as well. Those LD_PRELOAD tests with librspreload.so were definitely working correctly, because there was no traffic over the IPoIB link during the test.
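The rsocket test works by preloading librspreload.so so that an unmodified program like iperf uses rsockets instead of kernel TCP sockets. Below is a hedged Python sketch of that test plus the sanity check described above: snapshot the IPoIB interface’s byte counters, run the preloaded command, and compare. The library path, the interface name `ib0`, the server address, and the iperf arguments are all assumptions; librspreload.so lives in different directories on different distributions.

```python
import os
import subprocess
from pathlib import Path

# Assumed location; check where your distribution installs librspreload.so.
RSPRELOAD = "/usr/lib/x86_64-linux-gnu/rsocket/librspreload.so"


def read_byte_counters(iface: str,
                       sysfs_root: str = "/sys/class/net") -> tuple[int, int]:
    """Return (rx_bytes, tx_bytes) for a network interface from sysfs."""
    stats = Path(sysfs_root) / iface / "statistics"
    rx = int((stats / "rx_bytes").read_text())
    tx = int((stats / "tx_bytes").read_text())
    return rx, tx


def preload_env(library: str = RSPRELOAD) -> dict[str, str]:
    """Copy the current environment and add the rsocket preload library."""
    env = dict(os.environ)
    env["LD_PRELOAD"] = library
    return env


def run_over_rsockets(cmd: list[str], iface: str = "ib0") -> int:
    """Run cmd with rsockets preloaded; return bytes seen on the IPoIB interface.

    RDMA traffic bypasses the IPoIB netdev, so a result near zero suggests
    the preload worked and the data never touched the IPoIB link.
    """
    before = read_byte_counters(iface)
    subprocess.run(cmd, env=preload_env(), check=True)
    after = read_byte_counters(iface)
    return sum(after) - sum(before)

# Hypothetical usage on the client, with the server's IPoIB address:
# moved = run_over_rsockets(["iperf", "-c", "10.42.0.1", "-t", "30"])
```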


These results led me to dig deeper. Before ordering these InfiniBand cards, I was sure I remembered seeing an empty PCIe 16x slot in both my desktop and my KVM server. As it turns out, both of those slots are only 4x PCIe slots. Uh oh!


According to lspci, my Mellanox MT25418 cards appear to be PCIe 2.x devices, so they should be capable of operating at 5.0GT/s per lane. In a 4x slot, that’s 16 gigabits per second after encoding overhead. Unfortunately, they’re running at half that speed. This easily explains my 6.5-gigabit limit, but I had to dig deeper to figure out why these cards identify as PCIe 2.x while operating at PCIe 1.x speeds.
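The mismatch shows up in `lspci -vv` output: `LnkCap` is what the device advertises, and `LnkSta` is what the link actually negotiated. Here is a small Python sketch that pulls both out of saved output for a single device (for example from `lspci -vv -s <slot>`) and flags a downgrade. The sample lines are illustrative, not copied from my machines, and the exact formatting varies between pciutils versions.

```python
import re

# Matches "LnkCap:" and "LnkSta:" lines (not LnkCap2/LnkSta2) on one line each.
LINK_RE = re.compile(r"(LnkCap|LnkSta):.*?Speed ([\d.]+)GT/s.*?Width x(\d+)")


def parse_link(lspci_text: str) -> dict[str, tuple[float, int]]:
    """Map 'LnkCap' and 'LnkSta' to (GT/s per lane, lane count)."""
    links = {}
    for name, speed, width in LINK_RE.findall(lspci_text):
        links[name] = (float(speed), int(width))
    return links


def is_downgraded(lspci_text: str) -> bool:
    """True if the negotiated speed or width is below the advertised one."""
    links = parse_link(lspci_text)
    cap_speed, cap_width = links["LnkCap"]
    sta_speed, sta_width = links["LnkSta"]
    return sta_speed < cap_speed or sta_width < cap_width


sample = """
    LnkCap: Port #8, Speed 5GT/s, Width x8, ASPM L0s, Exit Latency L0s unlimited
    LnkSta: Speed 2.5GT/s, Width x4, TrErr- Train- SlotClk+ DLActive- BWMgmt-
"""
print(parse_link(sample))     # {'LnkCap': (5.0, 8), 'LnkSta': (2.5, 4)}
print(is_downgraded(sample))  # True
```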

The Mellanox site says that the MT25418 cards are PCIe 1.x and the MT26418 cards are PCIe 2.x. As far as I can tell, Mellanox cards whose model numbers (the card model numbers with XTC in them, not the MT chip numbers) contain an 18 or 28 are PCIe 1.x, while model numbers containing 19 or 29 are PCIe 2.x.

Unfortunately, the faster cards cost four times as much. If you don’t have 8x or 16x PCIe slots available, and you need to double your performance, this might be a worthwhile investment. For my purposes, though, I am extremely pleased to have a 6.5-gigabit interconnect that only cost $55. That’s roughly 800 megabytes per second—faster than most solid-state drives.
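The back-of-the-envelope math behind those numbers: PCIe 1.x and 2.x both use 8b/10b encoding, at 2.5 and 5.0 GT/s per lane respectively. This Python sketch ignores packet and protocol overhead, which is part of why real transfers land below these ceilings.

```python
def pcie_gbps(gt_per_s: float, lanes: int) -> float:
    """Usable PCIe bandwidth in Gb/s for the 8b/10b generations (1.x and 2.x)."""
    return gt_per_s * lanes * 8 / 10


def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert gigabits per second to decimal megabytes per second."""
    return gbps * 1000 / 8


gen1_x4 = pcie_gbps(2.5, 4)  # 8.0 Gb/s: a PCIe 1.x card in a 4x slot
gen2_x4 = pcie_gbps(5.0, 4)  # 16.0 Gb/s: double that with a PCIe 2.x card
print(gen1_x4, gen2_x4)      # 8.0 16.0
print(gbps_to_mb_per_s(6.5)) # 812.5
```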

You can’t bond IPoIB connections

My extra InfiniBand cable was completely useless for me. It took me a while to learn that my cards just aren’t capable of maxing out even a single DDR InfiniBand port in my machines. Of course, before testing anything at all, the first thing I tried to do after a successful ping was attempt to bond my two InfiniBand ports.

You can’t do it. IPoIB interfaces aren’t Ethernet devices, and the Linux bonding driver only supports active-backup mode for InfiniBand slaves. You can use the channel bonding interface to set up automatic failover, but you can’t use channel bonding to increase your IPoIB bandwidth.
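For the failover-only case, the bonding driver can be driven through sysfs. This is a hedged Python sketch, assuming the `bonding` module is loaded, you are root, and the bond is named `bond0` (a hypothetical name). It builds the ordered list of writes first so the sequence can be inspected before anything is touched. Depending on kernel version, the IPoIB interfaces may need to be down before they can be enslaved.

```python
from pathlib import Path


def active_backup_plan(bond: str, slaves: list[str], miimon_ms: int = 100,
                       sysfs_root: str = "/sys/class/net") -> list[tuple[Path, str]]:
    """Ordered sysfs writes for an active-backup (failover-only) bond.

    Active-backup keeps one slave carrying traffic and the other idle,
    so it adds redundancy, not bandwidth.
    """
    root = Path(sysfs_root)
    opts = root / bond / "bonding"
    plan = [
        (root / "bonding_masters", f"+{bond}"),  # create the bond device
        (opts / "mode", "active-backup"),        # set the mode before adding slaves
        (opts / "miimon", str(miimon_ms)),       # link-monitoring interval in ms
    ]
    plan += [(opts / "slaves", f"+{s}") for s in slaves]
    return plan


def apply_plan(plan: list[tuple[Path, str]]) -> None:
    """Perform the writes in order (requires root and the bonding module)."""
    for path, value in plan:
        path.write_text(value + "\n")
```

Printing `active_backup_plan("bond0", ["ib0", "ib1"])` shows the exact writes without applying them.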

This led to a second problem.

You can’t attach KVM virtual machines to IPoIB interfaces

KVM can only bridge directly to Ethernet (layer-2) network devices, and IPoIB interfaces aren’t Ethernet devices. This seemed like an easy problem for an old-school network engineer like myself. I figured I’d just need to create a new bridge device on the KVM server with a new subnet. Then I’d just need to route from the IPoIB subnet to the new virtual subnet. Easy peasy, right?
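The routed setup boils down to a handful of iproute2 and sysctl commands. This Python sketch only assembles them and prints them by default. The addressing is hypothetical: 10.42.0.0/24 on the IPoIB link with the server at 10.42.0.1, and 10.43.0.0/24 on a new bridge named `br1`, with the virtual machines using the bridge address as their gateway.

```python
import subprocess


def server_commands(bridge: str = "br1",
                    bridge_addr: str = "10.43.0.1/24") -> list[list[str]]:
    """On the KVM server: create the VM bridge and enable IPv4 forwarding."""
    return [
        ["ip", "link", "add", bridge, "type", "bridge"],
        ["ip", "addr", "add", bridge_addr, "dev", bridge],
        ["ip", "link", "set", bridge, "up"],
        ["sysctl", "-w", "net.ipv4.ip_forward=1"],
    ]


def desktop_commands(vm_subnet: str = "10.43.0.0/24",
                     server_ib_addr: str = "10.42.0.1",
                     iface: str = "ib0") -> list[list[str]]:
    """On the desktop: send VM-subnet traffic to the server's IPoIB address."""
    return [["ip", "route", "add", vm_subnet, "via", server_ib_addr, "dev", iface]]


def run_all(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands by default; pass dry_run=False to execute them as root."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)


run_all(server_commands())
run_all(desktop_commands())
```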

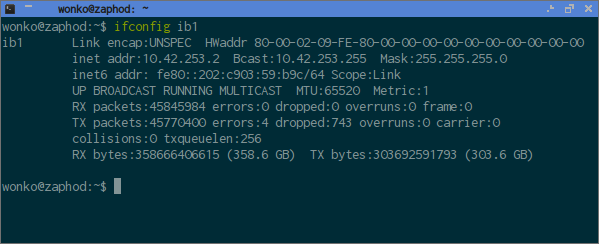

It should have been easy, but I spent days trying to make it work. I knew I had to set those giant InfiniBand MTUs of 65520 on all these interfaces, but I just couldn’t get decent speeds when routing. At first, I was getting 5 or 6 gigabits per second in one direction, but I wasn’t even hitting DSL speeds in the opposite direction.

It was easy enough to fix, and I’m more than a little embarrassed to tell you what the problem was. I had missed an important interface: I just couldn’t set the MTU on my KVM bridge to 65520. It failed. As it turns out, you can’t set the MTU of a bridge device to 65520 until another interface is attached to the bridge.

When my virtual machine on that bridge starts up, it creates a vnet0 device and immediately attaches it to the bridge. Once that device is created, you can set its MTU to 65520, and then you’ll be allowed to set the MTU of the bridge to 65520. Then everything works as expected.
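The fix is all about ordering, so here is a minimal Python sketch of it using sysfs. It assumes root, a bridge named `br1` (hypothetical), and the `vnet0` tap device that appears when the virtual machine starts. It reads the bridge’s ports from the bridge’s `brif` directory, raises every port’s MTU, and only then raises the bridge’s.

```python
from pathlib import Path


def bridge_ports(bridge: str, sysfs_root: str = "/sys/class/net") -> list[str]:
    """List interfaces attached to a bridge via <bridge>/brif in sysfs."""
    brif = Path(sysfs_root) / bridge / "brif"
    return sorted(p.name for p in brif.iterdir()) if brif.is_dir() else []


def raise_bridge_mtu(bridge: str, ports: list[str], mtu: int = 65520,
                     sysfs_root: str = "/sys/class/net") -> None:
    """Raise the MTU on every bridge port first, then on the bridge itself.

    Per the behavior described above, the bridge refuses a 65520-byte MTU
    until a port is attached, so the ports have to go first.
    """
    if not ports:
        raise ValueError(f"{bridge} has no ports yet; start the VM first")
    root = Path(sysfs_root)
    for port in ports:
        (root / port / "mtu").write_text(f"{mtu}\n")
    (root / bridge / "mtu").write_text(f"{mtu}\n")

# Hypothetical usage on the KVM server after the VM is running:
# raise_bridge_mtu("br1", bridge_ports("br1"))
```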

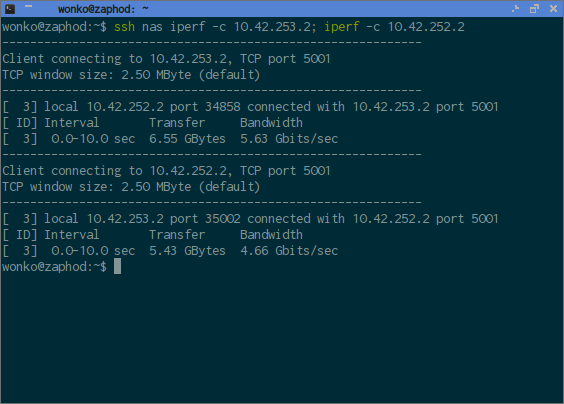

I am losing some performance here. I can “only” manage 5.6 or 4.6 gigabits per second between a virtual machine and my desktop computer. That’s still four or five times faster than my Gigabit Ethernet network, so I can’t complain.

How do I configure these IPoIB and KVM interfaces?

Documenting my new network and KVM configuration would probably double the size of this blog post, so I’m going to do a separate write-up on that soon. I will summarize things here, though!

You need an InfiniBand subnet manager. I’m under the impression that one of these may already be running on your InfiniBand switch, assuming you have one. I’m only connecting these two machines, so I don’t have a switch. I’m running opensm on my KVM server. I don’t believe I had to do anything to configure opensm after installing it.
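A quick way to tell whether a subnet manager is doing its job: ports only reach the ACTIVE state after an SM has configured them, and a cabled port with no SM sits in INIT. This minimal Python sketch reads the port states from sysfs. The `mlx4_0` name in the docstring is only an example, since ConnectX cards like these normally show up under the mlx4 driver.

```python
from pathlib import Path


def ib_port_states(sysfs_root: str = "/sys/class/infiniband") -> dict[str, str]:
    """Map '<device>/<port>' to its state, e.g. 'mlx4_0/1' -> 'ACTIVE'.

    Each state file holds text like '4: ACTIVE'; only the name is kept.
    """
    states = {}
    for state_file in sorted(Path(sysfs_root).glob("*/ports/*/state")):
        port = state_file.parent.name
        device = state_file.parent.parent.parent.name
        states[f"{device}/{port}"] = state_file.read_text().split(":", 1)[-1].strip()
    return states


def ports_waiting_for_sm(states: dict[str, str]) -> list[str]:
    """Ports stuck in INIT usually have a physical link but no subnet manager."""
    return [port for port, state in states.items() if state == "INIT"]
```

If `ports_waiting_for_sm(ib_port_states())` returns anything after opensm is running, the SM probably isn’t bound to the right port.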

Setting up the link between the two physical hosts was extremely easy, and it only required a few extra lines in my /etc/network/interfaces file to put the IPoIB links into connected mode. Other than that, they look just like any other network device.
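Those extra lines amount to a `pre-up` hook that switches the mode before the interface comes up, plus the larger MTU. As a hedged sketch, this Python function renders an ifupdown stanza of that shape. The interface name and the 10.42.0.1 address are hypothetical placeholders, not necessarily what my hosts use.

```python
def ipoib_stanza(iface: str, address: str, netmask: str, mtu: int = 65520) -> str:
    """Render an /etc/network/interfaces stanza for an IPoIB link in connected mode."""
    return "\n".join([
        f"auto {iface}",
        f"iface {iface} inet static",
        f"    address {address}",
        f"    netmask {netmask}",
        # pre-up runs before the link is configured, so the large MTU is allowed.
        f"    pre-up echo connected > /sys/class/net/{iface}/mode",
        f"    mtu {mtu}",
    ]) + "\n"


print(ipoib_stanza("ib0", "10.42.0.1", "255.255.255.0"))
```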


I am not routing any traffic between my InfiniBand subnet and my old Ethernet subnet. It isn’t necessary, since both machines on my InfiniBand network are also on the Ethernet network.

The verdict

I’m quite pleased with my InfiniBand experiment. It may have a few quirks that can be avoided if you use 10 Gigabit Ethernet instead, but InfiniBand costs quite a bit less, especially if you have enough machines that you need to use a switch—there are plenty of 8-port InfiniBand switches on eBay for under $100!

Had I known ahead of time that the InfiniBand cards I chose only supported PCIe 1.x, I would have spent more to upgrade to faster cards. If I had done that, though, this would probably be a much less interesting blog post. I can’t really complain about the performance I’m getting, either. All the disks on my KVM host machine are encrypted, and its CPU can only process AES at about seven gigabits per second. These cards are still just about fast enough to push my hardware to its limits.

I convinced my friend Brian to build a heavy-duty, dual-processor homelab server using a pair of 8-core, 16-thread Xeon processors. I don’t really have a need for a beast like that in my home office, but I haven’t built anything like that just for the fun of it in a long time. I may end up building a similar machine later this year to add to my InfiniBand network!

Are you using InfiniBand or 10-Gigabit Ethernet at home? Do you have questions? Leave a comment or stop by and chat with us on our Discord server!

- RAID Configuration on My Home Virtual Machine Server at patshead.com

- Choosing a RAID Configuration For Your Home Server at Butter, What?!