This isn’t a tutorial. The steps to enable 802.11r on two or more access points running any reasonably recent release of OpenWRT are pretty simple. I will spell those steps out shortly, but there won’t be screenshots walking you through the process. I am writing today to document what I have done, what I might do next, and how things are working out so far.

I figured out what this long ass cable is! It is bypassing the extra layer of switches there and connecting my office to the switch ports on the router. Maybe I should color code that and make a correct length cable! pic.twitter.com/7gEHHaPDvL

— Pat Regan (@patsheadcom) October 25, 2022

As I fact-check this post, it just keeps getting longer and longer. So much longer than I expected! It feels like every time I look up specifics to make sure my memories of various events are reasonably accurate, I find something I had misunderstood or misremembered.

Everything in here should be pretty accurate now, but I am definitely not an expert when it comes to any of the newer WiFi roaming specifications or technologies. This is just my experience while messing around with this stuff over the last week or so.


Why is Pat monkeying with his WiFi setup?!

I have not been entirely unhappy with the WiFi signal around my house for the last few years, so how did we get here? Why have I upgraded things?

I was at Brian Moses’s house a couple of weeks ago. He’d just replaced one of his OpenWRT access points, a TP-Link Archer A7 WiFi 5 router, with a GL.iNet WiFi 6 router. That got my gears turning. I was thinking I could use Brian’s old router to solve my poor connectivity issues with my Raspberry Pi Zero W on my CNC machine in the garage. It’d get me a switch port to plug the laptop into as well, so I asked him how much he wanted for it.

The next week he sent me home with two of his old OpenWRT-compatible routers: that TP-Link and a Linksys WRT3200ACM. Now that I had an overabundance of WiFi routers, I figured it was time to reengineer my home network and eliminate my only access point that can’t run OpenWRT.

NOTE: You shouldn’t buy the TP-Link or Linksys router. The Linksys seems terribly overpriced, and it feels like the TP-Link should cost less, too. You can get two better-equipped WiFi 6 routers from GL.iNet for the price of the Linksys WRT3200ACM WiFi 5 router, and the GL.iNet routers ship from the factory with OpenWRT.

The layout of our house

There are much bigger homes in our neighborhood, but the homes with significantly more square footage have a second story. Our house is shaped like the letter U, and Zillow claims it is around 2,200 square feet. I also need to cover the garage, which adds another 500 square feet. Let’s just say I need to cover about 2,700 square feet with WiFi, and reaching out into the yard would be a bonus!

I have two access points, and each access point has a separate SSID on each radio. Chicanery2.4 and Chicanery5 are on the gateway router that lives in the network cupboard. Shenanigans2.4 and Shenanigans5 are on an access point in the living room. Both devices are D-Link DIR-860L routers. Both have faster WiFi when running D-Link firmware, but the one in the living room can’t even run OpenWRT.

Shenanigans is named after Farva’s favorite restaurant, and Chicanery is named after its Canadian equivalent from the sequel.

This is how things were configured last week.

Why not just put the same SSID on every access point?

We can call this my poor man’s WiFi steering. The access point in the living room does a good job reaching the entire house, but there are some important devices that are close to the network cupboard.

There is a television in the room opposite the network cupboard, and it is connected to Chicanery5. The television in the living room is also connected to Chicanery5.

Why isn’t the TV in the living room connected to the access point in the living room? It is close enough to the cupboard to get a good connection, so there is no reason to waste 20 or 30 megabits per second on the house’s primary access point.

These devices are still connected to the access point in the cupboard today.

What have I changed?

I wound up putting the Linksys WRT3200ACM in the cupboard as our new Internet gateway and our upgraded Chicanery access point. I did some tests, and this router can easily manage routing and NAT at 920 megabits per second. That’s only about 20 megabits per second shy of the roughly 940 megabits per second of TCP throughput that gigabit Ethernet can realistically carry, so we won’t have to worry about swapping routers if we upgrade the speed of our FiOS service.
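Why is 920 megabits per second so close to the limit? Every full-size frame spends some of its bytes on headers and Ethernet framing, so TCP can never move a full 1,000 megabits per second of data. Here is a back-of-the-envelope sketch, assuming a 1,500-byte MTU, IPv4 and TCP headers with the timestamp option (52 bytes), and 38 bytes of per-frame overhead on the wire (preamble, MAC header, frame check sequence, and inter-frame gap):

```python
# Back-of-the-envelope TCP goodput over gigabit Ethernet.
# Assumptions: 1500-byte MTU, IPv4 + TCP with timestamps (20 + 32 bytes),
# and 38 bytes of per-frame Ethernet overhead on the wire.

LINE_RATE_MBPS = 1000
MTU = 1500
IP_TCP_HEADERS = 20 + 32              # IPv4 header + TCP header with timestamp option
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12   # preamble/SFD, MAC header, FCS, inter-frame gap


def tcp_goodput_mbps(line_rate=LINE_RATE_MBPS, mtu=MTU,
                     headers=IP_TCP_HEADERS, overhead=ETHERNET_OVERHEAD):
    """Return the maximum TCP payload rate when every frame is full size."""
    payload = mtu - headers      # bytes of application data per frame
    on_wire = mtu + overhead     # bytes each frame occupies on the wire
    return line_rate * payload / on_wire


if __name__ == "__main__":
    ceiling = tcp_goodput_mbps()
    measured = 920  # the routing/NAT result from the WRT3200ACM test
    print(f"practical ceiling: {ceiling:.1f} Mbps")
    print(f"headroom above {measured} Mbps: {ceiling - measured:.1f} Mbps")
```

That works out to a ceiling of about 941.5 Mbps, or roughly 21 Mbps of headroom above 920 Mbps. Without TCP timestamps the headers shrink to 40 bytes and the ceiling rises to about 949 Mbps.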

I put the TP-Link Archer A7 in the living room. Both routers are running the latest release of OpenWRT, and they have all their old SSID settings copied over.

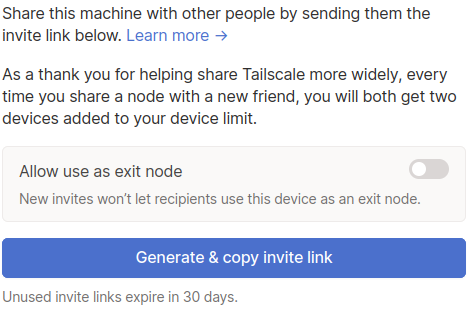

I also added a new SSID called kerchow to every radio. I set up 802.11r fast transition on that SSID for nearly instantaneous roaming.

How do you enable 802.11r roaming in OpenWRT?

I gave every radio that I wanted participating in the roaming the same SSID and WPA2 key. I also had to choose a mobility domain for my roaming group, which is a four-digit hexadecimal identifier. Then I had to:

- Tick the checkbox on that interface to enable 802.11r

- Enter my mobility domain

- Choose FT over the Air for the FT protocol

- Set DTIM Interval to 3 (supposedly helps iOS devices roam)

The first two are the only steps that are truly required. Lots of advice on the Internet recommended using FT over the Air, but I haven’t compared the two options. People said to do it, it seems to work well, so I am sticking with it.

I have no iOS devices, so I have no idea about the DTIM Interval.
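If you would rather use the command line than LuCI, those checkboxes correspond to options in /etc/config/wireless. The Python sketch below only prints uci commands for a single wifi-iface section. It assumes the option names ieee80211r, mobility_domain, ft_over_ds, and dtim_period used by recent OpenWRT releases, and default_radio0 is just a hypothetical section name. Run uci show wireless on your own router to find the right sections, and repeat for every interface in the roaming group.

```python
import re

# Hypothetical helper that prints uci commands mirroring the LuCI steps.
# Assumed option names (verify on your release): ieee80211r, mobility_domain,
# ft_over_ds, dtim_period in a wifi-iface section of /etc/config/wireless.

MOBILITY_DOMAIN_RE = re.compile(r"^[0-9a-fA-F]{4}$")


def fast_transition_commands(section, mobility_domain, over_the_air=True,
                             dtim_period=None):
    """Return uci commands enabling 802.11r on one wifi-iface section."""
    if not MOBILITY_DOMAIN_RE.match(mobility_domain):
        raise ValueError("mobility domain must be exactly four hex digits")
    prefix = f"wireless.{section}"
    cmds = [
        f"uci set {prefix}.ieee80211r='1'",
        f"uci set {prefix}.mobility_domain='{mobility_domain.lower()}'",
        # ft_over_ds=0 corresponds to "FT over the Air"; 1 is "FT over DS".
        f"uci set {prefix}.ft_over_ds='{0 if over_the_air else 1}'",
    ]
    if dtim_period is not None:
        cmds.append(f"uci set {prefix}.dtim_period='{dtim_period}'")
    # Save the changes and reload the wireless configuration.
    cmds += ["uci commit wireless", "wifi reload"]
    return cmds


if __name__ == "__main__":
    for line in fast_transition_commands("default_radio0", "beef", dtim_period=3):
        print(line)
```

The mobility domain check is there because a typo in a four-character hex field is easy to miss when you are copying it onto several routers.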

How do I know if 802.11r is working?

I am not entirely sure I tested things correctly, but I am mostly confident that I did.

I used ssh to connect to both routers and ran logread -f to follow the logs to see when machines were connecting to WiFi. I had the OpenWRT LuCI GUI open in two browser tabs, and I would go in and click the disconnect button for my laptop.

I would immediately see output in one of the logs saying the laptop reconnected. I had a ping running on the laptop the entire time, and I never missed a ping. Sometimes one or two responses would bump up to 200 or 300 milliseconds, but they always made it.

This seems like a success to me!
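Watching a scrolling ping window works, but if you save the output to a file you can count losses and latency spikes after the fact. This is a minimal sketch, assuming Windows-style lines such as "Reply from 192.168.1.1: bytes=32 time=3ms TTL=64" and "Request timed out.", or Linux-style lines containing icmp_seq= and time=. The 100 ms spike threshold is an arbitrary choice.

```python
import re

# Summarize a saved ping transcript from a roaming test.
# Assumed formats: Windows "Reply from ...: bytes=32 time=3ms TTL=64" /
# "Request timed out.", or Linux "... icmp_seq=5 ttl=64 time=3.2 ms".

RTT_RE = re.compile(r"time[=<]([\d.]+)\s?ms")
SEQ_RE = re.compile(r"icmp_seq=(\d+)")


def summarize_ping(lines, spike_ms=100.0):
    """Count replies, lost packets, and round trips at or above spike_ms."""
    replies, timeouts, spikes, seqs = 0, 0, [], []
    for line in lines:
        if "Request timed out" in line:
            timeouts += 1
            continue
        rtt = RTT_RE.search(line)
        if rtt is None:
            continue  # banner, blank, or statistics line
        replies += 1
        ms = float(rtt.group(1))
        if ms >= spike_ms:
            spikes.append(ms)
        seq = SEQ_RE.search(line)
        if seq:
            seqs.append(int(seq.group(1)))
    # Linux ping prints nothing for a lost packet, so count icmp_seq gaps.
    gaps = sum(b - a - 1 for a, b in zip(seqs, seqs[1:]) if b > a + 1)
    return {"replies": replies, "lost": timeouts + gaps, "spikes_ms": spikes}


if __name__ == "__main__":
    # Made-up sample lines, not results from the tests described above.
    sample = [
        "Reply from 192.168.1.1: bytes=32 time=3ms TTL=64",
        "Reply from 192.168.1.1: bytes=32 time=250ms TTL=64",
        "Reply from 192.168.1.1: bytes=32 time<1ms TTL=64",
    ]
    print(summarize_ping(sample))
```

Windows ping reports timeouts explicitly, while Linux ping stays silent about lost packets, which is why the sketch also looks for gaps in icmp_seq.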

How do you know if your 802.11r setup is working well?!

I had a glitch. My Windows 11 laptop very much preferred 2.4 GHz connections. Once it picked a 2.4 GHz radio, it would always connect to another 2.4 GHz radio after being disconnected. I had to turn WiFi off and on again on the laptop to get it to connect to 5.8 GHz again.

This is a bummer, because the 5.8 GHz radios are about four times faster. The catch is that 802.11r only speeds up the handoff; it doesn’t decide where a device roams. The client makes that choice, and my laptop seems to care far more about signal strength than bandwidth. A 2.4 GHz signal has a much easier time penetrating walls than a 5.8 GHz signal, so the slower radio often has the stronger signal even though its throughput will be lower.

I solved this problem in a simple, ham-fisted, but very effective way. I now have three SSIDs and three different mobility domains.

I have kerchow with a mobility domain of beef on every single radio. I have kerchow2.4 with a mobility domain of b0b0 on the 2.4 GHz radios, and I have kerchow5 with a mobility domain of b00b on the 5.8 GHz radios.
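Juggling three SSIDs and three mobility domains across several routers is easy to goof up. Here is a hedged sketch of a sanity check: describe every radio as a (router, band, SSID, mobility domain) tuple, and it reports any SSID that ended up with more than one mobility domain. It also flags a mobility domain reused by different SSIDs, which mirrors the one-domain-per-SSID layout described above rather than a hard rule. The router names are hypothetical.

```python
import re
from collections import defaultdict

HEX4 = re.compile(r"^[0-9a-f]{4}$")

# SSID -> (mobility domain, bands it is broadcast on), per the layout above.
LAYOUT = {
    "kerchow": ("beef", ("2.4", "5.8")),
    "kerchow2.4": ("b0b0", ("2.4",)),
    "kerchow5": ("b00b", ("5.8",)),
}


def build_plan(routers, layout=LAYOUT):
    """Expand the layout into one (router, band, ssid, domain) tuple per radio."""
    return [
        (router, band, ssid, domain)
        for router in routers
        for ssid, (domain, bands) in layout.items()
        for band in bands
    ]


def check_plan(plan):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    domains_by_ssid = defaultdict(set)
    ssids_by_domain = defaultdict(set)
    for router, band, ssid, domain in plan:
        domain = domain.lower()
        if not HEX4.match(domain):
            problems.append(f"{router} {band} GHz {ssid}: {domain!r} is not four hex digits")
        domains_by_ssid[ssid].add(domain)
        ssids_by_domain[domain].add(ssid)
    for ssid, domains in sorted(domains_by_ssid.items()):
        if len(domains) > 1:
            problems.append(f"{ssid} uses several mobility domains: {sorted(domains)}")
    for domain, ssids in sorted(ssids_by_domain.items()):
        if len(ssids) > 1:
            problems.append(f"mobility domain {domain} is shared by {sorted(ssids)}")
    return problems


if __name__ == "__main__":
    plan = build_plan(["cupboard", "living-room"])
    # Simulate typing the wrong mobility domain on one radio.
    plan[-1] = ("living-room", "5.8", "kerchow5", "beef")
    for problem in check_plan(plan):
        print(problem)
```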

I wish I had discovered both DAWN and usteer before setting this up.

I added a GL.iNet WiFi 6 router to the mix

I have a GL.iNet GL-AXT1800 travel router here, and I was curious if the 802.11r configuration would even work on this hardware. These particular routers have radio chips that aren’t yet supported by OpenWRT, so they are shipped with an oddball proprietary Qualcomm fork of OpenWRT.

Brian said his GL.iNet WiFi 6 router got pretty goofed up when he configured the WiFi through LuCI instead of through GL.iNet’s interface, but it did let him add additional SSIDs to each interface through the LuCI GUI. I was worried that other things like 802.11r wouldn’t work.

I ran out of Farva-related restaurants, so I put the SSID of slammin on my GL-AXT1800. Then I added the three kerchow SSIDs with the correct mobility domains.

I don’t really have a good location for a third access point. I just found an open network jack near a power outlet that is about halfway between the other two access points. My laptop rarely decides to roam to this access point. I had to click disconnect in the OpenWRT GUI many, many times, but it did eventually connect right up to the WiFi 6 router.

As far as I can tell, 802.11r fast transition does work with the oddball OpenWRT firmware.

What about DAWN and usteer?

I am bummed out about this. A few weeks ago I learned the name of one of these packages, but I didn’t remember to make a note of it. I tried finding it several times over the last week, but I failed miserably.

Do you know when I managed to find one of them? Minutes after I set up nine different variations of kerchow on three different routers.

I don’t have a lot to say about either one at this point. They are both available as OpenWRT packages. They are tools that help you configure how devices roam between your WiFi access points.

Both seem fiddly and complicated, and success seems to involve a lot of trial and error. I’d be happy if either package could easily manage to accomplish two things.

I’d like to be able to eliminate two of the three kerchow variations. It sounds like configuring one of these tools to make your 2.4 GHz less appealing only requires a few lines of configuration. That would be awesome.

- Setting up DAWN and band-steering in OpenWrt at the OpenWRT Wiki

- Setting up usteer and band-steering in OpenWrt at the OpenWRT Wiki

Why am I waiting before trying DAWN or usteer?!

Both require replacing the default wpad-basic OpenWRT package with the more feature-complete version. I’m sure this will go smoothly, but I am just not ready to try something that might require that much effort!

If I were just monkeying around with a few test routers, this would be fine. That’s not what I am doing. These are the routers running my home network. I need them to work, and they are working right now.

NOTE: I might be confused about this. My fresh installs of OpenWRT 22.03 all have wpad-basic installed, and the description for this package claims it supports 802.11r. I am guessing that wpad-basic has replaced wpad-mini, which lacks this support. I am not certain whether wpad-basic has the features required for DAWN or usteer, but I suspect it will work fine!
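One way to settle which flavor of wpad a router actually has is to look at the output of opkg list-installed. Python usually isn’t installed on an OpenWRT router, so this sketch assumes you paste that output into a string on your desktop. It also assumes the "name - version" line format and the package names used by recent releases (wpad-basic-wolfssl, wpad-wolfssl, wpad-openssl, wpad-mesh-*, and the older wpad-mini). It only reports the flavor and makes no claim about which features each one includes.

```python
# Identify the installed wpad flavor from pasted `opkg list-installed` output.
# Assumption: each line looks like "package-name - version".


def wpad_variant(opkg_output):
    """Return (package, flavor) for the first wpad package found, or None."""
    for line in opkg_output.splitlines():
        name = line.split(" - ", 1)[0].strip()
        if name != "wpad" and not name.startswith("wpad-"):
            continue  # not a wpad package
        if name.startswith("wpad-basic"):
            return name, "basic"
        if name.startswith("wpad-mini"):
            return name, "mini"
        if name.startswith("wpad-mesh"):
            return name, "full (mesh)"
        return name, "full"  # plain wpad, wpad-openssl, wpad-wolfssl, ...
    return None


if __name__ == "__main__":
    pasted = """hostapd-common - 2022-01-16
wpad-basic-wolfssl - 2022-01-16-cff80b4f-20.1
wireless-regdb - 2022.08.12-1"""
    print(wpad_variant(pasted))
```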

Your setup is probably more complicated than mine!

I have learned that I can cover my entire house with acceptable WiFi speeds using a single access point. Sure, the speed drops to 20 or 30 megabits per second in the bathroom and garage, but that’s more than enough to watch YouTube videos. Anywhere with a desk can manage 200 megabits per second.

I don’t truly need multiple access points with fast roaming, but maybe you actually do! If you do, I imagine things get more complicated!

My access points have their radios cranked up to maximum output. If you’re trying to cover a slightly larger area with two access points, you probably don’t want to be blasting quite so hard. You want your devices to notice that one access point is weaker than the other so they can switch over.

I am also cheating because there is a gigabit Ethernet port at every desk in the house. I only need just enough WiFi reaching every comfy chair for my phone and laptop to at most play YouTube videos, and I need a couple of dozen megabits to get 4K video streaming to two televisions. Things get more complicated if you really need a solid 200 megabit wireless connection at specific workstations in the house.

Why does Pat keep using numbers like 200 megabit?! Can’t modern WiFi do gigabit?!

I’ve been doing iperf tests to various access points from all sorts of distances and different rooms all week. The fastest WiFi 6 router I have access to has managed to pull numbers really close to 700 megabit, but that only happens in the same room, and it only happens some of the time. I am way more likely to see 400 megabits per second while in the same room.

Once you start putting a couple of walls between your devices, these numbers drop. It seems like 250 megabits per second is about what I can expect from about one room away from the access point. The signal has to either bounce around corners through doorways or go through the pair of walls that make up the hallway.

The fastest advertised WiFi speeds are only possible under ideal conditions.

Something that surprised me!

First of all, if you are buying a fresh set of OpenWRT routers for your project, you should probably be buying GL.iNet routers that ship from the factory with OpenWRT. At least that way you know what you’re going to get. One of my old D-Link tube routers isn’t running OpenWRT because I had no way to know which revision of the hardware I was going to get before it arrived.

Not only that, but many WiFi routers that can run OpenWRT have radio chipsets that don’t run as well with the open source drivers. My older revision D-Link router’s WiFi is about 50% faster than the newer revision with OpenWRT.

Why is there a thick layer of dust?! For years, I had one of these tall cylinder routers wedged in the door, so the door wouldn't close, and the laundry room is where the cats' litter box used to live. I eventually hid the cylinder behind the rack so the door would close. pic.twitter.com/IT9cgk7cWa

— Pat Regan (@patsheadcom) October 23, 2022

I was handed a box of free OpenWRT-compatible WiFi hardware. I already knew the hardware would work. If this didn’t all happen accidentally, I would have bought GL.iNet routers instead.

I guess I should get to the weird part. The Linksys WRT3200ACM can be set to 200 mW on 5.8 GHz and 1,000 mW on 2.4 GHz. The TP-Link Archer is the opposite! It can be set to 1,000 mW on 5.8 GHz and 200 mW on 2.4 GHz!

Does the OpenWRT driver really know how much power the radio is going to put out? Is it anywhere near correct? We have no idea. I never trust numbers like this.

Radio is weird. You have to quadruple the transmit power to double your range. I have no way to properly verify just how much power the Archer A7 is pumping out, but the WiFi analyzer seems to think the TP-Link 5.8 GHz signal is quite a bit stronger than the WRT3200ACM!
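The quadruple-the-power rule comes from free-space path loss: received power falls off with the square of distance, so range grows with the square root of transmit power. The sketch below converts the advertised milliwatt figures to dBm and estimates the range multiplier, assuming the driver’s numbers are honest. The path_loss_exponent parameter is an assumption borrowed from the log-distance path loss model: 2 is free space, and indoor paths through walls are usually modeled with larger exponents, which shrink the benefit further.

```python
import math


def mw_to_dbm(milliwatts):
    """Convert transmit power from milliwatts to dBm (decibels relative to 1 mW)."""
    return 10 * math.log10(milliwatts)


def range_multiplier(p_high_mw, p_low_mw, path_loss_exponent=2.0):
    """Estimate how much farther a stronger transmitter reaches.

    With received power proportional to P / d**n, holding the received power
    constant gives d_high / d_low = (P_high / P_low) ** (1 / n).
    n = 2 is free space; walls and furniture push n higher.
    """
    return (p_high_mw / p_low_mw) ** (1 / path_loss_exponent)


if __name__ == "__main__":
    for mw in (200, 1000):
        print(f"{mw} mW = {mw_to_dbm(mw):.1f} dBm")
    print(f"4x power, free space: {range_multiplier(4, 1):.2f}x range")
    print(f"1000 mW vs 200 mW, free space: {range_multiplier(1000, 200):.2f}x range")
    print(f"1000 mW vs 200 mW, exponent 3: {range_multiplier(1000, 200, 3):.2f}x range")
```

Even in free space, a 1,000 mW radio would reach only about 2.2 times as far as a 200 mW radio, despite the fivefold difference in power.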

Conclusion

I don’t know if there is a conclusion. I set up 802.11r. I pointed a few of my WiFi devices at the 802.11r SSIDs. I think I am at the point where I wait and see how things work out.

Maybe the conclusion is that 802.11r was really easy to set up on my OpenWRT hardware. It isn’t much more work than checking a box, though it does get a bit more fiddly when you are trying to put the correct mobility domain on three different SSIDs on three different routers. I know I goofed at least one up on my first attempt!


- GL.iNet GL-AXT1800 Slate AX at Amazon

- GL.iNet Mango at Amazon