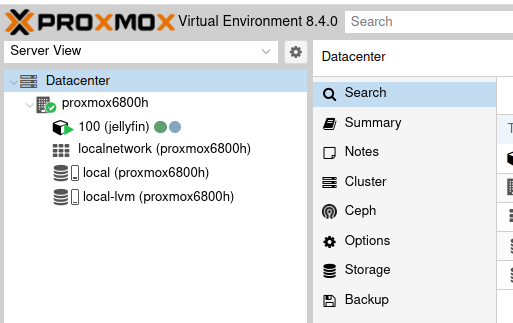

The hardware running Proxmox around my homelab is a little slow or a little outdated. I have an Intel N100 mini PC and a Ryzen 3550H mini PC here at home, and I have another Intel N100 mini PC off-site at Brian Moses’s house. The N100 machines are modern but lean towards the slow and power-sipping side. The Ryzen 3550H is basically a smaller, slower, older Ryzen 5700U.

I have been keeping my eye on various Ryzen 6000 mini PCs. They aren’t exactly bleeding edge, but they have a rather powerful iGPU, and they have more modern CPU cores than the Ryzen 5700U in my laptop or a Ryzen 5800U.

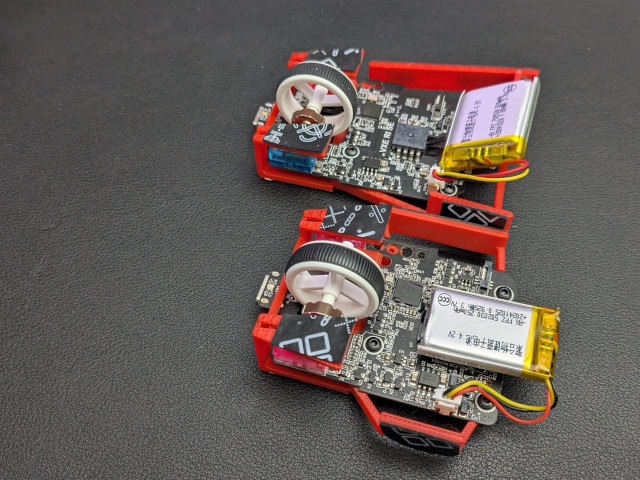

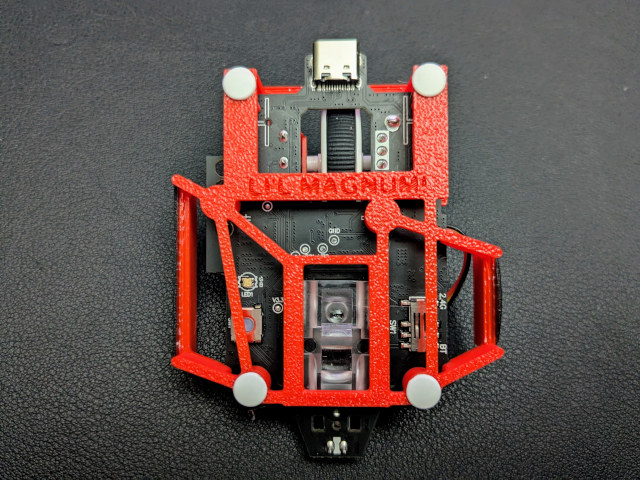

My Ryzen 6800H mini PC while I am installing Proxmox. It is in my home office sitting on top of my off-site Trigkey N100 Proxmox server and its 14-terabyte external USB storage.

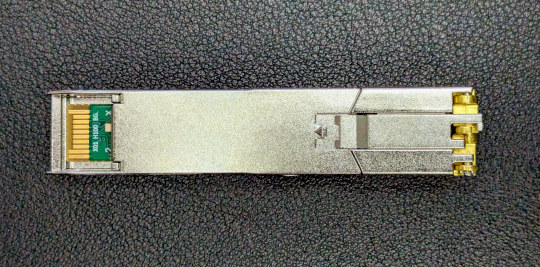

I have been waiting to see a nice specimen drop under $300, preferably one with 2.5-gigabit Ethernet and a pair of m.2 slots. If it weren’t for all the upcoming tariffs stirring up trouble for us, I would have expected Ryzen 6600U or Ryzen 6800H mini PCs to take the $250 price point away from the Ryzen 5800U mini PCs before summer ends.

I got everything I wanted except the price. I saw the Acemagician M1 on sale for $309, and I just had to snatch one up. I don’t really NEED to expand my homelab, but that is definitely a good enough price for me to be excited enough to do some testing!

Should I sneak in a tl;dr?

Like with most interesting things, the value proposition of a Ryzen 6800H mini PC can be less than simple.

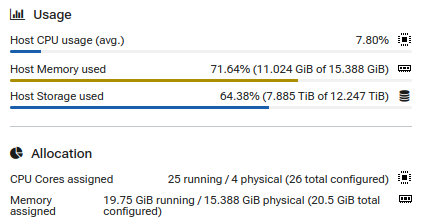

Let’s compare it to my lowest-cost Intel N100 Proxmox node. The Ryzen 6800H CPU is nearly four times faster, can fit two or three times as much RAM, and has a faster Ethernet port, but the N100 manages to transcode around 50% faster. At today’s prices, the Ryzen 6800H only costs a hair more than twice as much as the Intel N100.

Do you value that extra video-transcoding performance? Maybe you should save some cash and add an Intel N100 mini PC to your homelab, especially when you consider that the Ryzen 6800H burns 50 watts of electricity while transcoding. The Ryzen 6800H is just fast enough to transcode 4K 10-bit tone-mapped video in real time for at least two Jellyfin clients.

Maybe that is just the right amount of video-encoding performance for your needs. If you value that extra CPU chooch, then maybe you should splurge for a Ryzen 6600U or 6800H.

- Acemagician M1 Ryzen 6800H mini PC at Amazon

Do you have to watch out for Acemagician?

Maybe. There are reports that Acemagician installs spyware on the Windows 11 image that ships on their hardware. I never booted Windows 11 on mine. The very first thing I did was install Proxmox, so this didn’t matter to me at all.

We are still at a point where the handful of Goldilocks mini PCs don’t tend to go on sale at the lower price points. There are a lot of mini PCs with gigabit Ethernet and two m.2 slots, or with 2.5-gigabit Ethernet and one m.2 slot. Sometimes you even get a pair of Ethernet ports, and sometimes BOTH are 2.5-gigabit Ethernet ports. Finding the right combination for a good price can be a challenge!

I could see why you might want to vote against Acemagician with your wallet, but this was the correct porridge for me. It would have been nice if it had a second 2.5-gigabit Ethernet port, but that wasn’t a deal-breaker for me at $309.

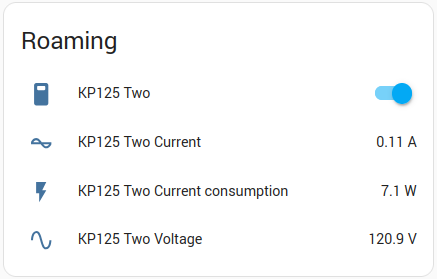

Home Assistant says that my power-metering smart outlet reads between 6.1 and 7.5 watts most of the time while my Acemagician M1 is sitting there waiting for a task, but it shoots up to a whopping 50 watts while transcoding video!

There is an oddity, though. Mine shipped with a single 16-gigabyte DDR5 SO-DIMM. I was expecting a pair of 8-gigabyte SO-DIMMs.

On one hand, that means I didn’t acquire a pair of worthless 8-gigabyte DDR5 SO-DIMMs that would be destined for a landfill. On the other hand, I am thinking about using this particular mini PC as a gaming console in the living room, so I could really use that dual-channel RAM for the iGPU. Not only that, but my single-channel RAM might be having an impact on my Jellyfin testing.

OH MY GOODNESS! This isn’t unexpected, but you absolutely need dual-channel memory for good 3D-gaming performance on your Ryzen 6800H. My FPS doubled in most games when I dropped in a second stick of RAM, and some weird regular stuttering in Grand Theft Auto 5 completely went away.

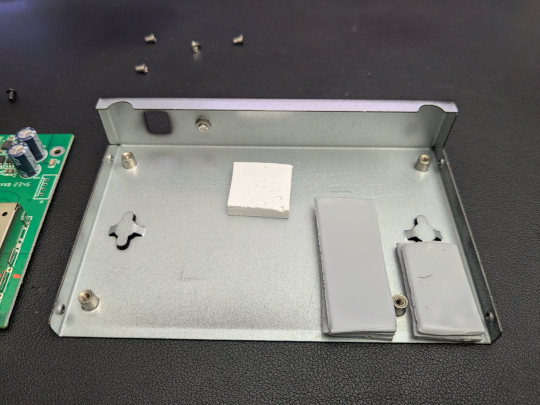

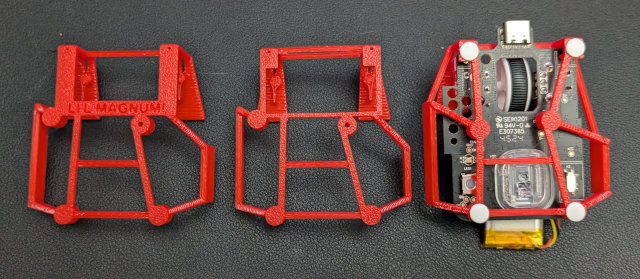

I should also say that taking apart the Acemagician M1 was the opposite of a delight. They hide the screws under the rubber feet, and when you pop the easy side of the lid off you are greeted with the big cooling fan. You have to finesse the entire motherboard out of the shell to reach the memory and NVMe slots underneath.

- Acemagician M1 Ryzen 6800H mini PC at Amazon

The rest of this blog will be about the Ryzen 6800H and not specifically my Acemagician Mini PC

The Ryzen 6800H is overkill for my personal homelab, but I wanted to be able to see how my new mini PC might handle some light gaming duties in the living room. Something like the 6-core Ryzen 6600U would have been a better fit for my homelab, and you can find those at better prices, but you get twice as many GPU cores out of the 8-core Ryzen 6800U or 6800H.

That isn’t a big deal for your homelab. The smaller iGPU probably has exactly as much Jellyfin transcoding performance as the heavier iGPU.

I already said some of this in the tl;dr. The Ryzen 6800H is roughly four times faster than an Intel N100 and maybe 25% faster than a Ryzen 5800U.

All mini PCs for your homelab are a good value

This statement is mostly true. You should make sure you’re buying when there is a sale, because there is always at least one mini PC brand running a sale on Amazon on any given day. You may have to wait to get a good deal on exactly the specs you want, but there’s always sure to be a deal coming up. We keep an eye on our favorite mini PC deals in our Discord community.

I have been doing a bad job keeping the pricing in my mini PC pricing spreadsheet up to date. When I last updated it, you could get an Intel N100 mini PC for $134, a Ryzen 5560U for $206, or a Ryzen 6900HX for $439. Each of those is roughly twice as fast as the model before it, and each costs around twice as much. The prices and performance don’t QUITE map out that linearly if you plot them on a graph, but none would stray that far from the line.

We haven’t seen deals that good on an Intel N100 or lower-end Ryzen 5000-series mini PC in a while. You’re going to wind up paying $150 or more today, possibly closer to $200. And there aren’t many 6-core Ryzen 5000-series mini PCs around now, so you have to pay a bit more for an 8-core Ryzen 5800U.

What’s exciting today is that the 8-core Ryzen 6000 mini PCs with 12-core RDNA2 iGPUs are four times faster than an Intel N100 or Intel N150 mini PC while only costing a bit more than twice as much.

Lots of small Proxmox nodes, one big one, or something in between?!

One of the cool things about mini PCs is that you can mix and match whatever assortment of servers you might need.

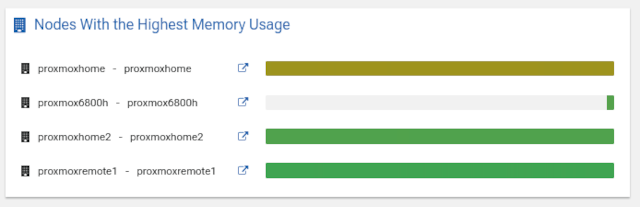

Do you want to save money on your electric bill? All these laptop-grade CPUs consume a similar amount of power when they aren’t doing any serious work, so it might be better to splurge on one overpowered mini PC that idles at 9 watts, because four Intel N100 boxes will each idle at 7 watts.

NOTE: Don’t just assume that someone else’s idle numbers will exactly match your own. Cramming four times as many virtual machines onto a Ryzen 6800H just because it has four times the CPU and RAM of an Intel N100 also means that you have four times as many opportunities for mostly idle virtual machines to keep the CPU awake. We aren’t always comparing apples to apples.

That is 9 watts vs. 28 watts. That difference only adds up to around $20 per year where I live, but that might be enough difference in power consumption to pay for a mini PC for someone in Europe over the course of 3 or 4 years.
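Here is a rough sketch of that arithmetic if you want to plug in your own numbers. The wattages are the idle figures from above, but both electricity rates are assumptions: $0.12 per kWh happens to land near my $20 per year, and $0.35 per kWh is just a hypothetical stand-in for a pricier European rate.

```python
# Rough yearly cost of idle power draw. The wattages come from the post;
# both electricity rates are assumptions chosen for illustration.
HOURS_PER_YEAR = 24 * 365


def annual_cost(watts: float, rate_per_kwh: float) -> float:
    """Cost of drawing `watts` around the clock for one year."""
    return watts / 1000 * HOURS_PER_YEAR * rate_per_kwh


one_big_node_watts = 9       # one overpowered mini PC at idle
four_n100_watts = 4 * 7      # four Intel N100 boxes at idle
extra_watts = four_n100_watts - one_big_node_watts

CHEAP_RATE = 0.12       # assumed $/kWh, not a quoted utility rate
EXPENSIVE_RATE = 0.35   # assumed $/kWh, hypothetical European rate

cheap_per_year = annual_cost(extra_watts, CHEAP_RATE)
expensive_per_year = annual_cost(extra_watts, EXPENSIVE_RATE)

print(f"Extra idle draw: {extra_watts} W")
print(f"At ${CHEAP_RATE:.2f}/kWh: ${cheap_per_year:.2f} per year")
print(f"At ${EXPENSIVE_RATE:.2f}/kWh: ${expensive_per_year:.2f} per year, "
      f"${expensive_per_year * 4:.2f} over 4 years")
```

Swap in the rate from your own electric bill and the idle numbers from your own power meter before you trust the result.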

On the other hand, you may have some vital services that need to run alongside problematic ones. Maybe your home router runs in a virtual machine, and every once in a while your Jellyfin or Plex container goofs up your iGPU and requires a reboot of the host. You probably don’t want your home network going down just because you had to fix a problem with Plex.

You have the option of moving Plex and some less vital services over to their own inexpensive Intel N100 mini PC while running the rest of your homelab on a mid-range or high-end mini PC. You have a lot of flexibility in how you split things up.

- Do All Mini PCs For Your Homelab Have The Same Bang For The Buck?

- Two Weeks Using The Jellyfin Streaming Media System

How is the performance?

I have been running Geekbench 5 on all my mini PCs and keeping track of the scores, but why Geekbench 5? I didn’t wind up buying Geekbench 6, because I am unhappy that Geekbench no longer includes an AES test. I have been extremely interested in improving my potential Tailscale encryption speeds, so this number has been a good indicator of whether or not a particular CPU would be a good upgrade for me.

It also helps that I have all sorts of historical Geekbench 5 scores in my notes. That makes it easier for me to compare older machines to my current hardware.

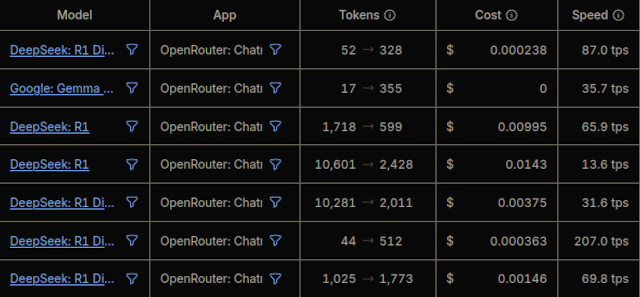

| Mini PC | Single Core | Multi Core |
|---|---|---|
| Trigkey Intel N100 DDR4 | 1,053 | 2,853 |
| Topton Intel N100 DDR5 | 1,002 | 2,786 |
| Minisforum UM350 Ryzen 3550H | 955 | 3,215 |
| Acemagician M1 Ryzen 6800H 1x16GB | 1,600 | 7,729 |
| Acemagician M1 Ryzen 6800H 2x16GB | 1,646 | 9,254 |

The multi-core score did improve by about as much as I would have expected, but my multi-core score is lower than many of the mini-PC scores in Geekbench’s database. Other people are near or above 10,000 points.
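If you want to see the ratios behind those scores, this is a minimal sketch that works them out from my own Geekbench 5 numbers. The dictionary and helper names are just labels I made up for the example, and nothing here comes from Geekbench's database.

```python
# Geekbench 5 (single-core, multi-core) scores from my own runs.
scores = {
    "Trigkey Intel N100 DDR4": (1053, 2853),
    "Topton Intel N100 DDR5": (1002, 2786),
    "Minisforum UM350 Ryzen 3550H": (955, 3215),
    "Acemagician M1 Ryzen 6800H 1x16GB": (1600, 7729),
    "Acemagician M1 Ryzen 6800H 2x16GB": (1646, 9254),
}


def multi_core(name: str) -> int:
    """Multi-core score for one machine in the table."""
    return scores[name][1]


single_channel = multi_core("Acemagician M1 Ryzen 6800H 1x16GB")
dual_channel = multi_core("Acemagician M1 Ryzen 6800H 2x16GB")

# Multi-core gain from adding the second stick of RAM, in percent.
dual_channel_uplift = (dual_channel / single_channel - 1) * 100

# How my dual-channel 6800H compares to my Trigkey N100 in this one test.
vs_n100 = dual_channel / multi_core("Trigkey Intel N100 DDR4")

print(f"Dual-channel uplift: {dual_channel_uplift:.1f}%")
print(f"6800H vs. N100 multi-core: {vs_n100:.2f}x")
```

Keep in mind that this only covers my own machines in one benchmark. The higher scores that other people report for the same CPU would push that last ratio up.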

We should probably also talk about Jellyfin transcoding performance. Unlike the GCN5 iGPU in processors like the Ryzen 3550H or 5800U, the Ryzen 6800H’s RDNA2 iGPU supports hardware tone mapping. This is important today because most content that you download on the high seas will be 10-bit HDR video. If you need to play back on a non-HDR display, then you will want Plex or Jellyfin to map the content down to 8-bit for you. The Intel N100 and Ryzen 6800H can both do that for you.

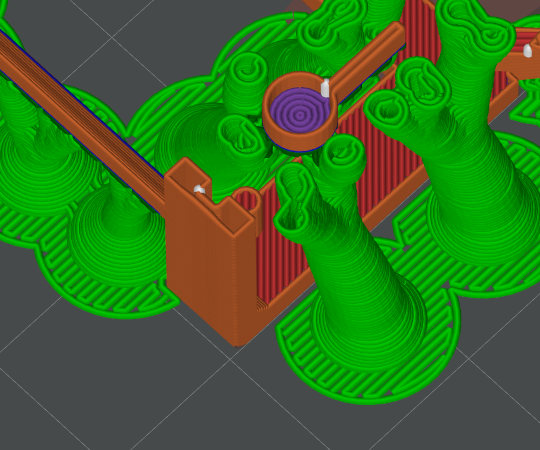

I played my usual 4K 10-bit test movie, and my Ryzen 6800H was transcoding at between 42 and 56 frames per second. It was also burning 50 watts of electricity as measured at the power outlet while transcoding.

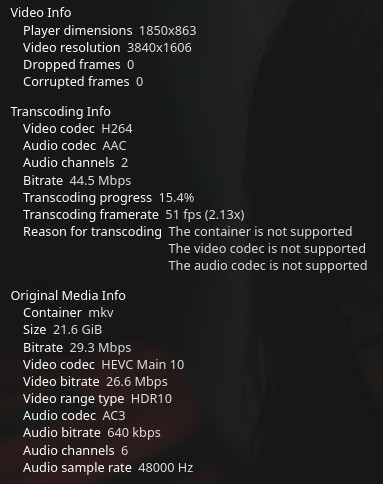

I am not sure why this is the only Jellyfin encoding screenshot I have saved at 51 FPS!

The Intel N100 can manage 75 frames per second while transcoding the exact same movie. I don’t believe I measured power consumption while transcoding on the N100, but both of my N100 mini PCs top out at around 20 watts maximum. The Intel N100 is faster and more efficient at this task than the Ryzen 6800H.
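Here is a back-of-the-envelope sketch of what those frame rates mean. It leans on two assumptions that I did not measure: that the test movie plays at 24 frames per second, and that the N100 draws its roughly 20-watt maximum while transcoding. Treat the output as a ballpark, not a benchmark.

```python
# Transcoding speeds are from my Jellyfin tests. The playback frame rate
# and the N100's wattage under load are assumptions, not measurements.
SOURCE_FPS = 24            # assumed frame rate of the test movie
N100_ASSUMED_WATTS = 20    # N100's observed maximum, assumed while transcoding
RYZEN_WATTS = 50           # measured at the outlet while transcoding


def realtime_streams(transcode_fps: float, source_fps: float = SOURCE_FPS) -> float:
    """How many real-time streams a single-transcode speed is worth."""
    return transcode_fps / source_fps


ryzen_low = realtime_streams(42)
ryzen_high = realtime_streams(56)
n100_streams = realtime_streams(75)

# Frames per second for each watt, using the 51 FPS screenshot for the Ryzen.
ryzen_fps_per_watt = 51 / RYZEN_WATTS
n100_fps_per_watt = 75 / N100_ASSUMED_WATTS

print(f"Ryzen 6800H: {ryzen_low:.2f} to {ryzen_high:.2f} real-time streams")
print(f"Intel N100:  {n100_streams:.2f} real-time streams")
print(f"Ryzen 6800H: {ryzen_fps_per_watt:.2f} FPS per watt")
print(f"Intel N100:  {n100_fps_per_watt:.2f} FPS per watt (assumed wattage)")
```

These stream counts are based on single-video speeds, so they are on the conservative side.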

That isn’t the actual performance limit for either machine. When Jellyfin is transcoding two or more videos, the total throughput of all the videos will exceed the single-video maximum.

Wrapping Up

So, as you can see, diving into the world of mini PCs for your homelab is a fascinating exercise in balancing power, efficiency, and price. The Acemagician M1 with its Ryzen 6800H offers a significant step up in processing power compared to the Intel N100. While it’s not perfect – the fiddly build and single RAM stick were minor inconveniences – the performance gains are undeniable.

Ultimately, the “best” mini PC truly depends on your specific needs and priorities. Do you prioritize power efficiency and low cost? The N100 is a fantastic choice, especially if your mini PC will spend many hours each day transcoding video! Need a bit more punch for demanding services or memory-heavy workloads? A Ryzen 6600U or 6800H might be the sweet spot.

We’ve only scratched the surface here, and the mini PC landscape is constantly evolving. If you’re building your own homelab, debating upgrades, or just enjoy geeking out over hardware, we’d love to have you join our community!

Come hang out with us on the Butter, What?! Discord server! We share deals, troubleshoot issues, discuss projects, and generally discuss all things homelab and DIY NAS. Share your setups, ask questions, and learn from others – we’re a friendly bunch who love to help. We’re always swapping tips and tricks on finding the best hardware, and specifically discussing optimal configurations for different homelab services, so you’ll be among the first to know about the next great mini PC deal!