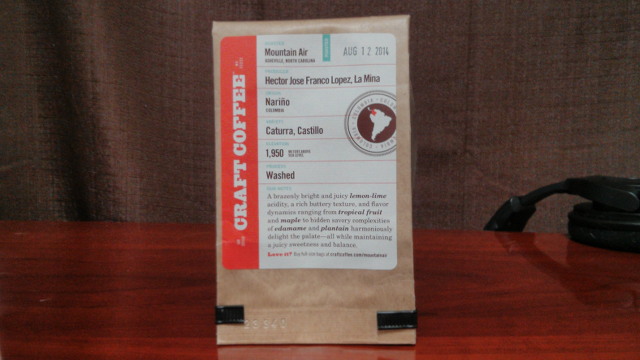

Early last year, I decided that I should replace Dropbox with a cloud-storage solution that I could host on my own servers. I was hoping to find something scalable enough to store ALL the files in my home directory, and not just the tiny percentage of my data that I was synchronizing with Dropbox. I didn’t expect to make it all the way to that goal, but I was hoping to come close.

I wrote a few paragraphs about each software package as I was testing them. That old cloud-storage comparison post is almost 18 months old and is starting to feel more than a little outdated. All of the software in that post has improved tremendously, and some nice-looking new projects have popped up during the last year.

All of these various cloud-storage solutions solve a similar problem, but they tend to attack that problem from different directions, and their emphasis is on different parts of the experience. Pydio and ownCloud seem to focus primarily on the web interface, while Syncthing and BitTorrent Sync are built just for synchronizing files and nothing else.

I am going to do things just a little differently this year. I am going to do my best to summarize what I know about each of these projects, and I am going to direct you towards the best blog posts about each of them.

This seemed like it would be a piece of cake, but I was very mistaken. Most of the posts and reviews I find are just rehashes of the official installation documentation. That is not what I am looking for at all. I’m looking for opinions and experiences, both good and bad. If you know of any better blog posts that I could reference for any of these software packages, I would love to see them!

Why did these software packages make the list?

I don’t want to trust my data to anything that isn’t Open Source, and I don’t think you should, either. That is my first requirement, but I did bend the rules just a bit. BitTorrent Sync is not Open Source, but it is popular, scalable, and seems to do its job quite well. It probably wouldn’t have made my list if I weren’t using it for a few small tasks.

I also had to know that the software exists. I brought back everything I tried out last year, and I added a few new contenders that were mentioned in the comments on last year’s comparison. If I missed anything interesting, please let me know!

What other options do I have?

There are plenty of third-party services much like Dropbox that are available to host your data. Most of them will let you get started for free, and they will let you pay to upgrade to larger storage plans. Dropbox encrypts your data on the server, so they definitely have the ability to access your data. Companies like SpiderOak and Wuala claim to encrypt your data before it leaves your computer, but there is no way to verify that they are doing so correctly.

Charl Botha has an excellent write-up over at vxlabs.com about his experiences with Dropbox, Wuala, and SpiderOak. He also talks about some of the self-hosted cloud-storage programs that I mention in this post, and he shares his experiences with using an off-the-shelf Synology NAS box combined with their CloudStation software.

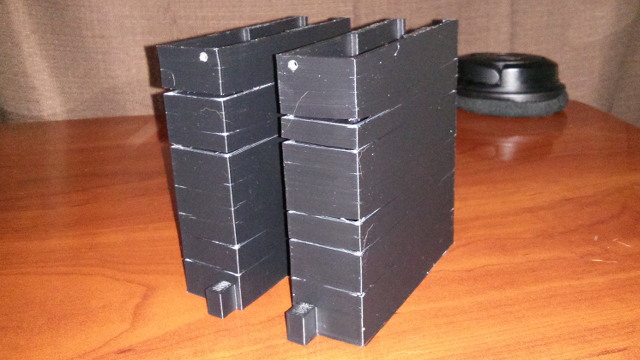

If you want to store your files at home, but you don’t want to pay for an overpriced and underpowered Synology NAS, then you might want to check out my friend Brian’s blog. He has excellent blog posts explaining what hardware you need to buy to build your own FreeNAS servers that fit both small and large budgets. There’s plenty of room for lots of hard disks in either of his builds!

Seafile

I am more than a little biased towards Seafile. I’ve been using Seafile for more than a year, and it has served me very well in that time. I am currently synchronizing roughly 12 GB made up of 75,000 files using my Seafile server. The 12 GB part isn’t very impressive, but when I started using Seafile, tens of thousands of files were enough to bring most of Seafile’s competition to its knees.
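If you want to know how your own data compares before you pick a tool, a few lines of Python can count the files and add up their sizes. This is only a minimal sketch: `tree_stats` is a name made up for this example, and the script has nothing to do with Seafile itself.

```python
import os
import sys


def tree_stats(root):
    """Return (file_count, total_bytes) for the non-directory entries under root."""
    file_count = 0
    total_bytes = 0
    for dirpath, dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat does not follow symlinks, so a link is counted
                # by its own size rather than by its target's size.
                total_bytes += os.lstat(path).st_size
            except OSError:
                # Skip entries that vanish or cannot be read mid-walk.
                continue
            file_count += 1
    return file_count, total_bytes


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    count, size = tree_stats(root)
    print(f"{count} files, {size / 1e9:.2f} GB under {root}")
```

Point it at the directory you plan to synchronize. The file count is usually the number to watch, since that is where sync clients tend to struggle first.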

Seafile offers client-side encryption of your data, which I believe is very important. Encryption is very difficult to implement correctly, and there are some worries regarding Seafile’s encryption implementation. I am certainly not an expert, but I feel better about using Seafile’s weaker client-side encryption than I do about storing files with Dropbox.

Features:

- Client-side encryption

- Central server with file-revision history

- Web interface with file management and link sharing

- Dropbox-style synchronization (tens of thousands of files)

Limitations:

- Won’t sync files with colons in their name (NTFS-supported characters only)

- Encryption may be weak
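The file-name limitation is easy to check for ahead of time. The sketch below walks a directory and reports names containing characters that Windows does not allow on NTFS. The exact set of characters that Seafile rejects is an assumption here, and the function names are invented for this example.

```python
import os

# Characters that Windows does not allow in file names on NTFS.
# Assumption: Seafile rejects roughly this set; its own rules may differ.
FORBIDDEN = set('<>:"/\\|?*')


def has_forbidden_chars(name):
    """True if name contains a forbidden character or an ASCII control code."""
    return any(ch in FORBIDDEN or ord(ch) < 32 for ch in name)


def find_problem_names(root):
    """Yield paths under root whose final component has a forbidden character."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            if has_forbidden_chars(name):
                yield os.path.join(dirpath, name)
```

Calling `list(find_problem_names("/path/to/library"))` returns every file or directory you would need to rename before the whole tree can sync.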

For more information:

ownCloud

ownCloud’s focus is on their web interface. As far as I can tell, ownCloud is leaps and bounds ahead of just about everyone else in this regard. They have tons of plugins available, like music players, photo galleries, and video players. If your primary interest is having an excellent web interface, then you should definitely take a look at ownCloud.

ownCloud has a Dropbox-style synchronization client. I haven’t tested it in over a year, but at that time it didn’t perform nearly well enough for my needs. The uploading of my data slowed to a crawl after just a couple thousand files.

This is something the ownCloud team have worked on, and things should be working better now. I’ve been told that using MariaDB instead of SQLite will make ownCloud scalable beyond just a few thousand files.

Features:

- Central server with file-revision history

- Web interface with file management, link sharing, and dozens of available plugins

- Collaborative editing (see the Linux Luddites podcast for opinions)

- Dropbox-style synchronization, may require MariaDB to perform well

Limitations:

- Reports of files silently disappearing during sync

For more information:

SparkleShare

SparkleShare was high on my list of potential cloud-storage candidates last year. It uses Git as a storage backend, is supposed to be able to use Git’s merge capabilities to resolve conflicts in text documents, and it can encrypt your data on the client side. This seemed like a winning combination to me.

Things didn’t work out that well for me, though. SparkleShare loses Git’s advanced merging when you turn on client-side encryption, so that advantage was going to be lost on me.

Also, SparkleShare isn’t able to sync directories that contain Git repositories. I have plenty of clones of various Git repositories sprinkled about in my home directory, so this was a deal breaker for me.

It also looks as though SparkleShare isn’t able to handle large files.

Features:

- Central server with file-revision history

- Web interface with file management and link sharing

- Dropbox-style synchronization (large files will be problematic)

- Data stored in Git repo (merging of text files is possible)

Limitations:

- Won’t synchronize Git repositories

For more information:

Pydio (formerly AjaXplorer)

Pydio seems to be in pretty direct competition with ownCloud. Both have advanced web interfaces with a wide range of available plugins, and both have cross-platform Dropbox-style synchronization clients. I have not personally tested Pydio, but they claim their desktop sync client can handle 20k to 30k files.

Pydio’s desktop sync client is still in beta, though. In my opinion, this is one of the most important features that a cloud-storage platform can have, and I’m not sure that I’d want to trust my data to a beta release.

Features:

- Central server with file-revision history

- Web interface with file management and link sharing

- Dropbox-style synchronization with Pydio Sync (in beta)

Limitations:

For more information:

git-annex with git-annex assistant

I first learned about git-annex assistant when it was mentioned in a comment on last year’s cloud-storage comparison post. If I were going to use something other than Seafile for my cloud-storage needs, I would most likely be using git-annex assistant. I have not tested git-annex assistant, though, and I don’t know if it is scalable enough for my needs.

git-annex assistant supports client-side encryption, and the Kickstarter project page says that it will use GNU Privacy Guard to handle the encryption duties. I have a very high level of trust in GNU Privacy Guard, so this would make me feel very safe. It also looks like git-annex assistant stores your remote data in a simple Git repository. That means the only network-accessible service required on the server is your SSH daemon.

Much like SparkleShare, git-annex assistant stores your data in a Git repository, but git-annex assistant is able to efficiently store large files—that’s a big plus.

Dropbox-style synchronization is the primary goal of git-annex assistant. It sounds like it does this job quite well, but it is lacking a web interface. This puts it on the opposite end of the spectrum compared to ownCloud or Pydio.

There is one big downside to git-annex assistant for the average user. git-annex assistant makes use of git-annex, and git-annex does not have support for Microsoft Windows.

I was disappointed to learn that git-annex will not synchronize the .git directory in a Git repository. This is a very important requirement for my use case. I understand the potential problems I might see, and they aren’t likely to happen to me.

The creator of git-annex, Joey Hess, had this to say about the issue:

> Why not just use git the way it’s designed: Make a git repository on one computer. Clone to another computer. Commit changes on either whenever you like, and pull/push whenever you like. No need for any dropbox to help git sync, it can do so perfectly well on its own.

This plan is very rigid and inconvenient for me. It assumes that I am always finished with my work and ready to commit every time I stand up, and this is often not the case. I enjoy knowing that my partially completed work magically appears on my laptop. It is nice knowing that my computer at home will pull down all my work within minutes of arriving home from a long trip.

I used to rely on my version control system for this. I’ve been doing that since long before Git existed. I didn’t always remember to stop back at my desk before leaving the building. Dropbox taught us that we shouldn’t have to remember these things. With Seafile, I don’t have to.

Features:

- Centralized server to allow synchronization behind firewalls

- Peer-to-peer synchronization on local network

- Uses GnuPG for encryption and can use your existing public keys

- Dropbox-style synchronization

Limitations:

- No web interface on the server

- Won’t synchronize Git repositories

For more information:

BitTorrent Sync

I like BitTorrent Sync. It is simple, fast, and scalable. It does not store your data on a separate, centralized server. It simply keeps your data synchronized on two or more devices.

It is very, very easy to share files with anyone else that is using BitTorrent Sync. Every directory that you synchronize with BitTorrent Sync has a unique identifier. If you want to share a directory with any other BitTorrent Sync user, all you have to do is send them the correct identifier. All they have to do is paste it into their BitTorrent Sync client, and they will receive a copy of the directory.

Unfortunately, BitTorrent Sync is the only closed-source application on my list. It is only included because I have been using it to sync photos and backup directories on my Android devices. I’m hoping to replace BitTorrent Sync with Syncthing.

Features:

- No central server but can use third-party server to locate peers

- Very easy to set up

- Solid, fast synchronization without extra bells and whistles

Limitations:

For more information:

Syncthing

Syncthing is the Open Source competition for BitTorrent Sync. They both have very similar functionality and goals, but BitTorrent Sync is still a much more mature project. Syncthing has yet to reach its 1.0 release.

I’ve been using Syncthing for a couple of weeks now. I’m using it to keep my blog’s Markdown files synchronized to an Ubuntu environment on my Android tablet. It has been working out just fine so far, and I’d like to use it in place of BitTorrent Sync.

It does require a bit more effort to set up a directory for synchronization than it does with BitTorrent Sync, but I hope they’ll be able to streamline this in the future. This is definitely a project to keep an eye on.

Features:

- No central server but can use third-party server to locate peers

- Easy to set up

- Synchronization without extra bells and whistles

- Like BitTorrent Sync, but Open Source

Limitations:

For more information:

Syncany

I don’t really think Syncany is mature enough for a place on this list, but I’m going to go ahead and add it to the list anyway. The developers still recommend against trusting your important data to Syncany, and it is still missing some very important features, but I very much like some of the design choices that the Syncany team has made.

Syncany doesn’t require any special server-side software, and it supports many different storage backends. Syncany can store your data using Amazon S3, Dropbox, or even a simple SFTP server. It also lets you mix and match various storage backends for both redundancy and extra capacity. You can safely store your data just about anywhere since Syncany supports client-side encryption.

Syncany recently added automatic, Dropbox-style synchronization, but it is new, and it isn’t configured out of the box. The documentation says that setting up automatic synchronization isn’t as easy as it should be, and they are working on improving this situation.

Again, Syncany says that their software is still in alpha, and that you should not trust it with your important files yet. I look forward to the day when I can try using Syncany to sync my data!

Features:

- Client-side encryption

- No special server software required

- Supports a large variety of remote storage backends

- Can sync to redundant storage services

Limitations:

- Not yet safe for production use

For more information:

Conclusion

There’s no clear leader in the world of self-hosted cloud-storage. If you need to sync several tens of thousands of files like I do, and you want to host that data in a central location, then Seafile is really hard to beat. git-annex assistant might be an even more secure competitor for Seafile—assuming it can scale up to a comparable number of files.

If you don’t want or need that centralized server, BitTorrent Sync works very well, and it should have no trouble scaling up to sync the same volumes of data as Seafile. I bet it won’t be too long before Syncthing is out of beta and ready to meet similar challenges. Just keep in mind that with a completely peer-to-peer solution, your data won’t be synchronized unless at least two devices are up and running simultaneously.
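That last caveat is easier to see with numbers. Here is a small sketch that treats each device's uptime as a window of hours in the day and checks whether two windows overlap. The devices and their schedules are hypothetical, and real peers come and go far less neatly than this.

```python
def overlap(a, b):
    """Return the (start, end) window shared by two intervals, or None."""
    start = max(a[0], b[0])
    end = min(a[1], b[1])
    return (start, end) if start < end else None


# Hypothetical uptime windows, as hours of the day.
desktop = (8, 18)   # on during the workday
laptop = (19, 23)   # on only in the evening
phone = (0, 24)     # always on

print(overlap(desktop, laptop))  # None: these two are never up together
print(overlap(desktop, phone))   # (8, 18)
print(overlap(laptop, phone))    # (19, 23)
```

In this made-up schedule the desktop and laptop can never sync directly, but both overlap with the always-on phone, so changes could still travel between them by way of that third device.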

If you’re more interested in working with your data and collaborating with other people within a web interface, then ownCloud or Pydio might make more sense for you. I haven’t heard many opinions on Pydio, either good or bad, but you might want to hear what the guys over at the Linux Luddites podcast have to say about ownCloud in their 24th episode. The ownCloud discussion starts at about the 96-minute mark.

Are you hosting your own “cloud-storage”? How is it working out for you? What software are you using? Leave a comment and let everyone know what you think!