My next batch of beans from Craft Coffee should be arriving soon, so I thought this would be a good time to post an update on how things are going with my new espresso machine and grinder. Things were off to a pretty bumpy start, but I’ve learned a lot, and I’m making some pretty tasty lattes.

Last month’s Craft Coffee assortment

I ruined a lot of shots with last month’s Craft Coffee selection. If I managed to pull one good shot with each of the three small pouches of beans, then I’d say I was doing well. I think it is more likely that I got one good latte out of the entire shipment, and that’s a bit disappointing.

Up until last month, every coffee I’d received from Craft Coffee had been both interesting and delicious. I modified my subscription, and I will be receiving a single 12-oz bag of coffee this month. I’m hoping this will keep me from screwing things up!

I highly recommend Craft Coffee. They’ve sent me a lot of very delicious coffee for a very reasonable price. If you use my referral code, pat1245, you will save 15% on your order, and I will receive a free month of coffee. That seems like a pretty good deal for both of us.

Central Market in-house roasted Mexico Custapec

When the Craft Coffee quickly ran out, I decided it would be best to stop by the Central Market and get a large quantity of one or two coffees of reasonable quality to experiment with. Chris picked out a Central Market branded coffee. The beans were from Mexico, and the sign on the bin claimed it was low acidity. We bought about a half pound of it.

I did a much better job with this coffee. Things didn’t go well at first, but after about five or six double shots, things were much improved, and I was getting pretty consistent results. They weren’t the best results, but they were definitely drinkable.

Addison Coffee Roasters – Premium Espresso Blend

I definitely preferred lighter coffees with my old espresso machine, but I’ve read that it is easier to tune in a darker roast on a real espresso machine. I’ve used several pounds of Addison Coffee Roasters’ Premium Espresso Blend in the past, so I knew what to expect. It was never my favorite, but I thought it would be a good experiment. We bought about 12 oz.

This was a very oily coffee, and it was a bit harder to dose into the portafilter. The other coffees would level out with a bit of a shake, but this coffee stuck together too much for that. It took three or four shots to get the grind about right, but things were pretty consistent after that.

I tried changing my “temperature surfing” a little to improve the flavor. I went from my usual 40-second wait up to a full minute. This seemed to help a bit, but not enough. I also decided to try “start dumping” the coffee. This made a HUGE difference in the flavor. This is probably cheating, but it definitely works!

I should also note here that no matter what I did, these pucks were always wet. They had a tiny pool of water on top, and they would drop right out of the portafilter. I can’t say that this had any impact on the taste, because the good and bad shots were all equally wet.

I think I prefer a ristretto

The Internet seems to have mixed feelings about what precisely a ristretto actually is. It is definitely a smaller volume of coffee (1 to 1.5 ounces), but how you arrive there seems to be up for debate. Some say it should still take 25 to 30 seconds to pull a ristretto, while others say you just have to stop the machine sooner. I think I’ve landed somewhere in the middle.

I’ve not weighed anything, but I’ve been happier stopping my machine when my 2-ounce cup is somewhere between ½ and ¾ full. It takes somewhere between 20 and 25 seconds. This seems to be right around where the best lattes have happened. In fact, I think the best ones come in closer to one ounce at 25 seconds.

At one point, I thought maybe my coffee-to-milk ratio was just too low, so I pulled out my big 16-oz mug. The extra four ounces of milk mixed with a full-sized, 2-oz double shot was not an improvement.

Java Pura – Ethiopian Yirgacheffe

We ran out of coffee over the weekend, so we made another trip to the Central Market. I found a shelf full of 12-oz bags of coffee beans from Java Pura for $10 each. I’d never heard of them before, but each bag was stamped with a roasting date, and that seemed promising. I found a bag of Ethiopian Yirgacheffe that was roasted on July 17, the same day my Rancilio Silvia arrived.

I knew this coffee would be good as soon as I opened the bag. It smelled delicious, and the bag was full of tiny, light brown Yirgacheffe beans.

It took quite a few tries to get the grind right. I had to move up almost three full clicks on the Baratza Preciso before I could get much water to pass through the puck. Once I did, though, it was delicious. This coffee from Java Pura is definitely on par with the brands that Craft Coffee sends me. I most definitely won’t have to “start dump” with this coffee!

I think I made one of the best lattes of my life using this coffee. That was yesterday, and I haven’t been able to quite replicate that success today. I have plenty of beans left in the hopper, and I’m confident that I’ll do it again.

- Ethiopian Yirgacheffe at Java Pura

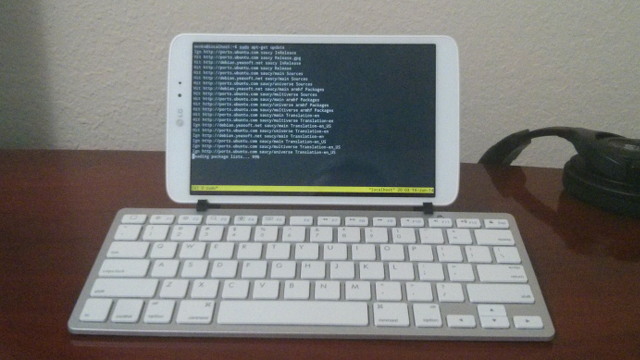

The Baratza Preciso grinder was an excellent choice

I am very pleased with the Baratza Preciso. I may be a beginner, but the microadjustment lever seems like it will be indispensable. The difference between choking the machine and a pretty good ristretto is only two or three clicks of the microadjustment lever. I can’t imagine trying to tune in a shot with only the macro settings.

I know there are other grinders that don’t have fixed settings, and I don’t think I’d like that. Fixed settings let me make notes for myself. I know that I was using a setting of 3H for the Premium Espresso Blend, so if I ever buy more, I will have a good starting point. I’m using a setting somewhere around 5C with the lighter roast from Java Pura.

- Baratza Preciso Coffee Grinder at Amazon

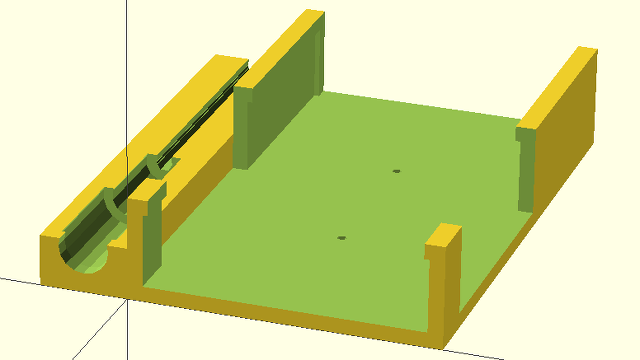

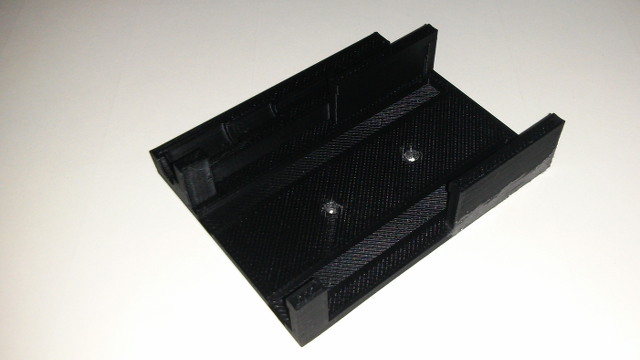

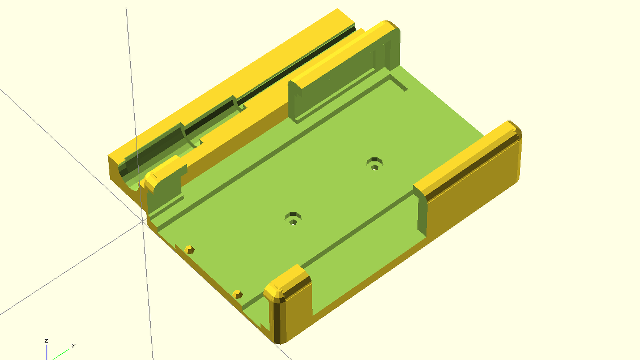

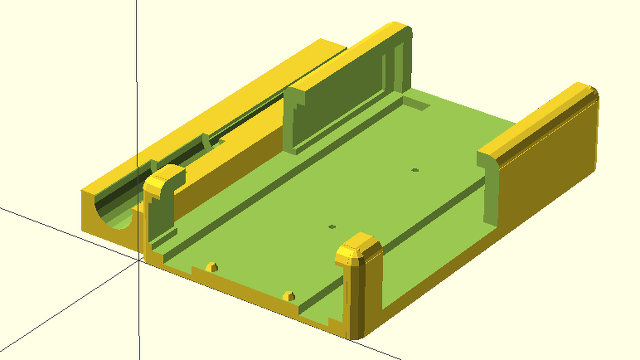

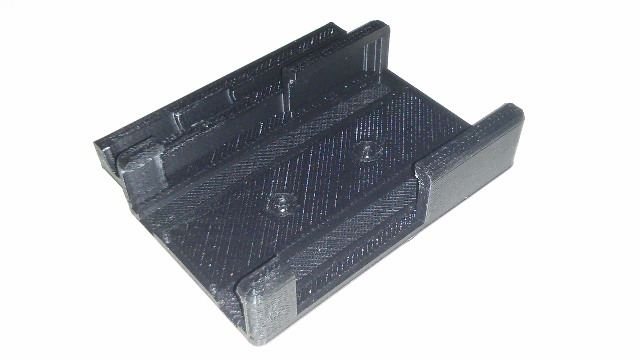

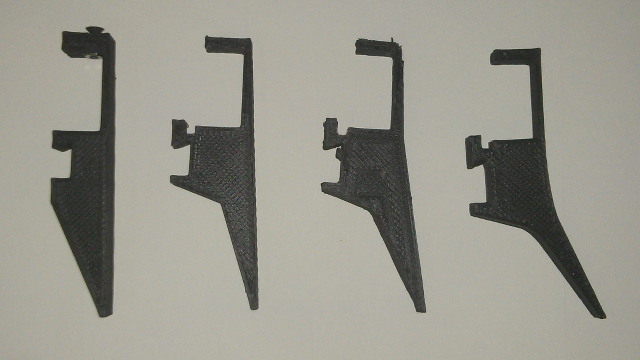

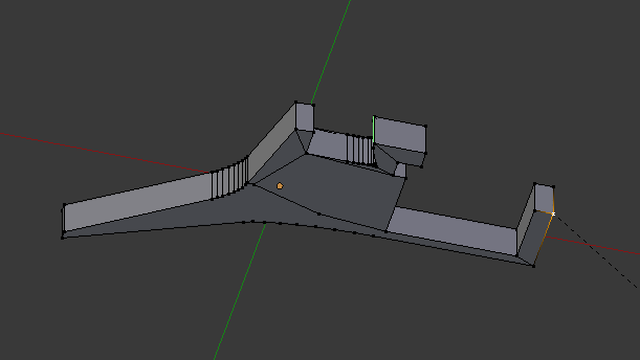

3D printing a better tamper

The tamper that comes with the Rancilio Silvia has a convex bottom, and it isn’t big enough to fit the basket. This leads to inconsistent tamping, so I knew I’d want to upgrade the tamper right away. Instead of buying one, I decided to print one, and I’m glad I did.

The first tamper that I printed was just a hair over 58 mm in diameter, which is the size everyone recommends for the Silvia. This seemed a little too big for the Silvia’s tapered double-shot basket. It would get hung up on the sides of the basket unless I dosed pretty heavily, heavily enough that the puck would come into contact with the screen.

This was no good. If I had bought a tamper that size, I’d have had to order another one. Instead, I printed another tamper the same day. This one measures 57.3 mm in diameter, and it has been working out pretty well. This tamper can push the coffee down just below the groove in the filter basket.

I am probably going to print one more that is just under 57 mm, or I might try to add a slight bevel to the edge. I haven’t decided which way to go.

- Resizeable Espresso Tamper at thingiverse.com