The motivation

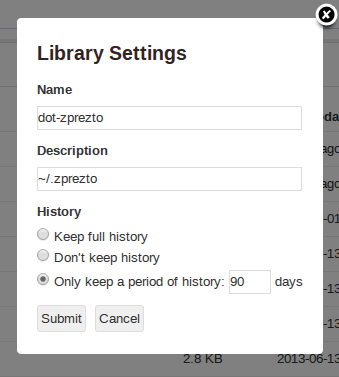

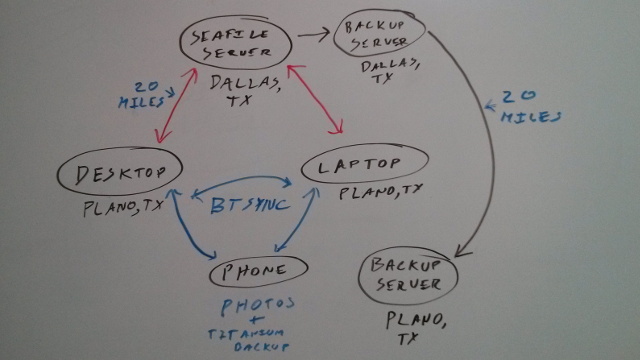

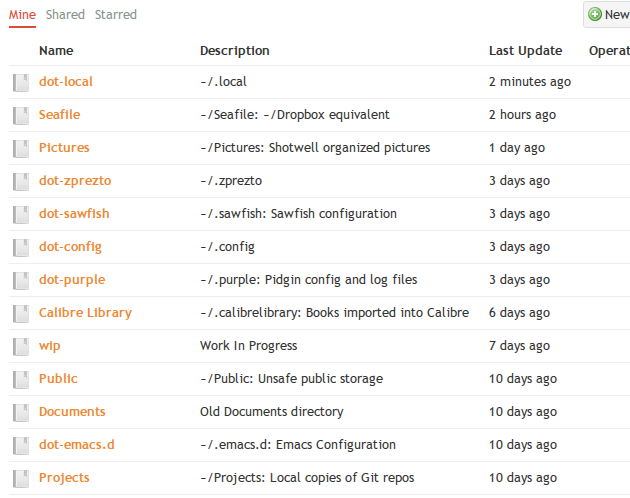

I’ve been thinking about upgrading all year. I almost did back in January, and I wish I had decided to do it then. Memory prices have just about doubled since then. I’ve been using a laptop as my primary workstation since 2006, and I wasn’t sure if I wanted to switch back to a desktop. Since I’ve had some recent success using Seafile to synchronize a huge chunk of my home directory, I figured I could get away with using two different computers again.

I’ve had my current laptop for over three years now, so I figured it was time for an upgrade. It is fast enough for almost everything I do, but I’ve been playing more games lately and the video card just isn’t fast enough. I have the graphics settings turned way down in most games.

Some of the games I play, like Team Fortress 2, run at perfectly acceptable frame rates. I’m not so lucky in other games. Upgrading to a desktop gives me a guaranteed video card upgrade for free.

The goal

History and Moore’s law made me think that I’d be able to build a reasonably priced Linux desktop that was at least three or four times faster than my laptop. I was very mistaken. Moore’s law doesn’t seem to be translating directly into performance like it used to.

Doubling the memory and processor speed of my Core i7-720QM laptop turned out to be pretty easy to achieve, and I didn’t have to break the bank to do it.

Parts List

Optional:

Total cost: $715

A slightly faster equivalent to the video card I used would be an Nvidia 650. That would bring the total cost up to about $825.

All the devices on this motherboard are working just fine for me on Ubuntu 13.10. I don’t have any USB 3 devices, though, so I am unable to confirm whether they are working correctly or not. I can confirm that the USB 3 ports on the rear panel are working just fine with USB 2 devices.

The processor and motherboard

I’ve had my sights on the AMD FX-8350 for quite a while now. To get a processor from Intel with comparable performance you’ll end up paying around $100 more, and you’ll also have to pay more for the motherboard. I could have spent $150 to $200 more on Intel parts for about a 15% performance boost, but that didn’t seem like a good value.

Should the FX-8350 really be referred to as an eight-core processor? Probably not. It sounds to me like it has eight nearly complete integer units and four complete floating point units. I’d like to do a bit of testing to see exactly how close to complete those eight integer units actually are, but for now, I am going to say that the FX-8350 is more like an eight-core processor than a four-core processor with hyper-threading, at least as far as integer operations are concerned.

When my friend Brian built his FX-8350 machine, he ended up using a motherboard with the 990 chipset. At that time, the motherboards with the 970 chipset weren’t shipping with BIOS support for the FX-8350. This isn’t a problem anymore, so I was able to choose a less expensive motherboard.

I opted for the MSI 970A-G43. I chose this motherboard because it was one of the least expensive 970-based boards from a manufacturer I trusted. I’m much more impressed than I thought I was going to be. I knew before placing my order that it had six SATA 3 ports and room for 32 GB of RAM. When I opened the box, I was surprised to see solid capacitors on the board. I’ve never actually had a capacitor failure, but it was still nice to see.

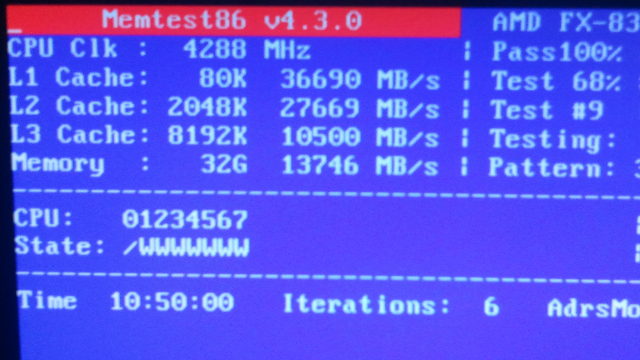

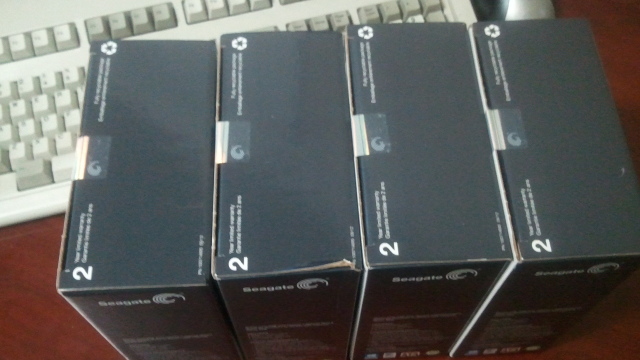

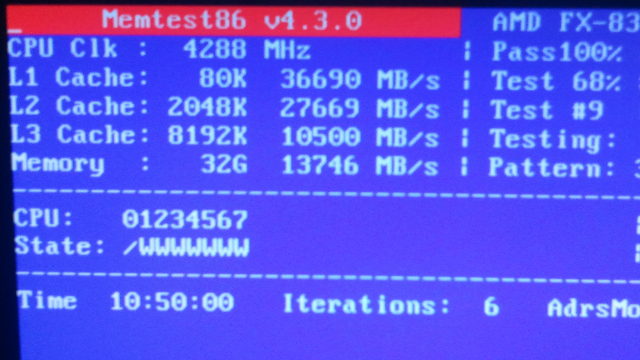

32 GB of memory

I definitely went overboard on memory. My laptop has 16 GB of memory, and almost 10 GB of that is usually being used as disk cache. I could function relatively comfortably most days with only 8 GB. It doesn’t matter, though. Memory is still cheap enough that it made sense to me to max it out, even though it is almost twice as expensive as it was late last year.

The NZXT case

This NZXT Source 210 is the second case from NZXT that I have seen. They are both quiet and well made. They lack some features, though. I usually prefer cases with easier to access, 90-degree rotated 3.5” drive bays, but I’m willing to live without them at this price point.

The power supply was another pleasant surprise. The spare video card I have has a pair of PCIe power connectors that need to be populated, so I wanted to find a power supply that could meet that requirement. The Topower ZU-650W just happened to be on sale while I was placing my order, and I am lucky that it was.

All of its cables are wrapped in sleeves, so it is easier to manage that potential rat’s nest of wires. I was also surprised to see that it came with five Velcro cable ties. The Topower has one feature that really surprised me: a “turbo fan switch.” I haven’t had any sort of “turbo” button on any of my computers in 20 years!

The solid-state drive

I didn’t have to buy the 128 GB Crucial M4 SSD. I simply moved it from my laptop into the new machine, and it booted right up. I included the price in the parts list to help paint a more complete picture of the build.

The video card

Late last year, a friend of mine built himself a new computer very similar to this one. He donated his old video card to me for use in my arcade cabinet. I couldn’t use it in the arcade cabinet because it requires two PCIe power connectors, and the power supply in the arcade table only has one.

This card is an NVidia GTX 460. With its 336 CUDA cores, this card should be around ten times faster than the mobile NVidia card in my laptop. This should be fast enough for the foreseeable future. It is doing a fine job running all the games I have. That isn’t too surprising, since the games I play are all pretty old.

I’m getting 100 to 150 frames per second in Team Fortress 2 with some antialiasing enabled and all the rest of the settings maxed out. Killing Floor still drops down below 60 frames per second when things get busy; I’m pretty sure the Linux port is just buggy. Maps like “Abusement Park” that were nearly unplayable on my laptop are running just fine now, though. I think this video card will keep me happy for quite a while.

If I did have to buy a video card today, I would choose the Nvidia 650 Ti. It is at a very nice point on the price/performance curve, and I’ve seen the Nvidia 650 Ti run Team Fortress 2 at 2560x1440 with all the settings maxed out. That is more than fast enough for my own purposes. You could save a little money with the Nvidia 650, but it has half as many cores as the 650 Ti, so the bang for the buck isn’t as good.

Benchmarks

I was very interested in seeing just how far I’ve come from my laptop. I tried to come up with a few benchmarks that would help gauge just how much of a real world performance increase I would see.

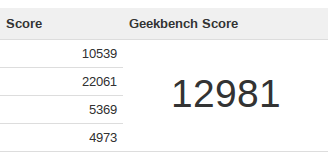

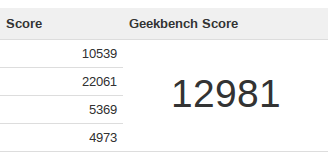

Geekbench – Laptop: 5,950, FX-8350: 12,981

Geekbench is a pretty good benchmark of CPU performance, and I relied on Geekbench’s results browser very heavily while I was shopping. I was really hoping to triple my laptop’s processor performance, but I quickly learned that the required hardware was pretty expensive.

My first-generation, quad-core i7 laptop manages a Geekbench score of 5,953. I didn’t want to bother upgrading unless I could double that score. I was a little worried, though, because the scores for the FX-8350 cover a range from 9,500 to 13,500. I was hoping to reach 13,000.

This wide range of scores for the FX-8350 was my primary motivation for this write-up. I have no idea what is wrong with those poor FX-8350 machines that are scoring under 10,000, and I was a bit worried that I would be down there with them. I’m happy to be able to report that the FX-8350, paired with the very reasonably priced MSI 970A-G43, performs very well.

I was happy to see that the parts I chose for my new Linux desktop were able to pull off a Geekbench score of 12,981. That’s close enough to 13,000 for me, and it is better than the majority of scores for FX-8350 machines. This is definitely good enough for now, but I tried out a small bump in the CPU multiplier, and that brings the score up to 13,649.

Linux kernel compile time – Laptop: 3:48, FX-8350: 1:40

I ran this test on both machines in RAM on a tmpfs file system. This seemed fairer. My drives are encrypted, and I didn’t want the laptop’s lack of encryption acceleration to be a factor.

I ran make defconfig && /usr/bin/time make -j 12 on a fresh copy of version 3.10 of the Linux kernel. I did some testing way back when I bought this laptop, and determined that 12 jobs was pretty close to ideal. I did make a run with -j 16 on the FX-8350, but I saw no improvement.

The laptop completed the task in 3:48, while the FX-8350 took only 1:40. That’s 2.28 times faster than the laptop and is in line with the Geekbench results.
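The ratio above is easy to double-check. Here is a minimal Python sketch of the arithmetic; the helper names `to_seconds` and `speedup` are made up for this illustration, and the Geekbench figures are the headline scores quoted earlier.

```python
def to_seconds(clock: str) -> int:
    """Convert a wall-clock time written as m:ss into seconds."""
    minutes, seconds = clock.split(":")
    return int(minutes) * 60 + int(seconds)


def speedup(baseline: str, improved: str) -> float:
    """How many times faster the improved run is than the baseline run."""
    return to_seconds(baseline) / to_seconds(improved)


# Kernel compile times from the test above.
kernel_ratio = speedup("3:48", "1:40")

# Headline Geekbench scores (higher is better, so the ratio is inverted).
geekbench_ratio = 12981 / 5950

print(f"kernel compile: {kernel_ratio:.2f}x faster")
print(f"Geekbench:      {geekbench_ratio:.2f}x higher")
```

Both ratios land a little above 2, which is why the two benchmarks can be described as being in line with each other.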

Note: You don’t need more jobs than you have cores when compiling from a RAM disk, since the compiler never has to spend any time waiting on the disk. A make -j 8 gives virtually identical results in this test.

1080p h.264 encoding with Handbrake – Laptop: 9.03 FPS, FX-8350: 26.71 FPS

I haven’t actually transcoded much video in the last two years, but I’ve had to wait on this kind of job often enough that this seemed like a useful test. To save some time, I encoded chapter 15 of the Blu-ray “Up” using my slightly modified “high profile” settings in Handbrake. Chapter 15 is roughly four and a half minutes long, so I didn’t have to spend too much time waiting for results.

My new FX-8350 workstation is almost three times faster than my laptop in this case. The laptop only managed 9 frames per second, while the FX-8350 pulls off 26.7 frames per second. That’s fast enough to transcode a 24-frame-per-second Blu-ray movie in real time, even using these “high profile” settings.
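To put those frame rates in terms of waiting time, here is a rough Python sketch. It assumes the chapter is exactly 4.5 minutes long and the source runs at exactly 24 frames per second; both are approximations of the figures above, and `encode_seconds` is just an illustrative helper.

```python
FILM_FPS = 24.0        # assumed source frame rate
CHAPTER_MINUTES = 4.5  # "roughly four and a half minutes"


def encode_seconds(duration_minutes: float, source_fps: float, encode_fps: float) -> float:
    """Estimate how long an encode takes at a steady encode frame rate."""
    total_frames = duration_minutes * 60 * source_fps
    return total_frames / encode_fps


results = {"laptop": 9.03, "FX-8350": 26.71}

for machine, fps in results.items():
    seconds = encode_seconds(CHAPTER_MINUTES, FILM_FPS, fps)
    # A factor above 1.0 means the encode keeps up with playback.
    realtime_factor = fps / FILM_FPS
    print(f"{machine}: about {seconds / 60:.1f} minutes, {realtime_factor:.2f}x real time")

print(f"speedup: {results['FX-8350'] / results['laptop']:.2f}x")
```

Under those assumptions the laptop needs roughly twelve minutes for the chapter, while the FX-8350 finishes in about four, slightly faster than the chapter plays back.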

openssl speed aes – Laptop: 60 MB/s, FX-8350: 210 MB/s

This test is a bit flawed. I was hoping to see how much advantage the AES acceleration instructions would give the FX-8350, but the openssl package that ships with Ubuntu doesn’t support them. The FX-8350 still manages to pull numbers that are over three times faster than my old laptop.

Laptop:

| type | 16 bytes | 64 bytes | 256 bytes | 1024 bytes | 8192 bytes |
|---|---|---|---|---|---|
| aes-128 cbc | 76225.62k | 82501.14k | 85311.70k | 85539.62k | 84862.54k |
| aes-192 cbc | 65285.86k | 69284.52k | 71094.54k | 70744.36k | 71078.61k |
| aes-256 cbc | 56213.70k | 59583.91k | 61326.30k | 60588.61k | 60864.64k |

FX-8350:

| type | 16 bytes | 64 bytes | 256 bytes | 1024 bytes | 8192 bytes |
|---|---|---|---|---|---|
| aes-128 cbc | 112766.76k | 119554.97k | 123908.45k | 280502.03k | 285362.86k |
| aes-192 cbc | 95632.70k | 100571.26k | 103522.55k | 238100.82k | 242368.13k |
| aes-256 cbc | 82652.83k | 86610.07k | 88366.59k | 207808.64k | 210090.55k |
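The headline numbers come from the 8192-byte column of the aes-256 rows. A small Python sketch like the one below (the `parse_row` helper is hypothetical, written only for this example) turns the two aes-256 rows into per-block-size ratios. It relies on the fact that `openssl speed` reports its figures in thousands of bytes per second, which is what the `k` suffix means.

```python
BLOCK_SIZES = [16, 64, 256, 1024, 8192]


def parse_row(line: str):
    """Split one result row into (cipher name, list of bytes per second)."""
    tokens = line.split()
    # Throughput columns carry a trailing "k" (thousands of bytes per second).
    rates = [float(t[:-1]) * 1000 for t in tokens if t.endswith("k")]
    name = " ".join(t for t in tokens if not t.endswith("k"))
    return name, rates


name, laptop = parse_row("aes-256 cbc 56213.70k 59583.91k 61326.30k 60588.61k 60864.64k")
_, desktop = parse_row("aes-256 cbc 82652.83k 86610.07k 88366.59k 207808.64k 210090.55k")

ratios = [d / l for d, l in zip(desktop, laptop)]

for size, ratio in zip(BLOCK_SIZES, ratios):
    print(f"{name}, {size:>4}-byte blocks: {ratio:.2f}x")
```

The ratios show that the "over three times faster" figure only applies to the 1024-byte and 8192-byte blocks. At the three smaller block sizes the FX-8350 is closer to one and a half times the laptop's speed.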

SSD and whole disk encryption performance

These are the bonnie++ results, including my previous benchmarks from an older article:

bonnie++ version 1.03c

| Machine | Size | Seq. output, per chr (K/sec) | %CP | Seq. output, block (K/sec) | %CP | Seq. output, rewrite (K/sec) | %CP | Seq. input, per chr (K/sec) | %CP | Seq. input, block (K/sec) | %CP | Random seeks (/sec) | %CP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Laptop, M4, no encryption | 16G | 954 | 98 | 183205 | 20 | 105877 | 9 | 4858 | 99 | 327088 | 16 | 4306 | 120 |
| Laptop, M4, aes | 16G | 604 | 94 | 152764 | 16 | 63475 | 6 | 4064 | 98 | 145506 | 6 | 2380 | 46 |
| FX-8350, M4, aes | 6G | 642 | 99 | 178581 | 28 | 82598 | 25 | 3124 | 99 | 300500 | 15 | 4234 | 104 |

The sequential block output has mostly caught back up with the Crucial M4’s unencrypted speed. Sequential input very nearly caught up. I am a little bit disappointed in that, though. I was expecting the SATA 3 ports on my new MSI 970A-G43 motherboard to allow the read speeds to surpass the 320 MB/s that the drive managed on my laptop’s SATA 2 port. I must still be hitting a decryption bottleneck, even with the AES-NI kernel module loaded.
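To quantify "mostly caught back up," the sketch below expresses each encrypted result as a fraction of the laptop's unencrypted baseline. The numbers are the block-level K/sec columns from the bonnie++ results. The dictionary keys and the `fraction_of_baseline` helper are names invented for this example, and the MB/s conversion assumes that K means 1,024 bytes.

```python
# Block-level throughput in K/sec, taken from the bonnie++ results.
unencrypted = {"block_write": 183205, "rewrite": 105877, "block_read": 327088}
laptop_aes = {"block_write": 152764, "rewrite": 63475, "block_read": 145506}
fx_aes = {"block_write": 178581, "rewrite": 82598, "block_read": 300500}


def fraction_of_baseline(result: dict, baseline: dict) -> dict:
    """Express each throughput figure as a fraction of the baseline figure."""
    return {key: result[key] / baseline[key] for key in baseline}


laptop_frac = fraction_of_baseline(laptop_aes, unencrypted)
fx_frac = fraction_of_baseline(fx_aes, unencrypted)

for key in unencrypted:
    mb_per_sec = fx_aes[key] / 1024  # assumes K = 1,024 bytes
    print(
        f"{key}: laptop aes {laptop_frac[key]:.0%}, "
        f"FX-8350 aes {fx_frac[key]:.0%} ({mb_per_sec:.0f} MB/s)"
    )
```

By this measure, encrypted block writes on the FX-8350 reach about 97% of the unencrypted figure and block reads about 92%, while the encrypted laptop managed less than half of the unencrypted read speed.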

I’m still very pleased with my Crucial M4. Its price and performance are both good, and the drive is still performing well after nine months of hard use and random benchmarks.

The verdict

The new hardware easily exceeded my performance goals. The grunt-work tasks that I usually have to wait for are running two or three times faster than before, which should save me quite a bit of time.

All the games I play are running faster, and they look better to boot. The video card I have is fast enough for now, and it is nice to know that I’m just a video card upgrade away from having a pretty powerful gaming machine.

I have to say that I am very pleased with this build. I now have more performance than I actually need, and I feel that I got plenty of bang for the buck.

Update 2013-07-16: I have two new pieces of information. I ended up with one bad stick of RAM. I have it boxed up and ready to ship back. I’m not in a hurry to find a UPS drop box, though, because 24 GB is still more than I need.

I also swapped out the stock AMD heat sink and fan combo. It is quite loud. I ended up replacing it with an Arctic 7 CPU Cooler. It was very reasonably priced, and it doesn’t get nearly as loud as the stock cooler does when it spins up to full throttle. The computer isn’t as quiet as my laptop, but that isn’t surprising. The PSU fans are now the loudest thing in there, and one of my spare hard drives I stuck in there seems surprisingly noisy.