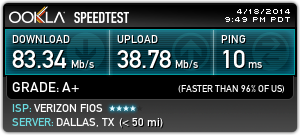

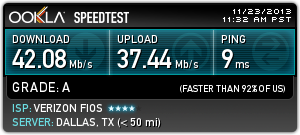

It has been quite a while since we made the decision to drop our cable television service. We’ve just been patiently waiting for our two-year Verizon FiOS contract to expire. The contract expired about a month ago, so we dropped our phone and television service and upgraded our Internet connection from 35/35 megabit to 75/35.

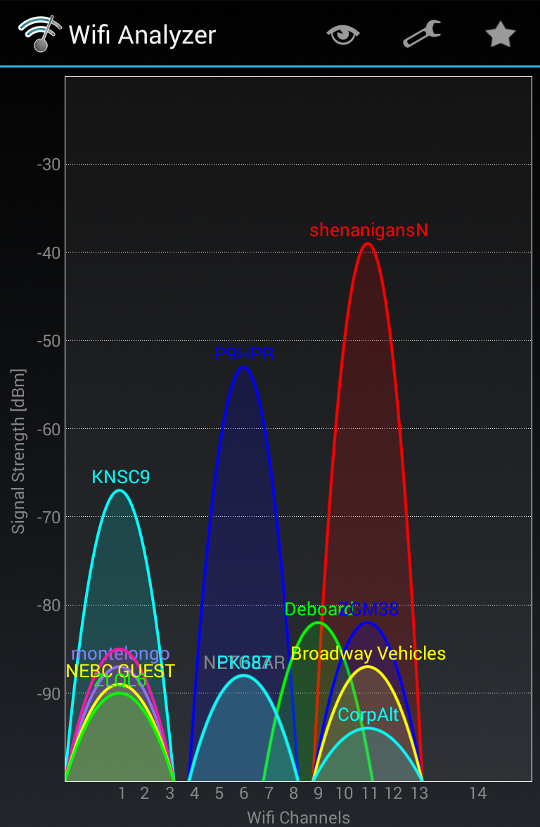

We did have a minor issue. Our television in the living room is just about as far away from our Wi-Fi access point as you can get, and our PlayStation 3 and other set-top boxes all seem to have rather poor wireless hardware. We also live in a building with other tenants, and that means we see lots of wireless interference. I can pick up over a dozen networks from here, and I’m probably close enough to connect to almost all of them.

I’m embarrassed by the solution

My friend Brian had a similar problem a couple of years ago. He’s in a single-story home with his router and access point in one corner of the house, and he was getting very poor signal in the opposite corner. He wanted to try a Wi-Fi repeater or powerline Ethernet adapters, but I shamed him into running a proper network drop.

He decided that if we were going to do the work, running a single drop wasn’t going to cut it, so he wired up every room in the house with Ethernet. I supplied the cable, he bought the jacks, and we put in the time and got it done.

I’m in an apartment. I have hundreds of feet of cable left on my spool, but I just don’t have the access to run a network drop clear across the apartment. I wish I did.

I happened to see a pair of D-Link DHP-309av powerline Ethernet adapters on sale, so I bought them. I’m not especially proud of the decision, but they’re doing a great job.

Not quite 200 megabits per second

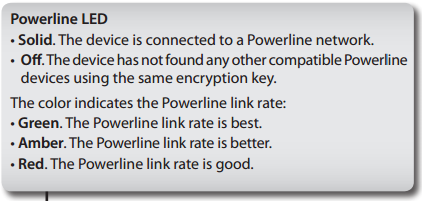

I don’t know what these DHP-309av units actually max out at in the real world. One of the lights shines red, amber, or green, depending on the quality of your connection. My pair are reading amber. The manual uses the word “better” to describe this state. A red light means “good,” and a green light signifies “best.” These aren’t the words I would have chosen.

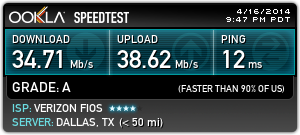

My “better” quality connection gets me between 30 and 40 megabits per second in either direction. That’s better than I ever see over Wi-Fi, and it also means that the television and desktop computer downstairs won’t be competing to make use of our limited Wi-Fi bandwidth.

I also picked up an inexpensive Gigabit Ethernet switch, a TP-LINK TL-SG1005D. That way I can plug in multiple devices by the television, and I was able to run a long cable down the wall to plug in Chris’s desktop computer.

An additional 30-megabit path is a huge improvement

We used to have a desktop, a laptop, various set-top boxes, and up to five Android devices all sharing the Wi-Fi signal. That was only about twenty megabits per second to share between them, and that’s assuming that the PlayStation 3 wasn’t actively limping along at a lower bitrate, which slows everyone else down. We’ve better than doubled the available bandwidth to the living room.
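As a rough back-of-the-envelope check on that claim, here is a small Python sketch. It assumes the wired and wireless paths simply add together, which is a simplification, and the function name `combined_bandwidth` is made up for illustration. The numbers are the approximate figures quoted in this post.

```python
def combined_bandwidth(wifi_mbps, powerline_mbps):
    """Total bandwidth, assuming the wired and wireless paths simply add up."""
    return wifi_mbps + powerline_mbps


WIFI_MBPS = 20               # rough shared Wi-Fi throughput quoted above
POWERLINE_MBPS = (30, 40)    # low and high ends of the powerline measurements

for powerline in POWERLINE_MBPS:
    total = combined_bandwidth(WIFI_MBPS, powerline)
    ratio = total / WIFI_MBPS
    print(f"{powerline} Mbps powerline: {total} Mbps total, {ratio:.1f}x the old figure")
```

Even at the low end of the powerline measurements, the total works out to well over twice what the Wi-Fi alone could offer.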

I haven’t seen any buffering in Netflix or Hulu on the PlayStation 3 since the upgrade, and the video quality never drops. It is very nice to have the PlayStation 3 and its horrible wireless adapter off of our wireless network.

The USB Wi-Fi adapter on Chris’s desktop computer wasn’t very good, so she’s seeing quite an improvement as well.

Encrypting the powerline network

Setting up encryption on these D-Link DHP-309av adapters is pretty simple. You press the button on one adapter, and then walk over and hit the same button on the other adapter. That’s it. It sounds easy.

There’s just one problem: I have no way to verify that my signal is encrypted. It probably is, but there’s no indicator light or web interface to tell you that it is active. I just have to hope that it is working, or that no one else is plugged in close enough to snoop on my signal.

The verdict

I’m quite pleased with the results. A fraction of our first month’s savings from dropping cable television service bought me all the hardware I needed to greatly improve our Netflix and Hulu experience in the living room. What more could I ask for?