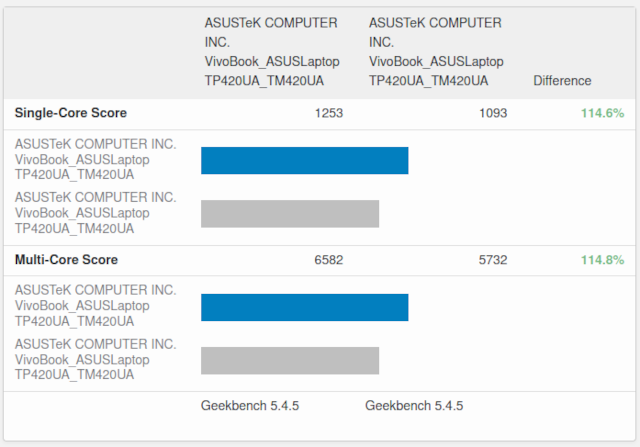

I am writing way too many blogs about mini PCs lately, so I definitely wasn’t planning on publishing this. As I was staring at the graph, I just thought that I had to write about what I was seeing.

The simplified answer to the question in the title is yes. There are outliers that are a better deal. All the common mini PC models from the lowly $129 N5095 up to one with an 8845HS for $649 have similar enough value per unit of CPU horsepower or unit of RAM, though they do drift towards being a little more expensive as you climb the ladder. Even so, you do get other upgrades along the way like more NVMe slots, faster network interfaces, and better integrated GPUs.

There is some variation. The math doesn’t work out perfectly, and the models don’t wind up being fractions that divide evenly with each other. You can’t literally say that one Ryzen 5560U Beelink box is exactly equivalent to two Celeron N100 Trigkey boxes, but it is quite close.

I am not even going to try to sneak an extra subheading in here before giving you the table.

| CPU | RAM | Price | Geekbench | Geekbench per $100 | RAM per $100 |
|---|---|---|---|---|---|
| n5095 | 16 GB | $129.00 | 2100 | 1628 * | 12.40 GB |
| n5095 | 32 GB | $187.00 | 2100 | 1123 | 17.11 GB * |
| n100 1gbe | 16 GB | $134.00 | 2853 | 2129 * | 11.94 GB |
| n100 1gbe | 32 GB | $192.00 | 2853 | 1486 | 16.67 GB * |
| n100 2.5gbe | 16 GB | $239.00 | 2786 | 1166 * | 6.69 GB |
| n100 2.5gbe | 32 GB | $329.00 | 2786 | 847 | 9.73 GB |
| n100 2.5gbe | 48 GB | $380.00 | 2786 | 733 | 12.63 GB * |
| 5560u | 16 GB | $206.00 | 6200 | 3010 * | 7.77 GB |
| 5560u | 64 GB | $321.00 | 6200 | 1931 | 19.94 GB ** |
| 5700u | 16 GB | $233.00 | 7200 | 3090 ** | 6.87 GB |
| 5700u | 64 GB | $348.00 | 7200 | 2069 | 18.39 GB * |
| 5800u | 16 GB | $239.00 | 7900 | 3305 | 14.94 GB |
| 6900hx | 16 GB | $439.00 | 10200 | 2323 * | 3.64 GB |
| 6900hx | 64 GB | $614.00 | 10200 | 1661 | 10.42 GB |
| 6900hx | 96 GB | $721.00 | 10200 | 1415 | 13.31 GB * |
| 7840hs | 32 GB | $550.00 | 12000 | 2182 * | 5.82 GB |
| 7840hs | 64 GB | $725.00 | 12000 | 1655 | 8.83 GB |
| 7840hs | 96 GB | $832.00 | 12000 | 1442 | 11.54 GB * |
| 8745h | 32 GB | $442.00 | 12000 | 2715 * | 7.24 GB |
| 8745h | 64 GB | $617.00 | 12000 | 1945 | 10.37 GB |
| 8745h | 96 GB* | $724.00 | 12000 | 1657 | 13.26 GB * |
| 8845hs | 32 GB | $649.00 | 12000 | 1849 * | 4.93 GB |
| 8845hs | 64 GB | $824.00 | 12000 | 1456 | 7.77 GB |
| 8845hs | 96 GB* | $931.00 | 12000 | 1289 | 10.31 GB * |

NOTE: Heavily rounded Geekbench 5 scores are guesstimated from searching Geekbench’s database. Precise numbers are from my own personal tests.

UPDATE: I am sneaking in this Trycoo HA-2 5800U mini PC, because it is priced close to a Trigkey or Beelink 5700U while adding a 2.5-gigabit Ethernet port. I don’t know the brand, but that sure sounds like a good deal!

UPDATE: There are two mini PCs with the much more recent Ryzen 8745H and 8745HS showing up in my deal feeds. The Minisforum UM870 and the Beelink SER8 are both starting to show up with 32 GB of RAM for less than $450. That price moves them way up near the front of the pack in bang-for-the-buck.

These mini PCs are discounted almost every single week, so the prices listed are the lowest usual sale prices that I have seen. Don’t buy at full price, but you may have to wait a while to see a sale as low as what I have listed in the table!

The lines with an asterisk next to the RAM are priced with the biggest RAM kit that they are capable of using. You can’t buy most of these without preinstalled RAM, so you will have a spare stick or two of RAM if you upgrade your own mini PC.

I have been keeping this data in a messy Google Sheet. If you want to be able to sort or do your own math, you should definitely check it out!
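If you would rather do the math in code than in a spreadsheet, here is a minimal Python sketch of how the two "per $100" columns can be derived from the price, RAM, and Geekbench numbers. The function name `per_100_dollars` and the `rows` list are my own illustration, and only a handful of rows from the table are copied in.

```python
def per_100_dollars(amount: float, price: float) -> float:
    """Return how much of `amount` each $100 of `price` buys."""
    return amount / price * 100


# (cpu, ram_gb, price_usd, geekbench) copied from a few rows of the table
rows = [
    ("n5095", 16, 129.00, 2100),
    ("n100 1gbe", 16, 134.00, 2853),
    ("5560u", 16, 206.00, 6200),
    ("5560u", 64, 321.00, 6200),
]

for cpu, ram_gb, price, geekbench in rows:
    geekbench_per_100 = per_100_dollars(geekbench, price)
    ram_per_100 = per_100_dollars(ram_gb, price)
    print(
        f"{cpu:<10} {ram_gb:>3} GB  ${price:>7.2f}  "
        f"{geekbench_per_100:>5.0f} Geekbench/$100  {ram_per_100:>5.2f} GB/$100"
    )
```

Add your own rows with whatever sale prices you find, and the same two divisions will tell you where a deal lands relative to the rest of the table.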

- Choosing an Intel N100 Server to Upgrade My Homelab

- The Sipeed NanoKVM Lite Is An Amazing Value

- When An Intel N100 Mini PC Isn’t Enough Build a Compact Mini-ITX Server!

- Topton DIY NAS Motherboard Rundown!

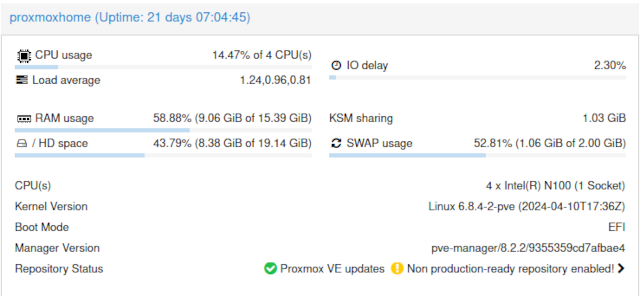

- My First Week With Proxmox on My Celeron N100 Homelab Server

- It Was Cheaper To Buy A Second Homelab Server Mini PC Than To Upgrade My RAM!

- I Added A Refurbished Mini PC To My Homelab! The Minisforum UM350

I was surprised to see that 48 GB DDR5 SO-DIMMs are a good deal!

First of all, you can still save more than a few dollars on RAM if you choose an older mini PC that uses DDR4 RAM. DDR4 is still abundant, so the prices are still slowly going down.

You can only get DDR4 SO-DIMMs in 8 GB, 16 GB, or 32 GB sizes, but DDR5 SO-DIMMs are also available in 12 GB, 24 GB, and 48 GB sizes! That means you can squeeze 48 GB of RAM in a mini PC with only a single SO-DIMM slot or 96 GB in a mini PC with two SO-DIMM slots. You will have to pay a small premium per gigabyte compared to 32 GB DDR5 SO-DIMMs.

My brain immediately said, “That can’t be a good value!” but I was wrong. If your goal is to pack as much RAM into your homelab cluster as possible, then it is very much worth paying that premium.
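Here is a rough sketch of that tradeoff using the 2.5-gigabit N100 rows from the table. It assumes the cost of the upgrade stick is simply the difference between the upgraded price and the stock 16 GB price, which is my reading of the table and not something the spreadsheet states outright. The function names are made up for this example.

```python
def stick_cost_per_gb(base_price: float, upgraded_price: float, ram_gb: int) -> float:
    """Dollars per gigabyte for the upgrade stick alone (assumed: price difference)."""
    return (upgraded_price - base_price) / ram_gb


def ram_per_100_dollars(ram_gb: int, total_price: float) -> float:
    """Gigabytes of RAM per $100 for the whole upgraded mini PC."""
    return ram_gb / total_price * 100


BASE_PRICE = 239.00                   # n100 2.5gbe with the stock 16 GB
UPGRADES = {32: 329.00, 48: 380.00}   # upgraded RAM in GB -> total price

for ram_gb, total in UPGRADES.items():
    per_gb = stick_cost_per_gb(BASE_PRICE, total, ram_gb)
    whole_box = ram_per_100_dollars(ram_gb, total)
    print(f"{ram_gb} GB stick: ${per_gb:.2f} per GB, whole box: {whole_box:.2f} GB per $100")
```

Under that assumption, the 48 GB stick costs a little more per gigabyte than the 32 GB stick, but the fixed cost of the mini PC is spread over more RAM, so the whole box moves from 9.73 GB to 12.63 GB per $100.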

NOTE: I haven’t personally tested 48 GB SO-DIMMs in any of these mini PCs. We have friends on our Discord server who have used 48 GB DDR5 SO-DIMMs with the Topton N100 motherboard and N100 mini PCs. Your mileage may vary, but I expect these will work in the higher end Ryzen mini PCs as well. To play it safe, I would make sure you buy your RAM from a store with a good return policy!

There are some outliers on that table that just don’t fit in!

It isn’t too surprising that both the oldest and newest CPUs on the list are the worst value. The N5095 is not only the weakest CPU in the table, but it has the worst performance per dollar. Even so, it is one of the best deals around if you want lots of RAM in your homelab!

The Ryzen 8845HS and 7840HS are very nearly the exact same piece of hardware. The newer CPU has a stronger NPU, but they are identical in every other way. You’re paying a premium for the absolute newest CPU here, and you are paying a premium with either for DDR5 RAM.

Both models of the Beelink SER5 lean in the opposite direction. Their Ryzen 5560U and 5700U processors are getting pretty long in the tooth, so they are priced really well for their performance capability. Not only that, but they require DDR4 SO-DIMMs, so they also have the advantage of using less costly RAM.

- Beelink SER5 Mini PC (Ryzen 5560U) at Amazon

- Beelink SER7 Mini PC (Ryzen 7840HS) at Amazon

- Beelink SER8 Mini PC (Ryzen 8845HS) at Amazon

Sometimes you get six of one, half a dozen of the other

Half of the table has RAM prices in the 6, 9, or 12 gigabytes of RAM per $100 range. Each mini PC starts out expensive with the stock RAM, and the pricing gets better as you upgrade. The value for RAM gets slightly worse the higher you go up the list, but Geekbench scores also tend to go up.

The big, messy spreadsheet has a column with the Geekbench score per gigabyte of RAM. That might be a good number to look at if you are working hard to minmax your mini PC cluster, but I can save you some time. The winners here again are the Beelink SER5 with a Ryzen 5560U or Ryzen 5700U.

The Ryzen 5700U being the value champ seems to be the theme of this blog, but I think it is important to note that almost everywhere on this list you are trading RAM for CPU chooch.

Prices go up at the high end, but you do get something for your money!

I like simplifying everything down to RAM or benchmark scores per dollar. I want my virtual machines to only need to be assigned RAM and CPU to do their job, and I don’t want them to worry about where they are running. I prefer to avoid passing in PCIe or USB devices unless I absolutely have to. Sometimes you need more than just RAM and CPU to get things done.

The Beelink SER6 MAX starts to drift into the more expensive territory if you are simply breaking it down by RAM per dollar, but it adds some useful upgrades. It has a 2.5 gigabit Ethernet port, a pair of M.2 slots for NVMe storage, and a 40-gigabit USB4 port. I don’t currently need these, but these upgrades definitely add value, and they could be extremely useful for someone.

The Beelink SER7 and SER8 both have the 2.5 gigabit Ethernet port, and they both have dual 40-gigabit USB4 ports, but they lack the second NVMe slot.

On the lower end, there are mini PCs like the CWWK N100 mini PC router in my own homelab. It has four 2.5 gigabit Ethernet ports and FIVE NVMe slots. It costs more than a Beelink or Trigkey mini PC with the same CPU, but if you need more than one network port or NVMe, then it might be worth the extra cost.

- CWWK Mini PC 4-port router (Intel N100) at Amazon

- Beelink SER6 Mini PC (Ryzen 6900HX) at Amazon

- Beelink SER7 Mini PC (Ryzen 7840HS) at Amazon

- Beelink SER8 Mini PC (Ryzen 8845HS) at Amazon

As far as I am concerned, RAM is the king of virtualization

If you’re going to run Proxmox in your homelab, and you don’t know what to buy, then you should put RAM at the top of your list.

You can over provision disk images. Things will work. Things will run. Things may run forever, but you may run out of disk space some day. That is something that can be fixed.

You can over provision your CPU, and you definitely should! The odds of all your virtual machines needing to do hard work at the same time ought to be low. Even if they aren’t, the worst thing that happens if you don’t have enough CPU is that your tasks will run slower. The important thing is that they will run even if they run slowly.

From a purely technical standpoint, you can over provision RAM. There are mechanisms that let your machines release RAM to the host to be used by other machines when they need it, but if you push this too far, processes or virtual machines will start to be killed.

Memory is a resource that even idle virtual machines need. A gigantic PostgreSQL database eats up a lot of RAM whether you are asking it to run queries or not. Your dozens of Nginx processes that are waiting hours, days, or weeks for a connection may not be using any CPU, but they are sitting somewhere on a stick of RAM ready to respond.

Unallocated RAM on a Proxmox server is just RAM waiting to be allocated to a new virtual machine tomorrow. It is handy to have some spare!

How big of a mini PC do I need?

This blog is making me feel like a liar, because a few weeks ago I wrote an entire blog saying that it was cheaper to buy a second N100 mini PC than to upgrade the RAM in my existing N100 mini PC. For my own purposes, it was a better value, but I am beginning to think I didn’t do the math correctly with a 48 GB DDR5 SO-DIMM!

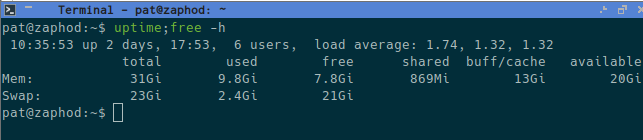

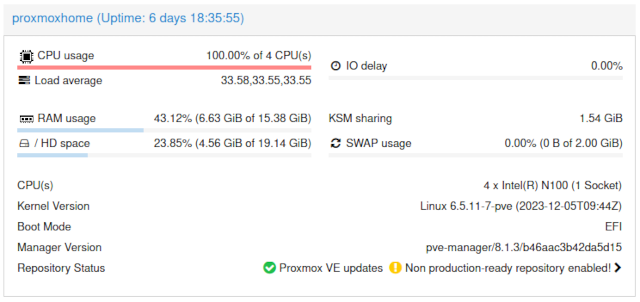

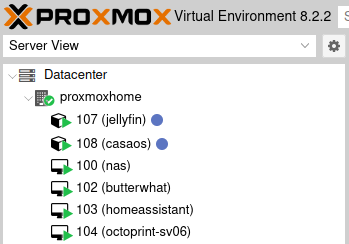

The variety and combination of mini PCs you buy for your homelab will depend on your needs. My whole world fits on a single Intel N100 with 16 GB of RAM, and adding a second similar machine was a luxury. Adding a second server has also been fun. That’s a good enough excuse to do pretty much anything!

My biggest virtual machine is using 3 GB of RAM, and I could tighten that up quite a bit if I had to. Most of my virtual machines and LXC containers are using 1 GB or less. These are small enough that I could easily juggle them around on a Proxmox cluster where each physical server only has 8 GB of RAM.

Your setup might be different. You might have a handful of Windows virtual machines that really need at least 8 GB of RAM to spread out into. If that’s the case, you’d have trouble with 16 GB mini PCs like I am using. There’s only 14 or 15 GB of RAM left over once you boot Proxmox, so you might only fit one of these bigger virtual machines on each mini PC. That’d make playing musical chairs with virtual machines a challenge, so you might be better off with mini PCs with at least 32 GB of RAM.
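The arithmetic behind that is just integer division. This is a tiny sketch, not a sizing tool: `vms_per_host` is a name I made up, and the 30 GB figure for a 32 GB mini PC is an assumption that Proxmox takes about the same couple of gigabytes there as it does on a 16 GB box.

```python
def vms_per_host(usable_ram_gb: float, vm_ram_gb: float) -> int:
    """How many identically sized virtual machines fit in the usable RAM of one host."""
    return int(usable_ram_gb // vm_ram_gb)


# 16 GB mini PC with roughly 14 GB left after booting Proxmox
print(vms_per_host(14, 8))

# 32 GB mini PC, assuming roughly 30 GB is left over (my assumption, not measured)
print(vms_per_host(30, 8))
```

One 8 GB virtual machine per 16 GB host leaves 6 GB stranded on every box, which is why the bigger hosts make the shuffling so much easier.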

There’s a balance to be had here. I keep saying that I like the ratio of CPU horsepower to RAM in an N100 mini PC with 16 GB of RAM or a Ryzen 5560U mini PC with 32 GB of RAM. I am comfortable with that ratio without knowing anything at all about what you’re doing with your homelab. It just feels like a good middle ground.

My own homelab wouldn’t be short on CPU even with 48 GB in the N100 or 64 GB in the 5560U. We all have different needs.

One big mini PC, or lots of small ones?!

One big server is easier to manage, and you won’t have to worry about splitting up your large virtual machines while leaving room to fit your smaller virtual machines in between the cracks.

These mini PCs are fantastic because they are so power efficient. The Intel N5095 and N100 mini PCs DO idle more than a few watts lower than all the Ryzen mini PCs on this list, but the difference isn’t all that dramatic. My pair of Intel N100 mini PCs burn at least as much electricity as a single Beelink SER5, and the most expensive mini PCs with Ryzen 8845HS CPUs are probably comparable to the Beelink SER5. That means consolidating down to one beefy mini PC will almost definitely save you money on your electric bill.

Splitting things up provides redundancy. I am not doing anything this fancy, but you can set up a high-availability Proxmox cluster with three or four N100 mini PCs. If one fails, those virtual machines will magically pick up where they left off on one of the remaining mini PCs.

That is pretty cool, but even if you don’t set that up, it is nice knowing that all your services won’t go down due to a single hardware failure, and you could always restore the unavailable virtual machines from backup and run them on the remaining mini PCs. That is assuming you have enough free RAM!
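A quick way to sanity check the "enough free RAM" part is to pretend your biggest node just died and see whether everything still fits on what is left. This is a deliberately simple sketch: it only compares totals, so it ignores the problem of one large virtual machine not fitting on any single remaining node. The function name and the example numbers are hypothetical.

```python
from __future__ import annotations


def survives_node_failure(node_ram_gb: list[float], vm_ram_gb: list[float]) -> bool:
    """True if the total VM RAM still fits after losing the largest node.

    This is an aggregate check only. It does not verify that each
    individual VM fits on one of the surviving nodes.
    """
    remaining = sum(node_ram_gb) - max(node_ram_gb)
    return sum(vm_ram_gb) <= remaining


# Three 16 GB mini PCs with about 14 GB usable each, and a hypothetical VM list
nodes = [14, 14, 14]
vms = [3, 1, 1, 1, 8, 8]
print(survives_node_failure(nodes, vms))
```

If this returns `False`, a single dead mini PC means something stays offline until you buy more hardware or shrink a virtual machine.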

That brings us to another neat feature of running lower-spec mini PCs. When your cluster gets low on RAM or CPU, you can buy another inexpensive mini PC. This works even if you start with only one tiny mini PC.

I prefer the idea of multiple smaller mini PCs

I don’t think there are any wrong choices to make here, but here’s what I have been thinking about for my own setup.

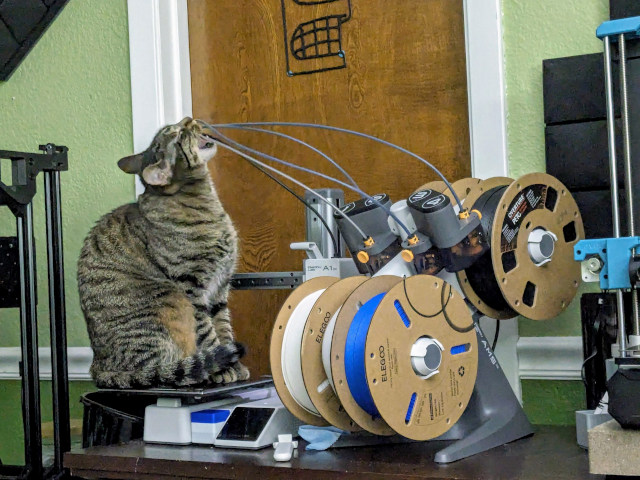

There are plenty of uses outside of our homelabs for these mini PCs. My Trigkey N100 mini PC that I bought for my homelab hasn’t made it into my homelab yet. I have had the Trigkey N100 plugged into the living room TV playing Steam, NES, SNES, and Nintendo Wii games for the last few weeks.

You can plug these into an old monitor and have an extra PC at your workbench or CNC machine, and most of them have brackets that let you hang them from your monitor’s VESA mount. Almost anything you might do with a Raspberry Pi can be done with an Intel N5095 or N100 mini PC.

If you buy one big mini PC that can meet all your homelab needs, then it is going to be stuck in your homelab for a very long time.

If you have a variety of less powerful mini PCs, you can decide to upgrade and retire one early to reuse that slightly outdated mini PC for something else. You could send it to a friend’s house to use as an off-site Proxmox server and backup host. You could retire it to the living room to stream your Steam library. You could set it up to control your 3D-printer farm.

I expect to get better overall use out of three $150 mini PCs than I would out of a single $450 mini PC, and it is nice knowing that I can upgrade my homelab slowly instead of having to replace nearly everything all at once.

Your stack of mini PCs doesn’t have to be identical

I fall into this trap a lot when I stare at the spreadsheet. I want to say things like, “Three N100 mini PCs are equivalent to one 6900HX mini PC,” but I shouldn’t be thinking that way. The obvious problem is that the various mini PCs don’t quite divide evenly into each other, but that isn’t the only thing wrong with this line of thinking.

Maybe you have a single virtual machine that needs 16 GB of RAM, then a whole mess of other machines that only need 1 or 2 GB. That seems like a good excuse to build your homelab out of one Ryzen 5700U mini PC and one or two Intel N100 mini PCs.
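To make that concrete, here is a small sketch of a greedy placement: take the virtual machines from biggest to smallest and put each one on whichever node currently has the most free RAM. This is just one simple heuristic that I picked for illustration, not how Proxmox schedules anything, and the node sizes and virtual machine names are hypothetical.

```python
from __future__ import annotations


def place_vms(nodes: dict[str, float], vms: dict[str, float]) -> dict[str, list[str]]:
    """Greedily assign VMs (largest first) to the node with the most free RAM."""
    free = dict(nodes)  # remaining usable RAM per node, in GB
    placement: dict[str, list[str]] = {name: [] for name in nodes}

    for vm, need in sorted(vms.items(), key=lambda item: item[1], reverse=True):
        node = max(free, key=lambda name: free[name])
        if free[node] < need:
            # If the emptiest node cannot hold it, no node can.
            raise ValueError(f"no node has {need} GB free for {vm}")
        placement[node].append(vm)
        free[node] -= need

    return placement


# Hypothetical usable RAM: one 32 GB Ryzen 5700U box and two 16 GB N100 boxes
nodes = {"5700u": 30.0, "n100-a": 14.0, "n100-b": 14.0}
vms = {"big": 16.0, "web": 2.0, "dns": 1.0, "files": 2.0, "test": 1.0}

for node, assigned in place_vms(nodes, vms).items():
    print(node, assigned)
```

The 16 GB virtual machine can only land on the Ryzen box, and the small ones spread out over whatever has room, which is the whole argument for mixing sizes.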

You can mix and match whatever makes sense for you. Heck! Your homelab doesn’t even have to be 100% mini PCs!

We didn’t talk about iGPUs!

From a gaming perspective, the table at the top of this blog goes from worst to best. The Ryzen 7840HS and 8845HS have ridiculously fast integrated GPUs! They are a generation ahead of the Steam Deck, and they have even more GPU compute units. They can run a lot of games quite well, and they can even manage to run many of the most demanding games as long as you turn the settings down.

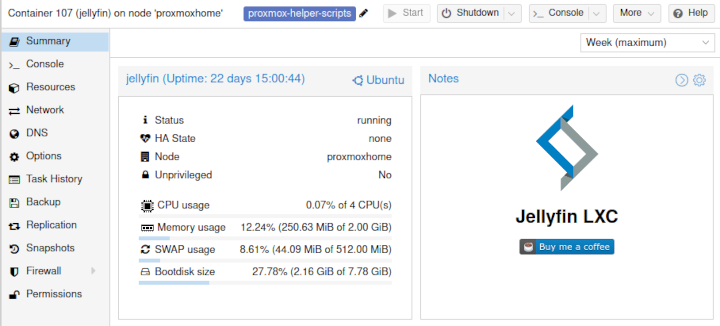

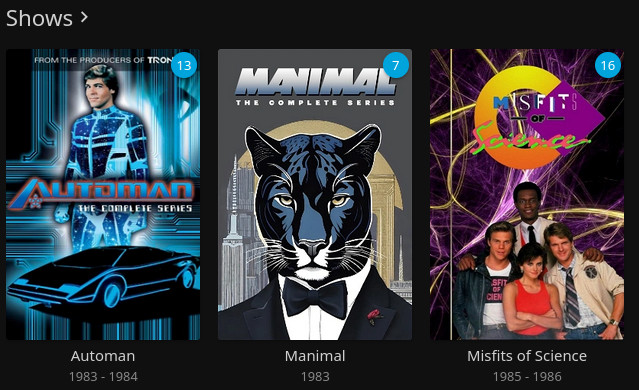

A common use case for the iGPU in your homelab would be video transcoding for Plex or Jellyfin. There isn’t nearly as big of a gap in transcoding performance as there is in gaming performance between these boxes. They only set aside a small corner of these GPUs for the video encoding and decoding hardware, and they are all really only aiming to make sure you have enough horsepower to encode and decode 4K video in real time.

My Intel N100 mini PC can transcode 2160p HDR video with Jellyfin at 77 frames per second. That is enough to keep three movies playing on three televisions. I don’t know where my upper limit is on the N100. Transcoding two movies at the same time utilizes more of the GPU, and each stream maintains better than half of that 77 frames per second. I wouldn’t be surprised if there is enough room for me to feed five televisions with transcoded 10-bit 2160p video.
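The "three televisions" number falls out of dividing the transcode speed by the playback frame rate. Here is a small sketch of that estimate. The 24 frames per second default is my assumption for typical movie content, and `realtime_streams` is a made-up helper name.

```python
import math


def realtime_streams(transcode_fps: float, playback_fps: float = 24.0) -> int:
    """Estimate how many streams can be transcoded in real time.

    Assumes throughput from a single transcode divides evenly between
    streams, which is a conservative guess.
    """
    return math.floor(transcode_fps / playback_fps)


print(realtime_streams(77))
```

Since parallel transcodes each manage better than half of the single-stream speed, the real ceiling is probably higher than this estimate suggests.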

Even better, my Jellyfin server rarely has to transcode because the majority of my devices can play back the raw video streams straight out of the video files.

If transcoding is your goal, every mini PC on the list is up to the task. I wouldn’t be surprised if the performance goes up a bit towards the higher end of the list, but I bet it doesn’t go up all that much.

Conclusion

I guess the real conclusion is that the best bang for your buck is easily the Beelink SER5 with the Ryzen 5560U or 5700U, with the N5095 and N100 boxes coming in a close second, but the important thing to remember is that these aren’t miles ahead on value. You can spend a little more on beefier mini PCs without paying too much of a premium.

Sometimes that premium even buys you something you can use. The Beelink SER6 MAX has a 2.5 gigabit Ethernet interface and room for two NVMe drives, and if that isn’t enough for you, the CWWK N100 mini PC in my homelab has four 2.5 gigabit Ethernet ports and room for FIVE NVMe drives. There are so many options available in small packages to piece together a nifty homelab cluster for yourself that might fit in a lunchbox or shoebox.

What do you think? Are you building out a full-size 42U server rack at home? Or are you trying to build something quieter and more efficient? Are you already running a short stack of mini PCs, or are you looking to build up a diminutive server farm at home? What’s the most unexpected use case you’ve found for a mini PC? Tell us about it in the comments, or stop by the Butter, What?! Discord server to chat with me about it!

- Pat’s Mini PC Comparison Spreadsheet

- Degrading My 10-Gigabit Ethernet Link To 5-Gigabit On Purpose!

- The Sipeed NanoKVM Lite Is An Amazing Value

- When An Intel N100 Mini PC Isn’t Enough Build a Compact Mini-ITX Server!

- Topton DIY NAS Motherboard Rundown!

- I Added A Refurbished Mini PC To My Homelab! The Minisforum UM350

- Trigkey N100 Mini PC at Amazon

- CWWK Mini PC 4-port router (Intel N100) at Amazon

- Beelink SER5 Mini PC (Ryzen 5560U) at Amazon

- Beelink SER6 Mini PC (Ryzen 6900HX) at Amazon

- Beelink SER7 Mini PC (Ryzen 7840HS) at Amazon

- Beelink SER8 Mini PC (Ryzen 8845HS) at Amazon