The short answer seems to be yes, but why did I decide to write about this question today?!

Through the magic of Tailscale, my Seafile server, a Dropbox-like cloud-storage server, has been running remotely on a Raspberry Pi in Brian Moses’s home office for 22 months. The Pi boots off of a cheap micro SD card with all logging turned off, and Seafile stores all its data on a 14 TB Seagate USB hard drive.

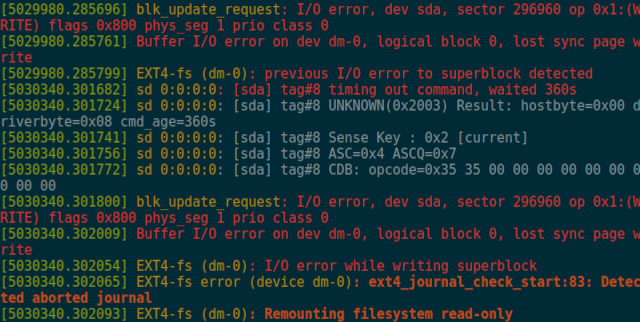

Last week I had a scare! The ext4 filesystem on my USB hard drive remounted itself read-only, and there were errors in the kernel’s ring buffer. Did my big storage drive fail prematurely?!
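On Linux, a filesystem that has flipped to read-only typically shows an `ro` flag in its `/proc/mounts` entry. Here is a minimal Python sketch that scans text in that format for read-only ext4 mounts. The device names and mount points in `SAMPLE` are made up for illustration and are not taken from the actual server.

```python
def read_only_mounts(mounts_text, fstypes=("ext4",)):
    """Return mount points whose option list contains 'ro'.

    mounts_text is expected to look like /proc/mounts:
    device, mount point, filesystem type, options, two numeric fields.
    """
    hits = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        _device, mount_point, fstype, options = fields[:4]
        if fstype in fstypes and "ro" in options.split(","):
            hits.append(mount_point)
    return hits


# Hypothetical sample; real input would be the contents of /proc/mounts.
SAMPLE = """\
/dev/mmcblk0p2 / ext4 rw,noatime 0 0
/dev/mapper/seafile /mnt/seafile ext4 ro,relatime 0 0
"""

print(read_only_mounts(SAMPLE))  # ['/mnt/seafile']
```

On the server itself you could pass `open("/proc/mounts").read()` instead of `SAMPLE` and run the check from cron, so a read-only remount gets noticed quickly.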

- Self-Hosted Cloud Storage with Seafile, Tailscale, and a Raspberry Pi

- My Self-Hosted Cloud Storage with Seafile and Tailscale is Already Cheaper Than Dropbox or Google Drive!

What happened?!

We may never know. I rebooted my little server, and then I ran an fsck. When that finished, I mounted the LUKS encrypted filesystem on the 14 TB USB hard drive, fired Seafile back up, and everything was happy. I haven’t seen another error in 13 days.

What do you think might have gone wrong? Is my USB cable flaky? Did the USB-to-SATA hardware in the USB drive get into a weird state? Did my little friend Zoe run through Brian’s office and bang into the cabinet and jostle the USB cable enough at just the right time to generate an error?

This is the first error I’ve seen in 22 months outside of a couple of random power outages.

This is good enough for me to say that it is safe to run a USB hard drive on my Raspberry Pi server. Maybe that’s not good enough for you. If not, then I hope this is at least a useful data point in your research.

The power outages all wind up being long Seafile outages, because I have to SSH in to punch in the passphrase to mount the encrypted filesystem before Seafile can start. Not only that, but I have to notice that Seafile is down before I can spend two minutes firing it back up again!
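Noticing the outage is the slow part, so a small reachability check run from another machine on the tailnet could help. This is only a sketch: it tests whether a TCP port accepts connections, and the hostname and port in the usage note are assumptions, not details of the real setup.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `port_is_open("seafile-pi", 8000)` would return `False` while the encrypted filesystem is still waiting for its passphrase and Seafile has not started, assuming the web interface listens on port 8000 on a host named `seafile-pi`. Hooking that result up to an email or chat notification is left out here.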

I am pleased that my USB hard drive didn’t fail!

I have been comparing my Dropbox-style server to Google Drive’s pricing. Google sells 2 TB of cloud sync storage for $100 per year, and I paid about $300 for my 14 TB drive and my Raspberry Pi. I am getting my off-site colocation for free by storing my Pi at Brian Moses’s house on his 1-gigabit fiber connection. You can just barely see my Pi server in the background of our Butter, What?! Show episodes!

I have done a bad job of properly keeping track of how much money I haven’t paid Google Drive or Dropbox. When I started out, I would have needed to rent 4 TB of storage. At some point during the first year, I would have had to add another 2 TB, and then I would have had to add another 2 TB at some point during this year.

I am currently using 6.8 TB out of a possible 14 TB.

Is it OK if I simplify what I would have had to pay Google Drive? How about we just say I would have spent $200 last year, $400 this year, and I would be spending another $400 in two months? I expect that’s underestimating by something around $100.

I have already paid for all the hardware, and I would still be at least $50 ahead even if I had needed to order a replacement 14 TB drive last week.
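The arithmetic behind those numbers can be sketched in a few lines of Python. The dollar figures come straight from the simplified estimate above. The replacement-drive price is not stated, so the sketch only derives the highest price that would still leave a $50 margin.

```python
HARDWARE_COST = 300        # 14 TB drive plus Raspberry Pi
AVOIDED_SO_FAR = [200, 400]  # simplified Google Drive bills: last year, this year
NEXT_PAYMENT = 400         # the bill that would arrive in two months
CLAIMED_MARGIN = 50        # "at least $50 ahead"


def savings_summary(hardware, avoided, next_payment, margin):
    """Summarize how far ahead the self-hosted setup is, in dollars."""
    avoided_total = sum(avoided)
    ahead_now = avoided_total - hardware
    return {
        "avoided_so_far": avoided_total,
        "ahead_now": ahead_now,
        # Highest replacement-drive price that still leaves the margin.
        "max_replacement_drive": ahead_now - margin,
        "avoided_after_next": avoided_total + next_payment,
    }


summary = savings_summary(HARDWARE_COST, AVOIDED_SO_FAR, NEXT_PAYMENT, CLAIMED_MARGIN)
for name, dollars in summary.items():
    print(f"{name}: ${dollars}")
```

With these inputs, $600 in avoided bills minus $300 of hardware leaves $300, so a replacement drive costing up to $250 would still leave me $50 ahead. The total avoided after the next payment comes to $1,000.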

That would have been fine, but I would have been bummed out if I had to do the work of resyncing my data to my new Seafile server. I also might have to set up accounts for the friends I share work with, and they might have to get data synced back up. That would all be a bummer.

I don’t account for the cost of labor in my “savings”

I know what I used to charge in the short spans of time when I did hourly consulting. I understand that my time is valuable, and that value almost certainly exceeds the cost of a few years of Google Drive storage.

There are quite a number of advantages to what I am doing, and they are very difficult to put a price on.

- I own all my data

- My server is only accessible from my Tailscale network

- Everything except Tailscale is blocked on the LAN port

- My hard disk is LUKS encrypted

- My Seafile libraries are encrypted client side

- I don’t get stopped to pay more at every 2 TB

Aside from all of this, it is a bit disingenuous for me to imply that getting my Seafile setup running requires time and effort while setting up Google Drive or Dropbox requires none. I have my data split up into a dozen Seafile libraries, and I have no idea what the equivalent functionality would look like with Dropbox or Drive. I’d still have to make sure that the correct data is synced to the correct devices.

Even though getting Seafile running on a Pi was more persnickety and time consuming than I anticipated, I still spent the majority of the time setting up clients on all my machines and syncing the correct data to each one.

I didn’t intend for this to be an update on the Seafile Pi with Tailscale

Even though this wasn’t my intention, this seemed like a good time for an update, since the server has been in operation for nearly two years. I am quite pleased with how things have been working.

A few months ago, I started booting Raspbian’s 64-bit kernel, and I replaced the 32-bit Tailscale binaries with 64-bit static binaries. That increased my Tailscale throughput from 70 megabits per second to just over 200 megabits per second.

I also swapped out the 4 GB Pi for a 2 GB model. They’re identical except for the RAM, but the 2 GB Pi will not negotiate gigabit Ethernet. It is stuck at 100 megabit, so I have given up some of my Tailscale encryption speed boost!
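A rough way to think about this is that the slower of the two limits wins: the Tailscale encryption speed or the Ethernet link speed. This sketch uses the round numbers from above and ignores protocol overhead, so real throughput will be somewhat lower.

```python
def effective_mbps(tunnel_mbps, link_mbps):
    """Best-case throughput is capped by the slower of the two limits."""
    return min(tunnel_mbps, link_mbps)


# (Tailscale throughput, Ethernet link speed) in megabits per second
setups = {
    "32-bit Tailscale, gigabit link": (70, 1000),
    "64-bit Tailscale, gigabit link": (200, 1000),
    "64-bit Tailscale, 100-megabit link": (200, 100),
}

for name, (tunnel, link) in setups.items():
    print(f"{name}: about {effective_mbps(tunnel, link)} Mbps at best")
```

Even capped at 100 megabits per second, the 2 GB Pi still comes out ahead of the original 70.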

Would I do this with a Raspberry Pi today?

Not a chance! The hardware is working just fine, but Raspberry Pi boards are never in stock. When they are in stock, they are overpriced.

My friend Brian Moses recently grabbed a Beelink box for $140 from Amazon. It has a Celeron N5095, 8 GB of RAM, and a 256 GB SSD.

Here’s that Beelink MiniS that I shared yesterday. It was such a good deal, I couldn’t resist buying one. #bananaforscale #macminiforscale pic.twitter.com/UKZfPFUjzw

— Brian Moses (@briancmoses) November 4, 2022

You get so much more with the Beelink! There’s a real SSD inside. The CPU is several times faster, and so is the GPU. You get full-size HDMI ports. You get 802.11ac. The Beelink isn’t just a bare board. It comes in a nice case, and it comes with a power supply!

The Raspberry Pi 4 is more than enough for my purposes, and I would gladly use one again if they were still under $50.

One of the neat things about the Beelink boxes is that you can get a lot more horsepower than the Celeron version that Brian bought. You can also get a Beelink with 16 GB of RAM and a 6-core Ryzen 5 5560U that is about three times faster. One of our friends on our Discord server bought one of those when it was on sale for only $300.

- Beelink Mini S (Celeron N5095) at Amazon

- Beelink SER5 Mini PC (Ryzen 5 5560U) at Amazon

Conclusion

I am relieved that my unexpected and unexplained disk error didn’t wind up being a drive failure, and I am super excited that the minor gamble that is this entire Seafile Pi experiment is paying off. I have invested less than $300 in hardware, and in two months I will have avoided paying Google $1,000 or Dropbox $1,200 for cloud storage.

Even if we assign me a rather high hourly rate, I am confident that I have crossed the point where I am saving money. Isn’t that awesome?!