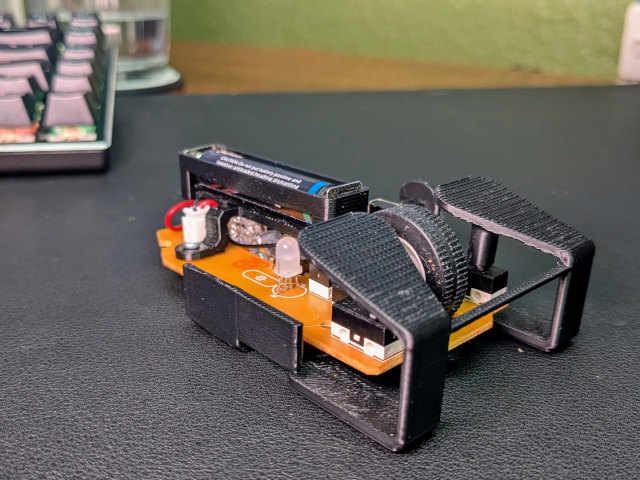

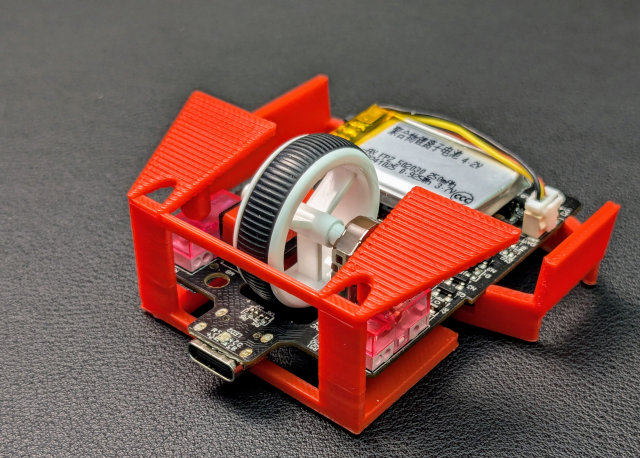

I am just going to put the tl;dr right up at the front. I wanted something like Optimum Tech’s Zeromouse. I tried the fake Logitech G304 upgrade, and it was awesome using a 29-gram mouse, but the electronics were jittery and terrible. I wound up discovering the 49-gram VXE Dragonfly R1 Pro for about $45. I ordered one. I love it. And I managed to design a skeletal shell that brings the mouse down to under 24 grams. I would still consider it a prototype, but it works great.

I have ordered a $22 VXE Dragonfly R1 SE. It should fit the same 3D-printed skeleton with minor tweaks. It might wind up being a couple of grams heavier, but I am excited that you will be able to print your own Zeromouse-style ultralight mouse for under $25!

UPDATE: The $22 VXE R1 SE+ is in my hands, I’ve since named my fingertip mouse frame the Li’l Magnum!, and I have already been gaming with my new $22 Li’l Magnum! for several days.

I am not even sure how to refer to this style of mouse. “Ultralight” seems appropriate, and I have heard them referred to as fingertip mice. I am partial to calling them skeletal gaming mice, but I may have made that up on my own!

- Li’l Magnum! Fingertip Mouse Mod in my Tindie store

- Li’l Magnum! 22-Gram 3D-Printed Fingertip Mouse Mod For The VXE Dragonfly R1 and R1 SE

- Ultralight Fingertip Gaming Mice – Two Weeks With My 21-Gram L’il Magnum

- Can We Make A 33-Gram Gaming Mouse For Around $12?

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at MakerWorld

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at Printables

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 / R1 SE at MakerWorld

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 / R1 SE at Printables

- VXE Dragonfly R1 Series mice at ATK.store

- VXE Dragonfly R1 Series mice at Aliexpress

The 49-gram VXE R1 Pro is amazing

I was gaming with a 100-gram Logitech G305 last month. My ultralight-mouse shenanigans clued me in that I could pair a tiny piece of aluminum foil with a 7-gram USB-C rechargeable AAA battery, and that dropped the G305 to 79.5 grams. That was an upgrade that I noticed, and I thought it felt fantastic. After spending a week with the VXE Dragonfly R1, the Logitech G305 feels like a brick.

I don’t think you need to modify your VXE Dragonfly at all. Mine was 49 grams out of the box, and it feels amazing. The battery lasts about a week on a charge for me.

My 49-gram VXE R1 Pro next to my 100-gram Logitech G305

The lightweight VXE R1 Pro feels a little cheap, but I don’t think that can be helped. The Logitech feels premium because of the heft. You can’t shave weight off every single part of the mouse without making things feel a little flimsy.

If you are still using a heavy, old-school mouse, I most definitely think you should try one of these mice. I don’t even think you need to swap it into one of my 3.7-gram shells. A 50-gram mouse is a HUGE upgrade, and you don’t even have to adjust to a weird style of mouse. The VXE R1 has a similar enough size and shape to my Logitech G305.

The R1 Pro isn’t even the lightest mouse from VXE. I just gravitated toward this model for this project because it is quite light, and I was tickled by the fact that there is an $18 model. You can buy a 36-gram VXE Mad R mouse with an 8K receiver for $46, and with a 36-gram mouse, do you even need to mod it down to 21 grams?

If you are planning to use my 3D-printed skeletal shell, then you want the VXE R1 Pro and not the Pro Max. The Pro Max has a larger battery that adds an additional 6 grams.

- VXE Dragonfly R1 Series mice at ATK.store

- VXE Dragonfly R1 Series mice at Aliexpress

I think Optimum Tech’s Zeromouse Blade is worth every penny

I am saying this as someone who hasn’t tried the real Zeromouse, though. It is 3D printed using laser-sintered nylon. Nylon is a much more premium material, and that printing process produces a more premium-feeling product than my Bambu Lab A1 Mini can make at home. On top of that, the custom lightweight electronics in the Zeromouse are known to be high quality and extremely low latency. I believe $150 is a reasonable price for a custom piece of hardware like the 19-gram Zeromouse Blade.

I wasn’t even aiming at the new Zeromouse Blade when I ordered the VXE mouse. I was just hoping to make something similar to the previous iteration of the Zeromouse.

Aside from the previous revision of the Zeromouse shell being out of stock, I was disappointed that it required an $80 Razer V2 donor mouse. That is an outdated model now, which does make it cheaper than the Razer Pro V3, but it might not be available to purchase for all that much longer. I’d hate to design a mouse shell around something that is going away!

I also didn’t like the idea of spending $150 on a mouse that is so different from what I have been using for 35 years. What if I hated it? What if I used it for an evening, threw it in a drawer, and never looked at it again? That would be a bummer!

I already tried a cheap fingertip mouse with a terrible sensor, and I enjoyed it a lot. My VXE Dragonfly mod is my second ultralight mouse. I can tell you that I would happily pay $150 for the new Zeromouse Blade, but that would be a lot less fun than designing my own and sharing it with you!

Where are we so far?

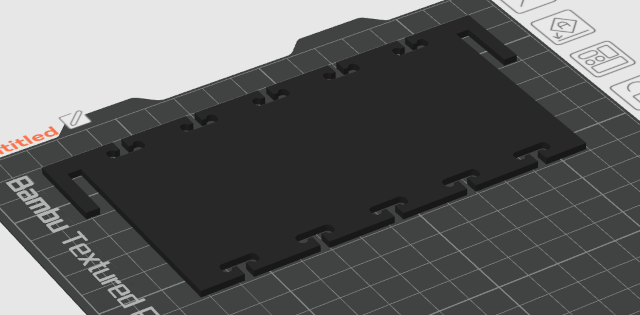

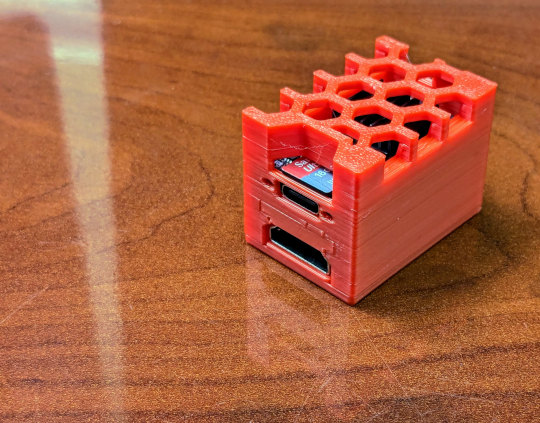

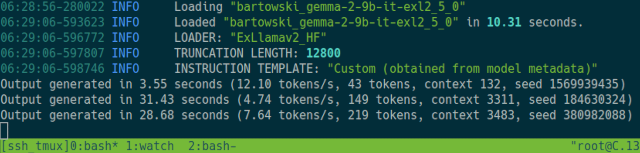

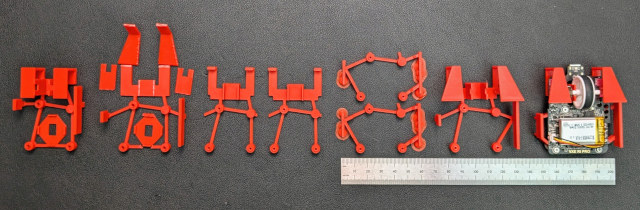

I am honestly amazed at how quickly this is progressing. I messed around in OpenSCAD for a couple of days before even taking the mouse apart, and I had all the screw holes within 0.5 mm of correct before I even had the PCB in hand to test out. My first full print was a little too small, but my second print was an actual functional mouse!

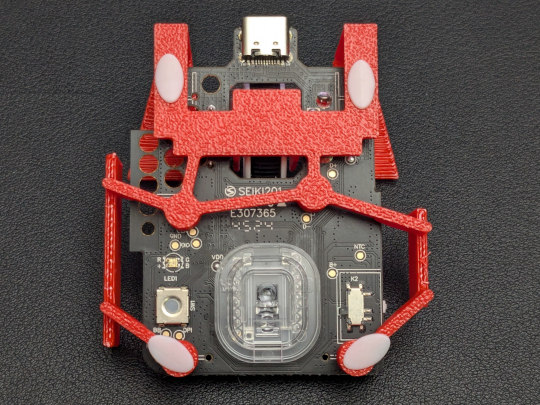

It took two more partial test prints to both perfectly line up the button positions with the paddles and to get a snug lateral fit on the PCB. Hugging the sides of the PCB significantly increased the rigidity of the mouse, and it feels quite solid now. That is the model that I uploaded to Printables and MakerWorld before starting to write this blog post.

A couple of days later, yesterday as I am writing this paragraph, I came up with a better solution for keeping the mouse wheel in place. I printed the tiny new part that slips over the microswitch, and I snipped out the old wheel support brackets from my existing print. This makes it SO MUCH easier to assemble a working mouse, it weighs slightly less, and I knew it would allow me to tighten up the wiggle in the button paddles.

I significantly tightened up the paddles this morning, put a slight angle on the finger grips, and eliminated the wheel supports. That print was only 0.1 grams heavier, way sturdier, and seemed to fit great, but the extra rigidity in the paddles meant that both of my buttons were stuck in the on position! I am waiting for a revised version of that part to print as I write this paragraph. I want that plunger length dialed in perfectly!

I feel like I am just about at the point where I can get away with calling the STL file version 1.0, but the OpenSCAD source needs a lot of tidying up.

I think I am doing pretty well at aiming for six days of testing!

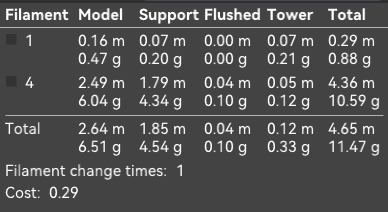

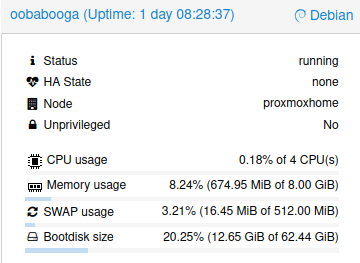

We have a 23-gram, 26,000-DPI, low-latency mouse that you can build for less than $50. I think that is fantastic, and I wouldn’t be surprised if I can get that down under 22 grams with a few slicer tweaks and a switch from PLA to ABS filament. The entire skeleton is 5.7 grams when printed in PLA, so there isn’t a ton of room for improvement here.

UPDATE: Printing my skeletal fingertip mouse with single walls and fewer top layers brought my total mouse weight down to 21.4 grams, and the whole thing feels nearly as rigid as it did with default print settings. I haven’t switched to ABS yet, but that ought to save another 0.7 grams or so. I don’t believe that switch is necessary.
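The ABS estimate is really just a density ratio: the same sliced geometry printed in a less dense filament weighs proportionally less. This is a rough sketch that assumes typical datasheet densities of about 1.24 g/cm³ for PLA and 1.04 g/cm³ for ABS. Your spools may differ, and the function name is only illustrative.

```python
# Rough filament-swap weight estimate (a sketch, not a measured result).
# Assumption: typical datasheet densities; check your own filament's spec sheet.
PLA_DENSITY_G_CM3 = 1.24
ABS_DENSITY_G_CM3 = 1.04


def estimated_savings_g(part_mass_g: float,
                        from_density: float = PLA_DENSITY_G_CM3,
                        to_density: float = ABS_DENSITY_G_CM3) -> float:
    """Grams saved by reprinting the same geometry in a less dense filament.

    Mass scales linearly with density when the printed volume is unchanged,
    so the new mass is part_mass_g * to_density / from_density.
    """
    new_mass_g = part_mass_g * to_density / from_density
    return part_mass_g - new_mass_g


if __name__ == "__main__":
    # 5.7 g is the default-settings PLA skeleton weight quoted in this post.
    print(f"PLA -> ABS saves about {estimated_savings_g(5.7):.2f} g")
```

With the 5.7-gram default-settings skeleton, that works out to a little under a gram. The single-wall skeleton has less plastic to begin with, so its savings would be proportionally smaller.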

I am confident that it won’t take much effort to tweak this design to fit the $18 VXE Dragonfly R1 or the $22 R1 SE. I imagine those mice will feel indistinguishable during gameplay, although I don’t yet know how much their circuit boards weigh. I do know for sure that we will have to print a small, dark piece of plastic to cover the giant LED in those cheaper mice!

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at MakerWorld

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at Printables

I have had to shim the buttons

Some of the skeletons I printed felt fantastic. Others had buttons that felt spongy. One skeleton, with exactly the same offsets, was so tight that the buttons stayed pressed without my even touching the mouse.

The trouble is that the little plungers sit on top of support material, and they don’t always come out exactly the same. I have tiny support pegs under the switches, and I figured out that I can cut a few tiny pieces of electrical tape to use as shims between the PCB and that peg to dial in the feel of the buttons.

The mouse I am using at the moment has one layer of tape under the right button and three layers of tape under the left. Each layer is about 0.15 mm, but the rubbery tape is a bit squishy, so it might take up less space in practice.

I have been printing my prototypes on my Bambu A1 Mini at my usual layer height of 0.16 mm. I can definitely switch to 0.1 mm layers for more precision, and I can also switch to a PETG support interface layer with zero gap to set the height of the plunger with more accuracy. That will help for my own mouse, but I have no idea what the tolerances are on your PCB!
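Here is a small sketch of the arithmetic behind the shims and the layer-height switch. It uses the roughly 0.15 mm per layer of tape quoted above and treats that as an upper bound, since the tape compresses. It also simplifies the slicer’s behavior by assuming a printed plunger height can only change in whole layers. The function names are hypothetical.

```python
TAPE_LAYER_MM = 0.15  # approximate thickness of one layer of electrical tape


def nominal_shim_mm(layers: int, layer_mm: float = TAPE_LAYER_MM) -> float:
    """Upper bound on shim height; squishy tape likely takes up a bit less."""
    return layers * layer_mm


def nearest_printable_mm(target_mm: float, layer_height_mm: float) -> float:
    """Closest height reachable if a feature can only grow in whole print layers.

    Simplifying assumption: real slicers and first-layer squish add more error.
    """
    return round(target_mm / layer_height_mm) * layer_height_mm


if __name__ == "__main__":
    # Shims on the mouse described above: one layer right, three layers left.
    for side, layers in (("right", 1), ("left", 3)):
        print(f"{side} button: up to {nominal_shim_mm(layers):.2f} mm of shim")

    # Coarser layers mean coarser steps when tuning plunger height.
    for layer_height in (0.16, 0.10):
        printed = nearest_printable_mm(0.3, layer_height)
        print(f"{layer_height:.2f} mm layers: a 0.30 mm target prints as {printed:.2f} mm")
```

At 0.16 mm layers, each step in plunger height is about the thickness of one layer of tape, while 0.1 mm layers allow finer adjustments.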

NOTE: I may have mostly figured out the solution to this problem. We’ll see if it holds true. I am excited that the easy fix also dropped the total weight of the mouse to 21.4 grams! The explanation of the problem and why the solution works would be almost as long as this blog post.

- VXE Dragonfly R1 Series mice at ATK.store

- VXE Dragonfly R1 Series mice at Aliexpress

My hopes for the OpenSCAD source code

I would love it if we could just punch some new mounting hole locations and button positions into the OpenSCAD code and generate a skeletal mouse for any similar gaming mouse PCB, but I wasn’t nearly careful enough in the layout of the code to allow for that.

I ordered a huge pack of 6-mm PTFE skates. I don’t have many of these ancient football-shaped skates on hand. Thankfully, the textured bottom surface makes them easy to remove, or I would have run out of them on the first day!

I will be stoked if I can clean things up enough that you can use MakerWorld’s parametric model maker to enter your own button height and length and your thumb and finger pad positions and lengths, and to tweak some settings that make the buttons stiffer or looser. I’m not far from that being possible, so I plan to set it up once I put the finishing touches on my own mice.

I would like to add a curve to the button paddles and finger grips, and that should be configurable in the parametric configurator as well.

How do I like gaming with a 23-gram fingertip mouse?

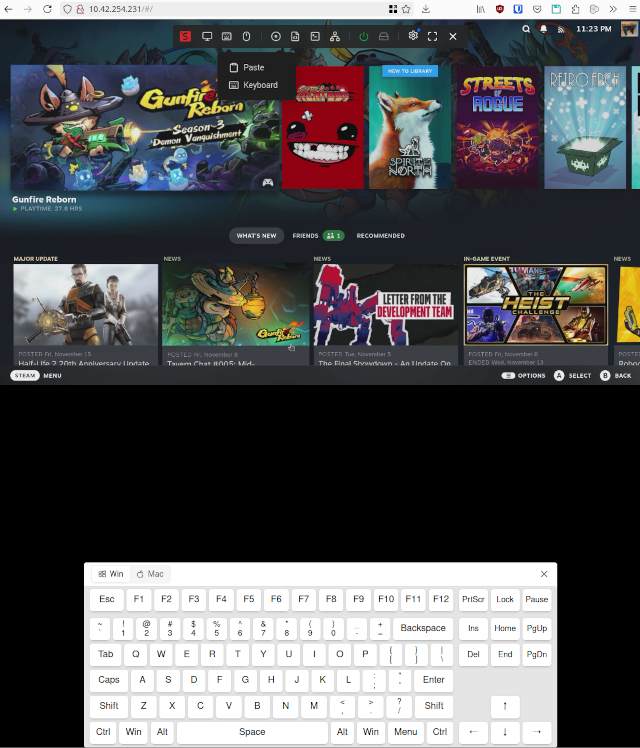

It is weird, and I am most definitely not quite acclimated to it yet. If you think switching to a featherweight mouse will instantly improve your FPS-gaming abilities, you will be extremely disappointed. You are almost definitely going to get worse before you get better!

My old Corsair mouse was nearly 100 grams. My recently purchased Logitech G305 is around 100 grams with a single AA Eneloop battery installed, but I have been gaming with it using a 7-gram USB-C rechargeable lithium-ion battery and a small piece of aluminum foil. That has brought the weight down to 79 grams.

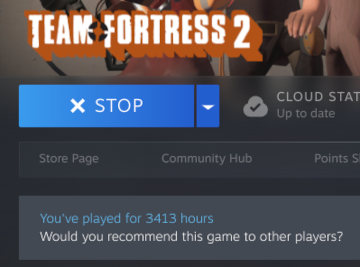

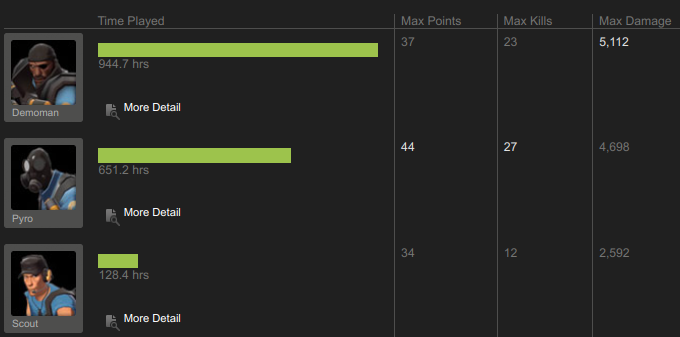

Playing Team Fortress 2 with the stock 49-gram VXE R1 Pro already made the 79-gram Logitech mouse feel like an absolute brick. Switching from the 23-gram skeletal mouse back to the brick feels even more dramatic.

I have both mice set to 3,200 DPI, and I can execute reasonably precise 90- and 180-degree turns with either mouse, but the skeletal mouse FEELS faster. It is especially noticeable when an enemy gets close, and you have to quickly adjust your aim to lock on and track them. I keep overshooting with the lightweight mouse. I had the same problem when I switched from the G305 to the R1 Pro, but it is more extreme with the skeletal mouse.

It takes so much less effort to get the mouse to start moving, so I am tending to push the mouse too hard for small and medium moves.

I am not good at playing scout in Team Fortress 2, but I assumed this would be the class where I would most notice the missing weight of the mouse, and I am definitely doing worse playing scout at 23 grams than I was at 49 grams. That said, I am pretty good at landing pills with the demoman, and I feel like I am doing a reasonable job there.

I suspect that the answer is going to be lowering my sensitivity. It will be easy to adjust to needing to move my mouse farther to turn around, and a lower sensitivity will help me be more precise on the smaller movements. I just figured that I should give myself more time to acclimate before making changes.
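For Source-engine games like Team Fortress 2, a handy way to compare settings is centimeters of mouse travel per full 360-degree turn. This sketch assumes the engine’s default `m_yaw` of 0.022 degrees per mouse count. The in-game `sensitivity` values below are hypothetical examples, not my actual settings.

```python
# cm of mouse travel per full 360-degree turn in a Source-engine game.
# Assumption: default m_yaw of 0.022 degrees per count.
M_YAW_DEG_PER_COUNT = 0.022
CM_PER_INCH = 2.54


def cm_per_360(dpi: float, sensitivity: float,
               m_yaw: float = M_YAW_DEG_PER_COUNT) -> float:
    """Physical distance (cm) the mouse must travel to turn 360 degrees."""
    degrees_per_inch = dpi * sensitivity * m_yaw  # counts/inch * deg/count
    inches_per_360 = 360.0 / degrees_per_inch
    return inches_per_360 * CM_PER_INCH


if __name__ == "__main__":
    dpi = 3200  # the DPI both mice are set to in this post
    for sens in (1.0, 0.8, 0.6):  # hypothetical in-game sensitivity values
        print(f"sensitivity {sens}: {cm_per_360(dpi, sens):.1f} cm per 360")
```

Dropping sensitivity by 20% stretches every turn by 25% (1 / 0.8 = 1.25). That is the trade I’m describing: bigger arm movements for turning around in exchange for finer control on small corrections.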

Do you want to know what the weirdest thing is about these light mice? You can’t spin the scroll wheel unless you are gripping the mouse. When you attempt to spin the wheel on a loose mouse, you wind up just pushing the mouse around!

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at MakerWorld

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at Printables

Conclusion

It is exceedingly difficult to not print just one more mouse. I keep saying that I am going to stop, but then a couple of hours go by, and I print a slightly different mouse. I am really going to stop now. At least for a while. I feel like I need to put a week or two into actually using a good skeletal fingertip mouse. That way I will have a better idea of what improvements I should actually be making.

I am aware that I have not yet met the expectations of this blog post’s title. So far, I have only modded a $45 mouse down to 21 grams. The $22 mouse is on its way, though, and I am confident it will be easy to tweak the design so its hardware fits my shell!

What do you think? Are you excited about the idea of a $25 fingertip mouse? Or do you think something like the 36-gram VXE Mad R is light enough? Do you prefer using a heavier mouse? Are you planning on trying out my model, or are you already using a different fingertip mouse mod? Tell me about it in the comments, or join our friendly Discord community where we talk about 3D printing, video games, and many other geeky topics!

- Li’l Magnum! Fingertip Mouse Mod in my Tindie store

- The L’iL Magnum! Fingertip Mouse Is Now Customizable on MakerWorld!

- Li’l Magnum! 22-Gram 3D-Printed Fingertip Mouse Mod For The VXE Dragonfly R1 and R1 SE

- Ultralight Fingertip Gaming Mice – Two Weeks With My 21-Gram L’il Magnum

- Can We Make A 33-Gram Gaming Mouse For Around $12?

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at MakerWorld

- Li’l Magnum! Fingertip Frame for VXE Dragonfly R1 Pro at Printables

- VXE Dragonfly R1 Series mice at ATK.store

- VXE Dragonfly R1 Series mice at Aliexpress